A Comprehensive Guide to GATK Best Practices for Somatic Variant Calling in Cancer Genomics

Abstract

This article provides a complete roadmap for researchers and bioinformaticians implementing GATK for somatic short variant discovery (SNVs and Indels). Covering foundational concepts, step-by-step methodologies, advanced troubleshooting, and rigorous validation, it synthesizes official GATK protocols with performance insights from independent studies. Readers will gain practical knowledge to optimize critical parameters like sequencing depth, handle low-frequency mutations, and implement filtering strategies to achieve high-confidence variant calls for precision oncology applications.

Understanding Somatic Variants and the GATK Framework for Cancer Analysis

Somatic mutations are genetic alterations acquired in individual cells throughout a person's lifetime, distinct from the germline mutations inherited from parents and present in every cell [1]. In the context of cancer, these changes occur in specific cells and can be passed to their progeny, leading to clonal expansions. When somatic mutations occur in key genes controlling cell growth and survival, they can drive the uncontrolled cell proliferation that characterizes cancer [1]. The comprehensive identification and analysis of these mutations through advanced genomic technologies has become fundamental to both understanding cancer biology and guiding clinical decision-making in oncology.

Technological advances, particularly next-generation sequencing (NGS), have revolutionized our ability to detect somatic mutations across the genome. These tools have revealed the astonishing genetic complexity of cancers, showing that tumors can harbor thousands of somatic mutations, only a subset of which directly contribute to cancer development [1]. The clinical impact of this knowledge is substantial, as identifying specific somatic mutations can inform prognosis, guide targeted therapy selection, and enable monitoring of treatment response and disease evolution.

Biological Significance of Somatic Mutations

Mutational Processes and Clonal Expansion

Somatic mutations accumulate in normal tissues throughout life due to both endogenous processes and exogenous mutagen exposures. Recent studies using ultra-sensitive sequencing techniques have revealed that normal tissues become colonized by microscopic clones carrying somatic "driver" mutations as we age [2]. Some of these clones represent a first step towards cancer, while others may contribute to ageing and other diseases without progressing to malignancy.

Research on oral epithelium demonstrates that mutations accumulate linearly with age, with observed rates of approximately 18.0 single-nucleotide variants (SNVs) per cell per year and 2.0 indels per cell per year [2]. When these mutational processes affect key regulatory genes, they can provide a selective advantage to certain cells, leading to clonal expansions that colonize substantial portions of tissue.

Genes and Pathways Under Selection

Cancer develops through the accumulation of somatic mutations that activate oncogenes and inactivate tumor suppressor genes. Large-scale genomic studies have identified numerous genes under positive selection in various tissues. In blood, sequencing studies have identified 14 genes acting as clonal haematopoiesis drivers, including DNMT3A and TET2 [2]. The landscape in oral epithelium is even richer, with 46 genes identified under positive selection and over 62,000 driver mutations observed across a study cohort [2].

Table 1: Key Somatic Mutations in Acute Myeloid Leukemia and Their Prognostic Impact [3]

| Gene | Mutation Prevalence (%) | Prognostic Impact | Affected Biological Process |
|---|---|---|---|
| NPM1 | 27 | Intermediate | Nucleocytoplasmic transport |
| DNMT3A | 26 | Unfavorable | DNA methylation |
| FLT3-ITD | 24 | Unfavorable | Signal transduction |
| TET2 | Not specified | Unfavorable | DNA demethylation |
| TP53 | Not specified | Unfavorable | Genome stability |
| ASXL1 | Not specified | Unfavorable | Chromatin modification |
| RUNX1 | Not specified | Unfavorable | Transcription regulation |
| CEBPA (biallelic) | Not specified | Favorable | Transcription regulation |

The specific genes under selection vary by tissue type, reflecting different regulatory architectures and environmental exposures. For example, while DNMT3A and TET2 mutations drive clonal expansion in both blood and oral epithelium, many genes are specific to particular tissues [2]. This tissue-specificity underscores how the same fundamental mutational processes can manifest differently depending on cellular context.

Challenging the Genetic Paradigm

While the role of somatic mutations in cancer is well-established, some researchers argue that the prevailing "genetic paradigm" requires refinement. The somatic mutation theory (SMT) posits that cancer originates from a single cell that acquires mutations causing abnormal behavior [4]. However, genome sequencing has revealed paradoxes, including that canonical oncogenic mutations are found in tissues that remain free of cancer [4]. This suggests that mutation acquisition alone may be insufficient for cancer development and that non-genetic factors, including cellular plasticity and tissue microenvironment, play crucial roles in carcinogenesis.

Clinical Impact of Somatic Mutations

Prognostic Stratification

The identification of specific somatic mutations has transformed cancer classification and prognostication, particularly in hematological malignancies. In acute myeloid leukemia (AML), comprehensive mutational profiling has revealed distinct prognostic groups that guide clinical management [3]. A systematic review and meta-analysis of 20,048 de novo AML patients found that mutations in CSF3R, TET2, TP53, ASXL1, DNMT3A, and RUNX1 were consistently associated with poorer overall survival and relapse-free survival [3]. Conversely, CEBPA biallelic mutations were linked to favorable outcomes, while mutations in GATA2, FLT3-TKD, KRAS, NRAS, IDH1, and IDH2 showed no significant prognostic impact in multivariate analyses [3].

Table 2: Somatic Mutation Rates in Normal Tissues Revealed by Ultra-Sensitive Sequencing [2]

| Tissue Type | Mutation Accumulation Rate | Number of Genes Under Selection | Estimated Driver Mutations Across Cohort |
|---|---|---|---|
| Oral epithelium | ~18.0 SNVs/cell/year, ~2.0 indels/cell/year | 46 | >62,000 |
| Blood | Consistent with previous WGS of hematopoietic stem cells | 14 | 4,406 non-synonymous mutations in driver genes |
| Genome-wide (oral epithelium) | ~23 SNVs/cell/year | Not specified | Not specified |

These findings have been incorporated into international classification systems, including the World Health Organization (WHO) and International Consensus Classification (ICC) guidelines, as well as the European LeukemiaNet (ELN) 2022 risk stratification framework [3]. The integration of mutational data with conventional cytogenetic analysis has significantly improved the precision of prognostic prediction in AML.

Therapeutic Targeting

Perhaps the most transformative clinical application of somatic mutation analysis has been the development of targeted therapies directed against specific mutant proteins. The KRAS oncogene provides a compelling example of this paradigm. Despite being discovered in 1982 and recognized as driving approximately 30% of all human cancers, including deadly forms of pancreatic, lung, and colorectal cancers, KRAS was long considered "undruggable" due to its complex structure and function [1].

Through fundamental research advances, including detailed structural studies that revealed a hidden binding pocket on a mutant form of KRAS (G12C), researchers finally developed effective inhibitors. This led to the 2021 FDA approval of sotorasib (Lumakras), the first KRAS inhibitor for adults with KRAS G12C-mutated non-small cell lung cancer, followed by adagrasib (Krazati) in 2022 [1]. The success of KRAS targeting has inspired similar approaches against other challenging targets, including MYC and TP53, with several compounds now entering clinical trials [1].

Experimental Protocols for Somatic Variant Detection

GATK Somatic Short Variant Discovery Workflow

The Genome Analysis Toolkit (GATK) provides an industry-standard framework for identifying somatic short variants (SNVs and indels) in tumor samples. The recommended best practices workflow involves several key steps [5]:

Call candidate variants: The process begins with Mutect2, which calls SNVs and indels via local de novo assembly of haplotypes in active regions. Like HaplotypeCaller, Mutect2 completely reassembles reads in regions showing signs of variation, then aligns each read to each haplotype to generate likelihoods. Finally, it applies a Bayesian somatic likelihoods model to obtain log odds for alleles being true somatic variants versus sequencing errors [5].

Calculate contamination: Using GetPileupSummaries and CalculateContamination tools, this step estimates the fraction of reads resulting from cross-sample contamination for each tumor sample. CalculateContamination is designed to work effectively without a matched normal, even in samples with significant copy number variation [5].

Learn orientation bias artifacts: Using LearnReadOrientationModel with optional F1R2 counts output from Mutect2, this step learns parameters for orientation bias artifacts. This is particularly important for formalin-fixed, paraffin-embedded (FFPE) tumor samples [5].

Filter variants: FilterMutectCalls accounts for correlated errors that Mutect2's independent error model cannot detect. It implements several hard filters for alignment artifacts and probabilistic models for strand bias, orientation bias, polymerase slippage artifacts, germline variants, and contamination. It also incorporates a Bayesian model for the overall SNV and indel mutation rate and allele fraction spectrum to refine Mutect2's initial log odds [5].

Annotate variants: Funcotator adds functional information to discovered variants, labeling each with one of twenty-three distinct variant classifications and providing gene information (affected gene, predicted amino acid change, etc.). It integrates data from multiple sources, including GENCODE, dbSNP, gnomAD, and COSMIC, producing either an annotated VCF or MAF file [5].

Advanced Detection Methods for Low-Frequency Variants

While GATK's Mutect2 workflow is optimized for standard tumor sequencing, detecting ultra-low frequency variants in normal tissues or liquid biopsies requires specialized approaches. Methods like nanorate sequencing (NanoSeq) achieve error rates lower than five errors per billion base pairs through duplex sequencing that tracks both strands of each original DNA molecule [2]. This enables detection of mutations present in single molecules, providing accurate mutation rates, signatures, and driver frequencies in any tissue.

Targeted NanoSeq applications have demonstrated remarkable sensitivity in population-scale studies. In analysis of 1,042 buccal swabs, 95% of mutations were detected in just one molecule, with 99% having variant allele fractions under 1% and 90% under 0.1% [2]. This represents a 100-200-fold improvement in detection sensitivity compared to standard sequencing approaches.

Table 3: Key Research Reagents and Computational Tools for Somatic Mutation Analysis

| Tool/Reagent | Function | Application Context |
|---|---|---|
| GATK Mutect2 | Primary somatic variant caller | Identifies SNVs and indels in tumor samples with/without matched normal [5] |
| Funcotator | Functional annotation | Annotates variants with gene consequences, protein effects, and database annotations [5] |
| Panel of Normals (PON) | Artifact filtering | Identifies and removes technical artifacts recurring across normal samples [5] |
| NanoSeq | Ultra-sensitive duplex sequencing | Detects low-frequency variants in normal tissues and early carcinogenesis [2] |
| dbSNP | Germline variant database | Filters common germline polymorphisms from somatic calls [5] |
| COSMIC | Somatic mutation database | Annotates variants with known cancer associations [5] |

Somatic mutations represent the fundamental molecular alterations that drive cancer development and progression. The biological significance of these mutations extends beyond their immediate effects on cell growth to include influences on tissue microenvironment, immune evasion, and metabolic reprogramming. Clinically, the identification of specific somatic mutations has transformed oncology practice, enabling precise prognostic stratification and targeted therapeutic interventions.

Advanced genomic technologies continue to refine our understanding of somatic mutations in cancer. Ultra-sensitive detection methods like NanoSeq are revealing the extensive clonal architecture of normal tissues, providing new insights into the earliest stages of carcinogenesis [2]. Meanwhile, standardized analysis pipelines like GATK's somatic variant calling workflow ensure reproducible identification of clinically actionable mutations across diverse cancer types [5]. As these technologies evolve, they promise to further illuminate the complex relationship between somatic mutations and cancer biology, enabling continued advances in precision oncology approaches.

The identification of somatic mutations is a cornerstone of cancer genomics research, enabling the discovery of driver genes, understanding tumor evolution, and informing targeted therapy decisions. The Genome Analysis Toolkit (GATK) Somatic Short Variant Discovery workflow provides a standardized, best-practice framework for identifying single nucleotide variants (SNVs) and small insertions/deletions (indels) in tumor samples. This workflow is specifically designed to address the unique challenges of somatic mutation calling, including tumor heterogeneity, sample contamination, and the need to detect low-allele-fraction variants present in subclonal populations [6] [7].

Unlike germline variant calling, which identifies variants present in the germline of an individual, somatic variant calling specifically detects mutations that have arisen in tumor tissue. These somatic variants are typically present at variable allele fractions due to factors such as tumor purity, ploidy variations, and clonal heterogeneity. The GATK somatic workflow employs Mutect2 as its core calling engine, which uses a Bayesian statistical model to distinguish true somatic variants from sequencing errors, germline variants, and other artifacts [5] [6].

Workflow Architecture and Core Components

The GATK Somatic Short Variant Discovery workflow follows a structured, multi-step process that transforms input BAM files into a filtered, annotated set of somatic variants. The workflow can operate with or without a matched normal sample, though the use of a matched normal significantly improves accuracy by enabling the identification of germline variants and sample-specific artifacts [5] [8]. The key stages include candidate variant calling, contamination assessment, orientation bias modeling, variant filtering, and functional annotation, each addressing specific analytical challenges in somatic variant discovery.

Table: Key Stages of the GATK Somatic Short Variant Discovery Workflow

| Workflow Stage | Primary Tool(s) | Purpose | Critical Outputs |
|---|---|---|---|
| Candidate Variant Calling | Mutect2 | Identify potential somatic SNVs and indels | Raw VCF with candidate variants |
| Contamination Estimation | GetPileupSummaries, CalculateContamination | Estimate cross-sample contamination | Contamination metrics table |
| Orientation Bias Modeling | LearnReadOrientationModel | Model sequencing artifacts from FFPE samples | Read orientation model |
| Variant Filtering | FilterMutectCalls | Remove false positives using probabilistic models | Filtered VCF file |
| Functional Annotation | Funcotator | Add biological context to variants | Annotated VCF or MAF file |

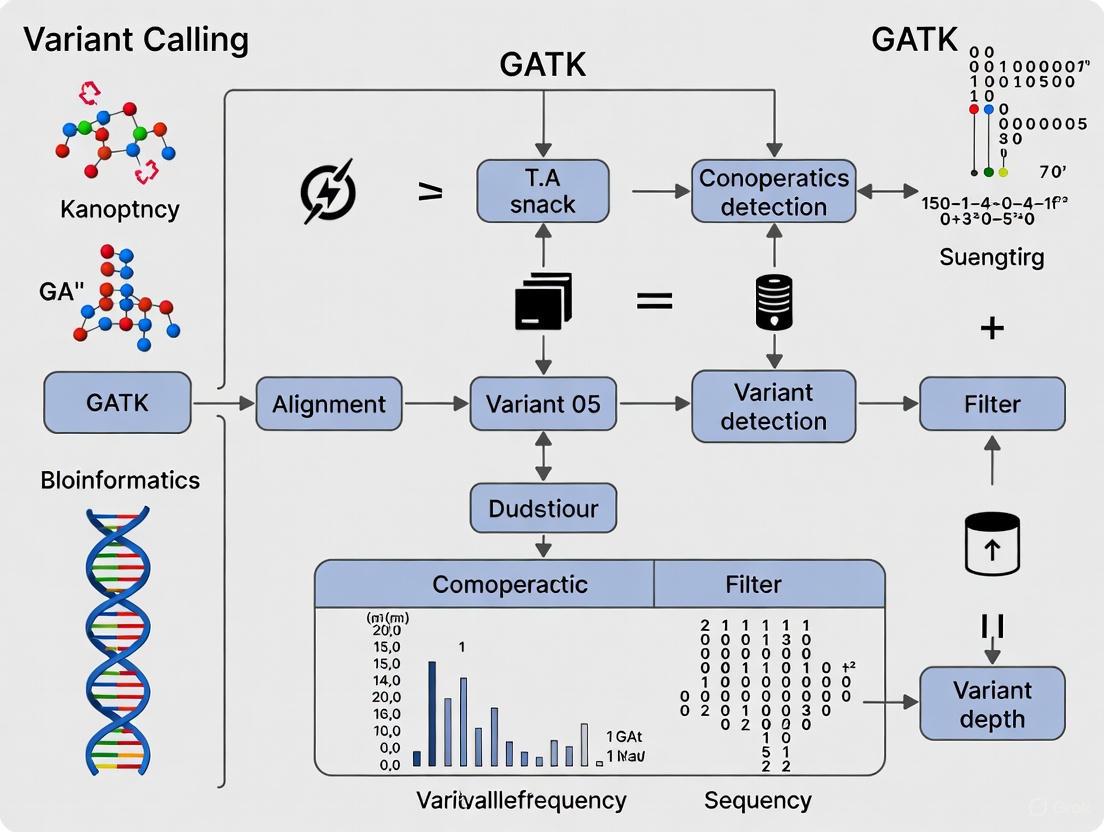

Conceptual Workflow Relationships

The following diagram illustrates the logical flow and data relationships between the major components of the somatic variant discovery workflow:

Detailed Experimental Protocols

Data Pre-processing Requirements

Prior to somatic variant discovery, sequencing data must undergo comprehensive pre-processing to ensure optimal variant calling performance. The GATK Best Practices require that input BAM files are processed through a standardized pre-processing pipeline consisting of alignment, duplicate marking, and base quality score recalibration [9]. This pre-processing is critical for reducing technical artifacts and ensuring the accuracy of downstream variant calls.

Read Alignment: Sequence reads should be aligned to a reference genome using BWA-MEM, resulting in coordinate-sorted BAM files. The alignment process should include proper read group information, which is essential for downstream analysis [9] [7].

Duplicate Marking: PCR duplicates must be identified and marked using tools such as MarkDuplicatesSpark or Picard MarkDuplicates. These duplicates represent non-independent observations and can bias variant calling results if not properly handled [9].

Base Quality Score Recalibration (BQSR): Systematic errors in base quality scores must be corrected using an empirical error model. This process involves collecting covariate statistics from all base calls, building a recalibration model, and applying quality score adjustments to the dataset. BQSR improves the accuracy of variant calling by ensuring that base quality scores properly reflect true error rates [9].
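The three pre-processing steps above can be sketched as a sequence of command lines. This is a minimal illustration that only assembles the arguments; it does not run anything. The file-naming scheme, the `PL:ILLUMINA` platform tag, the explicit `SortSam` step, and the helper name `preprocessing_commands` are assumptions made for the example, and the known-sites file must be supplied by the user.

```python
import shlex


def preprocessing_commands(sample, ref, fastq1, fastq2, known_sites):
    """Build the pre-processing steps as argument lists; nothing is executed here."""
    # bwa expects a literal backslash-t between read-group fields.
    read_group = f"@RG\\tID:{sample}\\tSM:{sample}\\tPL:ILLUMINA"
    sam = f"{sample}.sam"
    sorted_bam = f"{sample}.sorted.bam"
    dedup_bam = f"{sample}.dedup.bam"
    recal_table = f"{sample}.recal.table"
    final_bam = f"{sample}.analysis_ready.bam"
    return [
        # 1. Alignment with BWA-MEM, attaching read-group information.
        ["bwa", "mem", "-R", read_group, "-o", sam, ref, fastq1, fastq2],
        # 2. Coordinate sorting of the alignments.
        ["gatk", "SortSam", "-I", sam, "-O", sorted_bam, "--SORT_ORDER", "coordinate"],
        # 3. Duplicate marking; the metrics file records duplication rates.
        ["gatk", "MarkDuplicates", "-I", sorted_bam, "-O", dedup_bam,
         "-M", f"{sample}.dup_metrics.txt"],
        # 4. BQSR, part 1: build the recalibration model from known variant sites.
        ["gatk", "BaseRecalibrator", "-R", ref, "-I", dedup_bam,
         "--known-sites", known_sites, "-O", recal_table],
        # 5. BQSR, part 2: apply the adjusted base qualities.
        ["gatk", "ApplyBQSR", "-R", ref, "-I", dedup_bam,
         "--bqsr-recal-file", recal_table, "-O", final_bam],
    ]


if __name__ == "__main__":
    # Placeholder inputs; print each command instead of running it.
    for cmd in preprocessing_commands("TUMOR1", "ref.fasta", "r1.fq.gz", "r2.fq.gz",
                                      "known_sites.vcf.gz"):
        print(shlex.join(cmd))
```

Each list can be passed to `subprocess.run(cmd, check=True)` once the paths point to real files.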

Somatic Variant Calling with Mutect2

The core somatic variant calling step utilizes Mutect2, which performs local de novo assembly of haplotypes in genomic regions showing evidence of variation. Unlike simple subtraction approaches, Mutect2 uses a sophisticated Bayesian model specifically designed for somatic variant detection [5] [6].

Protocol: Mutect2 Variant Calling

Input Preparation: Gather pre-processed BAM files for tumor and normal samples. Ensure reference genome and required resource files are available.

Command Execution:

- Parameter Optimization: For samples with specific characteristics (e.g., FFPE-derived DNA), additional parameters may be required. The `--af-of-alleles-not-in-resource` parameter defines the germline variant prior, while `--normal-lod` sets the threshold for filtering variants present in the normal sample [10] [6].
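The command-execution step of this protocol can be sketched as follows. The original command is not reproduced here, so this is an illustrative reconstruction using standard Mutect2 arguments; all file names are placeholders, and the helper `mutect2_command` is hypothetical. The normal sample name passed to `-normal` must match the SM tag in the normal BAM's read groups.

```python
def mutect2_command(ref, tumor_bam, out_vcf, normal_bam=None, normal_sample=None,
                    germline_resource=None, panel_of_normals=None, f1r2_tar=None):
    """Assemble a Mutect2 command line as an argument list (not executed)."""
    cmd = ["gatk", "Mutect2", "-R", ref, "-I", tumor_bam]
    if normal_bam is not None:
        if normal_sample is None:
            raise ValueError("normal_sample (the SM tag of the normal BAM) is required")
        # Matched-normal mode: add the normal BAM and name its sample.
        cmd += ["-I", normal_bam, "-normal", normal_sample]
    if germline_resource is not None:
        # Population allele frequencies, e.g. a gnomAD-derived VCF.
        cmd += ["--germline-resource", germline_resource]
    if panel_of_normals is not None:
        cmd += ["--panel-of-normals", panel_of_normals]
    if f1r2_tar is not None:
        # Raw F1R2 counts, consumed later by LearnReadOrientationModel.
        cmd += ["--f1r2-tar-gz", f1r2_tar]
    cmd += ["-O", out_vcf]
    return cmd


if __name__ == "__main__":
    print(" ".join(mutect2_command(
        "ref.fasta", "tumor.bam", "unfiltered.vcf.gz",
        normal_bam="normal.bam", normal_sample="NORMAL_SM",
        germline_resource="af-only-gnomad.vcf.gz",
        panel_of_normals="pon.vcf.gz", f1r2_tar="f1r2.tar.gz")))
```

Omitting `normal_bam` yields a tumor-only invocation; in that mode the germline resource and panel of normals carry more of the filtering burden.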

Contamination Assessment

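Tumor samples can contain reads from another individual (cross-sample contamination), which introduces foreign germline alleles that may be mistaken for low-allele-fraction somatic variants. This is distinct from admixture with the patient's own normal cells, which lowers tumor purity and therefore the effective variant allele fraction. The GATK workflow includes specialized tools for estimating cross-sample contamination even in samples with significant copy number variation [5].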

Protocol: Contamination Estimation

- GetPileupSummaries: First, run GetPileupSummaries on the tumor BAM file to collect summary statistics across genomic positions.

- CalculateContamination: Use the output to estimate contamination fractions:
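The two steps above can be sketched as a pair of command builders. This is an illustration using standard arguments of both tools; file names are placeholders, the helper names are hypothetical, and the common-variants VCF (a set of common biallelic germline sites) is assumed to be supplied by the user.

```python
def get_pileup_summaries_command(bam, common_variants_vcf, out_table):
    """Tabulate read counts at common germline sites (argument list only)."""
    return ["gatk", "GetPileupSummaries",
            "-I", bam,
            "-V", common_variants_vcf,   # sites to summarize
            "-L", common_variants_vcf,   # restrict traversal to those same sites
            "-O", out_table]


def calculate_contamination_command(tumor_table, out_table,
                                    normal_table=None, segments_table=None):
    """Estimate the cross-sample contamination fraction (argument list only)."""
    cmd = ["gatk", "CalculateContamination", "-I", tumor_table]
    if normal_table is not None:
        # Optional pileup table from the matched normal.
        cmd += ["--matched-normal", normal_table]
    if segments_table is not None:
        # Optional output: allelic segmentation reused by FilterMutectCalls.
        cmd += ["--tumor-segmentation", segments_table]
    cmd += ["-O", out_table]
    return cmd


if __name__ == "__main__":
    steps = [
        get_pileup_summaries_command("tumor.bam", "common_sites.vcf.gz", "tumor.pileups.table"),
        get_pileup_summaries_command("normal.bam", "common_sites.vcf.gz", "normal.pileups.table"),
        calculate_contamination_command("tumor.pileups.table", "contamination.table",
                                        normal_table="normal.pileups.table",
                                        segments_table="segments.table"),
    ]
    for cmd in steps:
        print(" ".join(cmd))
```

The matched-normal table is optional, which is consistent with the tool being usable without a matched normal.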

Artifact Detection and Filtering

Somatic variant calls are prone to specific artifacts arising from sample preparation, sequencing chemistry, and alignment errors. The GATK workflow includes comprehensive filtering steps to address these artifacts [5] [6].

Protocol: Variant Filtering with FilterMutectCalls

- Orientation Bias Modeling: For FFPE samples, learn specific artifact models using LearnReadOrientationModel:

- Apply Comprehensive Filtering:
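The two filtering steps above can be sketched as follows, assuming Mutect2 was run with `--f1r2-tar-gz` so that F1R2 counts are available. Only standard arguments are used; file names are placeholders and the helper names are hypothetical.

```python
def learn_read_orientation_command(f1r2_tar, out_model):
    """Fit the orientation-bias model from Mutect2's F1R2 counts."""
    return ["gatk", "LearnReadOrientationModel", "-I", f1r2_tar, "-O", out_model]


def filter_mutect_calls_command(ref, unfiltered_vcf, out_vcf, contamination_table=None,
                                segments_table=None, orientation_model=None):
    """Assemble a FilterMutectCalls command line (argument list only)."""
    cmd = ["gatk", "FilterMutectCalls", "-R", ref, "-V", unfiltered_vcf]
    if contamination_table is not None:
        cmd += ["--contamination-table", contamination_table]
    if segments_table is not None:
        cmd += ["--tumor-segmentation", segments_table]
    if orientation_model is not None:
        # Priors learned by LearnReadOrientationModel.
        cmd += ["--ob-priors", orientation_model]
    cmd += ["-O", out_vcf]
    return cmd


if __name__ == "__main__":
    print(" ".join(learn_read_orientation_command("f1r2.tar.gz",
                                                  "read-orientation-model.tar.gz")))
    print(" ".join(filter_mutect_calls_command(
        "ref.fasta", "unfiltered.vcf.gz", "filtered.vcf.gz",
        contamination_table="contamination.table",
        segments_table="segments.table",
        orientation_model="read-orientation-model.tar.gz")))
```

FilterMutectCalls marks failing records in the FILTER column rather than deleting them, so a downstream step typically keeps only records labeled PASS.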

FilterMutectCalls employs multiple probabilistic models to address alignment artifacts, strand bias, orientation bias, polymerase slippage around indels, germline variants, and contamination. The tool automatically determines filtering thresholds to optimize the F-score, balancing sensitivity and precision [5].

Functional Annotation

The final step in the workflow involves annotating variants with biological information to support interpretation and prioritization. Funcotator provides comprehensive annotation, including variant classifications, gene effects, and protein consequences [5].

Protocol: Variant Annotation with Funcotator

Configuration: Prepare datasources for annotation, which can include local files or cloud-based resources.

Annotation Execution:
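The annotation step can be sketched as below. The arguments are standard Funcotator options; the data-sources directory, file names, and the helper `funcotator_command` are placeholders for illustration, and the reference version must match the genome build used for alignment.

```python
def funcotator_command(ref, ref_version, variants_vcf, data_sources_dir,
                       out_path, out_format="MAF"):
    """Assemble a Funcotator command line (argument list only)."""
    if ref_version not in ("hg19", "hg38"):
        raise ValueError("ref_version must be 'hg19' or 'hg38'")
    if out_format not in ("VCF", "MAF"):
        raise ValueError("out_format must be 'VCF' or 'MAF'")
    return ["gatk", "Funcotator",
            "--variant", variants_vcf,
            "--reference", ref,
            "--ref-version", ref_version,
            "--data-sources-path", data_sources_dir,
            "--output", out_path,
            "--output-file-format", out_format]


if __name__ == "__main__":
    print(" ".join(funcotator_command(
        "ref.fasta", "hg38", "filtered.vcf.gz",
        "funcotator_dataSources/", "annotated.maf")))
```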

Funcotator supports multiple output formats, including VCF and Mutation Annotation Format (MAF), the latter being widely used in cancer genomics consortia such as The Cancer Genome Atlas (TCGA) [5].

Table: Essential Research Reagents and Resources for GATK Somatic Variant Discovery

| Resource Type | Specific Examples | Purpose and Function |
|---|---|---|
| Reference Genome | GRCh38, b37 | Provides coordinate system for alignment and variant calling |
| Germline Resource | gnomAD, 1000 Genomes | Helps distinguish somatic variants from common germline polymorphisms |
| Panel of Normals (PON) | Broad Institute PON, Custom PON | Identifies and filters systematic technical artifacts |
| Known Variants Databases | dbSNP, COSMIC | Annotates variants with population frequency and cancer relevance |
| Functional Annotation Sources | GENCODE, ClinVar | Provides gene models and clinical significance information |

Successful implementation of the GATK somatic workflow requires several key reference resources. The panel of normals (PON) is particularly important for identifying and filtering systematic artifacts. While pre-built PONs are available for common reference genomes, creating a study-specific PON from multiple normal samples is recommended when processing large cohorts [5] [10]. The germline resource (e.g., gnomAD) provides population allele frequencies that enable Mutect2 to distinguish rare germline variants from true somatic mutations [6].

Technical Considerations and Best Practices

Experimental Design Considerations

The performance of somatic variant discovery is significantly influenced by experimental design factors. The GATK Best Practices provide specific guidance for different sequencing approaches [11] [7]:

Whole Genome Sequencing (WGS): Provides uniform coverage across the genome, enabling comprehensive variant discovery in coding and non-coding regions. Typically sequenced at 30-60x depth for normal samples and higher depths (60-100x) for tumor samples.

Whole Exome Sequencing (WES): Targets protein-coding regions, typically achieving higher depth (100-150x) within exons but limited to approximately 2% of the genome.

Targeted Panels: Focus on specific genes of clinical interest, enabling very high sequencing depth (500-1000x) that facilitates detection of low-frequency variants.

The choice of strategy involves trade-offs between breadth of genomic coverage, sequencing depth, and cost. For cancer studies focused on somatic mutation detection, WES often provides an optimal balance, though WGS is superior for detecting variants in non-coding regions and structural variants [7].
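The link between depth and low-frequency detection can be made concrete with a simple binomial calculation. This is a back-of-the-envelope sketch, not a model of Mutect2: it assumes reads are sampled independently, ignores sequencing error and mapping bias, and uses an arbitrary illustrative requirement of three supporting reads (`min_alt_reads`).

```python
from math import comb


def detection_probability(depth, vaf, min_alt_reads=3):
    """P(at least `min_alt_reads` variant reads) when alt reads ~ Binomial(depth, vaf)."""
    if not 0.0 <= vaf <= 1.0:
        raise ValueError("vaf must be between 0 and 1")
    # Probability of seeing fewer than the required number of variant reads.
    p_fewer = sum(comb(depth, k) * vaf**k * (1.0 - vaf)**(depth - k)
                  for k in range(min_alt_reads))
    return 1.0 - p_fewer


if __name__ == "__main__":
    # Compare depths typical of WGS, WES, and targeted panels for a 1% VAF variant.
    for depth in (60, 150, 500, 1000):
        print(depth, round(detection_probability(depth, 0.01), 3))
```

Under these assumptions the probability of sampling enough supporting reads rises steeply with depth, which is why targeted panels are favored for subclonal or low-purity variants.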

Computational Requirements and Implementation

The GATK somatic workflow has specific computational requirements that should be considered when planning analyses. The toolkit is designed to run on Linux and other POSIX-compatible platforms, with Java as the primary dependency (Java 1.8 for earlier GATK4 releases; GATK 4.4 and later require Java 17) [12]. For optimal performance:

Memory Requirements: Somatic variant discovery typically requires 8-16GB of RAM for exome datasets and 16-32GB for whole genomes, though larger panels of normals may increase memory usage.

Processing Time: The computational intensity varies with dataset size, with exome samples typically processing in hours and whole genomes requiring substantially more time.

Parallelization: GATK4 leverages Apache Spark for distributed computing, enabling significant speed improvements for large-scale analyses. The toolkit can be deployed on local clusters, cloud environments, or using container technologies like Docker [12].

The GATK Somatic Short Variant Discovery workflow provides a robust, battle-tested framework for identifying somatic mutations in cancer genomic studies. By integrating multiple specialized tools into a cohesive workflow, it addresses the unique challenges of somatic variant calling, including low variant allele fractions, tumor heterogeneity, and technical artifacts. The structured approach encompassing variant calling, contamination assessment, artifact modeling, and comprehensive filtering enables researchers to achieve high-specificity results suitable for both discovery research and clinical applications. When properly implemented with appropriate controls and resources, this workflow facilitates the generation of reliable somatic variant datasets that can power downstream analyses in cancer genomics research.

The accurate detection of somatic mutations is a cornerstone of cancer genomics, enabling the profiling of cancer development, progression, and chemotherapy resistance [13]. Whole Exome Sequencing (WES) has emerged as a popular and cost-effective approach for this purpose [13]. However, somatic variant discovery from next-generation sequencing data presents unique and significant challenges that can compromise the accuracy and clinical utility of the results. Key among these are tumor heterogeneity, sample contamination, and sequencing artifacts [13] [14] [15]. These factors collectively complicate the bioinformatic process of distinguishing true somatic mutations from germline variants and technical noise. Furthermore, the standard practice of using a matched normal sample for comparison is vulnerable to inaccuracies if the normal sample is contaminated with tumor cells, leading to false negatives and loss of clinically relevant variants [14]. This application note details these critical challenges within the context of using the Genome Analysis Toolkit (GATK) and provides structured experimental protocols and solutions to enhance the reliability of somatic variant calling in research and clinical settings.

Key Challenges and Impact Analysis

The pursuit of accurate somatic variant profiles is hindered by several interconnected biological and technical hurdles. The table below summarizes the primary challenges, their impact on variant calling, and the underlying causes.

Table 1: Key Challenges in Somatic Variant Calling and Their Impacts

| Challenge | Impact on Variant Calling | Primary Cause |
|---|---|---|
| Tumor Heterogeneity | Reduces the Variant Allele Frequency (VAF) of subclonal mutations, lowering detection sensitivity and obscuring the true clonal architecture [13]. | Presence of multiple subpopulations of cancer cells with different mutational profiles within a single tumor [13]. |
| Tumor-in-Normal (TIN) Contamination | Leads to the erroneous subtraction of genuine, high-frequency somatic mutations during matched analysis, increasing false negatives [14]. | Inadvertent presence of tumor cells in the matched normal sample (e.g., in blood or saliva) [14]. |
| Sequencing Artifacts & Errors | Generates false positive variant calls that are not biologically real, requiring extensive filtering and validation [13] [15]. | Limitations in sequencing chemistry, base calling, and read alignment [13]. |
| Germline Variation | Can be mistaken for somatic mutations, leading to a high false positive rate, especially in tumor-only analyses [15]. | Presence of inherited germline variants in the patient's genome [15]. |
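The effect of heterogeneity and normal-cell admixture on VAF can be illustrated with the standard expected-VAF relation. This is a simplified sketch: the function name and parameters are chosen for illustration, and it assumes a single mutation with known multiplicity, a known local tumor copy number, and a diploid normal genome.

```python
def expected_vaf(purity, ccf=1.0, multiplicity=1, tumor_cn=2, normal_cn=2):
    """Expected variant allele fraction of a somatic mutation.

    purity:       fraction of cells in the sample that are tumor cells
    ccf:          cancer cell fraction (share of tumor cells carrying the mutation)
    multiplicity: mutated copies per mutated cell
    tumor_cn:     total copy number at the locus in tumor cells
    normal_cn:    total copy number at the locus in normal cells
    """
    mutated_copies = purity * ccf * multiplicity
    total_copies = purity * tumor_cn + (1.0 - purity) * normal_cn
    return mutated_copies / total_copies


if __name__ == "__main__":
    # Clonal heterozygous mutation in a pure diploid tumor.
    print(expected_vaf(1.0))
    # Same mutation in a subclone (half of tumor cells) at 60% purity.
    print(expected_vaf(0.6, ccf=0.5))
```

A subclonal mutation in an impure sample can therefore sit well below the 50% expected for a clonal heterozygous variant, which is the regime where detection sensitivity drops.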

The Critical Issue of Tumor-in-Normal Contamination

The problem of TIN contamination deserves particular emphasis due to its severe and often underestimated impact on clinical analysis. In a canonical tumor-normal analysis, the matched normal sample is used to subtract the patient's germline variants. If this normal sample contains tumor DNA, this subtraction step will incorrectly remove true somatic variants that are present in both the tumor and the "contaminated" normal [14]. This effect is biased towards variants with high allele frequency in the tumor, which are often the most clinically relevant clonal driver mutations [14]. A systematic review of 771 patients found contamination across a range of cancer types, with the highest prevalence in saliva samples from acute myeloid leukemia patients and sorted CD3+ T-cells from myeloproliferative neoplasms [14]. The following diagram illustrates the logical workflow for assessing and addressing this risk.

Quantitative Performance of Somatic Calling Methods

Different variant calling methods and pipelines exhibit varying performance characteristics, trading off between sensitivity (recall) and specificity (precision). The following table synthesizes key quantitative findings from benchmark studies.

Table 2: Performance Comparison of Somatic Variant Calling Approaches

| Method / Approach | Reported Validation Rate / Precision | Key Characteristics and Limitations |
|---|---|---|
| MuTect | 90% validation rate [13] | High specificity but may yield a limited number of somatic mutations [13]. |
| GATK (HaplotypeCaller) | Lower specificity; increased false positives [13] | Detects a high number of mutations but with low validation rates [13]. |
| GATK-LODN (MuTect+GATK) | Improved GATK performance (3 of 4 variants confirmed vs. 5 of 14) [13] | Combines GATK's sensitivity with MuTect's LODN classifier for improved specificity [13]. |
| Tumor-Only Analysis | 20-21% precision (vs. 30-82% for tumor-normal) [15] | Similar recall (43-60%) to tumor-normal but significantly lower precision due to germline contamination [15]. |
| ClairS-TO (Long-read) | Outperformed Mutect2 on short-read data in benchmarks [16] | A deep-learning method for long-read tumor-only calling; uses ensemble neural networks [16]. |

Recommended Protocols and Integrated Workflow

To address the challenges outlined above, researchers can employ the following structured protocols. The GATK Best Practices provide a robust foundation, which can be enhanced with specific tools and filters for somatic analysis.

Data Pre-processing and Somatic Variant Discovery Protocol

This protocol is adapted from the GATK Best Practices and validated research pipelines [13] [11] [17].

- Quality Control & Trimming: Process raw sequencing reads (FASTQ) using tools like FASTX-Toolkit. Use

fastq_quality_filterandfastq_quality_trimmerto remove low-quality reads and trim bases, typically using a Phred score threshold of 20 [13]. - Alignment to Reference: Align reads to a human reference genome (e.g., GRCh37/hg19) using BWA-MEM with default parameters [13].

- Post-Alignment Processing: This critical step reduces technical artifacts.

- Sorting & Marking Duplicates: Use Picard tools to sort aligned BAM files and mark duplicate reads introduced during PCR amplification [13].

- Local Realignment: Perform local realignment around known indels using GATK's

IndelRealignerto correct alignment artifacts [13]. - Base Quality Score Recalibration (BQSR): Use GATK's

BaseRecalibratorandPrintReadsto correct for systematic errors in base quality scores [13].

- Somatic Short Variant Discovery (SNVs + Indels):

- Option A: Paired Tumor-Normal with Mutect2. Use Mutect2 as the primary somatic caller, which is specifically designed for this purpose and is part of the GATK toolkit [18] [17].

- Option B: Integrated GATK-LODN Pipeline.

- Call variants in normal and tumor BAMs independently using GATK HaplotypeCaller.

- Filter variants using GATK's Variant Quality Score Recalibration (VQSR).

- Subtract normal variants from tumor variants to get an initial candidate set.

- Apply the MuTect LODN (Log Odds) classifier to these candidates. This Bayesian classifier uses read counts from the normal sample to ensure somatic classification (requiring, for example, read depth ≥8 in normal and ≥14 in tumor) [13].

- Final Callset: The union of variants from MuTect and the GATK-LODN filtered set forms the final high-confidence somatic callset [13].
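The set logic of Option B can be sketched in a few lines of Python. This is only an illustration of the subtraction, depth gate, and union described above; it is not the MuTect LODN classifier itself, and the function names and the tuple representation of a variant are assumptions made for the example.

```python
from typing import Dict, Set, Tuple

Variant = Tuple[str, int, str, str]  # (chrom, pos, ref, alt); hypothetical representation

def candidate_somatic(tumor_calls: Set[Variant], normal_calls: Set[Variant]) -> Set[Variant]:
    """Subtract variants seen in the normal from the tumor calls."""
    return tumor_calls - normal_calls

def passes_depth_gate(normal_depth: int, tumor_depth: int,
                      min_normal: int = 8, min_tumor: int = 14) -> bool:
    """Depth requirement quoted in the text (>=8 in normal, >=14 in tumor)."""
    return normal_depth >= min_normal and tumor_depth >= min_tumor

def final_callset(mutect_calls: Set[Variant], tumor_calls: Set[Variant],
                  normal_calls: Set[Variant],
                  depths: Dict[Variant, Tuple[int, int]]) -> Set[Variant]:
    """Union of the MuTect calls and the depth-gated subtraction candidates.

    depths maps a variant to (normal_depth, tumor_depth); candidates without
    depth information are dropped.
    """
    candidates = candidate_somatic(tumor_calls, normal_calls)
    gated = {v for v in candidates if v in depths and passes_depth_gate(*depths[v])}
    return mutect_calls | gated
```

A real implementation would also evaluate the log odds in the normal sample; the depth gate here only ensures there is enough coverage for that evaluation to be meaningful.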

Tumor-in-Normal Contamination Assessment Protocol

For studies using WGS, the following protocol using the TINC tool is recommended to assess normal sample purity [14].

- Input: Analysis-ready BAM files for the tumor and its matched normal, along with somatic SNV calls and (optionally) copy number alteration (CNA) calls for the tumor.

- Run TINC: Execute the TINC R package on the input data.

- TINC uses the MOBSTER tool to perform subclonal deconvolution and identify high-confidence clonal somatic mutations in the tumor sample.

- It then fits the read counts for these clonal mutations in the matched normal sample to a Binomial mixture model to estimate the TIN score (percentage of reads in the normal derived from the tumor) [14].

- Interpretation and Action:

- A low TIN score (e.g., below a predefined threshold for your study) provides confidence in the matched normal analysis.

- A high TIN score indicates significant contamination. In this case, the matched normal should not be used for germline subtraction. The analysis should switch to a tumor-only workflow, leveraging population frequency databases (e.g., gnomAD) to filter germline variants [14].
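To make the interpretation step concrete, the sketch below shows a deliberately simplified contamination estimate followed by the workflow decision. It is not TINC's Binomial mixture model: it assumes every supplied mutation is clonal, heterozygous, and in a diploid region, so that the expected alternate-allele fraction in the normal is half the tumor-derived read fraction. The function names and the threshold passed in are hypothetical.

```python
from typing import Iterable, Tuple

def estimate_tin(normal_counts: Iterable[Tuple[int, int]]) -> float:
    """Pooled estimate of the tumor-derived read fraction in the normal.

    normal_counts holds (alt_reads, total_depth) in the normal sample at
    clonal tumor mutations. Simplifying assumption: diploid, heterozygous
    sites, so TIN is about twice the pooled alternate-allele fraction.
    """
    counts = list(normal_counts)
    total_alt = sum(alt for alt, _ in counts)
    total_depth = sum(depth for _, depth in counts)
    if total_depth == 0:
        raise ValueError("no coverage at the supplied clonal sites")
    return min(1.0, 2.0 * total_alt / total_depth)

def choose_workflow(tin_score: float, threshold: float) -> str:
    """Pick the analysis mode; the threshold is study-specific."""
    return "matched-normal" if tin_score < threshold else "tumor-only"
```

With two alternate reads across 400 total reads, the estimate is 0.01, which would keep the matched-normal workflow under a hypothetical threshold of 0.05.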

The following workflow diagram integrates these protocols into a cohesive analysis pipeline, incorporating key decision points.

This table catalogs key software tools, databases, and resources that are essential for implementing robust somatic variant calling workflows.

Table 3: Key Resources for Somatic Variant Analysis

| Resource Name | Type | Primary Function in Analysis |

|---|---|---|

| GATK (Genome Analysis Toolkit) | Software Toolkit | Industry-standard package for variant discovery; includes tools for data pre-processing, germline, and somatic (Mutect2) variant calling [12] [11] [17]. |

| Mutect2 | Variant Caller | A specialized GATK tool for calling somatic SNVs and indels with high accuracy using a Bayesian model, designed for paired and tumor-only analyses [18] [17]. |

| BWA (Burrows-Wheeler Aligner) | Aligner | Aligns sequencing reads to a reference genome, a critical first step in the analysis pipeline [13] [15]. |

| Picard | Software Toolkit | Provides essential command-line tools for manipulating sequencing data (BAM/SAM), including sorting and marking duplicates [13] [12]. |

| ANNOVAR | Annotation Tool | Functional annotation of genetic variants detected from sequencing data, linking them to known genes and databases [13] [15]. |

| TINC | Quality Control Tool | Estimates the level of tumor cell contamination in a matched normal sample from WGS data, which is crucial for clinical reporting confidence [14]. |

| dbSNP / 1000 Genomes | Germline Database | Public databases of known human germline variation used to filter out common polymorphisms from candidate somatic variant lists [13] [15]. |

| BAMSurgeon | Simulation Tool | Spikes simulated somatic variants into real BAM files to create in-silico tumor samples for benchmarking and tuning somatic variant callers [19]. |

In cancer genomics research, identifying somatic mutations from tumor samples is fundamental for understanding tumorigenesis, developing targeted therapeutic strategies, and identifying cancer susceptibility genes [20]. The Genome Analysis Toolkit (GATK) provides a robust, well-validated framework for somatic short variant discovery (SNVs and Indels) that has become a standard in both academic and clinical research settings [5]. This integrated workflow transforms raw sequencing data into biologically interpretable mutations through a multi-step process that ensures accuracy and reliability. The process begins with pre-processed BAM files and proceeds through variant calling, filtering, and annotation stages, each designed to address specific computational challenges in somatic mutation detection.

The critical challenge in somatic analysis lies in distinguishing true somatic variants from various artifacts, including sequencing errors, alignment issues, and sample contamination. The GATK somatic workflow specifically addresses these challenges through specialized tools that implement sophisticated statistical models and filters. Unlike germline variant calling, somatic analysis must detect low-allelic-fraction variants while accounting for tumor-specific phenomena such as contamination, orientation bias, and copy number variations [5]. This protocol focuses on the three core tools that form the backbone of the GATK somatic variant calling workflow: Mutect2 for initial variant calling, FilterMutectCalls for variant refinement, and Funcotator for biological interpretation, providing researchers with a complete framework for cancer genomic analysis.

The complete somatic analysis pathway integrates multiple GATK tools in a specific sequence to ensure optimal results. The following diagram illustrates the primary workflow and data flow between tools:

Figure 1: The GATK Somatic Variant Discovery Workflow. This end-to-end process transforms input BAM files into fully annotated mutation lists, integrating multiple quality control and filtering steps.

As illustrated in Figure 1, the workflow begins with Mutect2 generating raw candidate variants, which then undergo comprehensive filtering by FilterMutectCalls using additional inputs from contamination estimation and orientation bias analysis. The final step involves Funcotator adding biological context to the filtered variants. This structured approach ensures that only high-confidence variants proceed to annotation while maintaining sensitivity for detecting true somatic mutations, even at low allele frequencies. Each tool addresses specific aspects of the variant calling challenge, creating a synergistic system that outperforms any single tool used in isolation.

Tool-Specific Protocols and Applications

Mutect2: Somatic Variant Calling via Local Assembly

Overview and Scientific Rationale

Mutect2 represents the core variant discovery engine in the GATK somatic workflow, designed to identify both SNVs and indels simultaneously through local de novo assembly of haplotypes [5]. Rather than relying solely on the aligner's original placement of reads, Mutect2 reassembles the reads in active regions showing signs of variation into candidate haplotypes, then realigns each read to those haplotypes using the Pair-HMM algorithm. This assembly-based approach significantly improves detection of complex variants and indels in repetitive regions. The tool applies a Bayesian somatic likelihoods model to calculate log odds for alleles being true somatic variants versus sequencing errors, providing a robust statistical foundation for variant identification.

Mutect2 operates effectively with both matched tumor-normal pairs and tumor-only samples, making it versatile for various research scenarios. In matched mode, it directly compares tumor and normal samples to identify somatic changes. In tumor-only mode, it leverages population resources to filter common germline polymorphisms. The tool's ability to perform local reassembly makes it particularly powerful for detecting indels and complex mutations that would be missed by alignment-based callers, addressing a critical need in cancer genomics where such mutations play important roles in tumorigenesis.

Key Parameters and Implementation

Table 1: Essential Mutect2 Arguments for Somatic Variant Calling

| Argument | Default Value | Description | Research Application |

|---|---|---|---|

| --germline-resource | None | Population VCF of germline variants | Critical for tumor-only mode; enables filtering of common germline variants |

| --panel-of-normals | None | VCF of artifacts seen in normal samples | Reduces false positives from technical artifacts |

| --af-of-alleles-not-in-resource | 0.000001 | Prior allele frequency for novel variants | Controls sensitivity for rare somatic variants |

| --native-pair-hmm-threads | 4 | Computational threads for PairHMM | Optimizes runtime performance for large datasets |

| --output | None | Output VCF file | Specifies results destination |

| --reference | None | Reference genome FASTA | Required for accurate reassembly and coordinate handling |

Practical Protocol

Input Preparation: Begin with pre-processed BAM files aligned to an appropriate reference genome (hg19 or hg38). Ensure proper indexing and validation of input files.

Basic Command Execution:
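The command itself is missing from the text, so the following is a minimal sketch of how a matched tumor-normal Mutect2 invocation could be assembled. It uses Python to build the argument list so that it can be inspected before launching. All file names are placeholders, the function name is hypothetical, and the optional arguments correspond to entries in Table 1 plus the F1R2 output used for orientation-bias modeling.

```python
from typing import List, Optional

def mutect2_matched_cmd(reference: str, tumor_bam: str, normal_bam: str,
                        normal_sample: str, output_vcf: str,
                        germline_resource: Optional[str] = None,
                        panel_of_normals: Optional[str] = None,
                        f1r2_tar_gz: Optional[str] = None) -> List[str]:
    """Build a Mutect2 argument list for a matched tumor-normal pair."""
    cmd = ["gatk", "Mutect2",
           "--reference", reference,
           "--input", tumor_bam,
           "--input", normal_bam,
           # Must equal the SM read-group value of the normal BAM.
           "--normal-sample", normal_sample]
    if germline_resource:
        cmd += ["--germline-resource", germline_resource]
    if panel_of_normals:
        cmd += ["--panel-of-normals", panel_of_normals]
    if f1r2_tar_gz:
        # Raw counts later consumed by LearnReadOrientationModel.
        cmd += ["--f1r2-tar-gz", f1r2_tar_gz]
    cmd += ["--output", output_vcf]
    return cmd
```

Passing the returned list to subprocess.run(cmd, check=True) would launch the tool, assuming the gatk wrapper is on the PATH.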

Tumor-Only Calling Strategy: For studies without matched normal samples, omit the normal BAM and sample name while ensuring a comprehensive germline resource is provided:
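A tumor-only invocation might be assembled as in the sketch below, which refuses to build the command without a germline resource, since that resource is the main guard against germline variants in this mode. File names are placeholders and the function name is hypothetical.

```python
from typing import List, Optional

def mutect2_tumor_only_cmd(reference: str, tumor_bam: str, germline_resource: str,
                           output_vcf: str,
                           panel_of_normals: Optional[str] = None) -> List[str]:
    """Build a Mutect2 argument list with no normal BAM and no normal sample name."""
    if not germline_resource:
        raise ValueError("tumor-only calling needs a germline resource")
    cmd = ["gatk", "Mutect2",
           "--reference", reference,
           "--input", tumor_bam,
           "--germline-resource", germline_resource]
    if panel_of_normals:
        cmd += ["--panel-of-normals", panel_of_normals]
    cmd += ["--output", output_vcf]
    return cmd
```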

Output Interpretation: The raw VCF output contains initial variant calls with extensive annotation including tumor LOD (log odds for tumor versus sequencing error), normal LOD (log odds for normal versus sequencing error), and population allele frequencies. These metrics provide the foundation for subsequent filtering steps.

FilterMutectCalls: Advanced Filtering for Somatic Variants

Overview and Scientific Rationale

FilterMutectCalls addresses the critical challenge of distinguishing true somatic variants from various artifacts that pass initial calling thresholds [21] [22]. While Mutect2's statistical model assumes independent read errors, real-world data often contains correlated errors arising from PCR artifacts, mapping issues, sample contamination, and sequencing artifacts. FilterMutectCalls implements multiple specialized filters to address these challenges through hard filters for alignment artifacts and probabilistic models for strand bias, orientation artifacts, polymerase slippage, germline contamination, and sample cross-contamination.

The tool incorporates a sophisticated Bayesian model that learns the SNV and indel mutation rate and allele fraction spectrum specific to each tumor, refining the initial log odds emitted by Mutect2 [5]. This adaptive approach allows it to set sample-specific filtering thresholds that optimize the F-score, balancing sensitivity and precision according to the unique characteristics of each dataset. The automatic threshold optimization represents a significant advancement over fixed threshold approaches, particularly for cancer samples with varying tumor purity and mutation burdens.
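The idea of choosing a threshold that optimizes the F-score can be illustrated with a short sketch. Given a per-variant probability that each call is an error, it scans every possible cutoff and keeps the one with the highest expected F1. This is an illustration of the principle under that assumption, not the FilterMutectCalls implementation, and the function name is hypothetical.

```python
from typing import Iterable, Tuple

def best_error_threshold(error_probs: Iterable[float]) -> Tuple[float, float]:
    """Return (threshold, expected_f1), accepting calls with error prob <= threshold."""
    probs = sorted(error_probs)
    # Expected number of real variants among all candidates.
    total_true = sum(1.0 - p for p in probs)
    best_threshold, best_f1 = 0.0, 0.0
    exp_tp = 0.0  # expected true positives among accepted calls
    exp_fp = 0.0  # expected false positives among accepted calls
    for p in probs:
        exp_tp += 1.0 - p
        exp_fp += p
        exp_fn = total_true - exp_tp  # real variants that would be filtered
        f1 = 2.0 * exp_tp / (2.0 * exp_tp + exp_fp + exp_fn)
        if f1 > best_f1:
            best_threshold, best_f1 = p, f1
    return best_threshold, best_f1
```

For error probabilities of 0.01, 0.02, 0.05, 0.9, and 0.95, the scan accepts the first three calls. Admitting the fourth would add nearly a full expected false positive for very little expected gain in true positives.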

Comprehensive Filtering Criteria

Table 2: Key Filtering Criteria in FilterMutectCalls

| Filter Category | Specific Filters | Purpose | Default Threshold |

|---|---|---|---|

| Quality Metrics | min-median-mapping-quality | Filters variants with poor mapping | 30 |

| Quality Metrics | min-median-base-quality | Filters variants with low base quality | 20 |

| Quality Metrics | unique-alt-read-count | Requires unique supporting reads | 0 (disabled) |

| Artifact Detection | strand-artifact | Detects strand-specific artifacts | FDR < 0.05 |

| Artifact Detection | normal-artifact-lod | Identifies artifacts present in normal | 0.0 |

| Artifact Detection | pcr-slippage | Detects polymerase slippage in STRs | p < 0.001 |

| Contamination Control | contamination | Filters variants likely from contamination | p < 0.1 |

| Contamination Control | max-germline-posterior | Removes likely germline variants | 0.1 |

| Statistical Filters | tumor-lod | Minimum evidence for somatic origin | 5.3 |

| Statistical Filters | multiallelic | Filters complex sites with multiple alts | 2 events/region |

Practical Protocol

Prerequisite Analysis Steps: Before running FilterMutectCalls, generate necessary supporting data:
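The supporting data are typically an orientation-bias model learned from Mutect2's F1R2 counts and a contamination table derived from pileups at common SNP sites. The sketch below assembles those commands as argument lists. The output naming scheme, the function name, and all file names are assumptions made for the example.

```python
from typing import List, Optional

def prerequisite_cmds(tumor_bam: str, common_snps_vcf: str, f1r2_tar_gz: str,
                      prefix: str, normal_bam: Optional[str] = None) -> List[List[str]]:
    """Commands that produce the auxiliary inputs for FilterMutectCalls."""
    tumor_pileups = f"{prefix}.tumor-pileups.table"
    cmds = [
        # Orientation-bias priors from the F1R2 counts written by Mutect2.
        ["gatk", "LearnReadOrientationModel", "--input", f1r2_tar_gz,
         "--output", f"{prefix}.read-orientation-model.tar.gz"],
        # Allele counts at common germline SNP sites in the tumor.
        ["gatk", "GetPileupSummaries", "--input", tumor_bam,
         "--variant", common_snps_vcf, "--intervals", common_snps_vcf,
         "--output", tumor_pileups],
    ]
    contamination = ["gatk", "CalculateContamination", "--input", tumor_pileups,
                     "--tumor-segmentation", f"{prefix}.segments.table",
                     "--output", f"{prefix}.contamination.table"]
    if normal_bam:
        normal_pileups = f"{prefix}.normal-pileups.table"
        cmds.append(["gatk", "GetPileupSummaries", "--input", normal_bam,
                     "--variant", common_snps_vcf, "--intervals", common_snps_vcf,
                     "--output", normal_pileups])
        contamination += ["--matched-normal", normal_pileups]
    cmds.append(contamination)
    return cmds
```

The commands are returned in dependency order, so running them sequentially (for example with subprocess.run on each list) respects the fact that CalculateContamination consumes the pileup tables.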

Comprehensive Filtering Execution:
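A filtering run that passes the contamination estimate, tumor segmentation, and orientation-bias priors might be built as follows. This is a sketch with placeholder file names and a hypothetical function name; each auxiliary input is optional so that the same helper covers runs where one of them is unavailable.

```python
from typing import List, Optional

def filter_mutect_calls_cmd(reference: str, unfiltered_vcf: str, output_vcf: str,
                            contamination_table: Optional[str] = None,
                            tumor_segmentation: Optional[str] = None,
                            orientation_priors: Optional[str] = None) -> List[str]:
    """Build a FilterMutectCalls argument list with optional auxiliary inputs."""
    cmd = ["gatk", "FilterMutectCalls",
           "--reference", reference,
           "--variant", unfiltered_vcf]
    if contamination_table:
        cmd += ["--contamination-table", contamination_table]
    if tumor_segmentation:
        cmd += ["--tumor-segmentation", tumor_segmentation]
    if orientation_priors:
        cmd += ["--orientation-bias-artifact-priors", orientation_priors]
    cmd += ["--output", output_vcf]
    return cmd
```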

Mitochondrial DNA Analysis: For mitochondrial genome analysis, enable specialized mode:
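For mitochondrial calls, the filtering command differs mainly by one switch. The sketch below assumes a GATK4 release that provides the --mitochondria-mode flag; file names and the function name are placeholders.

```python
from typing import List

def filter_mito_calls_cmd(reference: str, unfiltered_vcf: str, output_vcf: str) -> List[str]:
    """FilterMutectCalls argument list with mitochondrial defaults enabled."""
    return ["gatk", "FilterMutectCalls",
            "--reference", reference,
            "--variant", unfiltered_vcf,
            "--mitochondria-mode",
            "--output", output_vcf]
```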

Output Interpretation: The filtered VCF contains PASS variants that represent high-confidence somatic calls. Carefully review the filter fields to understand why specific variants were removed, as this informs potential adjustments to filtering stringency based on research goals.

Funcotator: Functional Annotation of Somatic Variants

Overview and Scientific Rationale

Funcotator (FUNCtional annOTATOR) transforms genomic variant lists into biologically interpretable datasets by adding functional annotations from multiple curated data sources [23]. This tool addresses the critical research need to prioritize and interpret somatic mutations by providing gene-level information, functional predictions, and known variant annotations. Funcotator supports both VCF and MAF (Mutation Annotation Format) output, with MAF being particularly valuable for cancer genomics due to its compatibility with analysis tools like maftools and cBioPortal.

The tool employs a modular data source architecture that enables annotation based on gene name, transcript ID, or genomic position [23]. Pre-packaged data sources include GENCODE for gene structure and consequence prediction, dbSNP for known polymorphisms, gnomAD for population frequencies, and COSMIC for cancer-specific mutations. For somatic analysis, Funcotator assigns one of twenty-three distinct variant classifications that precisely describe the functional impact of each mutation, enabling immediate biological interpretation and prioritization of driver mutations.

Annotation Specifications and Data Sources

Table 3: Core Funcotator Data Sources for Somatic Analysis

| Data Source | Annotation Content | Research Utility | Activation Requirement |

|---|---|---|---|

| GENCODE | Gene structure, transcript information | Required for variant consequence prediction | Always enabled |

| dbSNP | Known polymorphism IDs and frequencies | Germline versus somatic discrimination | Included in pre-packaged sources |

| gnomAD | Population allele frequencies | Filtering of common germline variants | Manual extraction from tar.gz |

| COSMIC | Cancer-specific mutations | Identification of known cancer mutations | Included in somatic data sources |

| ClinVar | Clinical significance | Pathogenicity assessment | Included in pre-packaged sources |

| User-Defined | Custom gene sets or regions | Study-specific prioritization | Configurable via XSV files |

Variant Classification System

Funcotator employs a comprehensive classification system that includes:

- MISSENSE: Amino acid altering changes in coding regions

- NONSENSE: Premature stop codon creation

- SPLICE_SITE: Variants affecting splicing regions

- FRAME_SHIFT_INS / FRAME_SHIFT_DEL: Frameshifting insertions or deletions

- INTRON: Variants in intronic regions

- 5'UTR/3'UTR: Untranslated region variants

- IGR: Intergenic region variants [24]

Practical Protocol

Data Source Setup: Download and prepare somatic data sources:
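The download step might look like the sketch below, which builds a FuncotatorDataSourceDownloader command for the somatic bundle. The function name is hypothetical, and the integrity check and extraction are shown as optional switches.

```python
from typing import List

def download_somatic_sources_cmd(validate: bool = True, extract: bool = True) -> List[str]:
    """Argument list for fetching the pre-packaged somatic data sources."""
    cmd = ["gatk", "FuncotatorDataSourceDownloader", "--somatic"]
    if validate:
        cmd.append("--validate-integrity")      # verify the downloaded archive
    if extract:
        cmd.append("--extract-after-download")  # unpack the tar.gz in place
    return cmd
```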

Basic Annotation Execution:
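A basic annotation run producing MAF output could be assembled as in this sketch. File names, the data source directory, and the function name are placeholders, and the reference version must match the build the reads were aligned to.

```python
from typing import List

def funcotator_cmd(reference: str, variants: str, data_sources_path: str,
                   ref_version: str, output: str, output_format: str = "MAF") -> List[str]:
    """Build a Funcotator argument list; output_format is VCF or MAF."""
    if output_format not in ("VCF", "MAF"):
        raise ValueError("output_format must be 'VCF' or 'MAF'")
    return ["gatk", "Funcotator",
            "--reference", reference,
            "--variant", variants,
            "--ref-version", ref_version,
            "--data-sources-path", data_sources_path,
            "--output", output,
            "--output-file-format", output_format]
```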

Customized Annotation for Specific Needs:
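Customization usually means choosing how transcripts are selected, dropping filtered variants, and supplying default values for annotations that the input does not carry. The sketch below shows one way to add those options. The function name is hypothetical and the annotation keys in the usage note are illustrative only.

```python
from typing import Dict, List, Optional

TRANSCRIPT_MODES = ("BEST_EFFECT", "CANONICAL", "ALL")

def funcotator_custom_cmd(reference: str, variants: str, data_sources_path: str,
                          ref_version: str, output_maf: str,
                          transcript_mode: str = "CANONICAL",
                          remove_filtered: bool = True,
                          annotation_defaults: Optional[Dict[str, str]] = None) -> List[str]:
    """Funcotator argument list with transcript selection and annotation defaults."""
    if transcript_mode not in TRANSCRIPT_MODES:
        raise ValueError(f"transcript_mode must be one of {TRANSCRIPT_MODES}")
    cmd = ["gatk", "Funcotator",
           "--reference", reference,
           "--variant", variants,
           "--ref-version", ref_version,
           "--data-sources-path", data_sources_path,
           "--output", output_maf,
           "--output-file-format", "MAF",
           "--transcript-selection-mode", transcript_mode]
    if remove_filtered:
        # Annotate only variants that passed upstream filtering.
        cmd.append("--remove-filtered-variants")
    for key, value in (annotation_defaults or {}).items():
        cmd += ["--annotation-default", f"{key}:{value}"]
    return cmd
```

For example, annotation_defaults={"Center": "my_lab"} would add a default value for a MAF column that Funcotator cannot infer from the VCF.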

Output Interpretation: The MAF output contains comprehensive annotations including Hugo_Symbol, Variant_Classification, Protein_Change, and data source-specific fields. Use these annotations to filter variants based on functional impact, population frequency, and prior evidence in cancer databases, enabling prioritization of biologically relevant mutations for experimental validation.
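As a minimal sketch of that prioritization step, the code below reads a MAF (tab-delimited, with comment lines starting with "#") and keeps records whose classification is in a caller-supplied set. The function names are hypothetical, and the classification labels you pass should be checked against the values present in your own MAF.

```python
import csv
from typing import Dict, Iterable, List

def read_maf(text: str) -> List[Dict[str, str]]:
    """Parse MAF text into dictionaries, skipping blank and '#' comment lines."""
    rows = [line for line in text.splitlines()
            if line.strip() and not line.startswith("#")]
    return list(csv.DictReader(rows, delimiter="\t"))

def prioritize(records: Iterable[Dict[str, str]],
               keep_classes: Iterable[str]) -> List[Dict[str, str]]:
    """Keep records whose Variant_Classification is in keep_classes."""
    wanted = set(keep_classes)
    return [r for r in records if r["Variant_Classification"] in wanted]
```

The same pattern extends to population frequency or database fields by adding further predicates on the parsed dictionaries.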

Integrated Analysis Protocol

End-to-End Experimental Workflow

A complete somatic analysis requires careful integration of all three tools in a coordinated workflow:

Quality Control and Preparation (Day 1):

- Verify BAM file integrity and indexing

- Confirm sample metadata and pairing information

- Prepare reference genome and resource files

Variant Discovery and Filtering (Days 1-2):

- Execute Mutect2 on all tumor-normal pairs

- Generate contamination estimates and orientation bias priors

- Apply FilterMutectCalls with all auxiliary inputs

- Quality check using variant statistics and filtering reports

Annotation and Interpretation (Day 2):

- Run Funcotator with somatic data sources

- Generate MAF files for downstream analysis

- Review variant classifications and prioritize candidates

The Scientist's Toolkit: Essential Research Reagents

Table 4: Key Research Reagent Solutions for GATK Somatic Analysis

| Reagent/Resource | Function | Example Source | Critical Application |

|---|---|---|---|

| Reference Genome | Coordinate reference for alignment | GATK Resource Bundle | Essential for all analysis steps |

| Germline Resource | Filtering common polymorphisms | gnomAD, 1000 Genomes | Tumor-only analysis quality |

| Panel of Normals | Technical artifact identification | Created in-house from normal samples | False positive reduction |

| Data Sources | Functional annotation | FuncotatorDataSourceDownloader | Biological interpretation |

| Common SNPs | Contamination estimation | GATK Resource Bundle | Cross-sample contamination |


The integrated use of Mutect2, FilterMutectCalls, and Funcotator represents a comprehensive, robust solution for somatic variant discovery in cancer genomics research. This toolchain transforms raw sequencing data into biologically interpretable mutations through a sophisticated multi-stage process that balances sensitivity and specificity. The workflow addresses the unique challenges of somatic analysis, including low variant allele fractions, tumor heterogeneity, and various technical artifacts, making it suitable for both research and clinical applications.

As cancer genomics continues to evolve toward single-cell applications [25] and integrated analysis platforms [20], the principles implemented in these GATK tools provide a foundation for accurate mutation detection. The structured approach outlined in this protocol ensures researchers can reliably identify somatic variants while maintaining flexibility for study-specific adaptations, ultimately supporting advances in understanding tumorigenesis and developing targeted cancer therapies.

In cancer genomics research, the detection of somatic variants from next-generation sequencing (NGS) data is a fundamental process for understanding tumor biology, identifying therapeutic targets, and guiding personalized treatment strategies. The accuracy of this detection hinges critically on the quality of the input data provided to variant callers. The Binary Alignment/Map (BAM) file serves as the primary data structure containing aligned sequence reads and their associated metadata, forming the foundation upon which all subsequent variant discovery is built [26]. This application note details the essential specifications and pre-processing best practices for BAM files within the context of the Genome Analysis Toolkit (GATK) framework, with a specific focus on optimizing somatic variant calling in cancer research.

The GATK Best Practices provide rigorously validated workflows for variant discovery in high-throughput sequencing data [11]. For somatic analysis in cancer, these practices emphasize that proper data pre-processing is not merely a preliminary step but a critical determinant of variant calling accuracy. Errors introduced during pre-processing or overlooked in quality control can manifest as both false positive and false negative variant calls, potentially compromising research conclusions and clinical interpretations [7]. This document outlines the complete pathway from raw sequencing reads to analysis-ready BAM files, specifying the technical requirements, quality metrics, and optimization strategies essential for reliable somatic mutation detection in tumor samples.

BAM File Structure and Essential Specifications

Fundamental BAM File Composition

A BAM file is the compressed binary representation of a Sequence Alignment/Map (SAM) file, storing aligned sequencing reads and their alignment information to a reference genome [26]. The file structure consists of two primary components:

- Header Section: Contains metadata describing the entire file content, including reference sequence information, sample identity, and processing history. The header stores read group (@RG) information, which is indispensable for downstream analysis.

- Alignment Section: Contains the detailed alignment information for each read, including chromosome coordinates, mapping quality, CIGAR string, and custom tags. Each alignment record must include the RG tag associating it with a read group defined in the header [26].

Mandatory Read Group Specifications

Proper read group information is not optional in GATK workflows; the toolkit will not function correctly without these tags [27]. The @RG line in the header must be populated with specific fields that establish sample identity and sequencing experimental parameters:

Table 1: Essential Read Group Fields for Somatic Variant Calling

| Field | Description | Example Value | Importance in Somatic Calling |

|---|---|---|---|

| ID | Read group identifier | Tumor_L001 | Uniquely identifies sequencing lane/library combinations |

| SM | Sample identifier | Patient_1_Tumor | Critical: Links reads to sample; the tumor and normal must carry different SM values, and the normal's SM is the name given to Mutect2 as the normal sample |

| PL | Sequencing platform | ILLUMINA | Informs platform-specific error models |

| LB | Library identifier | Library_Prep_A | Identifies potential batch effects in library preparation |

| PU | Platform unit | HISEQ2500_1 | Distributes processing and identifies lane-specific artifacts |

During alignment using BWA-MEM, the read group information is incorporated using the -R parameter with the following syntax [27]:
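The syntax example is missing from the text. As a sketch, the helper below composes the string that would be passed to bwa mem -R from the fields in Table 1. BWA expects the two-character sequence backslash-t between fields, which it converts to tabs in the SAM header. The function name is hypothetical.

```python
def bwa_read_group(rg_id: str, sample: str, platform: str,
                   library: str, platform_unit: str) -> str:
    """Return an @RG string for bwa mem -R, with literal backslash-t separators."""
    fields = [("ID", rg_id), ("SM", sample), ("PL", platform),
              ("LB", library), ("PU", platform_unit)]
    # "\\t" is a backslash followed by 't', not a tab character.
    return "@RG" + "".join(f"\\t{key}:{value}" for key, value in fields)
```

With the example values from Table 1, the result is @RG\tID:Tumor_L001\tSM:Patient_1_Tumor\tPL:ILLUMINA\tLB:Library_Prep_A\tPU:HISEQ2500_1, which on a shell command line would be wrapped in single quotes.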

Alignment-Specific Requirements

For somatic variant calling, alignment records must meet specific quality standards to ensure accurate mutation detection:

- Mapping Quality: Alignments should have appropriately calibrated mapping quality scores. Low mapping quality reads may indicate ambiguous mappings that can generate false positive variant calls.

- CIGAR String Accuracy: The CIGAR (Compact Idiosyncratic Gapped Alignment Report) string must accurately represent the alignment, including matches/mismatches, insertions, deletions, and clipping operations. Misrepresented gaps contribute to indel calling errors.

- Base Quality Scores: Original base quality scores from the sequencer must be preserved in the QUAL field, because recalibration later adjusts these scores rather than regenerating them.

- Mate Information: For paired-end sequencing, proper mate alignment information must be maintained, including correct pairing orientation and insert size metrics.

Pre-processing Workflow for Somatic Variant Calling

The GATK Best Practices define a systematic pre-processing workflow that transforms raw sequencing data into analysis-ready BAM files [9]. This workflow consists of three principal stages that correct for technical artifacts and prepare the data for variant discovery.

Comprehensive Pre-processing Workflow

The following diagram illustrates the complete pre-processing pathway from raw sequencing data to analysis-ready BAM files:

Mapping to Reference

The initial processing step aligns raw sequencing reads to the reference genome using BWA-MEM, which is specifically recommended for its performance with longer reads (75bp and above) [27]. This step is performed per-read group and requires:

- Reference Genome Preparation: The reference genome must be properly indexed for BWA using bwa index. For references larger than 2GB, the -a bwtsw algorithm must be specified [27].

- BWA-MEM Parameters: The -M flag marks shorter split hits as secondary alignments, which is essential for GATK compatibility. Proper read group information must be supplied using the -R parameter [27].

- Output Handling: The SAM output from BWA is typically piped directly to sorting tools to avoid unnecessary intermediate file storage [28].

Post-Alignment Processing: Sorting and Duplicate Marking

After alignment, reads must be sorted by genomic coordinate to enable efficient processing in subsequent steps [9]. The GATK Best Practices recommend using SortSam with SORT_ORDER=coordinate to achieve this [27].

Duplicate marking identifies reads that likely originated from the same original DNA fragment due to PCR amplification artifacts [9]. These non-independent observations are tagged (not removed) so variant callers can appropriately weight the evidence. For optimal performance in somatic calling:

- Tool Selection: MarkDuplicatesSpark provides significant performance improvements through parallel processing while producing bit-wise identical results to the standard MarkDuplicates tool [9].

- Impact on Somatic Calling: PCR duplicates can bias variant allele frequency estimations, which is particularly critical for detecting subclonal populations in tumor samples. Proper duplicate marking ensures accurate allele frequency calculations [7].

Base Quality Score Recalibration (BQSR)

Base Quality Score Recalibration (BQSR) corrects systematic technical errors in the base quality scores assigned by the sequencer [9]. This two-step process applies a machine learning model to detect patterns of such errors and adjust quality scores accordingly:

- Covariate Analysis: BaseRecalibrator analyzes multiple covariates including read group, quality score, cycle, and context to build an error model.

- Quality Adjustment: ApplyBQSR applies the recalibration model to adjust base quality scores in the dataset.

For cancer genomics, BQSR is particularly important because variant calling algorithms rely heavily on base quality scores when weighing evidence for or against potential variants [9]. Inaccurate quality scores can lead to both false positives and false negatives in mutation detection.
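The two BQSR steps could be assembled as in the sketch below. The known-sites VCFs (for example dbSNP) mark positions where mismatches should not be counted as errors. File names, the output naming scheme, and the function name are assumptions made for the example.

```python
from typing import List, Sequence

def bqsr_cmds(reference: str, input_bam: str, known_sites: Sequence[str],
              prefix: str) -> List[List[str]]:
    """Return [BaseRecalibrator, ApplyBQSR] argument lists in run order."""
    if not known_sites:
        raise ValueError("BaseRecalibrator needs at least one known-sites file")
    recal_table = f"{prefix}.recal.table"
    # Step 1: build the covariate-based error model.
    build_model = ["gatk", "BaseRecalibrator",
                   "--reference", reference,
                   "--input", input_bam,
                   "--output", recal_table]
    for vcf in known_sites:
        build_model += ["--known-sites", vcf]
    # Step 2: write a BAM with adjusted base qualities.
    apply_model = ["gatk", "ApplyBQSR",
                   "--reference", reference,
                   "--input", input_bam,
                   "--bqsr-recal-file", recal_table,
                   "--output", f"{prefix}.recal.bam"]
    return [build_model, apply_model]
```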

Performance Optimization and Implementation Strategies

Computational Efficiency and Resource Optimization

Processing large whole-genome sequencing datasets for cancer research requires significant computational resources. The following table summarizes performance characteristics for key pre-processing steps:

Table 2: Performance Optimization Guidelines for Pre-processing Steps

| Processing Step | Optimal Thread Count | Memory Configuration | Throughput Benchmark | Recommended Tool |

|---|---|---|---|---|

| Adapter Trimming | 2-6 threads | Moderate (8-16GB) | 123,228 pairs/sec (6 threads) | fastp |

| Sequence Alignment | 16-32 threads | High (32GB+) | 22,087 pairs/sec (32 threads) | BWA-MEM |

| SAM to BAM Conversion | 10-16 threads | Moderate (16-32GB) | 263,068 pairs/sec (16 threads) | samtools view |

| Coordinate Sorting | 10-14 threads | High (32GB+, with temp storage) | 133,980 pairs/sec (14 threads) | samtools sort |

| Duplicate Marking | Varies by core count | High (32GB+) | 1.45-1.67x speedup | MarkDuplicatesSpark |

| Base Quality Recalibration | Parallel by genomic region | Moderate (16-32GB) | 4.76-4.85x overall workflow speedup | BaseRecalibrator |

Performance data derived from benchmarks indicates that efficient pipeline design should allocate resources strategically, with BWA-MEM typically being the most computationally intensive step [28]. Recent advancements in Apache Arrow in-memory data representation have demonstrated significant performance improvements, achieving speedups of 4.85x for whole-genome sequencing and 4.76x for whole-exome sequencing in overall variant calling workflows [29].

Integrated Processing Pipeline

For optimal performance, the pre-processing steps can be integrated into a single pipeline that minimizes intermediate disk I/O:
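The original pipeline listing is missing here. As a sketch of the streaming idea, the code below connects bwa mem to samtools sort through a pipe so that no intermediate SAM file is written. The thread count, file names, and function names are assumptions, and later steps such as duplicate marking would follow on the sorted BAM.

```python
import subprocess
from typing import List, Tuple

def alignment_stages(reference: str, fastq1: str, fastq2: str, read_group: str,
                     out_bam: str, threads: int = 16) -> Tuple[List[str], List[str]]:
    """Return the (aligner, sorter) argument lists for a streamed alignment."""
    aligner = ["bwa", "mem", "-M", "-t", str(threads), "-R", read_group,
               reference, fastq1, fastq2]
    # "-" tells samtools sort to read the SAM stream from standard input.
    sorter = ["samtools", "sort", "-@", str(threads), "-o", out_bam, "-"]
    return aligner, sorter

def run_streamed(aligner: List[str], sorter: List[str]) -> None:
    """Run aligner | sorter without writing the intermediate SAM to disk."""
    align_proc = subprocess.Popen(aligner, stdout=subprocess.PIPE)
    sort_proc = subprocess.Popen(sorter, stdin=align_proc.stdout)
    # Close our copy so the aligner sees a broken pipe if the sorter exits early.
    align_proc.stdout.close()
    sort_rc = sort_proc.wait()
    align_rc = align_proc.wait()
    if align_rc != 0 or sort_rc != 0:
        raise RuntimeError(f"pipeline failed (bwa={align_rc}, samtools={sort_rc})")
```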

This approach streams data directly between processes, reducing disk I/O bottlenecks and improving overall throughput [28].

Quality Control and Validation Metrics

Essential Quality Metrics for Somatic Analysis

Rigorous quality control of pre-processed BAM files is essential before proceeding to variant calling. The following metrics must be evaluated to ensure data integrity:

Table 3: Essential Quality Control Metrics for Pre-processed BAM Files

| Quality Metric | Target Value | Calculation Method | Impact on Somatic Calling |

|---|---|---|---|

| Total Sequencing Coverage | ≥30× WGS, ≥100× WES | samtools depth | Affects sensitivity for heterozygous and subclonal variants |

| Duplicate Rate | ≤10-15% for exomes | MarkDuplicates metrics | High rates indicate library issues and bias allele frequencies |

| Mapping Quality | >30 for most reads | samtools stats | Low quality indicates ambiguous alignments causing false positives |

| Insert Size Metrics | Consistent with library prep | Picard CollectInsertSizeMetrics | Deviations indicate potential structural variants or artifacts |

| Base Quality Distribution | No systematic biases | BaseRecalibrator output | Affects variant quality scoring and filtering |

| Chromosome Coverage Uniformity | <3-fold variation | mosdepth or Qualimap | Uneven coverage creates blind spots in variant detection |
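The numeric targets in Table 3 can be turned into an automated gate. The sketch below checks a dictionary of summary metrics against those targets. The metric key names are hypothetical, "most reads" is interpreted here as more than half, and the upper end of the 10-15% duplicate range is used as the limit.

```python
from typing import Dict, List

def qc_failures(metrics: Dict[str, float], assay: str = "WGS") -> List[str]:
    """Return the names of the Table 3 checks that a BAM's metrics fail."""
    min_coverage = 30.0 if assay == "WGS" else 100.0  # >=30x WGS, >=100x WES
    failures = []
    if metrics["mean_coverage"] < min_coverage:
        failures.append("coverage")
    if metrics["duplicate_rate"] > 0.15:
        failures.append("duplicate_rate")
    if metrics["fraction_mapq_gt_30"] <= 0.5:
        failures.append("mapping_quality")
    lowest = metrics["min_chrom_coverage"]
    # Fail when the ratio of best to worst covered chromosome reaches 3-fold.
    if lowest <= 0 or metrics["max_chrom_coverage"] / lowest >= 3.0:
        failures.append("coverage_uniformity")
    return failures
```

An empty list means the sample meets every numeric target; insert size and base quality distribution still call for visual review because the table gives no numeric cutoff for them.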

Contamination and Sample Quality Verification

In cancer genomics, additional quality checks are necessary to verify sample integrity and identify potential contamination:

- Cross-Individual Contamination: VerifyBamID assesses the level of external sample contamination, which is critical for accurate somatic variant calling [7].

- Sample Identity Confirmation: For matched tumor-normal pairs, tools like the KING algorithm confirm expected biological relationships and sample identities [7].

- Tumor Purity Estimation: Methods for estimating tumor purity from sequencing data should be applied, as purity directly impacts variant allele frequency distributions and sensitivity for subclonal mutation detection.

The Scientist's Toolkit: Essential Research Reagents and Computational Tools

Table 4: Essential Research Reagent Solutions for Somatic Variant Calling

| Tool/Resource | Category | Primary Function | Application in Somatic Calling |

|---|---|---|---|

| BWA-MEM | Read Alignment | Maps sequencing reads to reference genome | Provides initial alignment for variant detection |

| Picard Tools | BAM Processing | Various BAM manipulation and QC utilities | Marking duplicates, read group management |

| GATK | Variant Discovery | Comprehensive variant calling toolkit | Somatic short variant discovery with Mutect2 |

| Samtools | BAM/CRAM Manipulation | Utilities for working with alignment files | File conversion, indexing, and basic QC |

| fastp | Quality Control | Adapter trimming and quality filtering | Prepares raw reads for alignment |

| VerifyBamID | Sample QC | Contamination detection | Ensures sample purity for accurate variant calls |

| Genome in a Bottle | Benchmarking | Reference materials with validated variants | Pipeline validation and performance assessment |


Production of high-quality, analysis-ready BAM files through rigorous pre-processing is a foundational requirement for accurate somatic variant detection in cancer genomics research. By adhering to the specifications and best practices outlined in this document, researchers can ensure their data meets the quality standards necessary for reliable mutation detection. The integration of proper read group information, comprehensive duplicate marking, base quality score recalibration, and rigorous quality control establishes a robust foundation for downstream somatic analysis using GATK tools. Implementation of the performance optimization strategies further enhances the efficiency and scalability of these workflows, enabling their application to large-scale cancer genomics studies. As sequencing technologies and analytical methods continue to evolve, these core principles of data pre-processing will remain essential for generating biologically meaningful and clinically relevant insights from cancer genomic sequencing data.

A Step-by-Step GATK Somatic Variant Calling Pipeline: From Raw Data to Annotated Variants

In cancer genomics research, the accurate detection of somatic variants is fundamentally dependent on the quality of the initial data processing. The Genome Analysis Toolkit (GATK) Best Practices outline a rigorous pre-processing workflow designed to correct for technical artifacts and systematic biases inherent in next-generation sequencing data [9]. This pipeline transforms raw sequencing reads into analysis-ready BAM files, significantly enhancing the reliability of subsequent variant calling. For somatic variant discovery in cancer, this process is particularly crucial as it minimizes false positives that could otherwise be misinterpreted as tumor-specific mutations [5]. The pre-processing workflow consists of three critical computational steps: sequence alignment to a reference genome, duplicate marking to identify PCR artifacts, and base quality score recalibration (BQSR) to correct systematic errors in base quality estimates [9] [30].

This pre-processing regimen matters especially in drug development and clinical research, where even minor technical biases can distort variant calling accuracy and lead to incorrect conclusions about mutation signatures in tumor samples [31]. By implementing this standardized protocol, researchers ensure that identified variants represent biological signals rather than sequencing or processing artifacts, thereby increasing the reproducibility and reliability of genomic findings across cancer research studies [9] [5].

The data pre-processing pipeline represents a methodical approach to preparing raw sequencing data for variant discovery. The workflow begins with unmapped BAM (uBAM) or FASTQ files and progresses through several quality control and correction stages before producing analysis-ready BAM files suitable for somatic variant calling with tools such as Mutect2 [9] [5]. Each step in this pipeline addresses specific technical challenges that, if left uncorrected, would compromise the accuracy of identified variants.

The conceptual foundation of this workflow rests on addressing three primary sources of error: misalignment of reads to the reference genome, over-representation of identical DNA fragments from PCR amplification, and systematic inaccuracies in the quality scores assigned by sequencing instruments [9] [30]. By sequentially correcting these issues, the pipeline ensures that the data presented to variant callers provides the most accurate representation of the actual tumor and normal sample genomes. This is particularly important in cancer genomics, where the signal of somatic variants can be subtle, especially in subclonal populations or samples with low tumor purity [5].

Step-by-Step Methodologies

Alignment to Reference Genome

The initial step in the pre-processing workflow involves aligning the raw sequencing reads to a reference genome, which provides a standardized coordinate system for all subsequent analyses [9]. This process is performed per read group, preserving crucial metadata about the sequencing run, and can be massively parallelized to enhance computational efficiency [9]. Alignment typically uses an optimized algorithm such as BWA (Burrows-Wheeler Aligner), whose SAM output is converted to BAM and sorted by genomic coordinate in the subsequent processing steps [9].

For cancer genomics applications, proper alignment is particularly critical for accurately identifying structural variants and indels that are common in tumor genomes. The alignment must correctly handle reads spanning splice junctions in RNA-seq data or complex genomic rearrangements in DNA-seq data [32]. The resulting aligned BAM file serves as the foundation for all downstream analyses, making the quality of this initial alignment step paramount to the entire variant discovery process. After alignment, the MergeBamAlignment tool is often used to merge the aligned data with the original uBAM file, preserving original read information while incorporating the alignment data [9].

Duplicate Marking

Duplicate marking addresses artifacts introduced during library preparation, particularly from PCR amplification, which can lead to over-representation of certain DNA fragments [9]. These duplicates are considered non-independent observations as they originate from the same original DNA molecule, and their inclusion would skew variant evidence [9]. The MarkDuplicatesSpark tool identifies read pairs mapped to the same genomic location and strand, flagging all but one representative read pair within each duplicate set to be ignored during variant discovery [9].

This step is performed per-sample and includes sorting reads into coordinate order, which is essential for subsequent processing steps [9]. The Spark-based implementation provides significant performance improvements over traditional approaches by leveraging parallel processing capabilities [9]. For cancer samples, where input DNA may be limited and require amplification, duplicate marking is particularly important to prevent artificial inflation of variant supporting reads that could lead to false positive calls in Mutect2 [5].

Base Quality Score Recalibration (BQSR)

Base Quality Score Recalibration (BQSR) employs machine learning to correct systematic errors in the quality scores assigned by sequencing instruments [30]. These quality scores represent per-base estimates of error probability emitted by the sequencer, and variant calling algorithms rely heavily on them to weigh evidence for or against potential variants [30]. BQSR detects patterns of systematic technical error resulting from sequencing biochemistry, manufacturing defects, or instrumentation issues, then adjusts quality scores accordingly [30].

The BQSR process involves building an empirical model of errors based on covariates such as read group, reported quality score, machine cycle, and dinucleotide context [30] [33]. Known variant databases (e.g., dbSNP) are used to mask real polymorphisms, ensuring they are not counted as errors during model building [30] [33]. The procedure follows a two-step approach: first, BaseRecalibrator analyzes the input BAM file to generate a recalibration model; second, ApplyBQSR applies the model to adjust base quality scores in the data [30] [33]. An optional third step using AnalyzeCovariates generates before/after plots for quality assessment [30].
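
To make the idea of an empirical quality concrete, the sketch below converts error probabilities to the Phred scale (Q = -10 * log10 p) and computes the quality implied by the mismatches observed in a single covariate bin. The helper names `phred` and `empirical_q` and the +1/+2 smoothing are illustrative assumptions; this is not GATK's actual estimator.

```shell
# phred P: Phred-scaled quality for error probability P, Q = -10 * log10(P).
phred() {
    awk -v p="$1" 'BEGIN { printf "%.1f\n", -10 * log(p) / log(10) }'
}

# empirical_q MISMATCHES BASES: quality implied by the mismatches observed at
# sites that are not masked by the known-sites resource. The +1/+2 smoothing
# is an illustrative assumption that keeps the estimate finite at 0 mismatches.
empirical_q() {
    awk -v e="$1" -v n="$2" \
        'BEGIN { printf "%.1f\n", -10 * log((e + 1) / (n + 2)) / log(10) }'
}

# A bin reported as Q30 should show about 1 error per 1000 bases.
phred 0.001
# The same bin with 500 mismatches in 100000 bases is worse than reported.
empirical_q 500 100000
```

In this toy case the bin reported as Q30 behaves like roughly Q23, so recalibration would lower the scores of bases that fall in it.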

Key Research Reagents and Computational Tools

Table 1: Essential Research Reagents and Computational Tools for GATK Pre-processing

| Tool/Resource | Function | Application Notes |
|---|---|---|
| BWA | Maps sequencing reads to reference genome | Primary alignment tool; optimized for human genomes [9] |
| MarkDuplicatesSpark | Identifies PCR duplicates | Parallelized implementation; significantly improves performance [9] |
| BaseRecalibrator | Builds recalibration model | Analyzes covariates: read group, quality score, cycle, dinucleotide context [30] [33] |
| ApplyBQSR | Applies base quality adjustments | Uses model from BaseRecalibrator to modify BAM file [30] |
| Known Sites VCF | Database of known variants | Critical for masking real variants during BQSR; dbSNP commonly used [30] [33] |
| Reference Genome | Standardized genomic coordinates | Provides framework for alignment; hg38 recommended for human studies [9] |

Quantitative Considerations and Performance Metrics

Table 2: Quantitative Parameters and Thresholds for Pre-processing Steps

| Processing Step | Key Parameters | Performance Impact | Quality Metrics |
|---|---|---|---|
| Alignment | Read group information, reference genome version | Mapping quality, coverage uniformity | Percentage of mapped reads, target coverage |
| Duplicate Marking | Expected duplication rate, library complexity | Variant calling accuracy, especially for indels | Duplicate rate (typically <20% for exomes) |
| BQSR | Known sites quality, context size (default: 2 for mismatches) | SNP calling sensitivity and precision | Empirical vs. reported quality scores |

The effectiveness of pre-processing steps varies depending on the specific mapper and caller combination, with research indicating that BQSR may reduce SNP calling sensitivity for some callers while improving precision, particularly in regions with low sequence divergence [31]. The benefits are most pronounced in standard genomic regions, while highly divergent regions such as the HLA locus may show less improvement [31]. For somatic variant calling in cancer, these considerations are particularly relevant given the abundance of genomic alterations in tumor samples.

Implementation Protocol

Detailed Command-Line Execution

The following protocols provide specific command-line implementations for each pre-processing step, formatted for execution in a Unix-like environment. These commands assume prerequisite installation of GATK and necessary dependencies, along with properly formatted reference genomes and known sites resources.

Alignment with BWA and Processing:
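
A minimal sketch of this step, assuming paired-end FASTQ input, a reference already indexed with `bwa index`, and a `bwa` build that supports the `-o` output option (otherwise redirect standard output instead). All file names, the read group values, and the `run` and `align_read_group` helpers are hypothetical. By default the commands are only printed so they can be inspected; set `DRY_RUN=0` to execute them.

```shell
# run: print the command by default; set DRY_RUN=0 to execute it.
# Printed commands are for inspection and are not re-quoted for pasting.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Hypothetical defaults; replace with your own paths.
REF="${REF:-Homo_sapiens_assembly38.fasta}"
THREADS="${THREADS:-8}"

# align_read_group ID SAMPLE LIBRARY R1.fastq R2.fastq OUT_PREFIX
align_read_group() {
    # bwa expands the literal \t sequences in the read group string into tabs.
    rg="@RG\tID:$1\tSM:$2\tLB:$3\tPL:ILLUMINA"
    run bwa mem -t "$THREADS" -R "$rg" -o "$6.sam" "$REF" "$4" "$5"
    # Convert to BAM; reads stay grouped by name for duplicate marking.
    run samtools view -b -o "$6.bam" "$6.sam"
}

align_read_group tumor_rg1 TUMOR lib1 tumor_R1.fastq.gz tumor_R2.fastq.gz tumor_rg1
```

Writing an intermediate SAM file keeps the sketch simple; production pipelines usually pipe `bwa mem` straight into `samtools` to avoid it.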

Duplicate Marking with MarkDuplicatesSpark:
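
A minimal sketch of per-sample duplicate marking, assuming one aligned BAM per read group. File names and the `run` and `mark_duplicates` helpers are hypothetical; Spark resource settings are left at their defaults. Commands are printed unless `DRY_RUN=0` is set.

```shell
# run: print the command by default; set DRY_RUN=0 to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# mark_duplicates OUT.bam METRICS.txt IN1.bam [IN2.bam ...]
# Takes every read-group BAM of one sample, because the step is per-sample.
mark_duplicates() {
    out="$1"
    metrics="$2"
    shift 2
    inputs=""
    for bam in "$@"; do
        inputs="$inputs -I $bam"
    done
    # $inputs is unquoted on purpose so it splits into separate arguments
    # (this sketch assumes paths without spaces).
    run gatk MarkDuplicatesSpark $inputs -O "$out" -M "$metrics"
}

mark_duplicates tumor.markdup.bam tumor.markdup.metrics.txt tumor_rg1.bam tumor_rg2.bam
```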

Base Quality Score Recalibration:
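
A minimal sketch of the two-pass recalibration plus the optional before/after plots, assuming a duplicate-marked BAM and a single known-sites VCF (several `--known-sites` arguments can be supplied in practice). File names and the `run`, `recalibrate`, and `plot_recalibration` helpers are hypothetical. Commands are printed unless `DRY_RUN=0` is set.

```shell
# run: print the command by default; set DRY_RUN=0 to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Hypothetical defaults; replace with your own resources.
REF="${REF:-Homo_sapiens_assembly38.fasta}"
KNOWN_SITES="${KNOWN_SITES:-dbsnp.vcf.gz}"

# recalibrate IN.bam OUT.bam TABLE
recalibrate() {
    # Pass 1: model systematic errors, masking sites in the known-sites VCF.
    run gatk BaseRecalibrator -R "$REF" -I "$1" --known-sites "$KNOWN_SITES" -O "$3"
    # Pass 2: write a new BAM with adjusted base qualities.
    run gatk ApplyBQSR -R "$REF" -I "$1" --bqsr-recal-file "$3" -O "$2"
}

# plot_recalibration RECAL.bam BEFORE_TABLE AFTER_TABLE PLOTS.pdf
# Optional QC: rebuild the model on the recalibrated BAM and compare tables.
plot_recalibration() {
    run gatk BaseRecalibrator -R "$REF" -I "$1" --known-sites "$KNOWN_SITES" -O "$3"
    run gatk AnalyzeCovariates -before "$2" -after "$3" -plots "$4"
}

recalibrate tumor.markdup.bam tumor.recal.bam tumor.recal.table
plot_recalibration tumor.recal.bam tumor.recal.table tumor.post.table tumor.bqsr.pdf
```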

Quality Assessment and Troubleshooting

After completing the pre-processing pipeline, quality assessment should include evaluation of alignment metrics (percentage mapped reads, insert size distribution), duplicate rates (typically <20% for exome data), and BQSR effectiveness through comparison of pre- and post-recalibration quality scores [9] [30]. For cancer genomics applications, special attention should be paid to regions with known technical challenges, such as highly polymorphic regions or areas with extreme GC content, which may require additional optimization [31].
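
The duplicate-rate check can be automated with a small helper. The sketch below assumes a tab-separated, Picard-style duplication metrics file in which the `PERCENT_DUPLICATION` column holds a fraction between 0 and 1; the function names and the default threshold of 0.20 (the exome guideline quoted above) are illustrative choices.

```shell
# dup_fraction METRICS: print PERCENT_DUPLICATION for the first library row.
dup_fraction() {
    awk -F '\t' '
        col && NF >= col { print $col; exit }
        { for (i = 1; i <= NF; i++) if ($i == "PERCENT_DUPLICATION") col = i }
    ' "$1"
}

# dup_rate_ok METRICS [MAX]: succeed when the duplicate fraction is below MAX.
# Returns 2 when the column cannot be found in the file.
dup_rate_ok() {
    frac=$(dup_fraction "$1")
    [ -n "$frac" ] || return 2
    awk -v f="$frac" -v max="${2:-0.20}" 'BEGIN { exit !(f < max) }'
}

# Example call (hypothetical file name):
#   dup_rate_ok tumor.markdup.metrics.txt || echo "duplicate rate too high" >&2
```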

Common issues include excessive duplicate rates indicating library preparation problems, poor alignment metrics suggesting reference genome mismatches, or BQSR failures due to insufficient known sites data [30] [31]. For non-human organisms or cancer samples with extensive genomic alterations, bootstrapping known variants may be necessary by performing an initial round of variant calling on unrecalibrated data, then using high-confidence variants as the known sites for subsequent BQSR [30].

The GATK pre-processing pipeline provides an essential foundation for accurate somatic variant discovery in cancer genomics research. Through systematic alignment, duplicate marking, and base quality score recalibration, this workflow addresses major sources of technical artifact that would otherwise compromise variant identification [9] [30]. While the benefits of each step may vary depending on the specific mapper-caller combination and genomic context [31], implementing the complete pipeline represents a critical investment in data quality that pays dividends throughout downstream analyses.

For researchers in cancer genomics and drug development, adherence to these standardized protocols ensures the reliability and reproducibility of variant calls, ultimately supporting more confident biological conclusions and therapeutic decisions. The comprehensive methodology outlined in this document provides both conceptual understanding and practical implementation guidance, enabling research teams to generate analysis-ready data suitable for the most demanding somatic variant discovery applications.

In cancer genomics, the reliable detection of somatic mutations is a critical step for understanding tumorigenesis, identifying therapeutic targets, and guiding personalized treatment strategies. Somatic variants, which arise in tumor cells and are absent from the germline, present unique computational challenges due to their often low allele frequencies, tumor heterogeneity, and the presence of sequencing artifacts. The Broad Institute's Mutect2, part of the Genome Analysis Toolkit (GATK), is a specialized tool designed to address these challenges through a sophisticated Bayesian statistical model coupled with local assembly of haplotypes [34] [6].

Unlike germline variant callers that assume fixed ploidy, Mutect2 is specifically engineered to identify somatic single nucleotide variants (SNVs) and insertions/deletions (indels) without ploidy constraints, accommodating the complex allele fractions often observed in tumor samples due to factors like fractional purity, multiple subclones, and copy number variations [6]. The tool operates within a broader best practices workflow for somatic short variant discovery, which includes subsequent steps for contamination estimation, artifact filtering, and functional annotation to produce a high-confidence callset [5].
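
The calling, contamination estimation, and filtering stages of that workflow can be sketched as a short command sequence for a matched tumor-normal pair. Resource file names and the `run` and `call_somatic` helpers are hypothetical, optional inputs such as a panel of normals are omitted, and functional annotation is left out. Commands are printed unless `DRY_RUN=0` is set.

```shell
# run: print the command by default; set DRY_RUN=0 to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Hypothetical defaults; replace with your own resources.
REF="${REF:-Homo_sapiens_assembly38.fasta}"
GNOMAD="${GNOMAD:-af-only-gnomad.vcf.gz}"      # germline allele frequencies
COMMON="${COMMON:-common_biallelic.vcf.gz}"    # common sites for pileups

# call_somatic TUMOR.bam NORMAL.bam NORMAL_SAMPLE_NAME OUT_PREFIX
call_somatic() {
    run gatk Mutect2 -R "$REF" -I "$1" -I "$2" -normal "$3" \
        --germline-resource "$GNOMAD" -O "$4.unfiltered.vcf.gz"
    run gatk GetPileupSummaries -I "$1" -V "$COMMON" -L "$COMMON" \
        -O "$4.tumor.pileups.table"
    run gatk GetPileupSummaries -I "$2" -V "$COMMON" -L "$COMMON" \
        -O "$4.normal.pileups.table"
    run gatk CalculateContamination -I "$4.tumor.pileups.table" \
        -matched "$4.normal.pileups.table" -O "$4.contamination.table"
    run gatk FilterMutectCalls -R "$REF" -V "$4.unfiltered.vcf.gz" \
        --contamination-table "$4.contamination.table" -O "$4.filtered.vcf.gz"
}

call_somatic tumor.recal.bam normal.recal.bam NORMAL patient1
```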

Core Algorithmic Framework: Assembly and Modeling

Local Assembly of Haplotypes

Mutect2 shares its foundational assembly-based approach with the HaplotypeCaller [5] [6]. When it encounters a genomic region showing evidence of variation (an "active region"), it discards existing read mappings and performs local de novo assembly of the reads into a graph of possible haplotypes [5]. This process sensitively reconstructs candidate variant haplotypes, including those containing indels, which are often missed by simpler, position-based callers. The reads are then realigned to these candidate haplotypes using the Pair-Hidden Markov Model (Pair-HMM) algorithm to obtain a matrix of likelihoods, which forms the basis for subsequent statistical evaluation [5] [6].

Bayesian Somatic Genotyping Model