A Researcher's Guide to Troubleshooting Common GATK Variant Calling Errors


Abstract

This guide provides a comprehensive framework for researchers, scientists, and drug development professionals to diagnose, troubleshoot, and resolve common errors encountered during GATK variant calling workflows. Covering the foundational principles of the GATK Best Practices, it details methodological approaches for germline short variant discovery, offers targeted solutions for frequent issues like contig mismatches and memory failures, and explores advanced strategies for validation and performance optimization. By integrating practical troubleshooting with validation techniques, this resource aims to enhance the accuracy, efficiency, and reliability of genomic analyses in both research and clinical settings.

Understanding GATK Best Practices and Common Error Landscapes

GATK Best Practices Workflow for Variant Discovery

This section gives an overview of the GATK Best Practices workflow for germline short variant discovery (SNPs and indels) and addresses common troubleshooting issues.

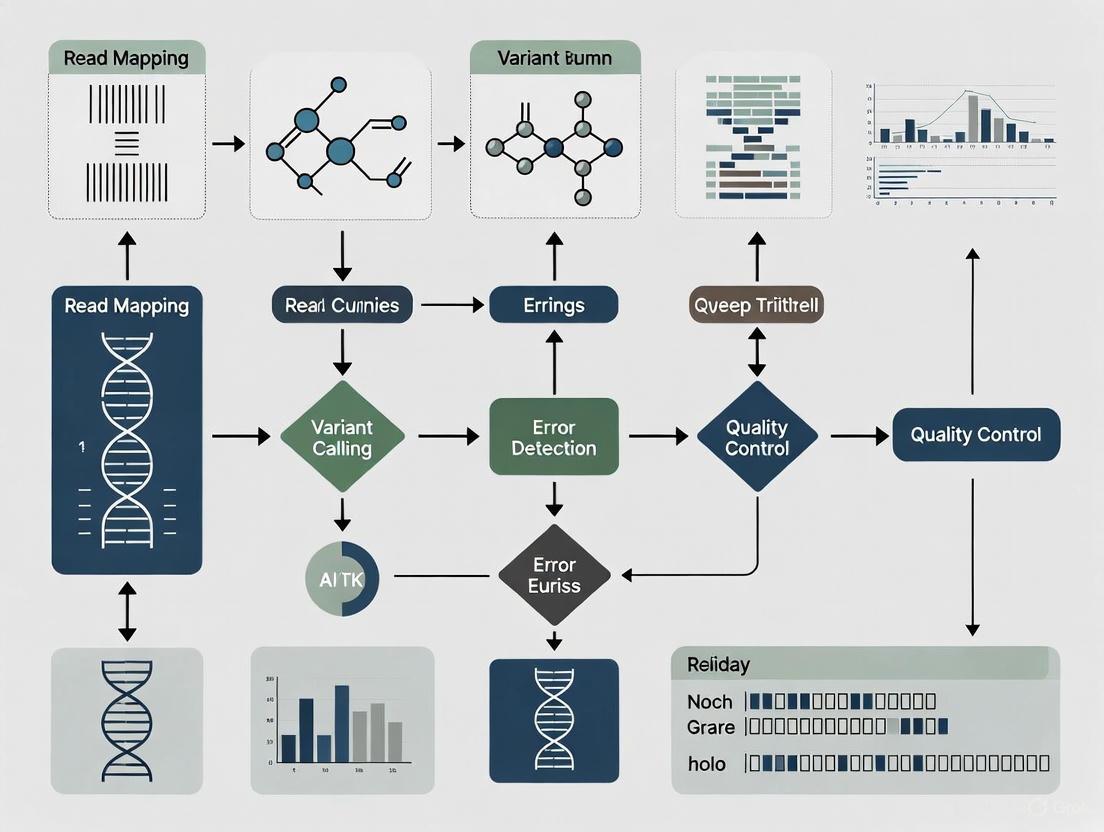

The GATK Best Practices workflow is a systematic, multi-step process for identifying genetic variants from high-throughput sequencing data. The entire process, from raw sequencing reads to high-confidence variants, involves three main phases: Data Preprocessing, Joint Calling, and Variant Filtering [1] [2] [3]. The following diagram illustrates the complete workflow and the logical relationship between its key stages.

Phase 1: Data Preprocessing for Variant Discovery

This initial phase prepares raw sequencing data for accurate variant calling by cleaning up alignments and correcting for systematic technical errors [1] [2]. Its purpose is to generate analysis-ready BAM files.

- Alignment: Map raw sequencing reads (FASTQ) to a reference genome using aligners like BWA-MEM [4] [3].

- Mark Duplicates: Identify and tag PCR duplicates that can bias variant allele frequencies using tools like `MarkDuplicatesSpark` [2]. This step is critical because most variant detection tools require duplicates to be tagged to reduce bias [2].

- Base Quality Score Recalibration (BQSR): Correct systematic errors in the base quality scores assigned by the sequencer using `BaseRecalibrator` and `ApplyBQSR` [2] [5]. This process builds a model using covariates encoded in the read groups and then applies adjustments to generate recalibrated base qualities [2].

Phase 2: Joint Calling

Joint calling analyzes multiple samples together to improve the sensitivity and accuracy of genotype inference for each individual [2]. This approach accounts for the difference between a missing variant call due to absent data and good data showing no variation, and it makes it easier to add samples progressively [2].

- HaplotypeCaller: Call potential variants per sample and save them in GVCF format, which includes information about both variant and non-variant sites [4] [2].

- Consolidate GVCFs: Combine GVCF data from multiple samples into a single dataset using `GenomicsDBImport` or `CombineGVCFs` [4] [2].

- GenotypeGVCFs: Perform joint genotyping on the consolidated GVCFs to identify candidate variants across the cohort [4] [2].

Phase 3: Variant Filtering

The final phase separates high-confidence real variants from technical artifacts using a machine learning-based approach [2].

- Variant Quality Score Recalibration (VQSR): Apply a Gaussian mixture model to classify variants based on how their annotation values cluster, using training sets of high-confidence variants [2]. This builds a recalibration model that is then applied to filter out low-quality calls.

- Calculate Genotype Posteriors (Optional): Use pedigree information and population allele frequencies to further refine genotype calls [2].

The following table details key resources required for executing the GATK Best Practices workflow.

| Resource Name | Function & Purpose in the Workflow |

|---|---|

| Reference Genome (e.g., GRCh38) | Serves as the map against which all sequencing reads are aligned; essential for alignment and variant calling [3]. |

| GATK Resource Bundle | Provides known variant sets (e.g., HapMap, 1000G, Mills indels) used as training resources for BQSR and VQSR [3]. |

| BWA Index Files | Specialized data structures created from the reference genome that allow the BWA aligner to quickly map reads [3]. |

| Conda Environment (gatk4, bwa, samtools) | An isolated software environment that manages tool versions and dependencies to ensure reproducibility and prevent conflicts [3] [6]. |

Frequently Asked Questions & Troubleshooting Guides

This section addresses specific, common issues encountered during GATK variant discovery projects.

FAQ 1: How should I handle a single DNA sample sequenced across multiple lanes?

Question: After following GATK's guide for multi-lane sequencing, my merged BAM files show highly discordant variant results and incorrect variant allele frequencies (VAFs) between lanes. Why does this happen and how can I resolve it? [4]

Answer: This issue can arise from several sources, including improper read group definitions, sample contamination, or software bugs in older GATK versions. The following troubleshooting flowchart outlines a systematic diagnostic approach.

Detailed Methodology & Commands:

Verify Read Groups: Ensure each lane's BAM file has a unique read group (`@RG`) with correct SM (sample), LB (library), PL (platform), and PU (platform unit) tags. Incorrect tags can cause the caller to treat lanes as different samples [4].

- Example BWA-MEM2 command with read group info: `bwa-mem2 mem -t 12 -Y -R '@RG\tID:lane1\tSM:sample1\tLB:lib1\tPL:ILLUMINA' ref.fasta lane1_1.fq lane1_2.fq` [4]. This example sets no PU tag; add one per lane if downstream tools need it.

Analyze Lanes Individually: Process the BAM files from each lane separately through the entire variant calling pipeline. If the final results remain discordant, the issue lies with the data itself or the per-lane analysis, not the merging step [4].

Visual Inspection in IGV: Load the individual lane BAM files into the Integrative Genomics Viewer (IGV) to visually confirm the presence or absence of aligned reads at discordant variant positions. This checks if the discordance is in the alignment or the calling step [4].

Check for Contamination: Significant discordance between lanes of the same sample can indicate contamination in one lane or a sample mix-up [4].

Update GATK Version: The user in our case study was using GATK v4.2.6.1. Some versions contain bugs that affect calling. Updating to the latest version (e.g., v4.6.1.0 or newer) can resolve these issues [4].

Advanced HaplotypeCaller Parameters: If specific variants are missing in one lane despite read support, assembly-level parameters might help recover them. Note that some advanced options like `--pileup-detection` are not available in older versions [4].

- Example command: `gatk HaplotypeCaller ... --recover-all-dangling-branches true` [4]

FAQ 2: How do I resolve "Dependency Not Found" and version compatibility errors?

Question: When setting up or running the pipeline, I encounter errors that required software like GATK, BWA, or Samtools cannot be found or have incompatible versions. What is the best solution? [6]

Answer: This is a common environment setup issue. The solution involves using a containerized or managed environment and verifying paths and versions.

Troubleshooting Steps:

Use Conda for Environment Management: Create a dedicated Conda environment with all necessary tools to isolate dependencies and prevent conflicts [3] [6].

- Example setup command: `conda create -n gatk_analysis -c bioconda gatk4 bwa samtools picard` [3]

Verify Software Paths: If tools are not in your standard PATH, specify the full path to the executable in your workflow configuration or script [6].

Check Minimum Required Versions: Ensure your software meets the minimum version requirements. The table below summarizes these requirements [6].

| Software | Minimum Required Version |

|---|---|

| GATK | 4.0.0.0 |

| BWA | 0.7.17 |

| Samtools | 1.10 |

| Picard | 2.27.0 |
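The minimum versions in the table above can be checked with a small shell helper. The sketch below is illustrative only: the function name `version_ge` is hypothetical, and it assumes purely numeric dotted versions (a suffix such as a build tag would need to be stripped first). It compares component by component, which avoids the trap of plain string comparison ranking `1.9` above `1.10`.

```shell
# Return success (0) when dotted version $1 is >= dotted version $2.
# Assumes numeric components only, e.g. 4.6.1.0 or 0.7.17.
version_ge() {
    awk -v have="$1" -v need="$2" 'BEGIN {
        n = split(have, h, ".")
        m = split(need, r, ".")
        max = (n > m) ? n : m
        for (i = 1; i <= max; i++) {
            a = (i <= n) ? h[i] + 0 : 0   # missing components count as 0
            b = (i <= m) ? r[i] + 0 : 0
            if (a > b) exit 0
            if (a < b) exit 1
        }
        exit 0                            # versions are equal
    }'
}

# Example: compare a (made-up) installed Samtools version with the table.
if version_ge "1.19.2" "1.10"; then
    echo "samtools version OK"
else
    echo "samtools is older than 1.10" >&2
fi
```

In practice the first argument would come from parsing the tool's own version output, whose format differs from tool to tool.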

FAQ 3: What should I do when my pipeline fails with an "Out of Memory" error?

Question: My workflow, particularly during HaplotypeCaller or CombineGVCFs steps, crashes with Java heap space or memory allocation errors. How can I optimize resource usage? [6]

Answer: Memory errors indicate that the Java Virtual Machine (JVM) has insufficient RAM allocated for the task, especially when processing large genomic intervals or many samples.

Solutions:

Adjust JVM Memory Arguments: Explicitly set the maximum heap size for GATK commands using the `-Xmx` parameter. Allocate enough memory based on your node's capacity but leave room for the operating system.

- Example: Add `--java-options "-Xmx8G"` to your `gatk` command to set an 8 GB maximum heap.

Reduce Thread Count: High parallelism (`--native-pair-hmm-threads`) increases memory overhead. Reducing the number of threads can significantly lower total memory requirements [6].

Split the Analysis: For large cohorts, break the analysis into smaller batches, such as by genomic region, to reduce the memory footprint of each job [6].

Use the `-resume` Flag: If using a workflow manager like Nextflow, use the `-resume` flag (Nextflow's own options take a single dash) to continue from the last successful step after fixing the memory issue, rather than restarting from the beginning [6].
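The advice to size the heap below the node's capacity can be made explicit with a small helper. This is a minimal sketch: `gatk_heap_opt` is a hypothetical name, and the default 2 GB reserve for the operating system and off-heap memory is an illustrative assumption rather than a GATK recommendation.

```shell
# Print a JVM heap option that leaves headroom below the memory granted
# to the job. Arguments: total memory in GB, optional reserve in GB.
# The 2 GB default reserve is an illustrative assumption, not a GATK rule.
gatk_heap_opt() (
    total_gb=$1
    reserve_gb=${2:-2}
    heap_gb=$((total_gb - reserve_gb))
    if [ "$heap_gb" -lt 1 ]; then
        echo "error: ${total_gb} GB leaves no room for a heap" >&2
        exit 1
    fi
    echo "-Xmx${heap_gb}G"
)

# Example for a job granted 16 GB (file names are placeholders):
# gatk --java-options "$(gatk_heap_opt 16)" HaplotypeCaller \
#     -R ref.fasta -I sample.bam -O sample.g.vcf.gz -ERC GVCF \
#     --native-pair-hmm-threads 2
```

Deriving the heap size from the scheduler's memory request keeps the two values from drifting apart when one of them is later changed.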

The GATK Variant Calling Workflow

The following diagram illustrates the three primary phases of the GATK variant calling workflow, from raw sequencing data to a filtered variant callset.

This table details essential files and tools required for a successful GATK analysis.

| Resource Name | Function & Purpose |

|---|---|

| Reference Genome [7] [8] | FASTA file for read alignment and variant calling. Must be indexed. |

| Known Sites Resources [9] | VCFs of known polymorphisms (e.g., dbSNP, Mills indels) for BQSR and VQSR. |

| BWA-MEM [7] [8] | Aligner for mapping sequencing reads to the reference genome. |

| Picard Tools [7] | Suite for SAM/BAM processing (sorting, duplicate marking, validation). |

| ValidateSamFile [10] | Picard tool for diagnosing errors in SAM/BAM file format and content. |

Frequently Asked Questions & Troubleshooting Guides

Data Pre-processing Phase

I get the error: "SAM/BAM file appears to be using the wrong encoding for quality scores." What should I do?

This error occurs when quality scores in your SAM/BAM file do not use the standard Sanger encoding (Phred+33) that the GATK expects, often because the data uses an older Illumina encoding (Phred+64) [11].

Solution:

- Use the `--fix_misencoded_quality_scores` (GATK3) or `-fixMisencodedQuals` flag in your command to have the GATK automatically subtract 31 from each quality score during processing [11].

- Important: This is a temporary fix for the analysis flow. For a permanent correction, consider re-processing the raw data with the correct quality encoding.
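Before applying such a fix, it can help to confirm which encoding the data actually uses. Phred+33 quality characters start at ASCII 33 and Phred+64 characters at ASCII 64, which is where the offset of 31 comes from. The sketch below (the function name and output labels are hypothetical) classifies a stream of quality strings by the lowest character code seen. A result of `likely-phred64` is only a hint, because uniformly high-quality Phred+33 reads can also stay above code 63, so a large sample of reads should be inspected.

```shell
# Classify quality-string encoding from the lowest ASCII code observed.
# Reads one quality string per line on stdin.
guess_phred_offset() {
    awk 'BEGIN { for (i = 33; i <= 126; i++) ord[sprintf("%c", i)] = i; min = 127 }
    {
        for (j = 1; j <= length($0); j++) {
            c = ord[substr($0, j, 1)]
            if (c != "" && c < min) min = c
        }
    }
    END {
        if (min == 127)     print "unknown"          # no quality characters seen
        else if (min < 59)  print "phred33"          # codes 33-58 occur only in Phred+33
        else if (min >= 64) print "likely-phred64"   # or uniformly high Phred+33
        else                print "ambiguous"
    }'
}

# Example usage (file names are placeholders):
# awk 'NR % 4 == 0' reads.fastq | head -n 10000 | guess_phred_offset
# samtools view input.bam | head -n 10000 | cut -f 11 | guess_phred_offset
```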

My job fails with "Exit code 137" or a log message stating "Killed" or "Out of memory." How can I fix this?

This error indicates that the job was terminated because it ran out of memory (OOM) on the system [12].

Solution:

- Identify the Failing Task: Check the workflow logs to determine which specific step (e.g., BaseRecalibrator, HaplotypeCaller) ran out of memory [12].

- Increase Memory Allocation: Increase the memory allocated to that task. In WDL workflows, this is typically done by increasing the `mem_gb` runtime attribute for the failing task [12].

- Consider Disk Space: If the error message states "no space left on device," you must also increase the `disk_gb` runtime attribute for the task [12].
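Exit code 137 follows the usual shell convention of 128 plus the signal number: 137 - 128 = 9, which is SIGKILL, the signal sent by the kernel's out-of-memory killer. A small helper can make this decoding explicit in wrapper scripts. This is a sketch only: `describe_exit` is a hypothetical name, and the hints in the messages are common causes, not certainties.

```shell
# Translate a process exit code into a short diagnostic message.
describe_exit() (
    code=$1
    if [ "$code" -eq 0 ]; then
        echo "success"
    elif [ "$code" -gt 128 ]; then
        sig=$((code - 128))       # shells report 128 + signal number
        case $sig in
            9)  echo "killed by signal 9 (SIGKILL): often the OOM killer or a memory limit" ;;
            15) echo "killed by signal 15 (SIGTERM): often a time limit or manual cancel" ;;
            *)  echo "killed by signal $sig" ;;
        esac
    else
        echo "tool exited with error code $code: check the tool's own log"
    fi
)

# Example usage after any pipeline step:
# gatk HaplotypeCaller ... ; describe_exit $?
```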

My GATK run fails with a cryptic error about the SAM/BAM file. How can I diagnose it?

Invalid or malformed SAM/BAM files are a common source of failures. Errors can be in the header, alignment fields, or optional tags [10].

Solution:

Use the Picard ValidateSamFile tool to diagnose the problem systematically [10].

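- Generate an Error Summary: Run the tool with `MODE=SUMMARY` to get a count of each error and warning type [10].

- Inspect ERRORs First: The summary will list `ERROR` and `WARNING` counts. Focus on fixing `ERROR`s first, as they will block analysis. Use `MODE=VERBOSE` and `IGNORE_WARNINGS=true` to get detailed reports on each error [10].

- Common Fixes: Depending on the error reported, Picard tools such as `FixMateInformation` or `AddOrReplaceReadGroups` can repair the file [10].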
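The two validation passes described above can be sketched as a shell function. This assumes the Picard tools are invoked through the `gatk` wrapper, which takes dash-style arguments; with a standalone Picard jar the equivalent legacy form is `MODE=SUMMARY`. The function name `validate_bam` and the file name are placeholders.

```shell
# Two-pass validation with Picard's ValidateSamFile via the gatk wrapper.
# $1 = input BAM (placeholder name).
validate_bam() {
    # Pass 1: counts of each ERROR and WARNING type.
    gatk ValidateSamFile -I "$1" --MODE SUMMARY
    # Pass 2: one line per ERROR, with WARNINGs suppressed.
    gatk ValidateSamFile -I "$1" --MODE VERBOSE --IGNORE_WARNINGS true
}

# Example usage:
# validate_bam sample.bam
```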

Variant Discovery & Filtering Phase

When should I use VQSR vs. hard-filtering for my variant callset?

The choice depends on your data type and cohort size [9].

Solution: The following table compares the two approaches to help you decide.

| Feature | Variant Quality Score Recalibration (VQSR) | Hard-Filtering |

|---|---|---|

| Recommended Use Case | Germline SNPs and Indels in large cohorts (e.g., >30 WGS samples) [9]. | Small cohorts, non-model organisms, or when VQSR fails [9]. |

| Methodology | Applies a machine learning model to variant annotations to assign a probability of being a true variant [9]. | Applies fixed thresholds to specific variant annotations using tools like VariantFiltration [13]. |

| Key Advantage | Robust, data-adaptive filtering that considers multiple annotations simultaneously [9]. | Simple, transparent, and works on any dataset. Allows filtering on sample-level (FORMAT) annotations [9]. |

| Example Command | `VariantRecalibrator` & `ApplyVQSR` [9]. | `gatk VariantFiltration ... --filter-expression "QD < 2.0" --filter-name "LowQD"` [13]. |

VQSR fails with an error about "Insufficient data" for modeling. What are my options?

This happens when your dataset is too small to fit the Gaussian mixture models used by VQSR, often because you have fewer than 30 samples [9].

Solution:

- Use Hard-Filtering: The primary solution is to switch to a hard-filtering approach. The GATK Best Practices provide recommended thresholds for filtering SNPs and indels [9].

- Adjust VQSR Parameters (Advanced): You can try lowering the `--max-gaussians` parameter (e.g., from 4 to 2 for indels) to reduce the model's complexity, but this is not guaranteed to work for very small datasets [9].

How do I apply a basic hard-filter to a variant callset?

Use the VariantFiltration tool with one or more filtering expressions [13].

Solution: The command below shows the syntax for applying a filter based on Quality by Depth (QD) and Mapping Quality (MQ0).

- `--filter-expression`: The JEXL expression that defines the filtering rule (e.g., "QD < 2.0", "FS > 60.0") [13].

- `--filter-name`: The label that will be added to the `FILTER` field of any variant for which the expression evaluates to true [13]. Variants that trigger no filter are labeled `PASS`.
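A minimal sketch of a complete invocation follows, with one `--filter-expression`/`--filter-name` pair per rule. The thresholds (QD < 2.0, FS > 60.0, MQ < 40.0) are commonly cited starting points for SNP hard filtering. The function name, the filter labels `HighFS` and `LowMQ`, and the file names are placeholders chosen for illustration.

```shell
# Apply three site-level hard filters with VariantFiltration.
# $1 = reference FASTA, $2 = input VCF, $3 = output VCF (placeholder names).
hard_filter_snps() {
    gatk VariantFiltration \
        -R "$1" \
        -V "$2" \
        -O "$3" \
        --filter-expression "QD < 2.0"  --filter-name "LowQD" \
        --filter-expression "FS > 60.0" --filter-name "HighFS" \
        --filter-expression "MQ < 40.0" --filter-name "LowMQ"
}

# Example usage:
# hard_filter_snps ref.fasta cohort.snps.vcf.gz cohort.snps.filtered.vcf.gz
```

Keeping each rule as a separate named filter, rather than joining them with `||` in one expression, records in the output which rule each variant triggered.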

Systematic categorization of common GATK error types and their triggers

Container and Dependency Issues

Common Errors and Solutions

This table catalogs common errors related to software containers and dependencies, their primary triggers, and recommended solutions.

| Error Type | Common Triggers | Recommended Solutions |

|---|---|---|

| 401 Unauthorized Image Pull | Docker registry authentication issues; outdated or invalid container image tags [14]. | Use the singularity.pullTimeout configuration to increase pull timeouts; verify the container image exists and is accessible [14]. |

| Java Version Mismatch | Running GATK with an incompatible Java version [15]. | Ensure GATK is used with its required major Java version, as specified in the documentation [15]. |

| AbstractMethodError or NoSuchMethodError | Using Spark 1.6 instead of the required Spark 2 when running on a cluster [16]. | Specify the Spark 2 submit command by adding --sparkSubmitCommand spark2-submit to the GATK arguments [16]. |

Resource Allocation and Computational Limits

Common Errors and Solutions

This table outlines errors caused by insufficient computational resources and how to resolve them.

| Error Type | Common Triggers | Recommended Solutions |

|---|---|---|

| Job Exit Code 137 / "Killed" | The task was terminated by the operating system for exceeding its memory limit (OOM) [12]. | Increase the memory allocation (mem_gb) for the failed task [12]. |

| No space left on device | The task ran out of disk space on the virtual machine [12]. | Increase the disk allocation (disk_gb) for the task [12]. |

| OutOfMemoryError in Spark | Memory settings for Spark drivers or executors are not tuned for the job's complexity [16]. | Tune memory settings; prefer a smaller number of larger executors over many small ones [16]. |

| PAPI Error Code 10 / VM Crash | An abrupt crash of the virtual machine, often due to an out-of-memory condition [12]. | Check logs for memory issues and increase the memory, or both memory and disk resources [12]. |

Input Data and File Format Issues

Common Errors and Solutions

This table describes errors stemming from problematic input data or incorrectly formatted files.

| Error Type | Common Triggers | Recommended Solutions |

|---|---|---|

| Invalid/Malformed SAM/BAM | The SAM/BAM file has errors in header tags, alignment fields, or optional tags, often introduced by upstream processing tools [10]. | Use Picard's ValidateSamFile to diagnose issues; use tools like FixMateInformation or AddOrReplaceReadGroups to fix errors [10]. |

| Missing Read Group (@RG) SM Tag | The required SM (sample name) tag within the @RG header line is missing. While not always a formal format error, it is required by GATK analysis tools [10]. | Add or replace read groups using Picard's AddOrReplaceReadGroups tool to ensure the SM tag is present [10]. |

| CIGAR Maps Off End of Reference | A read's CIGAR string indicates alignment beyond the end of a reference sequence. This can occur due to differences in reference assemblies [17]. | Use Picard's CleanSam tool to clean the BAM file [17]. |

| Path Errors on HDFS | Using relative paths instead of absolute paths for file locations on HDFS [16]. | Ensure all file paths on HDFS are absolute (e.g., hdfs://hostname:port/path/to/file) [16]. |

Algorithmic and Expected Variant Issues

Common Errors and Solutions

This table explains issues where the variant caller's output does not match user expectations.

| Error Type | Common Triggers | Recommended Solutions |

|---|---|---|

| Expected Variant Not Called | Low base quality or mapping quality of supporting reads; variant obscured after local reassembly; too many alternate alleles at a site [18]. | Generate a bamout file from HaplotypeCaller to inspect reassembled reads; check base and mapping qualities; consider adjusting --max-alternate-alleles (with caution) [18]. |

| Variant Missing in Repeats | In repetitive sequence contexts, default-sized kmers are non-unique, causing the variant to be pruned from the assembly graph [18]. | (Advanced) Use -allowNonUniqueKmersInRef or adjust --kmer-size parameters. Use with caution as it may increase false positives [18]. |

Troubleshooting Workflow Diagram

The following diagram provides a systematic workflow for diagnosing and resolving common GATK errors.

Key Research Reagent Solutions

This table lists essential resources and data files required for a successful GATK variant calling analysis.

| Research Reagent | Function in GATK Analysis |

|---|---|

| Known Sites (e.g., dbSNP) | A list of previously identified variants. Used by tools as input without the assumption that all calls are true [19]. |

| Training Set (e.g., HapMap, 1000G) | A high-quality, curated set of variants used to train machine learning models like Base Quality Score Recalibration (BQSR) and Variant Quality Score Recalibration (VQSR) [19]. |

| Truth Set | A highest-standard, orthogonally validated set of variants used to evaluate the quality (sensitivity/specificity) of a final variant callset [19]. |

| Reference Dictionary | A sequence dictionary file (.dict) for the reference genome, required by many GATK tools [19]. |

Experimental Protocols for Key Steps

Protocol: Base Quality Score Recalibration (BQSR)

This protocol corrects systematic errors in base quality scores emitted by sequencers [19] [2].

- Model Errors: Run `BaseRecalibrator`. This tool uses known variant sites and training sets (e.g., dbSNP, Mills indels) to build a model of covariation based on sequence context and read position.

- Apply Corrections: Run `ApplyBQSR`. This tool adjusts the base quality scores in the BAM file based on the recalibration table produced in step 1.

- Generate Plots (Optional): Run `AnalyzeCovariates` to produce plots comparing base qualities before and after recalibration, allowing for visual validation of the process.
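The first two steps of this protocol can be sketched as a single shell function. The function name, the file names, and the convention of deriving the recalibration table name from the output BAM are illustrative assumptions. Real runs typically pass several `--known-sites` resources.

```shell
# Two-step BQSR. Arguments (all placeholder file names):
# $1 = reference FASTA, $2 = duplicate-marked BAM, $3 = known-sites VCF,
# $4 = output recalibrated BAM.
run_bqsr() {
    table="${4%.bam}.recal.table"
    # Step 1: model systematic quality-score errors, skipping known variant sites.
    gatk BaseRecalibrator -R "$1" -I "$2" --known-sites "$3" -O "$table" || return 1
    # Step 2: write a new BAM with adjusted base qualities.
    gatk ApplyBQSR -R "$1" -I "$2" --bqsr-recal-file "$table" -O "$4"
}

# Example usage:
# run_bqsr ref.fasta sample.md.bam dbsnp.vcf.gz sample.bqsr.bam
```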

Protocol: Germline Short Variant Discovery with HaplotypeCaller

This is the recommended workflow for germline SNP and Indel discovery [19] [2].

- Per-Sample GVCF Calling: Run `HaplotypeCaller` on each sample's BAM file with the `-ERC GVCF` option. This does not perform final genotyping but produces a GVCF file containing all sites for the sample, both variant and reference.

- Consolidate Cohort Data: Use `GenomicsDBImport` (for many samples) or `CombineGVCFs` (for smaller cohorts) to aggregate the data from all sample GVCFs into a single database.

- Joint Genotyping: Run `GenotypeGVCFs` on the consolidated database. This performs joint genotyping across all samples, which increases sensitivity and statistical power.

- Filter Variants: Apply filters to the raw variant calls. The recommended method is to use `VariantRecalibrator` (VQSR) to build a machine learning model to separate true variants from artifacts, followed by `ApplyVQSR` [2].
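The first three steps of the protocol can be sketched as two shell functions. The function names, file names, and the single-interval layout are assumptions made for illustration; `GenomicsDBImport` needs at least one interval (`-L`) and a workspace path that does not yet exist. Filtering is left out because it depends on cohort size.

```shell
# Step 1: per-sample GVCF. $1 = reference, $2 = sample BAM, $3 = output GVCF.
call_gvcf() {
    gatk HaplotypeCaller -R "$1" -I "$2" -O "$3" -ERC GVCF
}

# Steps 2-3: consolidate GVCFs for one interval, then joint-genotype.
# $1 = reference, $2 = interval (e.g. chr20), $3 = new workspace directory,
# $4 = output VCF, remaining arguments = per-sample GVCFs.
joint_genotype() {
    ref=$1; interval=$2; workspace=$3; out_vcf=$4
    shift 4
    # Turn "a.g.vcf.gz b.g.vcf.gz" into "-V a.g.vcf.gz -V b.g.vcf.gz".
    n=$#
    for g in "$@"; do set -- "$@" -V "$g"; done
    shift "$n"
    gatk GenomicsDBImport "$@" --genomicsdb-workspace-path "$workspace" -L "$interval" || return 1
    gatk GenotypeGVCFs -R "$ref" -V "gendb://$workspace" -O "$out_vcf"
}

# Example usage:
# call_gvcf ref.fasta s1.bam s1.g.vcf.gz
# joint_genotype ref.fasta chr20 cohort_db cohort.chr20.vcf.gz s1.g.vcf.gz s2.g.vcf.gz
```

Running the consolidation per interval also gives a natural unit for splitting large cohorts across jobs.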

In the context of genomic variant discovery with the Genome Analysis Toolkit (GATK), the use of correct reference files, known sites, and training resources is fundamental to distinguishing true biological variants from technical artifacts. Many GATK tools require these auxiliary datasets to function correctly, as they provide a baseline of known variation that guides the statistical analysis of sequencing data. Without these resources, results may be subject to increased false positives and false negatives, potentially compromising the reliability of scientific conclusions and downstream applications in drug development research. This guide addresses the critical role these resources play and provides practical solutions to common implementation errors.

Resource categories and functions

GATK utilizes three main categories of known variants resources, each associated with different validation standards and use cases [20]:

- Known variants: These are lists of variants previously identified and reported (e.g., dbSNP), without an assumption that all calls are true. Tools use these to mask out positions where real variation is commonly found during Base Quality Score Recalibration (BQSR).

- Training sets: Curated lists of variants used by machine-learning algorithms (like Variant Quality Score Recalibration) to model the properties of true variation versus artifacts. These require higher validation standards.

- Truth sets: The highest standard of validation, these resources are used to evaluate the quality of a variant callset (e.g., sensitivity and specificity) and are assumed to contain exclusively true variants.

Essential resource repository

The following table summarizes the core resource types, their purposes, and examples essential for GATK workflows:

Table 1: Essential Resource Types for GATK Analysis

| Resource Type | Primary Function in GATK | Common Examples |

|---|---|---|

| Reference Genome | Provides the standard coordinate system and sequence against which reads are aligned and variants are called. | Broad Institute Resource Bundle (e.g., HG19, HG38), GATK Test Data (e.g., s3://gatk-test-data/refs/) [21] |

| Known Variants | Masks out known variant sites during BQSR to avoid confusing real variation with technical artifacts. | dbSNP, gnomAD |

| Training Resources | Trains the machine learning model for VQSR to identify annotation profiles of true vs. false variants. | HapMap, Omni array sites, 1000G gold standard indels |

| Truth Sets | Provides a high-confidence set of variants for benchmarking callset sensitivity and specificity. | Genome in a Bottle (GIAB) data for NA12878 [21] |

Integrated workflow for resource utilization

The following diagram illustrates how these essential resources integrate into a typical GATK variant discovery workflow, highlighting key steps where correct resource selection is critical.

Figure 1: Workflow for resource integration in GATK variant discovery. Resources from the Broad Institute Resource Bundle are integrated at multiple stages: known variants for BQSR, and training/truth sets for VQSR.

Troubleshooting common resource errors

FAQ: Contig mismatch errors

Question: I encountered the error "Input files have incompatible contigs" when running my GATK tool. What does this mean and how can I resolve it?

This is a classic problem indicating that the names or sizes of contigs (chromosomes/sequences) don't match between your input files (e.g., BAM or VCF) and your reference genome [22]. GATK evaluates everything relative to the reference, and any inconsistency will cause failure.

Error Example with BAM File:

`ERROR: Input files reads and reference have incompatible contigs: Found contigs with the same name but different lengths: contig reads = chrM / 16569 contig reference = chrM / 16571` [22]

Error Example with VCF File:

`ERROR: Input files known and reference have incompatible contigs...` [22]

Solution Pathways:

The solution depends on which file is mismatched relative to your chosen reference.

Table 2: Troubleshooting Contig Mismatch Errors

| Faulty File Type | Root Cause | Recommended Solution |

|---|---|---|

| BAM File | The BAM was aligned against a different reference genome build than the one you are now using. | Option 1 (Best): Switch to the correct reference genome that matches your BAM file [22]. Option 2 (Only if necessary): If you must use your current reference and lack the original FASTQs, use Picard tools to revert the BAM to an unaligned state (FASTQ or uBAM) and then re-align against your correct reference [22]. |

| VCF File | The VCF of known variants (e.g., dbSNP) was derived from a different reference build. | Option 1 (Best): Find a version of the VCF resource that is built for your reference. These are available in the Broad Institute's Resource Bundle [22]. Option 2: Use Picard's LiftoverVCF tool to transfer the variants from the old reference coordinates to your new reference coordinates using a chain file [22]. |

Special Case - b37 vs. hg19: These human genome builds are very similar but use different contig naming conventions (e.g., "1" vs. "chr1") and have slight length differences in chrM [22]. While renaming contigs in your files is technically possible, it is error-prone and only applies to canonical chromosomes. It is generally safer to use the correctly matched reference build.
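Contig mismatches can be found before running GATK by comparing the sequence names and lengths in the BAM header with those in the reference index. The sketch below uses hypothetical helper names: `sq_table` extracts name and length from `@SQ` header lines, and `compare_contigs` reports any contig that is absent from the reference or has a different length, returning a non-zero status if it finds one.

```shell
# Convert SAM header text on stdin into "name<TAB>length" lines.
sq_table() {
    awk -F '\t' '$1 == "@SQ" {
        name = ""; len = ""
        for (i = 2; i <= NF; i++) {
            if ($i ~ /^SN:/) name = substr($i, 4)
            if ($i ~ /^LN:/) len  = substr($i, 4)
        }
        print name "\t" len
    }'
}

# Report contigs that differ between two "name<TAB>length" files.
# $1 = table from the reads (or VCF), $2 = table from the reference.
compare_contigs() {
    awk -F '\t' '
        NR == FNR { ref[$1] = $2; next }      # first file read: the reference
        !($1 in ref)  { print "MISSING_IN_REF\t" $1; bad = 1; next }
        ref[$1] != $2 { print "LENGTH_DIFF\t" $1 "\t" $2 "\t" ref[$1]; bad = 1 }
        END { exit bad }
    ' "$2" "$1"
}

# Example usage (file names are placeholders; a .fai index already has
# the contig name and length in its first two columns):
# samtools view -H sample.bam | sq_table > reads.tsv
# compare_contigs reads.tsv ref.fasta.fai
```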

FAQ: Missing read group errors

Question: My pipeline failed because of "MISSING_READ_GROUP" errors. How do I fix this?

Read groups (RG) are essential metadata in BAM files, containing information about the sample, library, and sequencing run. GATK requires them for virtually all analysis steps.

Solution: Add read group information to your BAM file. This can be done during the initial alignment with BWA using the -R parameter [17]. If you already have a BAM file without RGs, use Picard's AddOrReplaceReadGroups tool [17] [23].

Example Picard Command:
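A minimal sketch of such a command, assuming Picard is run through the `gatk` wrapper with dash-style arguments (a standalone Picard jar accepts the legacy `RGID=...` form instead). The function name is hypothetical, all values are placeholders, and reusing the platform unit as the read group ID is an illustrative choice.

```shell
# Add or replace read-group tags on an existing BAM.
# $1 = input BAM, $2 = output BAM, $3 = sample name, $4 = library,
# $5 = platform unit (e.g. flowcell.lane). All values are placeholders.
add_read_group() {
    gatk AddOrReplaceReadGroups \
        -I "$1" \
        -O "$2" \
        --RGID "$5" \
        --RGSM "$3" \
        --RGLB "$4" \
        --RGPL ILLUMINA \
        --RGPU "$5"
}

# Example usage:
# add_read_group sample.bam sample.rg.bam sample1 lib1 FLOWCELL.1
```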

FAQ: Bootstrap resource generation

Question: For a non-model organism, how can I bootstrap a set of known variants?

Bootstrapping refers to generating a resource from your own data using a less stringent initial method [20].

Methodology:

- Initial Calling: Perform an initial round of variant calling on your data without using BQSR.

- Stringent Filtering: Apply manual hard filters (e.g., based on quality by depth, strand bias) to this raw callset to produce a high-confidence subset of variants.

- Resource Creation: Use this high-confidence subset as your "known variants" file for subsequent runs of BQSR on the full dataset. This iterative process helps refine the results, though it benefits greatly from orthogonal validation [20].

Research reagent solutions

The following table lists key materials and resources, often called the "research reagent toolkit," required for setting up and executing a GATK variant discovery pipeline.

Table 3: Research Reagent Solutions for GATK Analysis

| Reagent / Resource | Function / Application | Technical Notes |

|---|---|---|

| Reference Genome (FASTA) | The foundational sequence for read alignment and variant calling. | Must include both the .fa file and the associated index (.fai) and dictionary (.dict) [22]. |

| BWA-MEM | Aligns sequencing reads from FASTQ format to the reference genome. | Use the -M and -R flags to ensure compatibility with downstream Picard/GATK steps [17]. |

| Picard Tools | A suite of Java command-line tools for manipulating SAM/BAM and VCF files. | Critical for file sorting, read group addition, duplicate marking, and file validation [17] [22]. |

| GATK Jar File | The core engine for variant discovery. | Be aware that command-line syntax can change between minor versions [23]. |

| Known Sites VCFs (dbSNP, indels) | Used for masking known variation during BQSR. | Ensure these VCFs are compatible with your reference genome build to avoid contig errors [22] [20]. |

| High-Quality Training Resources (HapMap, Omni) | Used as training sets for the VQSR machine learning model. | The quality of these resources directly impacts the success of variant recalibration [20]. |

| GATK Test Data | Publicly available datasets (e.g., NA12878) for pipeline validation and testing. | Accessed via s3://gatk-test-data/ [21]. Useful for benchmarking and troubleshooting. |

The critical role of experimental design (WGS, Exomes, Panels, RNAseq) in error prevention

Errors in genomic analysis often originate not during computational analysis, but in the initial experimental design phase. Proper experimental design establishes the foundation for reliable variant calling and prevents systematic errors that cannot be fully corrected bioinformatically. This guide addresses how strategic planning of sequencing experiments—including selection of appropriate sequencing methods, replication strategies, and quality controls—can prevent common errors in GATK variant calling pipelines and ensure robust, interpretable results for researchers and drug development professionals.

Sequencing Strategy Selection Guide

Comparative Analysis of Sequencing Approaches

The choice of sequencing strategy significantly impacts which variants can be detected and with what confidence. Each approach offers distinct advantages and limitations for variant detection [24].

Table 1: Sequencing Strategy Comparison for Variant Detection

| Strategy | Target Space | Average Depth | SNV/Indel Detection | CNV Detection | SV Detection | Low VAF Detection |

|---|---|---|---|---|---|---|

| Panel | ~0.5 Mbp | 500-1000× | ++ | + | - | ++ |

| Exome | ~50 Mbp | 100-150× | ++ | + | - | + |

| Genome | ~3200 Mbp | 30-60× | ++ | ++ | + | + |

Performance indicators: ++ (outstanding), + (good), - (poor/absent)

Technical Specifications by Method

Table 2: Technical Specifications and Experimental Design Requirements

| Sequencing Method | Minimum Replicates | Recommended Depth | Key Quality Metrics | Primary Applications |

|---|---|---|---|---|

| DNA Panel | 3 biological | 500-1000× | >95% target coverage at 20× | Inherited disorders, cancer driver mutations |

| Whole Exome | 4 biological | 100-150× | >90% exons at 20× | Mendelian disorders, rare variant discovery |

| Whole Genome | 3-4 biological | 30-60× | >95% genome at 15× | Comprehensive variant discovery, structural variants |

| RNA-Seq | 3-4 biological | 10-60M PE reads | RIN >8 for mRNA | Gene expression, splicing, fusion genes |

| ChIP-Seq | 2-3 biological | 10-30M reads | High-quality antibodies confirmed by ENCODE | Transcription factor binding, histone modifications |

Troubleshooting Guides & FAQs

Pre-Sequencing Experimental Design FAQs

Q1: How many biological replicates are needed for robust RNA-Seq results?

- Answer: Plan for at least 3 replicates (the absolute minimum) and 4 if possible. Use biological rather than technical replicates, because they better capture biological variability [25].

Q2: What are the key considerations for ChIP-Seq experimental design?

- Answer: For ChIP-Seq, include at least 2 replicates (absolute minimum), but 3 if possible. Biological replicates are required, not technical replicates. Use "ChIP-seq grade" antibody validated by reliable sources such as ENCODE or Epigenome Roadmap. Include appropriate controls (input or IgG) for all experimental conditions [25].

Q3: When should I choose whole genome sequencing over exome sequencing?

- Answer: Whole genome sequencing is preferred when structural variant detection is needed, when non-coding regions are under investigation, or when more uniform coverage of exonic regions is required (it avoids capture biases). Because costs have fallen considerably and overall accuracy is higher, whole genome sequencing is now preferred for germline variant detection even when exonic variants are the primary focus [24].

Post-Sequencing Analysis Troubleshooting

Q4: Why do lanes from the same sample show discordant variant calls?

- Answer: This issue can arise from several experimental factors [4]:

- Contamination: One lane may have sample contamination

- Sample mix-up: Incorrect sample tracking during library preparation

- Library preparation artifacts: Inconsistent library preparation across lanes

- Solution: Check alignment files in IGV to visualize the source of discordance. Analyze lanes separately to identify which calls are consistent. Verify that read groups are properly assigned in BAM files.

Q5: How can I resolve variants of uncertain significance (VUS) from exome sequencing?

- Answer: Integrate RNA sequencing data to provide functional evidence. RNA sequencing facilitates the resolution of VUS by providing insights into the impact of DNA variants on protein production. In clinical practice, 10-15% of qualified VUS are expected to have a classification change with RNA-Seq data [26].

Q6: What are the minimum coverage requirements for somatic variant detection in tumor/normal pairs?

- Answer: For exome sequencing of tumor/normal pairs, aim for mean target depth of ≥100X for tumor sample and ≥50X for germline sample. When germline samples are unavailable, somatic variant detection is still possible but with significantly reduced sensitivity and precision [25].

Workflow Visualization

[Figure: Experimental Design Decision Pathway]

[Figure: Multi-lane Sequencing Error Prevention Workflow]

Research Reagent Solutions

Essential Materials for Error-Free Sequencing

Table 3: Key Research Reagents and Their Functions in Experimental Design

| Reagent/Resource | Function | Quality Control Requirements | Impact on Variant Calling |

|---|---|---|---|

| High-quality DNA | PCR-free WGS library prep | 900 ng DNA for PCR-free; RIN >8 for RNA | Reduces sequencing artifacts and false positives |

| Validated Antibodies | ChIP-Seq target enrichment | "ChIP-seq grade"; confirmed by ENCODE | Ensures specific enrichment, reduces background noise |

| Exome Capture Kits | Target enrichment for WES | TWIST Human Exome or NEXome; quality controls for capture efficiency | Affects coverage uniformity; poorly captured targets lead to missed variants |

| Spike-in Controls | Normalization across samples | Remote organism spike-ins (e.g., fly for human) | Enables cross-sample comparison in ChIP-Seq |

| Reference Standards | Benchmarking variant calls | Genome in a Bottle (GIAB) or Platinum Genome datasets | Allows pipeline validation and accuracy assessment |

Preventing variant calling errors begins long before data analysis—it starts with rigorous experimental design. By selecting appropriate sequencing strategies, incorporating sufficient biological replicates, implementing proper controls, and understanding the limitations of each method, researchers can avoid systematic errors that compromise data interpretation. The integration of multiple genomic technologies, such as combining WES with RNA-Seq, provides orthogonal validation that significantly enhances diagnostic yield and variant interpretation confidence. These experimental design principles form the critical foundation for all subsequent bioinformatic analyses and ultimately determine the success of genomic studies in both research and clinical applications.

Implementing Robust Germline Variant Discovery Pipelines

Proceeding from an analysis-ready BAM file to a filtered Variant Call Format (VCF) file is a critical stage in genomic analysis, enabling the discovery of genetic variants such as single nucleotide polymorphisms (SNPs) and insertions/deletions (indels). The Genome Analysis Toolkit (GATK) developed by the Broad Institute provides a robust, well-validated framework for this process, widely regarded as the industry standard in both research and clinical settings [3]. This guide details the step-by-step methodology for this conversion process while incorporating essential troubleshooting methodologies that address common experimental challenges encountered during GATK variant calling research. The workflow is designed to handle whole genome sequencing (WGS) data, providing an unbiased view of genetic variation across the entire genome [3]. Following established best practices is crucial for generating high-quality, reliable variant calls suitable for downstream analysis in disease research, population genetics, and drug development applications.

Core Methodology: From BAM to Filtered VCF

Prerequisites and Input Requirements

Before executing the variant calling workflow, ensure you have the following inputs prepared and properly formatted:

- Analysis-Ready BAM File: A coordinate-sorted BAM file containing reads aligned to a reference genome. The file must be indexed (have a corresponding .bai file) [27].

- Reference Genome: A FASTA file of the reference genome and its associated index (.fai) and sequence dictionary (.dict) files [3].

- Known Sites Resources: VCF files of known polymorphic sites used for base quality score recalibration (BQSR). These typically include databases such as dbSNP, HapMap, 1000 Genomes, and Mills indel gold standard [3] [28].

The table below summarizes the essential research reagents and their functions in the GATK workflow:

Table 1: Key Research Reagent Solutions for GATK Variant Calling

| Reagent/Resource | Function & Importance |

|---|---|

| Reference Genome (FASTA) | The reference sequence against which sample reads are aligned and compared to identify variants [3]. |

| BAM File | The input aligned sequencing data; must be sorted and indexed for proper processing [27]. |

| Known Sites VCFs (dbSNP, Mills) | Provide datasets of known variants to guide the Base Quality Score Recalibration (BQSR) process, which corrects systematic errors in base quality scores [3]. |

| Genomic Intervals File (BED) | Defines specific genomic regions to analyze, confining variant calling to targeted areas and reducing computational runtime [27]. |

Step-by-Step Workflow

The following diagram illustrates the complete workflow from analysis-ready BAM to filtered VCF:

Step 1: BAM File Validation and Quality Control

Before variant calling, validate the integrity and formatting of your input BAM file using Picard's ValidateSamFile tool [10].

- Purpose: Identifies formatting issues in the BAM file that could cause failures in downstream analysis.

- Critical Checks: Look for ERROR-level issues that must be fixed, such as MISSING_READ_GROUP, MATE_NOT_FOUND, or MISMATCH_MATE_ALIGNMENT_START [10].

- Troubleshooting: If errors are detected, run the tool in VERBOSE mode with IGNORE_WARNINGS=true to get detailed information about each problematic record. Common fixes include using AddOrReplaceReadGroups to add missing read group information or FixMateInformation to correct mate pair issues [10].

Step 2: Base Quality Score Recalibration (BQSR)

BQSR corrects systematic errors in the base quality scores assigned by the sequencer, which are important for accurate variant calling.

- Generate Recalibration Table:

- Apply Recalibration:

- Purpose: Uses known variant sites to build a model of systematic errors in base quality scores, then adjusts these scores to reflect more accurate probabilities [3].
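The two BQSR steps above can be sketched as a pair of GATK4 invocations. The helper below only assembles the argument lists and prints them; it does not run GATK. All file names are hypothetical placeholders, and the function name is introduced here for illustration.

```python
import shlex

def bqsr_commands(reference, bam, known_sites, table, recal_bam):
    """Build the BaseRecalibrator and ApplyBQSR commands (nothing is executed)."""
    if not known_sites:
        raise ValueError("BaseRecalibrator needs at least one known-sites VCF")
    # Step 1: model systematic quality-score errors using known variant sites
    base_recal = ["gatk", "BaseRecalibrator", "-R", reference, "-I", bam]
    for vcf in known_sites:
        base_recal += ["--known-sites", vcf]
    base_recal += ["-O", table]
    # Step 2: write a new BAM with recalibrated base qualities
    apply_bqsr = ["gatk", "ApplyBQSR", "-R", reference, "-I", bam,
                  "--bqsr-recal-file", table, "-O", recal_bam]
    return base_recal, apply_bqsr

# Hypothetical file names, for illustration only
recal_cmd, apply_cmd = bqsr_commands(
    "ref.fasta", "sample.bam", ["dbsnp.vcf.gz", "mills.vcf.gz"],
    "sample.recal.table", "sample.recal.bam")
print(shlex.join(recal_cmd))
print(shlex.join(apply_cmd))
```

Each known-sites resource is passed with its own --known-sites argument, and the recalibrated BAM from the second command is the input for Step 3.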

Step 3: Variant Calling with HaplotypeCaller

The core variant calling step identifies potential genetic variants in your sample.

- Purpose: Uses a sophisticated assembly-based algorithm to call SNPs and indels in your sequencing data [27].

- Key Parameters:

- -R: Reference genome file.

- -I: Input BAM file (use the recalibrated BAM from Step 2).

- -O: Output VCF file for raw variants.

- -L: (Optional) Genomic intervals to restrict calling to specific regions [27].
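As a minimal sketch of how these parameters fit together, the helper below assembles a HaplotypeCaller command line without executing it. The file names and the function name are hypothetical.

```python
import shlex

def haplotypecaller_command(reference, bam, output_vcf, intervals=None):
    """Build a basic HaplotypeCaller command; intervals are optional."""
    cmd = ["gatk", "HaplotypeCaller", "-R", reference, "-I", bam, "-O", output_vcf]
    for interval in intervals or []:
        cmd += ["-L", interval]  # one -L per interval or interval file
    return cmd

# Hypothetical inputs: the recalibrated BAM from Step 2, restricted to chr20
hc_cmd = haplotypecaller_command(
    "ref.fasta", "sample.recal.bam", "sample.raw.vcf.gz", intervals=["chr20"])
print(shlex.join(hc_cmd))
```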

Step 4: Variant Filtering

Apply filters to separate high-confidence variants from likely false positives.

- Purpose: Applies hard filters to variant calls based on established quality metrics.

- Common Filtering Thresholds:

- QD (Quality by Depth): Variant confidence normalized by depth of supporting reads.

- FS (Fisher Strand): Phred-scaled probability of strand bias.

- MQ (Mapping Quality): Root mean square mapping quality of supporting reads [29].
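The annotations above are typically applied with VariantFiltration. The sketch below uses QD < 2.0, FS > 60.0, and MQ < 40.0, which are widely used GATK starting points for SNPs; they are not stated in this guide, so treat them as assumptions to tune for your data (indels generally need different cutoffs). File and function names are hypothetical.

```python
import shlex

# Assumed SNP thresholds: a variant is flagged when the expression is true
SNP_FILTERS = {
    "QD2": "QD < 2.0",
    "FS60": "FS > 60.0",
    "MQ40": "MQ < 40.0",
}

def variant_filtration_command(reference, in_vcf, out_vcf, filters):
    """Build a VariantFiltration command with one named filter per expression."""
    cmd = ["gatk", "VariantFiltration", "-R", reference, "-V", in_vcf, "-O", out_vcf]
    for name, expression in filters.items():
        # Expressions and names are matched by the order in which they appear
        cmd += ["--filter-expression", expression, "--filter-name", name]
    return cmd

filter_cmd = variant_filtration_command(
    "ref.fasta", "sample.raw.snps.vcf.gz", "sample.filtered.snps.vcf.gz", SNP_FILTERS)
print(shlex.join(filter_cmd))
```

Variants that fail a filter stay in the output with the filter name in the FILTER column, so they can be reviewed before being discarded.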

Troubleshooting Common Experimental Issues

Missing Expected Variants

Problem: After running HaplotypeCaller, results don't show variants that were expected to be present based on prior knowledge or IGV visualization [30].

Diagnosis and Solutions:

- Examine the data in IGV: Verify that reads with good mapping quality (MAPQ) and base quality support the expected variant. Check if the region has complex characteristics like repeats [30].

- Check the GVCF output: If there is a GQ=0 hom-ref call at the site, the tool detected something unusual but couldn't make a confident variant call [30].

- Adjust assembly parameters: Try re-running HaplotypeCaller with advanced parameters that make the assembly more sensitive, such as --min-pruning and --recover-all-dangling-branches [30].

- Use the --bam-output option: Generates a BAM file showing how reads were reassembled at the specific site, which is invaluable for diagnosing assembly failures [30].
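A re-run focused on a single missed site might look like the sketch below, which combines the assembly options named in this guide with --bam-output. The --min-pruning value of 1, the interval, and all file names are illustrative assumptions rather than recommended settings.

```python
import shlex

def sensitive_reassembly_command(reference, bam, out_vcf, debug_bam, interval,
                                 min_pruning=1):
    """Build a HaplotypeCaller re-run restricted to one region for diagnosis."""
    return [
        "gatk", "HaplotypeCaller",
        "-R", reference, "-I", bam, "-O", out_vcf,
        "-L", interval,                      # keep the re-run small and fast
        "--min-pruning", str(min_pruning),   # prune fewer low-support graph paths
        "--recover-all-dangling-branches",   # try to rescue dangling graph branches
        "--bam-output", debug_bam,           # reassembled reads for review in IGV
    ]

# Hypothetical site of interest and file names
debug_cmd = sensitive_reassembly_command(
    "ref.fasta", "sample.recal.bam", "debug.vcf.gz", "debug.bam",
    "chr7:55174000-55175000")
print(shlex.join(debug_cmd))
```

More permissive assembly settings can increase false positives, so they are best limited to targeted diagnosis.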

Resource Allocation and Performance Issues

Problem: The workflow fails with out-of-memory errors or does not complete in a reasonable time frame.

Diagnosis and Solutions:

Table 2: Troubleshooting Resource Allocation Errors

| Error Message | Cause | Solution |

|---|---|---|

| Job exit code 137 or "Killed" in logs | The task ran out of memory [12]. | Increase the memory allocation (--java-options "-Xmx<N>g", where <N> is the heap size in GB) for the offending step. For WGS, 32G is often a safe starting point. |

| "No space left on device" | The working directory ran out of disk space [12]. | Point --tmp-dir at a volume with more free space, or increase the overall disk allocation (disk_gb in cloud environments). |

| PAPI error code 10 | Abrupt crash of the virtual machine, often due to memory issues [12]. | Increase both memory and disk allocation. |

| Extremely long runtime | Insufficient computational resources or lack of parallelization. | Use genomic intervals (-L or --intervals) to split the work across multiple threads or nodes [27]. |

File Format and Compatibility Issues

Problem: Errors related to input files, such as BAM, VCF, or reference genome formats.

Diagnosis and Solutions:

- BAM File Errors: Use ValidateSamFile as described in Step 1. Address all ERROR-level issues before proceeding [10].

- Reference Genome Mismatches: Ensure all input files (BAM, VCF resources) are aligned to the same reference genome build. The error often manifests as "contigs don't match between input files" [15].

- Incorrect File Indices: Verify that all BAM and VCF files have corresponding index files (.bai, .tbi) in the same directory [27].
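Contig mismatches can often be spotted before running GATK by comparing contig names and lengths between the reference index and a BAM header. The sketch below works on text you have already extracted (for example, the contents of a .fai file and the output of samtools view -H); the function names are introduced here for illustration.

```python
def contigs_from_fai(text):
    """Parse contig name -> length from the text of a FASTA index (.fai)."""
    contigs = {}
    for line in text.splitlines():
        if line.strip():
            name, length = line.split("\t")[:2]
            contigs[name] = int(length)
    return contigs

def contigs_from_sam_header(text):
    """Parse contig name -> length from @SQ lines of a SAM/BAM header."""
    contigs = {}
    for line in text.splitlines():
        if line.startswith("@SQ"):
            fields = dict(f.split(":", 1) for f in line.split("\t")[1:])
            contigs[fields["SN"]] = int(fields["LN"])
    return contigs

def compare_contigs(first, second):
    """List contigs missing from either input or differing in length."""
    problems = [f"{n}: only in first input" for n in sorted(set(first) - set(second))]
    problems += [f"{n}: only in second input" for n in sorted(set(second) - set(first))]
    for name in sorted(set(first) & set(second)):
        if first[name] != second[name]:
            problems.append(f"{name}: length {first[name]} vs {second[name]}")
    return problems

# Illustrative data: the BAM header uses "1" where the reference uses "chr1"
fai = "chr1\t248956422\t112\t70\t71\nchr2\t242193529\t252513167\t70\t71\n"
header = "@HD\tVN:1.6\tSO:coordinate\n@SQ\tSN:1\tLN:249250621\n@SQ\tSN:chr2\tLN:242193529\n"
issues = compare_contigs(contigs_from_fai(fai), contigs_from_sam_header(header))
print(issues)
```

A "chr1" versus "1" naming difference, as in this example, usually indicates files from different reference builds or naming conventions.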

Frequently Asked Questions (FAQs)

Q1: Why do the Allele Depth (AD) values in my VCF not match what I see in IGV?

A: This discrepancy occurs because the VCF's AD field counts only informative reads that unambiguously support a specific haplotype. Reads that are ambiguous, don't span the entire variant, or have too many errors compared to their best-matching haplotype are excluded from the AD calculation by the genotyper, even though they may be visible in IGV [30].

Q2: Can I use this germline variant calling pipeline for non-human data?

A: Yes, the core workflow is applicable to other diploid organisms. However, you will need organism-specific reference genomes and known variant sets for the BQSR step. If known sites are unavailable, you may need to skip BQSR or generate a set of high-confidence variants from multiple samples first [8].

Q3: What should I do if my dataset is very small or from a non-model organism, making VQSR unsuitable?

A: The Variant Quality Score Recalibration (VQSR) method requires large sample sizes to build robust models. For small datasets, use the hard filtering approach described in Step 4 of this guide, as it does not depend on a cohort of samples [15].

Q4: Why does my BAM file pass validation but still fail in GATK with a read group error?

A: The SAM/BAM format specification does not strictly require SM (sample) tags in the @RG header lines. However, GATK tools require them for analysis. Use AddOrReplaceReadGroups to add missing SM tags [10].

Q5: How can I improve variant calling in difficult genomic regions, such as those with high homology or low complexity?

A: For amplicon data or regions with high homology, avoid using MarkDuplicates and turn off positional downsampling. Consider using the --debug and --bam-output parameters in HaplotypeCaller to inspect the local assembly and adjust assembly parameters like --min-pruning and --recover-all-dangling-branches [30].

Per-sample variant calling with HaplotypeCaller in GVCF mode

Frequently Asked Questions

Q1: Why should I use GVCF mode for a single sample instead of producing a regular VCF directly?

Even for a single sample, using GVCF mode is beneficial. A standard VCF only contains positions where the variant caller found evidence of variation. In contrast, a GVCF also includes records for non-variant regions, summarizing the confidence that a site is truly homozygous-reference. This allows you to distinguish between locations with high-quality data confirming the absence of variation and locations with insufficient data to make a determination. For a single sample, the genotype calls at variant sites are identical between the two methods, but the GVCF provides a more complete record of the entire sequencing effort, enabling you to answer questions like, "Could my sample have variant X?" by checking the site's status in the GVCF [31].

Q2: I am working with a cohort of samples. What is the advantage of the GVCF workflow over calling all samples together in a single step?

The GVCF workflow (per-sample GVCF creation followed by joint genotyping) is more efficient and scalable than running HaplotypeCaller on multiple BAM files simultaneously. If you add new samples to your cohort, the traditional multi-sample calling would require re-processing all BAM files together, which becomes progressively slower. With the GVCF workflow, you only need to generate a GVCF for the new sample and then re-run the much faster joint genotyping step on the combined dataset. This approach is the GATK best practice for scalable variant calling in cohorts [32] [33].

Q3: What are the minimum computational resources required to run HaplotypeCaller efficiently?

Benchmarking tests indicate that HaplotypeCaller's performance does not improve significantly with more than two CPU threads, as it is primarily a single-threaded tool. Allocating excessive memory also does not boost performance; however, a minimum of 20 GB is recommended to ensure the job completes successfully when using two threads [34].

Q4: My joint genotyping step with GenotypeGVCFs fails. What could be wrong?

A common issue is that GenotypeGVCFs can encounter errors when it misses reference confidence blocks, especially if no genomic interval is specified. To avoid this, always specify a genomic interval using the -L parameter when running GenotypeGVCFs, even if the interval was used in the previous GenomicsDBImport step [35].

Q5: When should I avoid using HaplotypeCaller?

HaplotypeCaller is not recommended for somatic variant discovery in cancer (tumor-normal pairs). The algorithms it uses to calculate variant likelihoods are not well-suited for the extreme allele frequencies often present in somatic data. For this purpose, you should use Mutect2 instead [36].

Troubleshooting Guide

| Problem | Possible Cause | Solution |

|---|---|---|

| Pipeline expects a VCF but finds a GVCF | Output file extension not updated in pipeline script after adding -ERC GVCF [32]. | Ensure the output file in the HaplotypeCaller command and the pipeline's output declaration both use the .g.vcf or .g.vcf.gz extension [32]. |

| Extremely slow runtime | HaplotypeCaller is a resource-intensive tool. Running without intervals or with too many threads can hinder performance [34]. | 1. Use the -L parameter with a list of intervals (e.g., target regions for exome data) to restrict analysis [36]. 2. Do not use more than 2 threads for the non-Spark version of HaplotypeCaller [34]. |

| Job fails due to memory error | Insufficient Java heap memory allocation [34]. | Allocate sufficient memory using the -Xmx parameter (e.g., --java-options "-Xmx20G"). A starting point of 20GB is recommended [34]. |

| Poor indel calling accuracy | Read misalignment around indels can cause false positives and missed calls [33]. | HaplotypeCaller performs local reassembly, which improves indel calling. Use the --bam-output option to generate a realigned BAM for visual validation in IGV [33]. |

Benchmarking Data and Key Parameters

The following table summarizes quantitative data and key parameters to guide your experimental setup and performance expectations.

| Aspect | Configuration / Value | Notes / Effect |

|---|---|---|

| Recommended Threads | 2 [34] | Adding more threads does not reduce runtime significantly [34]. |

| Recommended Memory (Xmx) | 20 GB [34] | Minimum recommended for job completion; increasing further does not boost performance [34]. |

| Base Quality Threshold (-mbq) | 10 [36] | Minimum base quality required to consider a base for calling. |

| Heterozygosity (-heterozygosity) | 0.001 [36] | Heterozygosity value used to compute prior likelihoods for SNPs. |

| Indel Heterozygosity (-indel-heterozygosity) | 1.25E-4 [36] | Heterozygosity value used to compute prior likelihoods for indels. |

| PCR-free Data | --pcr-indel-model NONE [36] | Should be set when working with PCR-free sequencing data. |

Experimental Protocol: GVCF Workflow

This protocol details the steps for per-sample GVCF creation and subsequent joint genotyping for a cohort, following GATK best practices [32] [33].

Step 1: Generate a GVCF for Each Sample

Run HaplotypeCaller in GVCF mode on each sample's BAM file. This should be executed in a container or environment where GATK is installed. Key arguments:

- -R: Reference genome FASTA file.

- -I: Input BAM file (must be indexed).

- -O: Output GVCF file.

- -ERC GVCF: Sets the emission mode to GVCF.

- -L: (Optional) Genomic intervals to process, highly recommended to speed up analysis [36].

Step 2: Consolidate GVCFs into a GenomicsDB Data Store

Run GenomicsDBImport to combine the per-sample GVCFs into a single data store for efficient joint genotyping. Key arguments:

- -V: List each sample's GVCF file.

- --genomicsdb-workspace-path: Path to the output database directory.

- -L: The genomic interval to operate on (e.g., a chromosome or a set of intervals).

Step 3: Perform Joint Genotyping

Run GenotypeGVCFs on the combined data store to produce a final cohort VCF. Key arguments:

- -V: Path to the GenomicsDB workspace created in Step 2.

- -O: Final output VCF file for the cohort.

- -L: Critical: Always specify an interval to avoid failures related to missing reference confidence blocks [35].
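The three steps above can be sketched as a sequence of GATK4 command lines. The helper below only builds and prints the commands; sample names, file names, the workspace path, and the interval are hypothetical, and the GVCF naming scheme is an assumption made for this example.

```python
import shlex

def gvcf_workflow_commands(reference, sample_bams, workspace, cohort_vcf, interval):
    """Build per-sample GVCF calling, GenomicsDB import, and joint genotyping."""
    commands, gvcfs = [], []
    # Step 1: one HaplotypeCaller run per sample, emitting a GVCF
    for sample, bam in sample_bams.items():
        gvcf = f"{sample}.g.vcf.gz"
        gvcfs.append(gvcf)
        commands.append(["gatk", "HaplotypeCaller", "-R", reference, "-I", bam,
                         "-O", gvcf, "-ERC", "GVCF", "-L", interval])
    # Step 2: consolidate all GVCFs into one GenomicsDB workspace
    import_cmd = ["gatk", "GenomicsDBImport"]
    for gvcf in gvcfs:
        import_cmd += ["-V", gvcf]
    import_cmd += ["--genomicsdb-workspace-path", workspace, "-L", interval]
    commands.append(import_cmd)
    # Step 3: joint genotyping, reading the workspace through a gendb:// path
    commands.append(["gatk", "GenotypeGVCFs", "-R", reference,
                     "-V", f"gendb://{workspace}", "-O", cohort_vcf, "-L", interval])
    return commands

workflow = gvcf_workflow_commands(
    "ref.fasta", {"sampleA": "sampleA.bam", "sampleB": "sampleB.bam"},
    "cohort_db", "cohort.vcf.gz", "chr20")
for command in workflow:
    print(shlex.join(command))
```

Passing the same interval to every step keeps the import and genotyping regions consistent.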

The workflow for this protocol is summarized in the following diagram:

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in the Experiment |

|---|---|

| Reference Genome FASTA | The reference sequence file to which the reads were aligned. It is required for all steps to determine reference bases and coordinate contexts [36]. |

| Indexed BAM File | The input sequence data (aligned reads). The BAM file must have a corresponding index (.bai file) for random access [32]. |

| GVCF File | The intermediate output from HaplotypeCaller. It contains variant calls and, critically, blocks of non-variant sites with confidence scores, enabling joint genotyping [32] [33]. |

| GenomicsDB Data Store | A specialized database for efficiently storing and accessing variant data from many GVCFs, which is used as input for the joint genotyping step [32] [35]. |

| Intervals List File | A file specifying genomic regions (e.g., exome capture targets) to restrict analysis. Using intervals dramatically speeds up computation by limiting processing to areas of interest [36]. |


Efficient cohort consolidation using GenomicsDBImport

Frequently Asked Questions (FAQs)

Q1: What is the primary function of GenomicsDBImport in the GATK workflow?

GenomicsDBImport is a GATK tool that imports single-sample GVCFs into a GenomicsDB workspace before joint genotyping. It efficiently consolidates data from multiple samples by transposing sample-centric variant information across genomic loci, making the data more accessible for subsequent analysis. This replaces the older CombineGVCFs approach and is optimized for handling large cohorts [37].

Q2: What are the most common causes of GenomicsDBImport failures?

The most frequent issues users encounter include:

- Incorrectly formatted sample map files

- Insufficient memory allocation

- Malformed input GVCFs

- Interval list parsing errors

- Issues with mixed ploidy samples [38] [39] [40]

Q3: Can GenomicsDBImport handle samples with different ploidy levels?

GenomicsDBImport has known limitations with mixed ploidy samples. While the tool may start without errors, it can fail to process beyond certain positions or produce invalid block size errors during subsequent operations like SelectVariants [39].

Q4: What memory considerations are important for GenomicsDBImport?

The -Xmx value should be less than the total physical memory by at least a few GB, as the native TileDB library requires additional memory beyond Java's allocation. Insufficient memory can cause confusing error messages or fatal runtime errors [37] [40].

Troubleshooting Guides

Issue 1: Sample Map Formatting Errors

Problem: GenomicsDBImport fails with errors about incorrect field numbers in sample map.

Error Message Examples:

- "Bad input: Expected a file with 2 fields per line" [38]

- "Sample name map file must have 2 or 3 fields per line" [41]

Solution:

- Ensure your sample map follows the exact format: samplename[TAB]fileuri

- Verify no blank lines or trailing whitespace exists

- Check that tabs (not spaces) separate columns

- Validate all file paths are accessible

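Sample Map Structure: one line per sample, containing the sample name and the GVCF path or URI separated by a single tab, with no header line.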

Validation Method:

Use --validate-sample-name-map true to enable checks on the sample map file. This verifies that feature readers are valid and warns if sample names don't match headers [37].
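Before handing a sample map to GATK, the formatting rules listed above can be checked with a few lines of Python. This is a minimal sketch: the function name and the duplicate-name check are additions made here, and it does not verify that the listed files exist.

```python
def validate_sample_map(text):
    """Return a list of formatting problems found in sample map text."""
    problems, seen = [], set()
    for number, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            problems.append(f"line {number}: blank line")
            continue
        if line != line.strip():
            problems.append(f"line {number}: leading or trailing whitespace")
        fields = line.strip().split("\t")
        if len(fields) not in (2, 3):
            problems.append(
                f"line {number}: expected 2 or 3 tab-separated fields, found {len(fields)}")
            continue
        if fields[0] in seen:
            problems.append(f"line {number}: duplicate sample name {fields[0]!r}")
        seen.add(fields[0])
    return problems

# Hypothetical maps: the second uses a space separator, a blank line, and a trailing space
good_map = "sample1\tsample1.g.vcf.gz\nsample2\tsample2.g.vcf.gz\n"
bad_map = "sample1 sample1.g.vcf.gz\n\nsample2\tsample2.g.vcf.gz \n"
print(validate_sample_map(good_map))
for problem in validate_sample_map(bad_map):
    print(problem)
```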

Issue 2: Input GVCF Malformations

Problem: Failures due to problematic GVCF content, including duplicate fields or formatting issues.

Error Message Examples:

- "Duplicate fields exist in vid attribute" [42]

- "The provided VCF file is malformed" [42]

- "There aren't enough columns for line" [42]

Solution:

- Remove duplicate INFO field definitions from VCF headers

- Ensure all variant records have the required eight columns

- Verify no positional errors (e.g., "." as start position) exist

- Check for truncated or corrupted GVCF files

Diagnostic Approach:
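One lightweight way to screen a GVCF for the problems listed above is to scan it line by line. The sketch below is an illustrative pre-check, not a substitute for GATK's own validation: it flags duplicate INFO/FORMAT/FILTER header IDs, records with fewer than eight columns, and non-numeric positions. The function name is hypothetical.

```python
import re

HEADER_ID = re.compile(r"^##(INFO|FORMAT|FILTER)=<ID=([^,>]+)")

def check_vcf_lines(lines):
    """Return a list of structural problems found in an iterable of VCF lines."""
    problems, seen = [], set()
    for number, raw in enumerate(lines, start=1):
        line = raw.rstrip("\n")
        if line.startswith("##"):
            match = HEADER_ID.match(line)
            if match:
                key = match.groups()
                if key in seen:
                    problems.append(
                        f"line {number}: duplicate {key[0]} header for ID {key[1]}")
                seen.add(key)
        elif line.startswith("#") or not line:
            continue
        else:
            fields = line.split("\t")
            if len(fields) < 8:
                problems.append(
                    f"line {number}: only {len(fields)} columns, expected at least 8")
            elif not fields[1].isdigit():
                problems.append(f"line {number}: POS is {fields[1]!r}, not an integer")
    return problems

# Small hand-made example with one problem of each kind
example = [
    "##fileformat=VCFv4.2",
    '##INFO=<ID=DP,Number=1,Type=Integer,Description="Depth">',
    '##INFO=<ID=DP,Number=1,Type=Integer,Description="Depth again">',
    "#CHROM\tPOS\tID\tREF\tALT\tQUAL\tFILTER\tINFO\tFORMAT\tS1",
    "chr1\t100\t.\tA\t<NON_REF>\t.\t.\tEND=200\tGT:DP\t0/0:30",
    "chr1\t.\t.\tA\tG\t50\t.\tDP=10\tGT\t0/1",
    "chr1\t300\t.\tA",
]
report = check_vcf_lines(example)
for problem in report:
    print(problem)
```

For compressed GVCFs, the same function can be fed from `gzip.open(path, "rt")`.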

Issue 3: Interval List Parsing Problems

Problem: Errors when specifying genomic intervals for import.

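Error Message Examples:

- "Problem parsing start/end value in interval string" [43]

- "Badly formed genome unclippedLoc" [43]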

Solution:

- Use properly formatted interval lists with standard notation: chr:start-end

- Ensure contig names match your reference dictionary

- Avoid mixing interval formats (BED vs. GATK interval list)

- Use consistent coordinate systems throughout

Proper Interval Formats:
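GATK-style interval strings take the form contig or contig:start-end with 1-based, inclusive coordinates, whereas BED files use 0-based, half-open coordinates. The sketch below checks an interval string against known contig lengths and converts a BED record to the GATK-style form; the function names and the example contig length are assumptions made for illustration.

```python
import re

def parse_interval(text, contig_lengths):
    """Parse 'contig', 'contig:pos', or 'contig:start-end' (1-based, inclusive)."""
    if text in contig_lengths:  # whole contig, including names that contain ':'
        return text, 1, contig_lengths[text]
    contig, sep, span = text.rpartition(":")
    if not sep or contig not in contig_lengths:
        raise ValueError(f"unknown contig in interval {text!r}")
    match = re.fullmatch(r"(\d+)(?:-(\d+))?", span)
    if not match:
        raise ValueError(f"cannot parse start/end in interval {text!r}")
    start = int(match.group(1))
    end = int(match.group(2)) if match.group(2) else start
    if not 1 <= start <= end <= contig_lengths[contig]:
        raise ValueError(f"coordinates out of range in interval {text!r}")
    return contig, start, end

def bed_to_interval(chrom, start0, end):
    """Convert a 0-based, half-open BED record to a 1-based, inclusive interval."""
    return f"{chrom}:{start0 + 1}-{end}"

# Hypothetical reference dictionary with a single contig
lengths = {"chr20": 64444167}
print(parse_interval("chr20:1000-2000", lengths))
print(bed_to_interval("chr20", 999, 2000))
```

Checking the whole string against the contig list first avoids misreading contig names that themselves contain colons.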

Issue 4: Memory and Resource Allocation

Problem: Tool crashes with memory errors or resource exhaustion.

Error Message Examples:

- "A fatal error has been detected by the Java Runtime Environment" [40]

- "SIGSEGV" in native code [40]

- Job exit code 137 (out of memory) [12]

Solution:

- Allocate adequate memory with -Xmx but leave headroom for native libraries

- Use --tmp-dir to specify sufficient temporary storage

- Implement batching (--batch-size) for large sample sets

- Enable consolidation (--consolidate) when using many batches

Memory Configuration Example:
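A simple way to leave headroom for the native library is to derive -Xmx from the memory available to the job. In the sketch below, the 4 GB headroom and the batch size of 50 are illustrative assumptions, not values from the GATK documentation, and all paths are hypothetical; the command is built and printed but not executed.

```python
import shlex

def java_heap_gb(total_memory_gb, native_headroom_gb=4):
    """Heap size in GB after reserving headroom for native (non-JVM) memory."""
    heap = total_memory_gb - native_headroom_gb
    if heap < 1:
        raise ValueError("not enough memory left for the Java heap")
    return heap

def genomicsdb_import_command(sample_map, workspace, interval,
                              total_memory_gb, tmp_dir, batch_size=50):
    """Build a GenomicsDBImport command with batching and a custom temp directory."""
    heap = java_heap_gb(total_memory_gb)
    return [
        "gatk", "--java-options", f"-Xmx{heap}g", "GenomicsDBImport",
        "--sample-name-map", sample_map,
        "--genomicsdb-workspace-path", workspace,
        "-L", interval,
        "--batch-size", str(batch_size),  # samples read per batch
        "--tmp-dir", tmp_dir,             # must have enough free space
    ]

# Hypothetical job with 32 GB of RAM
import_cmd = genomicsdb_import_command(
    "cohort.sample_map", "cohort_db", "chr20", 32, "/scratch/tmp")
print(shlex.join(import_cmd))
```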

Issue 5: Mixed Ploidy and Complex Variants

Problem: Incomplete processing or invalid output with samples of varying ploidy.

Error Message Examples:

- "Invalid block size" when querying database [39]

- Tool appears to hang at certain genomic positions [39]

Solution:

- Consider separating samples by ploidy when possible

- Verify GVCFs with ValidateVariants

- Use smaller intervals to isolate problematic regions

- As a last resort, consider alternative approaches for mixed ploidy datasets

Error Reference Table

The following table summarizes common GenomicsDBImport errors and their solutions:

| Error Category | Example Error Messages | Solution Steps |

|---|---|---|

| Sample Map Issues | "Bad input: Expected a file with 2 fields per line" [38], "Sample name map file must have 2 or 3 fields per line" [41] | Verify tab separation, remove blank lines, validate file paths |

| GVCF Format Problems | "Duplicate fields exist in vid attribute" [42], "The provided VCF file is malformed" [42] | Remove duplicate header lines, validate VCF structure, check for truncated files |

| Interval Parsing Errors | "Problem parsing start/end value in interval string" [43], "Badly formed genome unclippedLoc" [43] | Standardize interval format, verify contig names, use consistent coordinate systems |

| Memory Allocation | "A fatal error has been detected by the JRE" [40], "SIGSEGV" [40], Exit code 137 [12] | Increase -Xmx with headroom, use tmp-dir with sufficient space, implement batching |

| Mixed Ploidy Limitations | "Invalid block size" during querying [39], Incomplete genomic coverage [39] | Separate samples by ploidy, validate input GVCFs, use smaller intervals |

Research Reagent Solutions

Essential materials and their functions for successful GenomicsDBImport experiments:

| Reagent/Resource | Function | Specification |

|---|---|---|

| Sample Map File | Maps sample names to GVCF file paths | Tab-delimited text, 2-3 columns, no header [37] |

| Input GVCFs | Single-sample variant calls with probabilities | HaplotypeCaller output with -ERC GVCF or -ERC BP_RESOLUTION [37] |

| GenomicsDB Workspace | Database structure for consolidated variant data | Empty or non-existent directory path [37] |

| Interval Lists | Genomic regions to process | Standardized format matching reference contigs [43] |

| Reference Genome | Reference sequence for coordinate mapping | Same version used for GVCF generation [37] |

GenomicsDBImport Workflow

GenomicsDBImport Workflow Relationship: This diagram illustrates the data flow from individual GVCF files through GenomicsDBImport to joint genotyping, highlighting key inputs and outputs.

Joint genotyping of cohorts with GenotypeGVCFs

The following diagram illustrates the GATK joint genotyping workflow, from raw data to filtered variants.

Troubleshooting Common Errors

Error: "Couldn't create GenomicsDBFeatureReader"

Problem Description

When running GenotypeGVCFs on a GenomicsDB workspace created with GenomicsDBImport, the tool fails with the error: "A USER ERROR has occurred: Couldn't create GenomicsDBFeatureReader" [44].

Diagnosis and Solutions

| Possible Cause | Diagnostic Steps | Solution |

|---|---|---|

| Incremental database update issues | Check if the database was created by progressively adding samples using --genomicsdb-update-workspace-path [44]. | Recreate the GenomicsDB workspace in a single operation if possible, rather than using incremental updates. |

| Corrupted callset.json | Verify the structure and content of the callset.json file for inconsistencies or missing sample entries [44]. | Ensure the callset.json file is correctly formatted and contains all expected samples with proper row_idx mapping. |

| Version incompatibility | Confirm that the same GATK version is used for both GenomicsDBImport and GenotypeGVCFs [6]. | Use consistent GATK versions (preferably the latest stable release) for all workflow steps. |

| Memory allocation | Check system logs for memory-related errors preceding the failure [6]. | Increase memory allocation using --java-options "-Xmx4g" or higher based on cohort size [45]. |

Error: "use-new-qual-calculator is not a recognized option"

Problem Description

The GenotypeGVCFs command fails with an error stating that --use-new-qual-calculator is not a recognized option [46].

Diagnosis and Solutions

| Possible Cause | Diagnostic Steps | Solution |

|---|---|---|

| GATK version mismatch | Verify the GATK version using gatk --version [6]. | This option was removed in GATK 4.1.5.0; remove the --use-new-qual-calculator argument from your command [46]. |

| Outdated workflow script | Check if the command comes from a workflow designed for an older GATK version [46]. | Update to the latest version of the joint genotyping workflow from official GATK repositories [46]. |

Error: "Bad input: Presence of '-RAW_MQ' annotation is detected"

Problem Description

The tool fails with an error about the deprecated -RAW_MQ annotation, indicating the input was produced with an older GATK version [46].

Diagnosis and Solutions

| Possible Cause | Diagnostic Steps | Solution |

|---|---|---|

| GVCFs from older GATK | Identify which input GVCFs were generated with older GATK versions [45]. | Re-generate GVCF inputs using the current version of HaplotypeCaller, or use the argument --allow-old-rms-mapping-quality-annotation-data to override (may yield suboptimal results) [46]. |

| Inconsistent tool versions | Check for version mismatches between HaplotypeCaller and GenotypeGVCFs [6]. | Use the same GATK version for HaplotypeCaller (GVCF creation) and GenotypeGVCFs [6]. |

Research Reagent Solutions

The following table details the essential inputs and resources required for the GenotypeGVCFs process.

| Item | Function in Experiment | Key Specifications |

|---|---|---|

| Reference Genome | Provides the reference sequence for alignment and variant calling [45]. | Must match the build used for read alignment; FASTA format with associated index (.fai) and dictionary (.dict) files. |

| GVCF Inputs | Contain per-sample genotype likelihoods for joint analysis [45] [2]. | Must be produced by HaplotypeCaller with -ERC GVCF or -ERC BP_RESOLUTION; other "GVCF-like" files are incompatible [45]. |

| GenomicsDB Workspace | Efficiently consolidates cohort data from multiple GVCFs for joint genotyping [2]. | Created by GenomicsDBImport; specified to GenotypeGVCFs via gendb:// path [45]. |

| dbSNP Database | Known variant resource used for annotation and reference [45]. | VCF format; provided via the --dbsnp argument. |

Frequently Asked Questions (FAQs)

What are the valid input types for GenotypeGVCFs?

GenotypeGVCFs accepts only one input track, which can be one of three types [45]:

- A single-sample GVCF produced by HaplotypeCaller.

- A multi-sample GVCF created by CombineGVCFs.

- A GenomicsDB workspace created by GenomicsDBImport.

Can I provide multiple GVCF files directly to GenotypeGVCFs?

No. The tool is designed to take only a single input track [45]. To process multiple samples, you must first consolidate them into either a multi-sample GVCF using CombineGVCFs or, more efficiently for larger cohorts, into a GenomicsDB workspace using GenomicsDBImport [45] [2].
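The consolidation route can be sketched as follows. This is an illustration under stated assumptions: the function name, the sample map file (tab-separated sample name and GVCF path), and the choice to restrict both steps to one interval are all hypothetical choices, not part of the cited guidance.

```shell
# Hypothetical wrapper: consolidate GVCFs into a GenomicsDB workspace, then joint-genotype.
# Arguments: $1 = reference FASTA
#            $2 = sample map (one "sample_name<TAB>path/to/sample.g.vcf.gz" per line)
#            $3 = workspace directory (should not exist yet)
#            $4 = genomic interval, e.g. chr20
#            $5 = output VCF
joint_genotype() {
  gatk GenomicsDBImport \
    --sample-name-map "$2" \
    --genomicsdb-workspace-path "$3" \
    -L "$4" &&
  gatk GenotypeGVCFs \
    -R "$1" \
    -V "gendb://$3" \
    -O "$5"
}

# Example call (placeholder paths):
# joint_genotype reference.fasta cohort.sample_map chr20_workspace chr20 cohort.chr20.vcf.gz
```

The `&&` ensures that GenotypeGVCFs only runs if the import step finished successfully.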

How do I handle "Out of Memory" errors during processing?

Increase the memory allocated to the Java virtual machine [6]:

- Use the --java-options parameter, e.g., --java-options "-Xmx8g" to allocate 8 GB of memory.

- For very large cohorts, you may need to further increase this value or split your analysis into smaller genomic intervals using the -L option [6].
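Both remedies can be combined in one call. The sketch below assumes placeholder file names and a hypothetical helper function; the heap size and interval are values you would tune for your own cohort.

```shell
# Hypothetical wrapper: run GenotypeGVCFs on one interval with an explicit Java heap limit.
# Arguments: $1 = maximum heap (e.g. 8g), $2 = reference FASTA,
#            $3 = input (GVCF or gendb:// workspace), $4 = interval, $5 = output VCF
genotype_interval() {
  gatk --java-options "-Xmx$1" GenotypeGVCFs \
    -R "$2" \
    -V "$3" \
    -L "$4" \
    -O "$5"
}

# Example call (placeholder paths): 8 GB heap, chromosome 20 only
# genotype_interval 8g reference.fasta gendb://cohort_workspace chr20 cohort.chr20.vcf.gz
```

Running one interval per job also makes it easier to rerun only the intervals that failed.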

My job failed partway through. Do I need to start over?

Many workflow execution systems can resume a failed run. Use your orchestrator's resume mechanism (for example, Nextflow's -resume option or Cromwell's call caching) to skip successfully completed steps and continue from the step that failed [6]. Always check the specific documentation for your pipeline runner.

Does GenotypeGVCFs require a specific ploidy setting for non-diploid organisms?

No. The tool intelligently handles any ploidy (or mix of ploidies) automatically. There is generally no need to specify a ploidy for non-diploid organisms [45].

Frequently Asked Questions

Q1: Can I use VQSR for my non-model organism if I have a small number of samples?

Generally, no. VQSR requires well-curated training resources (like known variant databases) that are typically unavailable for non-model organisms. It also needs a large number of variant sites to operate properly, which small datasets (e.g., fewer than 30 exomes) often cannot provide [47] [48].

Q2: What is the main disadvantage of hard-filtering compared to VQSR?

Hard-filtering forces you to look at each annotation dimension individually, which can result in throwing out good variants that have one bad annotation or keeping bad variants to avoid losing the good ones. In contrast, VQSR uses machine learning to evaluate how variants cluster across multiple annotation dimensions simultaneously, providing a more powerful and nuanced filtering approach [49].

Q3: My dataset has fewer than 10 samples. Which hard-filtering annotation should I omit?

You should omit the InbreedingCoeff filter. The InbreedingCoeff statistic is a population-level calculation that is only available with 10 or more samples [47] [48].

Q4: What should I do if I get a "No data found" error when running VariantRecalibrator?

This error often occurs when the dataset gives fewer distinct clusters than expected. The tool will notify you of insufficient data. Try decrementing the --max-gaussians parameter value (e.g., from 4 to 2) and rerun the analysis [9].
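A rerun with a lower --max-gaussians value might look like the sketch below. The function name, file names, resource label, and prior value are assumptions made for illustration; the annotation list simply mirrors the annotations discussed in this guide and should be adapted to your data.

```shell
# Hypothetical wrapper: build a SNP recalibration model with a reduced number of Gaussians.
# Arguments: $1 = reference FASTA, $2 = input VCF, $3 = training/truth resource VCF,
#            $4 = value for --max-gaussians, $5 = output prefix
recalibrate_snps() {
  gatk VariantRecalibrator \
    -R "$1" \
    -V "$2" \
    --resource:truthset,known=false,training=true,truth=true,prior=15.0 "$3" \
    -an QD -an FS -an SOR -an MQ -an MQRankSum -an ReadPosRankSum \
    -mode SNP \
    --max-gaussians "$4" \
    -O "$5.recal" \
    --tranches-file "$5.tranches"
}

# Example call (placeholder paths): retry with 2 Gaussians instead of 4
# recalibrate_snps reference.fasta cohort.vcf.gz truth_sites.vcf.gz 2 cohort.snps
```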

Troubleshooting Guides

Problem: VQSR is Unsuitable or Fails

Scenario: You are working with a non-model organism or a small cohort and encounter issues running the VariantRecalibrator tool.

Diagnosis: VQSR has two primary requirements that are often unmet in non-human studies:

- Well-curated training/truth resources (e.g., HapMap, Omni, 1000G) [47] [48].

- A large number of variant sites (typically from many samples) for the machine learning model to train effectively [47] [48]. Small datasets, such as targeted panels or exome studies with fewer than 30 samples, often cannot support VQSR.

Solution: Use hard-filtering with VariantFiltration.

- Split your variants into SNPs and Indels, as they require different filters.

- Apply hard filters using the recommended thresholds in the tables below.

- Merge the filtered files.
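The split and merge steps around the filtering can be sketched as follows, assuming placeholder file names and hypothetical helper functions:

```shell
# Hypothetical helper: split a raw call set into SNP-only and indel-only VCFs.
# Arguments: $1 = input VCF, $2 = output SNP VCF, $3 = output indel VCF
split_variants() {
  gatk SelectVariants -V "$1" -select-type SNP -O "$2" &&
  gatk SelectVariants -V "$1" -select-type INDEL -O "$3"
}

# Hypothetical helper: merge the separately filtered files back into one VCF.
# Arguments: $1 = filtered SNP VCF, $2 = filtered indel VCF, $3 = merged output VCF
merge_filtered() {
  gatk MergeVcfs -I "$1" -I "$2" -O "$3"
}

# Example calls (placeholder paths):
# split_variants cohort.vcf.gz snps.vcf.gz indels.vcf.gz
# merge_filtered snps_filtered.vcf.gz indels_filtered.vcf.gz cohort_filtered.vcf.gz
```

Note that this simple split ignores mixed sites (records carrying both SNP and indel alleles); whether those matter depends on your data.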

Problem: Base Quality Score Recalibration (BQSR) for Non-Model Organisms

Scenario: You want to perform BQSR but lack a large, high-confidence known variant dataset for your non-model organism.

Diagnosis: BQSR uses known variant sites to detect systematic errors. Without a species-specific training database, the recalibration may be ineffective or even reduce data quality [50].

Solution: If a reliable known sites file is unavailable, it may be necessary to skip the BQSR step. Attempting to create a custom training set from your own data with stringent filters is theoretically possible but can be challenging to implement correctly [50].

Strategy Selection Workflow

The following diagram outlines the decision process for choosing between VQSR and hard-filtering, helping you quickly identify the appropriate path for your research context.

Hard-Filtering Annotation Guide

The tables below provide the standard filtering recommendations. Use them as a starting point and adjust based on your specific data [47] [48].

SNP Filtering Parameters

| Annotation | Filter Expression | Threshold | Explanation |

|---|---|---|---|

| QD | QD < 2.0 | 2.0 | Variant confidence normalized by depth [49]. |

| FS | FS > 60.0 | 60.0 | Phred-scaled probability of strand bias [49]. |

| SOR | SOR > 3.0 | 3.0 | Symmetric Odds Ratio for strand bias [49]. |

| MQ | MQ < 40.0 | 40.0 | Root mean square of mapping quality [49]. |

| MQRankSum | MQRankSum < -12.5 | -12.5 | Mapping quality rank sum test statistic [49]. |

| ReadPosRankSum | ReadPosRankSum < -8.0 | -8.0 | Read position rank sum test statistic [49]. |

Indel Filtering Parameters

| Annotation | Filter Expression | Threshold | Explanation & Caveats |

|---|---|---|---|

| QD | QD < 2.0 | 2.0 | Variant confidence normalized by depth [47]. |

| FS | FS > 200.0 | 200.0 | Phred-scaled probability of strand bias [47]. |

| SOR | SOR > 10.0 | 10.0 | Symmetric Odds Ratio for strand bias [48]. |

| ReadPosRankSum | ReadPosRankSum < -20.0 | -20.0 | Read position rank sum test statistic [47]. |

| InbreedingCoeff | InbreedingCoeff < -0.8 | -0.8 | Omit if fewer than 10 samples [47]. |

The Scientist's Toolkit: Key Research Reagents

This table lists essential data resources and their functions for setting up a robust variant filtering workflow.

| Item Name | Function / Purpose | Key Considerations |

|---|---|---|

| Known Sites Resources (e.g., dbSNP, HapMap) | Used as training/truth sets for VQSR and BQSR. | For non-model organisms, these are often unavailable and must be skipped or custom-built [47] [51]. |

| Reference Genome | The standard sequence for read alignment and variant calling. | High-quality assembly is crucial. For non-model organisms, a sister species genome is often used, which can impact mapping [50]. |

| Variant Call Format (VCF) File | The output of the variant caller containing raw variants and annotations. | This is the primary input for both VQSR and hard-filtering tools [9] [52]. |

| Sites-Only VCF | A VCF that drops sample-level information, keeping only the first 8 columns. | Can speed up the VQSR analysis during the modeling step [9]. |

Methodology: Implementing Hard-Filtering

This section provides the detailed command-line protocol for applying hard filters to a variant dataset.

Step 1: Filter SNPs

This command applies multiple filter expressions to the SNP VCF file. Variants that fail one or more checks are tagged in the FILTER column, but not removed.
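A minimal sketch of such a command, using the thresholds from the SNP filtering table, is shown here. The function name, file names, and filter names are placeholders chosen for illustration.

```shell
# Hypothetical wrapper: tag SNPs that fail the hard-filter thresholds listed above.
# Arguments: $1 = input SNP-only VCF, $2 = output VCF
filter_snps() {
  gatk VariantFiltration \
    -V "$1" \
    -filter "QD < 2.0" --filter-name "QD2" \
    -filter "FS > 60.0" --filter-name "FS60" \
    -filter "SOR > 3.0" --filter-name "SOR3" \
    -filter "MQ < 40.0" --filter-name "MQ40" \
    -filter "MQRankSum < -12.5" --filter-name "MQRankSum-12.5" \
    -filter "ReadPosRankSum < -8.0" --filter-name "ReadPosRankSum-8" \
    -O "$2"
}

# Example call (placeholder paths):
# filter_snps snps.vcf.gz snps_filtered.vcf.gz
```

Giving each expression its own filter name records in the FILTER column which specific check a variant failed, which helps when tuning thresholds later.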

Step 2: Filter Indels

This command applies the recommended filters for indel variants. Remember to omit the InbreedingCoeff filter for cohorts with fewer than 10 samples.
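One way to sketch the indel command, again with placeholder names, is a small wrapper that adds the InbreedingCoeff filter only when the cohort has at least 10 samples. The sample-count argument is an assumption of this sketch, not a GATK option.

```shell
# Hypothetical wrapper: tag indels that fail the hard-filter thresholds listed above.
# Arguments: $1 = input indel-only VCF, $2 = output VCF, $3 = number of samples in the cohort
filter_indels() {
  in_vcf=$1
  out_vcf=$2
  n_samples=$3
  # Start the argument list with the filters that apply to every cohort size.
  set -- -V "$in_vcf" \
    -filter "QD < 2.0" --filter-name "QD2" \
    -filter "FS > 200.0" --filter-name "FS200" \
    -filter "SOR > 10.0" --filter-name "SOR10" \
    -filter "ReadPosRankSum < -20.0" --filter-name "ReadPosRankSum-20"
  # InbreedingCoeff is a population-level statistic: add it only for 10 or more samples.
  if [ "$n_samples" -ge 10 ]; then
    set -- "$@" -filter "InbreedingCoeff < -0.8" --filter-name "InbreedingCoeff-0.8"
  fi
  gatk VariantFiltration "$@" -O "$out_vcf"
}

# Example call (placeholder paths): a 6-sample cohort, so InbreedingCoeff is skipped
# filter_indels indels.vcf.gz indels_filtered.vcf.gz 6
```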

Important Note on Application: These recommendations are a starting point. You must evaluate the results critically and adjust parameters for your specific data, as the appropriateness of annotations and thresholds is highly dataset-dependent [47] [48].

Diagnosing and Resolving Specific GATK Errors and Performance Issues

Solving input file contig mismatches between BAM, VCF, and reference

Table of Contents

- Problem Overview: Understanding Contig Mismatches

- Troubleshooting Guide: Diagnosis and Solutions

- FAQs: Specific Scenarios and Solutions

- The Scientist's Toolkit: Essential Resources

Contig mismatches are a common error in GATK variant calling that occur when the sequence dictionaries (the "contigs" or chromosomes) in your input files—such as BAM alignment files, VCF variant files, and the reference genome FASTA file—do not agree with each other. The GATK requires consistency in contig names, lengths, and order across all files to function correctly [22]. These errors typically fall into two main categories:

- True Mismatches: The contig names or lengths are fundamentally different between files. For example, a contig like chrM in your BAM file might have a length of 16569, while the same contig in your reference file has a length of 16571 [22]. This often indicates the use of different genome builds (e.g., hg19 vs. GRCh38).

- Sorting/Ordering Mismatches: The contigs are the same, but their order in the file's sequence dictionary is not identical. The GATK can be particular about the ordering of contigs in BAM and VCF files and will fail with an error even if the contig sets are otherwise compatible [53] [54].

The following workflow helps diagnose the specific type of contig error and directs you to the appropriate solution.

Troubleshooting Guide: Diagnosis and Solutions

Step 1: Analyze the Error Message

The GATK error message is the first place to look. It will specify which files are incompatible and the nature of the problem [22]. Key phrases include:

- "Found contigs with the same name but different lengths" → A true mismatch, likely a wrong genome build [22].

- "The contig order in ... and reference is not the same" → A sorting issue [55] [56].

- "No overlapping contigs found" → A severe mismatch, often with different naming conventions (e.g., "chr1" vs. "1") [22] [57].

Step 2: Identify the Faulty File

The error message identifies the problematic input file. It will say, for example, "Input files reads and reference have incompatible contigs" (BAM is the problem) or "Input files knownSites and reference have incompatible contigs" (VCF is the problem) [22].
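To confirm what the error message reports, it can help to compare contig names, lengths, and order directly. The sketch below is one possible approach, assuming samtools is installed and the reference has a .fai index; the function names are hypothetical.

```shell
# Print "name<TAB>length" for every @SQ line in a BAM header.
bam_contigs() {
  samtools view -H "$1" |
    awk -F'\t' '$1 == "@SQ" {
      name = ""; len = ""
      for (i = 2; i <= NF; i++) {
        if ($i ~ /^SN:/) name = substr($i, 4)
        if ($i ~ /^LN:/) len = substr($i, 4)
      }
      print name "\t" len
    }'
}

# Print "name<TAB>length" from a FASTA index (.fai), i.e. its first two columns.
ref_contigs() {
  cut -f1,2 "$1"
}

# Compare a BAM header against a reference index.
# Returns 0 only if names, lengths, and order are identical; otherwise prints the diff.
# Arguments: $1 = BAM file, $2 = reference .fai file
compare_contigs() {
  tmp_bam=$(mktemp) && tmp_ref=$(mktemp) || return 1
  bam_contigs "$1" > "$tmp_bam"
  ref_contigs "$2" > "$tmp_ref"
  diff "$tmp_bam" "$tmp_ref"
  status=$?
  rm -f "$tmp_bam" "$tmp_ref"
  return "$status"
}

# Example call (placeholder paths):
# compare_contigs sample.bam reference.fasta.fai
```

Identical names with differing lengths in the diff point to a true mismatch, while identical lines appearing in a different position point to an ordering problem.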

Step 3: Apply the Correct Solution

Based on your diagnosis from the flowchart and error log, apply the solutions below.

Table 1: Solutions for Contig Mismatches and Ordering Issues

| File Type | Problem Type | Recommended Solution | Key Tools & Commands |

|---|---|---|---|

| BAM | True Mismatch | 1. Use the correct reference. This is the preferred solution if possible. 2. Realign the BAM. If the correct reference is unavailable, revert the BAM to unaligned FASTQ and realign with the correct reference. | picard RevertSam or picard SamToFastq followed by bwa mem |

| VCF | True Mismatch | 1. Use a compatible resource. Download a version matched to your reference (e.g., from GATK Resource Bundle). 2. Liftover the VCF. Convert coordinates to your reference build. | picard LiftoverVcf (requires a chain file) |

| BAM | Order Mismatch | Reorder the BAM file to match the reference dictionary's order. | java -jar picard.jar ReorderSam I=input.bam O=reordered.bam R=reference.fasta CREATE_INDEX=TRUE [53] [54] |

| VCF | Order Mismatch | Sort the VCF file using the reference dictionary as a template. | java -jar picard.jar SortVcf I=input.vcf O=sorted.vcf SEQUENCE_DICTIONARY=reference.dict [53] [54] |
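For the liftover option in Table 1, a call in the same legacy Picard syntax as the commands above could be sketched as follows. The function name, jar location, and file names are placeholders; the chain file must describe the mapping from the VCF's build to the build of the target reference.

```shell
# Hypothetical wrapper: lift a VCF over to the build of the target reference.
# Arguments: $1 = path to picard.jar, $2 = input VCF, $3 = chain file,
#            $4 = target reference FASTA, $5 = lifted output VCF, $6 = VCF for rejected records
liftover_vcf() {
  java -jar "$1" LiftoverVcf \
    I="$2" \
    O="$5" \
    CHAIN="$3" \
    REJECT="$6" \
    R="$4"
}

# Example call (placeholder paths):
# liftover_vcf picard.jar known_sites.vcf build_a_to_build_b.chain target_reference.fasta lifted.vcf rejected.vcf
```

Records that cannot be mapped are written to the REJECT file, so it is worth checking how many variants end up there before using the lifted resource.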

FAQs: Specific Scenarios and Solutions

Q1: I downloaded my VCF from the official GATK resource bundle, but I'm still getting a contig error. Why?

This usually happens when there is a naming convention disparity. The b37 (GRCh37) resource bundle uses contig names without the "chr" prefix (e.g., "1", "2", "MT"), while many other references, including hg19- and hg38-style builds, use the "chr" prefix (e.g., "chr1", "chr2", "chrM") [55]. If you manually add "chr" to the VCF, you must ensure the contig order also matches your reference. The solution is to use Picard SortVcf with your reference's dictionary (reference.dict) to re-sort the VCF correctly [55].

Q2: My BAM files are very large, and reordering them is storage-intensive. Can I adjust the reference instead?