A Comprehensive Guide to Single-Cell RNA-Seq Exploratory Analysis: From Foundational Concepts to Clinical Applications

Abstract

This article provides a comprehensive guide to the exploratory analysis of single-cell RNA-sequencing (scRNA-seq) data, tailored for researchers, scientists, and drug development professionals. It covers the foundational principles of scRNA-seq, highlighting its power in uncovering cellular heterogeneity and its advantages over bulk sequencing. The guide details the core methodological workflow—from quality control and normalization to clustering and trajectory inference—using popular tools like Seurat and Scanpy. It addresses common analytical challenges such as batch effects, dropout events, and data sparsity, offering practical troubleshooting and optimization strategies. Finally, the article explores the critical validation of findings and the growing application of scRNA-seq in drug discovery, including target identification, mechanism of action studies, and patient stratification, providing a vital resource for leveraging this transformative technology.

Unlocking Cellular Heterogeneity: The Foundational Power of Single-Cell RNA-Seq

Single-cell RNA sequencing (scRNA-seq) represents a paradigm shift in genomic analysis, moving from population-level averages to single-cell resolution. This shift enables researchers to resolve cellular heterogeneity, identify rare cell populations, and reconstruct developmental trajectories. As the foundation of exploratory single-cell data analysis, the technology has reshaped our understanding of biological systems in development, homeostasis, and disease. This technical guide examines the core principles, methodological framework, and key analytical considerations of scRNA-seq, with an emphasis on applications relevant to researchers and drug development professionals.

Traditional bulk RNA sequencing measures the average gene expression profile across thousands to millions of cells, obscuring cellular heterogeneity and masking rare but biologically significant cell populations [1]. The transcriptional programs of tumors and other complex tissues vary widely between cells and across the tumor microenvironment. As a result, signals from rare cell populations that drive biological processes or therapeutic resistance can be lost in the averaged profile produced by bulk RNA-seq [2].

Single-cell RNA sequencing (scRNA-seq) has emerged as a powerful solution to this limitation, allowing researchers to investigate the transcriptome of individual cells within complex biological systems. Since its conceptual and technical breakthrough in 2009 [3] [4], scRNA-seq has evolved from a specialized technique to an accessible tool that fuels discoveries across diverse fields including oncology, neuroscience, immunology, and developmental biology [3]. This technology has become indispensable for creating comprehensive cellular atlases, understanding disease mechanisms, and identifying novel therapeutic targets [3] [2].

Fundamental Technological Principles

Core Differences Between Bulk and Single-Cell RNA-seq

The fundamental difference between bulk and scRNA-seq lies in their approach to cellular sampling and data resolution. Bulk RNA-seq provides a population-level perspective by measuring the average gene expression across all cells in a sample, analogous to viewing a forest from a distance. In contrast, scRNA-seq enables examination of individual cellular transcriptomes, comparable to distinguishing every tree within that forest [1].

Table 1: Key Experimental and Analytical Differences Between Bulk and Single-Cell RNA-seq

| Feature | Bulk RNA-seq | Single-Cell RNA-seq |
|---|---|---|
| Sample Input | Population of thousands to millions of cells [1] | Individual cells isolated from tissue [1] |
| Resolution | Average gene expression across cell population [1] | Gene expression per individual cell [1] |
| Primary Applications | Differential gene expression between conditions; Biomarker discovery; Pathway analysis [1] | Cellular heterogeneity mapping; Rare cell identification; Lineage tracing; Developmental trajectories [1] [2] |
| Data Structure | Gene expression matrix (genes × samples) | Gene expression matrix (genes × cells) with cell barcodes and UMIs [5] |
| Technical Challenges | Limited resolution of cellular heterogeneity [1] | Cell dissociation artifacts; Amplification bias; High data sparsity [3] [5] |
| Cost Considerations | Lower per-sample cost [1] | Higher per-sample cost, but increasingly accessible [1] [6] |

Unique Molecular Identifiers and Barcoding Strategies

A critical innovation in scRNA-seq is the incorporation of unique molecular identifiers (UMIs) and cellular barcodes [3] [5]. UMIs are short random nucleotide sequences that uniquely tag individual mRNA molecules during reverse transcription, enabling accurate quantification by correcting for amplification biases [3]. Cellular barcodes are sequences that uniquely identify each cell, allowing transcripts from thousands of individual cells to be pooled and sequenced simultaneously while maintaining the ability to attribute each transcript to its cell of origin [5].
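To make the UMI logic concrete, here is a minimal Python sketch, assuming reads have already been aligned and tagged with a cell barcode, a UMI, and a gene. The helper `count_umis` and the shortened toy barcodes are hypothetical; production pipelines also correct sequencing errors in barcodes and UMIs, which this sketch omits.

```python
from collections import defaultdict

def count_umis(reads):
    """Collapse aligned reads into {(cell_barcode, gene): UMI count}.

    reads: iterable of (cell_barcode, umi, gene) tuples, one per aligned read.
    Reads sharing all three fields are PCR copies of one molecule, so each
    distinct (cell, gene, UMI) combination is counted exactly once.
    """
    molecules = defaultdict(set)            # (cell, gene) -> set of UMIs seen
    for cell, umi, gene in reads:
        molecules[(cell, gene)].add(umi)
    return {key: len(umis) for key, umis in molecules.items()}

reads = [
    ("AAACCTGA", "TTGCA", "CD3E"),
    ("AAACCTGA", "TTGCA", "CD3E"),   # PCR duplicate of the read above
    ("AAACCTGA", "GGATC", "CD3E"),   # a second CD3E molecule in the same cell
    ("TTTGGTCA", "TTGCA", "MS4A1"),  # same UMI but another cell: a distinct molecule
]
counts = count_umis(reads)
print(counts)  # {('AAACCTGA', 'CD3E'): 2, ('TTTGGTCA', 'MS4A1'): 1}
```

Note that four reads become three molecules: counting UMIs rather than reads is what removes the amplification bias described above.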

Experimental Workflow and Methodologies

Core Single-Cell RNA-seq Workflow

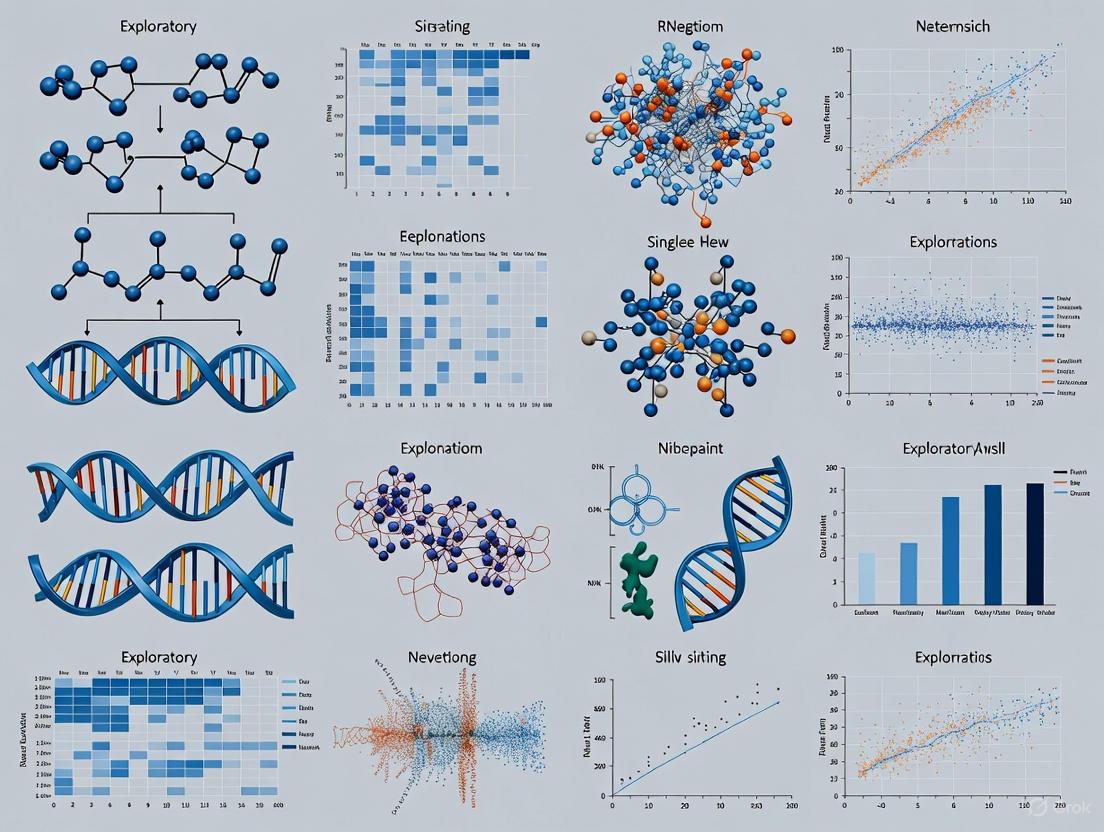

The following diagram illustrates the standardized workflow for droplet-based single-cell RNA sequencing, which represents the most widely adopted high-throughput approach:

Detailed Experimental Procedures

Single-Cell Isolation and Capture

The initial critical step involves creating viable single-cell suspensions from intact tissues through enzymatic or mechanical dissociation [1]. This process must balance cell yield with preservation of transcriptional states, as dissociation conditions can induce artificial stress responses that alter transcriptional patterns [3]. For tissues difficult to dissociate, such as brain tissue, single-nucleus RNA sequencing (snRNA-seq) provides an alternative approach that sequences nuclear mRNA while minimizing dissociation artifacts [3].

Common cell isolation techniques include:

- Fluorescence-activated cell sorting (FACS): Enables selection of specific cell types based on surface markers

- Microfluidic systems: Allow high-throughput automated cell capture

- Droplet-based partitioning: Encapsulates individual cells in oil-emulsion droplets along with barcoded beads [3] [6]

Library Preparation and Barcoding Strategies

In droplet-based systems like 10x Genomics Chromium, single cells are partitioned into Gel Beads-in-emulsion (GEMs) containing:

- A single cell

- A barcoded gel bead with oligo sequences containing cell barcodes and UMIs

- Reverse transcription reagents [6]

Within each GEM, the cell is lysed, its mRNA molecules are captured by poly(dT) primers, and reverse transcription yields cDNA in which every molecule from that cell carries the same cell barcode [6]. Two main amplification strategies are employed:

- PCR-based amplification: Used in Smart-seq2, Drop-seq, and 10x Genomics protocols, leveraging template-switching oligos for cDNA amplification [3]

- In vitro transcription (IVT): A linear amplification approach used in CEL-seq and MARS-seq protocols [3]

Following amplification, barcoded cDNA from all cells is pooled for library preparation and high-throughput sequencing [3] [6].

Research Reagent Solutions

Table 2: Essential Research Reagents and Their Functions in scRNA-seq Workflows

| Reagent/Consumable | Function | Application Notes |
|---|---|---|
| Barcoded Gel Beads | Provide cell barcodes and UMIs for mRNA labeling | 10x Genomics systems use beads with ~3.6 million barcode combinations [2] |
| Partitioning Oil & Microfluidic Chips | Create GEMs for individual cell processing | GEM-X technology generates twice as many GEMs at smaller volumes, reducing multiplet rates [6] |
| Reverse Transcription Mix | Convert captured mRNA to barcoded cDNA | Contains template-switching activity for full-length transcript capture [3] |
| Library Preparation Kits | Prepare sequencing-ready libraries from barcoded cDNA | Compatible with Illumina, PacBio, and other sequencing platforms [6] |
| Cell Viability Stains | Assess quality of single-cell suspensions | Critical for ensuring high-quality input material [1] |
| Enzymatic Dissociation Kits | Tissue-specific protocols for cell isolation | Optimization required for different tissue types to minimize stress responses [3] |

Quality Control and Data Preprocessing

Critical Quality Control Metrics

Quality control (QC) represents a crucial step in scRNA-seq analysis to distinguish high-quality cells from artifacts. The following QC parameters require careful evaluation:

Table 3: Essential Quality Control Metrics for scRNA-seq Data

| QC Metric | Interpretation | Common Thresholds |
|---|---|---|
| Transcripts per Cell | Indicates capture efficiency and cell integrity | Cutoffs vary by protocol; low outliers may be damaged or dead cells, high outliers doublets [5] |
| Genes per Cell | Reflects library complexity | Cells with low gene counts may be compromised cells or empty droplets [7] |
| Mitochondrial RNA % | Marker of cellular stress and apoptosis | Cutoffs of 5-10% are common but tissue-dependent; elevated percentages indicate low-quality cells [7] [5] |
| Ribosomal RNA % | May indicate technical bias | High percentages may necessitate filtering [7] |
| UMI Counts per Cell | Measures sequencing depth and capture efficiency | Varies by cell type and protocol [5] |
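The metrics in the table can be computed and applied as below. This is a minimal sketch, assuming a per-cell `{gene: UMI count}` dictionary and human gene symbols in which mitochondrial genes carry the `MT-` prefix (mouse annotations typically use `mt-`). The helper names and the default cutoffs (200 genes, 10% mitochondrial) are illustrative assumptions, not recommendations from the cited sources.

```python
def qc_metrics(cell_counts, mito_prefix="MT-"):
    """QC metrics for one cell from its {gene: UMI count} dict.
    Assumes human gene symbols, where mitochondrial genes start with "MT-"."""
    total = sum(cell_counts.values())
    n_genes = sum(1 for c in cell_counts.values() if c > 0)
    mito = sum(c for g, c in cell_counts.items() if g.startswith(mito_prefix))
    pct_mito = 100.0 * mito / total if total else 0.0
    return {"total_umis": total, "n_genes": n_genes, "pct_mito": pct_mito}

def filter_cells(matrix, min_genes=200, max_pct_mito=10.0):
    """Keep cells with enough detected genes and a low mitochondrial fraction.
    The defaults are illustrative starting points, not universal cutoffs."""
    kept = {}
    for cell, counts in matrix.items():
        m = qc_metrics(counts)
        if m["n_genes"] >= min_genes and m["pct_mito"] <= max_pct_mito:
            kept[cell] = counts
    return kept

matrix = {
    "cell_1": {"CD3E": 12, "CD2": 7, "MT-CO1": 1},     # 5% mitochondrial
    "cell_2": {"MS4A1": 8, "CD79A": 6, "MT-CO1": 1},   # ~6.7% mitochondrial
    "cell_3": {"MT-CO1": 9, "MT-ND1": 4, "ACTB": 1},   # ~93% mitochondrial: likely dying
}
# Toy data, so the gene threshold is lowered from its default.
kept = filter_cells(matrix, min_genes=2, max_pct_mito=10.0)
print(sorted(kept))  # ['cell_1', 'cell_2']
```

Because appropriate cutoffs depend on tissue and protocol, it is usually worth plotting these metrics before fixing any threshold.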

Data Processing and Analysis Pipeline

The computational analysis of scRNA-seq data involves multiple stages that transform raw sequencing data into biological insights.

Key analytical steps include:

- Data Normalization: Corrects for technical variations in sequencing depth between cells using methods like SCTransform [5]

- Feature Selection: Identifies highly variable genes that drive biological heterogeneity [7]

- Dimensionality Reduction: Compresses high-dimensional data with PCA, then embeds cells in 2D or 3D space with t-SNE or UMAP for visualization [5] [8]

- Clustering: Groups cells based on transcriptional similarity to identify cell types and states [5]
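As a concrete illustration of depth normalization, the sketch below implements simple log-normalization: scale each cell to 10,000 total counts, then apply log1p. This is the scheme behind Seurat's default `LogNormalize` method; it is not SCTransform, which instead fits a regularized negative binomial model. The function name is hypothetical.

```python
import math

def log_normalize(cell_counts, scale_factor=10_000):
    """Scale one cell's counts to `scale_factor` total, then take log1p."""
    total = sum(cell_counts.values())
    return {gene: math.log1p(count / total * scale_factor)
            for gene, count in cell_counts.items()}

# Two cells with identical composition but a 4-fold difference in sequencing depth.
deep = {"CD3E": 20, "ACTB": 180}     # 200 UMIs
shallow = {"CD3E": 5, "ACTB": 45}    # 50 UMIs
a, b = log_normalize(deep), log_normalize(shallow)
print(round(a["CD3E"], 3), round(b["CD3E"], 3))  # 6.909 6.909
```

After normalization the two cells are indistinguishable, which is the point: the depth difference was technical, not biological.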

Applications in Exploratory Data Analysis

Resolving Cellular Heterogeneity

scRNA-seq excels at deconvoluting complex tissues into constituent cell types and states. Unlike bulk RNA-seq that averages across populations, scRNA-seq can identify novel cell types, rare populations, and continuous transitional states [2] [4]. In oncology, this has enabled the discovery of rare drug-resistant subpopulations in melanoma and breast cancer that were undetectable with bulk approaches [2]. Similarly, in immunology, scRNA-seq has revealed previously unappreciated diversity in T cell states and activation patterns [1].

Reconstructing Developmental Trajectories

Pseudotemporal ordering algorithms applied to scRNA-seq data can reconstruct cellular differentiation pathways and lineage relationships without time-series experiments [3] [2]. This approach has been successfully applied to model embryonic development, cancer evolution, and cellular responses to perturbations, providing insights into the regulatory networks that control cell fate decisions [3].
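To show how a pseudotime can emerge from transcriptional similarity alone, the sketch below builds a k-nearest-neighbour graph on low-dimensional cell coordinates and takes each cell's shortest-path distance from a user-chosen root cell, rescaled to [0, 1]. This is a deliberately simplified illustration, not the algorithm of any particular tool (Monocle 3, for example, learns a principal graph); the function names, `k`, and the toy coordinates are assumptions.

```python
import heapq
import math

def knn_graph(points, k):
    """Symmetric k-nearest-neighbour graph; edge weight = Euclidean distance."""
    graph = {i: {} for i in range(len(points))}
    for i, p in enumerate(points):
        nearest = sorted((math.dist(p, q), j) for j, q in enumerate(points) if j != i)[:k]
        for d, j in nearest:
            graph[i][j] = d
            graph[j][i] = d
    return graph

def pseudotime(points, root, k=2):
    """Dijkstra shortest-path distance from `root`, rescaled to [0, 1]."""
    graph = knn_graph(points, k)
    dist = {root: 0.0}
    heap = [(0.0, root)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue                          # stale heap entry
        for v, w in graph[u].items():
            if d + w < dist.get(v, math.inf):
                dist[v] = d + w
                heapq.heappush(heap, (d + w, v))
    scale = max(dist.values()) or 1.0
    # Cells unreachable from the root get infinite pseudotime.
    return [dist.get(i, math.inf) / scale for i in range(len(points))]

# Toy 2-D embedding of five cells along a differentiation path; cell 0 is the root.
cells = [(0, 0), (1, 0.2), (2, 0.1), (3, 0.4), (4, 0.3)]
print([round(t, 2) for t in pseudotime(cells, root=0)])
```

The choice of root matters: pseudotime is only defined relative to a starting state, which is usually chosen from known progenitor markers.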

Integrating with Spatial Transcriptomics

While scRNA-seq provides deep molecular characterization of individual cells, it loses native spatial context. Emerging spatial transcriptomics technologies complement scRNA-seq by preserving information about where cells are located within tissues [3] [2]. Computational integration of scRNA-seq with spatial data enables mapping cell types to their tissue locations, revealing how spatial organization influences cellular function and cell-cell communication [2].

Single-cell RNA sequencing has fundamentally transformed our approach to transcriptomic analysis by providing unprecedented resolution to examine cellular heterogeneity and dynamics. The transition from bulk averages to single-cell resolution has enabled discoveries across biomedical research, from characterizing novel cell types to understanding disease mechanisms and identifying therapeutic targets. As the technology continues to evolve with improvements in throughput, sensitivity, and spatial context integration, scRNA-seq will remain a cornerstone of exploratory biological research and precision medicine initiatives. For researchers and drug development professionals, mastering scRNA-seq technologies and analytical approaches is essential for leveraging its full potential in unraveling biological complexity and advancing human health.

Single-cell RNA sequencing (scRNA-seq) has revolutionized biological research by enabling the quantification of gene expression within individual cells. This high-resolution view allows researchers to dissect the intricate cellular heterogeneity of complex tissues, moving beyond the limitations of bulk RNA sequencing, which only provides an averaged transcriptome profile [9]. Since its inception in 2009, scRNA-seq has evolved into a powerful tool for exploratory data analysis, fundamentally changing how we study somatic cell evolution in health and disease [9]. The core strength of scRNA-seq lies in its ability to uncover new biological insights without prior hypotheses. This article details its three key applications in exploratory research: discovering rare cell types, deconvoluting complex tissues, and mapping dynamic cell states, providing a technical guide for researchers and drug development professionals.

The standard scRNA-seq workflow begins with sample preparation and single-cell dissociation, followed by single-cell capture using platforms like fluorescence-activated cell sorting (FACS) or droplet-based systems (e.g., 10x Genomics Chromium) [9]. After cell capture, transcripts are barcoded, reverse-transcribed into cDNA, and amplified before library construction and high-throughput sequencing [9]. The resulting data undergoes a rigorous bioinformatic pipeline including quality control, normalization, dimensionality reduction, and clustering, enabling the identification of distinct cell populations and states [10]. This process provides the foundation for the advanced applications discussed herein, forming an essential component of modern molecular biology and precision medicine research [9].

Discovering Rare Cell Types

The unbiased nature of scRNA-seq makes it uniquely powerful for identifying rare cell populations that constitute less than 1% of a tissue's cellular makeup but often play critically important biological roles, such as stem cells, transitional progenitors, or rare immune cell subsets.

Technical Considerations for Rare Cell Discovery

Successfully identifying rare cell types requires careful experimental design and analysis. Key technical considerations include sequencing depth, cell throughput, and analytical strategies. Table 1 summarizes the primary computational tools used for rare cell identification.

Table 1: Computational Tools for Rare Cell Type Discovery

| Tool/Method | Underlying Algorithm | Key Strength for Rare Cells | Reference |
|---|---|---|---|
| Seurat | Graph-based clustering | Widely used tool for identifying distinct populations | [9] |
| STRIDE | Topic modeling (LDA) | Identifies latent "topics" of gene expression | [11] |
| TSCS | Topographic single-cell sequencing | Preserves spatial context for rare cells | [9] |
| SCINA | Marker-based annotation | Uses predefined signatures for cell typing | [12] |

Experimental Protocol for Rare Cell Identification

A robust experimental workflow is essential for reliable rare cell discovery:

- Sample Preparation: Optimize dissociation protocols to maintain viability while preventing stress-induced transcriptional changes. Include viability markers during cell capture [9].

- Cell Capture and Library Preparation: Use high-throughput droplet-based methods (e.g., 10x Genomics) to profile tens of thousands of cells, ensuring adequate sampling of rare populations. Consider targeted approaches for enhanced sensitivity to specific gene panels of interest [13].

- Sequencing: Increase sequencing depth compared to standard studies (aim for 50,000-100,000 reads/cell) to improve detection of lowly expressed marker genes characteristic of rare subsets [10].

- Quality Control: Filter out low-quality cells using metrics like total UMIs, genes detected, and mitochondrial percentage, but apply conservative thresholds to avoid excluding genuine rare populations with naturally low RNA content [10].

- Dimensionality Reduction and Clustering: Apply PCA followed by graph-based clustering on highly variable genes. Use UMAP or t-SNE for visualization. Employ multi-step clustering approaches with increasing resolution parameters to resolve rare subsets from dominant populations [9].

- Differential Expression and Annotation: Identify cluster-specific marker genes. Compare expression against existing databases and literature to annotate novel populations. Validate findings using orthogonal methods like FISH or flow cytometry [9].
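Whether a design samples enough cells of a rare population can be estimated with a binomial model. The sketch below assumes cells are captured at random in proportion to their frequency (ignoring dissociation and capture biases) and computes the probability of recovering at least a chosen number of cells from a population. The 0.5% frequency, the 20-cell minimum, and the function name are hypothetical; the last lines convert the 50,000 reads/cell target above into a total read budget.

```python
def prob_at_least(n_cells, freq, min_count):
    """P(X >= min_count) for X ~ Binomial(n_cells, freq): the chance that a
    population at frequency `freq` contributes at least `min_count` of the
    `n_cells` profiled cells (assumes unbiased random sampling)."""
    pmf = (1.0 - freq) ** n_cells                 # P(X = 0)
    cdf = 0.0
    for i in range(min_count):
        cdf += pmf                                # accumulate P(X <= i)
        pmf *= (n_cells - i) / (i + 1) * freq / (1.0 - freq)   # P(X = i + 1)
    return 1.0 - cdf

# Hypothetical design question: a 0.5% population, and we want >= 20 of its cells.
for n in (2_000, 5_000, 10_000):
    print(n, "cells:", round(prob_at_least(n, 0.005, 20), 3))

# Sequencing budget at the 50,000 reads/cell target.
n_cells, reads_per_cell = 10_000, 50_000
print("total reads:", n_cells * reads_per_cell)  # total reads: 500000000
```

The binomial model gives an optimistic upper bound. Rare populations that are fragile during dissociation will be under-sampled relative to it.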

The following diagram illustrates the core analytical workflow for discovering rare cell types from raw single-cell data:

Deconvoluting Complex Tissues

Spatial transcriptomics technologies have revolutionized our ability to study gene expression profiles while retaining crucial spatial information within intact tissue sections [11]. However, most platforms have limited resolution, with capture spots containing signals from multiple cells, necessitating computational deconvolution to deduce the underlying cellular composition [11].

Computational Deconvolution Approaches

Deconvolution methods leverage single-cell RNA-seq data as a reference to resolve spatial mixtures into constituent cell types. Table 2 classifies the primary algorithmic strategies for spatial deconvolution.

Table 2: Categories of Spatial Transcriptomics Deconvolution Methods

| Method Category | Representative Tools | Key Principles | Best For |
|---|---|---|---|
| Probabilistic Models | cell2location, RCTD, CARD, STRIDE | Uses statistical models to estimate likelihood of cell type presence | Comprehensive tissue mapping, uncertainty estimation |
| NMF-Based Methods | SPOTlight, SpatialDWLS | Matrix factorization to identify latent components | Patterns of co-occurring cell types |
| Deep Learning Frameworks | Tangram, TransformerST | Neural networks learn mapping functions | Complex spatial patterns, large datasets |
| Optimal Transport | SpaOTsc, novoSpaRC | Couples cells to spatial locations by minimizing a transport cost | Developmental processes, trajectory mapping |
| Graph-Based Methods | DSTG, SD2, SpiceMix | Leverages spatial neighborhood relationships | Tissue niches, cell-cell interactions |
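The idea shared by reference-based deconvolution can be illustrated with non-negative least squares. A spot's expression is modeled as a non-negative combination of cell-type signature profiles derived from scRNA-seq, and the fitted weights are normalized to proportions. This sketch is not the algorithm of any tool in the table; probabilistic methods such as RCTD or cell2location add count-based likelihoods and technical effects. The function name, toy signatures, and projected-gradient solver are assumptions chosen to keep it dependency-free.

```python
def deconvolve(spot, signatures, n_iter=2000):
    """Estimate cell-type proportions for one spot, assuming spot ~= signatures @ w, w >= 0.

    spot:       expression of G genes measured in the spot.
    signatures: G x K matrix (list of rows); column k is the mean expression
                profile of cell type k, e.g. averaged from an annotated scRNA-seq reference.
    Solves non-negative least squares by projected gradient descent.
    """
    G, K = len(signatures), len(signatures[0])
    # A step of 1 / ||S||_F^2 is no larger than 1 / (largest eigenvalue of S^T S), so it is stable.
    lr = 1.0 / sum(v * v for row in signatures for v in row)
    w = [0.0] * K
    for _ in range(n_iter):
        residual = [sum(signatures[g][k] * w[k] for k in range(K)) - spot[g] for g in range(G)]
        grad = [sum(signatures[g][k] * residual[g] for g in range(G)) for k in range(K)]
        w = [max(0.0, w[k] - lr * grad[k]) for k in range(K)]  # step, then project onto w >= 0
    total = sum(w)
    return [x / total for x in w] if total > 0 else w

# Toy reference: rows = genes (CD3E, MS4A1, ACTB), columns = (T cell, B cell).
signatures = [[10.0, 0.0], [0.0, 8.0], [5.0, 5.0]]
spot = [30.0, 8.0, 20.0]      # simulated mix of 3 T cells and 1 B cell
props = deconvolve(spot, signatures)
print([round(p, 3) for p in props])  # [0.75, 0.25]
```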

Detailed Methodology: TACIT for Spatial Multiomics

TACIT (Threshold-based Assignment of Cell Types from Multiplexed Imaging Data) is an unsupervised algorithm that exemplifies modern approaches to cell-type assignment in spatial multiomics data [12]. The method operates through several key stages:

- Input Processing: TACIT accepts segmented cell boundaries from multiplexed imaging data (spatial transcriptomics or proteomics) and generates a CELLxFEATURE matrix of normalized expression values [12].

- MicroCluster Formation: Cells are first clustered into highly homogeneous MicroClusters (MCs) using graph-based clustering, capturing small cell communities representing 0.1-0.5% of the total population [12].

- Cell Type Relevance Scoring: For each cell, TACIT calculates Cell Type Relevance scores (CTRs) by multiplying the normalized marker intensity vector with predefined cell type signature vectors, quantitatively evaluating congruence with considered cell types [12].

- Threshold Learning: The algorithm learns a positivity threshold that separates cells with strong positive signals from background noise by applying segmental regression to the ranked median CTRs across MicroClusters [12].

- Ambiguity Resolution: Initial labeling can assign multiple cell types to a single cell. TACIT employs a k-nearest neighbors (k-NN) algorithm on a feature subspace relevant to the mixed cell type category to resolve these ambiguities [12].

- Quality Assessment: Final annotations are evaluated using p-value and fold change calculations to quantify marker enrichment strength for each cell type [12].
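The CTR and threshold-learning steps can be sketched as follows. This is a simplified, hypothetical reconstruction for illustration, not TACIT's published implementation. It assumes a binary signature vector, uses a plain two-segment least-squares fit to stand in for the segmental regression, and takes the first value of the upper segment as the threshold. MicroCluster formation and k-NN ambiguity resolution are omitted.

```python
from statistics import median

def ctr(intensities, signature):
    """Cell Type Relevance score: normalized marker intensities dotted with a signature vector."""
    return sum(x * s for x, s in zip(intensities, signature))

def sse_line(xs, ys):
    """Residual sum of squares of the least-squares line through (xs, ys)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return sum((y - my - slope * (x - mx)) ** 2 for x, y in zip(xs, ys))

def learn_threshold(cluster_ctrs, min_seg=2):
    """Fit two line segments to ranked per-MicroCluster median CTRs and return
    the first CTR above the best breakpoint as the positivity threshold."""
    ranked = sorted(median(scores) for scores in cluster_ctrs)
    xs = list(range(len(ranked)))
    def total_sse(b):
        return sse_line(xs[:b], ranked[:b]) + sse_line(xs[b:], ranked[b:])
    best = min(range(min_seg, len(ranked) - min_seg + 1), key=total_sse)
    return ranked[best]

# Hypothetical T-cell signature over markers (CD3, CD4, CD20).
signature = [1, 1, 0]
print(ctr([0.9, 0.8, 0.05], signature))   # strong CD3/CD4 signal
# Per-MicroCluster CTRs: four background clusters, two T-cell-like clusters.
cluster_ctrs = [[0.1, 0.2], [0.15, 0.2], [0.2, 0.3], [0.25, 0.3], [1.5, 1.7], [1.6, 1.8]]
threshold = learn_threshold(cluster_ctrs)
print(threshold)
```

Cells whose CTR for a type exceeds the learned threshold would be called positive for it. A cell that is positive for several types is the ambiguous case that the k-NN step resolves.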

In benchmark evaluations using human colorectal cancer and healthy intestine datasets, TACIT outperformed existing methods (CELESTA, SCINA, and Louvain) with a weighted F1 score of 0.75. It showed particular strength in identifying rare cell types, where it achieved a correlation of R = 0.76 compared with R = 0.62 for the next best method [12].

The following diagram illustrates the spatial deconvolution process that infers cell type composition from mixed spatial transcriptomics spots:

Mapping Cell States

Beyond identifying static cell types, scRNA-seq enables the mapping of continuous cellular transitions, such as differentiation trajectories, immune activation, or disease progression. These dynamic processes represent a fundamental aspect of tissue function in both development and disease.

Trajectory Inference Methods

Trajectory inference methodologies reconstruct cellular dynamics by ordering cells along pseudotemporal trajectories based on transcriptomic similarity:

- Pseudotemporal Ordering: Algorithms project cells into a lower-dimensional space where the structure reveals progression paths. Cells are then ordered along these paths based on transcriptional similarity, creating a "pseudotime" metric from initial to advanced states [9].

- Branching Analysis: Advanced tools can reconstruct complex lineage decisions with branching points, identifying genes that drive fate decisions and those that become specific to particular lineages [9].

- RNA Velocity: This approach analyzes spliced versus unspliced mRNA ratios to predict future cell states, going beyond static snapshots to model the direction and speed of transcriptional changes [9].
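The steady-state model behind RNA velocity can be written in a few lines. Given unspliced (u) and spliced (s) counts for one gene, a degradation ratio gamma is fitted from u ≈ gamma * s, and each cell's velocity is v = u - gamma * s. This sketch fits gamma on all cells, whereas velocyto's steady-state approach fits it on cells in the extreme quantiles; the function names and toy counts are hypothetical.

```python
def fit_gamma(unspliced, spliced):
    """Ratio gamma from u ~= gamma * s (least squares through the origin).
    velocyto's steady-state model fits this on extreme-quantile cells;
    this sketch uses all cells for brevity."""
    return sum(u * s for u, s in zip(unspliced, spliced)) / sum(s * s for s in spliced)

def velocity(unspliced, spliced, gamma):
    """Per-cell velocity v = u - gamma * s: > 0 suggests the gene is being
    induced, < 0 that it is being repressed."""
    return [u - gamma * s for u, s in zip(unspliced, spliced)]

# Toy counts for one gene: four cells at steady state (u = 0.5 * s) ...
u_ss, s_ss = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]
gamma = fit_gamma(u_ss, s_ss)
print(gamma)                                    # 0.5
# ... and two cells off the steady-state line.
print(velocity([5.0, 1.0], [4.0, 8.0], gamma))  # [3.0, -3.0]
```

The first off-line cell has more unspliced RNA than steady state predicts, so the gene is being switched on. The second has less, so it is being switched off.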

Experimental Framework for Cell State Mapping

A comprehensive approach to mapping cell states involves:

- Time-Series Sampling: Collect samples across multiple time points to capture dynamic processes, with careful experimental design to minimize batch effects [9].

- Multiomic Integration: Combine scRNA-seq with other modalities (epigenomics, proteomics) to gain mechanistic insights into regulatory drivers of state transitions [12].

- Spatiotemporal Contextualization: Integrate with spatial transcriptomics data to understand how cellular states relate to tissue location and microenvironmental cues [12] [11].

- Validation: Employ CRISPR-based perturbations or pharmacological interventions to functionally validate predicted regulatory relationships and state transitions [9].

The following workflow diagram illustrates the process of mapping cellular trajectories from single-cell data:

The Scientist's Toolkit: Essential Research Reagents and Platforms

Successful single-cell RNA sequencing studies require careful selection of platforms and reagents tailored to specific research goals. The choice between whole transcriptome and targeted approaches is particularly critical, with each offering distinct advantages [13].

Table 3: Research Reagent Solutions for Single-Cell RNA-Seq Applications

| Tool/Category | Example Products | Function | Considerations |
|---|---|---|---|
| Whole Transcriptome Platforms | 10x Genomics Chromium, Smart-seq2 | Comprehensive gene expression profiling | Ideal for discovery; higher cost per cell; targets the whole transcriptome (~20,000 genes) [13] |
| Targeted Gene Expression | 10x Genomics targeted gene expression panels, custom panels | Focused profiling of specific gene sets | Superior sensitivity for low-abundance targets; cost-effective for large studies [13] |
| Spatial Transcriptomics | 10x Visium, Slide-seq, MERFISH | Gene expression with spatial context | Resolves tissue organization; varying resolution (spot vs. single-cell) [11] |
| Single-Cell Multiomics | 10x Multiome, TEA-seq, CITE-seq | Simultaneous measurement of multiple modalities | Links gene expression to surface proteins or chromatin accessibility [12] |
| Bioinformatics Suites | Seurat, Scanpy, Bioconductor | Data processing and analysis | Essential for interpretation; requires computational expertise [9] [14] |

The exploratory analysis of single-cell RNA-seq data has fundamentally transformed our ability to discover rare cell types, deconvolute complex tissues, and map dynamic cell states. These three key applications provide unprecedented resolution for understanding cellular heterogeneity in development, health, and disease. As the field continues to evolve with advancements in spatial multiomics, computational methods, and targeted profiling approaches, single-cell technologies are poised to become increasingly integral to both basic research and translational applications. The integration of artificial intelligence and machine learning with single-cell multiomics offers particular promise for overcoming current analytical challenges and extracting deeper biological insights from these complex datasets [9]. For researchers embarking on single-cell studies, careful consideration of experimental design, platform selection, and analytical strategies—as outlined in this technical guide—will be essential for generating robust, biologically meaningful findings that advance our understanding of cellular systems.

Single-cell RNA sequencing (scRNA-seq) has fundamentally transformed biomedical research by enabling the precise measurement of gene expression in individual cells. This technology moves beyond the limitations of bulk RNA sequencing, which averages expression across thousands of cells, to reveal the profound heterogeneity within seemingly uniform cell populations [15]. Such resolution is particularly valuable for studying complex systems like the immune system, the brain, and tumors, where it can identify rare cell types and trace developmental trajectories [15]. This guide provides a comprehensive technical overview of the complete scRNA-seq workflow, framed within the context of exploratory data analysis, which is essential for researchers and drug development professionals aiming to leverage this powerful technology.

The Integrated scRNA-Seq Workflow: From Physical to Digital

The journey from a biological sample to visual insights involves a complex, integrated pipeline of laboratory and computational steps. The diagram below synthesizes these parallel physical and digital processes into a unified workflow.

Experimental Phase: From Tissue to Sequencing Library

Sample Dissociation and Preparation

The workflow begins with the creation of a high-quality single-cell suspension from your sample source, which can include fresh tissues, frozen specimens, or even FFPE-preserved materials [16]. The dissociation protocol must be carefully optimized for each tissue type to maximize cell viability while preserving RNA integrity. As emphasized by 10x Genomics, "garbage in, garbage out" is a critical principle: the quality of your final data is fundamentally constrained by the initial sample quality [16]. For blood samples, this step may involve density gradient centrifugation to isolate target populations such as peripheral blood mononuclear cells (PBMCs) [17].

Cell Partitioning and mRNA Barcoding

Modern high-throughput scRNA-seq platforms, such as those from 10x Genomics, use microfluidic technology to partition individual cells into nanoliter-scale droplets called GEMs (Gel Beads-in-Emulsions) [16]. Within each GEM, a unique combination of molecular barcodes is attached to every mRNA molecule from a single cell. This process involves:

- Cell Barcoding: All mRNA molecules from the same cell receive an identical 10x Barcode, allowing bioinformatic tracing back to their cellular origin.

- Molecular Barcoding: Each mRNA molecule receives a Unique Molecular Identifier (UMI) to enable accurate quantification and distinguish biological expression from amplification artifacts [16].

Different chemistries exist for this barcoding step, including 3' or 5' gene expression assays that capture transcript ends, and whole transcriptome approaches [16].
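The barcode layout can be made concrete with a short parser. This sketch assumes the 10x Genomics 3' v3 Read 1 structure, with a 16 bp cell barcode followed by a 12 bp UMI (v2 chemistry uses a 10 bp UMI), and a toy two-entry whitelist. The helper names are hypothetical. Cell Ranger's own correction of barcodes one mismatch from the whitelist is more involved (for example, it takes base qualities into account), so treat this as illustration only.

```python
BARCODE_LEN, UMI_LEN = 16, 12   # assumed 10x 3' v3 Read 1 layout

def parse_read1(seq):
    """Split a Read 1 sequence into (cell barcode, UMI); any remaining bases are ignored."""
    return seq[:BARCODE_LEN], seq[BARCODE_LEN:BARCODE_LEN + UMI_LEN]

def correct_barcode(barcode, whitelist):
    """Return the whitelisted barcode that matches exactly or is the only one a
    single mismatch away; otherwise None (the read would be discarded)."""
    if barcode in whitelist:
        return barcode
    hits = set()
    for i, base in enumerate(barcode):
        for alt in "ACGT":
            if alt != base:
                candidate = barcode[:i] + alt + barcode[i + 1:]
                if candidate in whitelist:
                    hits.add(candidate)
    return hits.pop() if len(hits) == 1 else None

whitelist = {"AAACCTGAGAAACCAT", "AAACCTGAGAAACCGC"}   # toy whitelist
read1 = "AAACCTGAGAAACCAA" + "TTGCAGGCATCA" + "TTTTTTTTTT"
barcode, umi = parse_read1(read1)
print(barcode, umi)                          # AAACCTGAGAAACCAA TTGCAGGCATCA
print(correct_barcode(barcode, whitelist))   # AAACCTGAGAAACCAT
```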

Library Preparation and Sequencing

The barcoded cDNA fragments undergo amplification via Polymerase Chain Reaction (PCR) to generate sufficient material for sequencing [16]. Sample index sequences are then added to allow multiplexing of multiple libraries in a single sequencing run. The final library preparation step adds platform-specific adapter sequences (e.g., P5 and P7 for Illumina platforms) required for next-generation sequencing [16]. The resulting sequencing-ready libraries undergo quality control before being loaded onto high-throughput sequencers.

Computational Phase: From Raw Data to Biological Insights

Raw Data Processing and Quality Control

Sequencing raw data (FASTQ files) undergoes alignment and processing to generate gene expression matrices. Cell Ranger is the established processing pipeline for 10x Genomics data, employing the STAR aligner to map reads to a reference genome and generate a count matrix where each row represents a gene and each column represents a cell [18].
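The sketch below collapses toy (cell barcode, UMI, gene) read tuples into a genes × cells matrix in the orientation described above. It also computes sequencing saturation, which Cell Ranger reports as, to our understanding, 1 minus the ratio of unique molecules to total reads. Real Cell Ranger output is stored as a sparse matrix, and its saturation metric is computed only over confidently mapped reads with valid barcodes and UMIs; the function names here are hypothetical.

```python
from collections import Counter

def count_matrix(reads):
    """Genes x cells UMI matrix (rows = genes, columns = cells) from
    (cell_barcode, umi, gene) read tuples; duplicate reads count once."""
    molecules = set(reads)
    umi_counts = Counter((cell, gene) for cell, _, gene in molecules)
    cells = sorted({cell for cell, _ in umi_counts})
    genes = sorted({gene for _, gene in umi_counts})
    matrix = [[umi_counts[(c, g)] for c in cells] for g in genes]
    return genes, cells, matrix

def sequencing_saturation(reads):
    """Fraction of reads that duplicate an already-seen molecule:
    1 - (unique (cell, UMI, gene) combinations / total reads)."""
    return 1.0 - len(set(reads)) / len(reads)

reads = [
    ("c1", "u1", "CD3E"), ("c1", "u1", "CD3E"), ("c1", "u2", "CD3E"), ("c1", "u2", "CD3E"),
    ("c2", "u1", "MS4A1"), ("c2", "u1", "MS4A1"), ("c2", "u3", "ACTB"), ("c2", "u3", "ACTB"),
]
genes, cells, matrix = count_matrix(reads)
print(genes, cells)                  # ['ACTB', 'CD3E', 'MS4A1'] ['c1', 'c2']
print(matrix)                        # [[0, 1], [2, 0], [0, 1]]
print(sequencing_saturation(reads))  # 0.5
```

A saturation of 0.5 means half the reads added no new molecules. Deeper sequencing of this library would mostly re-read molecules already observed.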

Quality control is critical to ensure downstream analyses reflect biology rather than technical artifacts. The table below summarizes key QC metrics and their interpretation.

Table 1: Essential Quality Control Metrics for scRNA-seq Data

| QC Metric | Description | Interpretation Guidelines | Potential Issues Indicated |
|---|---|---|---|
| Count Depth | Total UMI counts per cell | Too low: damaged cells; Too high: doublets | Cell viability, amplification efficiency |
| Detected Genes | Number of genes detected per cell | Tissue/protocol dependent; reference similar studies | Cell integrity, sequencing depth |
| Mitochondrial % | Fraction of reads mapping to mitochondrial genes | >10-20% may indicate stressed/dying cells | Cellular stress, apoptosis |
| Doublet Rate | Percentage of multiplets in dataset | Platform-dependent (0.8-6% for 10x) | Cell loading concentration issues |

Based on [17]
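The link between cell loading concentration and doublet rate follows from Poisson statistics. Suppose the number of cells per droplet is Poisson-distributed with mean lambda. Then the fraction of cell-containing droplets that hold two or more cells is (1 - e^(-lambda) - lambda * e^(-lambda)) / (1 - e^(-lambda)), which is roughly lambda / 2 for small lambda. The sketch below evaluates this formula. It ignores bead-loading statistics and is not 10x Genomics' published rate model, so use it only to see why lowering the mean number of cells per droplet reduces multiplets.

```python
import math

def multiplet_fraction(lam):
    """Share of cell-containing droplets with >= 2 cells if cells per droplet ~ Poisson(lam)."""
    p0 = math.exp(-lam)          # P(empty droplet)
    p1 = lam * p0                # P(exactly one cell)
    return (1.0 - p0 - p1) / (1.0 - p0)

for lam in (0.02, 0.05, 0.1, 0.2):
    print(f"mean cells per droplet {lam}: {100 * multiplet_fraction(lam):.1f}% multiplets")
```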

Tools like Seurat and Scater provide functions to calculate and visualize these metrics, enabling researchers to set appropriate filtering thresholds [17]. Additionally, specialized tools like CellBender use deep learning to identify and remove ambient RNA noise—a common issue in droplet-based technologies [18].

Normalization, Integration, and Feature Selection

Normalization corrects for technical variations between cells, such as differences in sequencing depth or capture efficiency [15]. For UMI-based protocols, a common approach is log-normalization, in which each cell's counts are scaled to a fixed total and then log-transformed. In parallel, dataset integration may be necessary when combining data from multiple batches, samples, or experimental conditions. Methods like Harmony effectively correct batch effects while preserving biological variation, which is particularly important for large cohort studies [18].

Feature selection identifies highly variable genes (HVGs) that drive heterogeneity across the cell population. These genes, which exhibit more variance than expected by technical noise alone, form the feature set for downstream dimensionality reduction and clustering.
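A minimal dispersion-based selection illustrates the idea: rank genes by variance divided by mean across cells and keep the top n. This is simpler than Seurat's `vst` method or Scanpy's binned, normalized dispersions, both of which correct for the mean-variance trend. The function name and toy values are hypothetical.

```python
from statistics import mean, pvariance

def highly_variable_genes(expr, n_top):
    """Rank genes by dispersion (variance / mean) across cells.

    expr: {gene: [expression in each cell]}; genes with zero mean are skipped.
    """
    dispersion = {}
    for gene, values in expr.items():
        m = mean(values)
        if m > 0:
            dispersion[gene] = pvariance(values) / m
    return sorted(dispersion, key=dispersion.get, reverse=True)[:n_top]

expr = {
    "ACTB": [10, 11, 10, 9],    # high but stable: housekeeping
    "CD3E": [0, 8, 0, 9],       # on in some cells only: informative
    "MS4A1": [7, 0, 6, 0],      # on in the complementary cells
    "GAPDH": [5, 5, 6, 5],
    "NOTDETECTED": [0, 0, 0, 0],
}
print(highly_variable_genes(expr, 2))  # ['CD3E', 'MS4A1']
```

Highly expressed but uniform genes such as `ACTB` are excluded because they carry little information about which cells differ.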

Dimensionality Reduction and Cell Clustering

Due to the high-dimensional nature of scRNA-seq data (measuring thousands of genes per cell), dimensionality reduction techniques are essential for visualization and analysis. Principal Component Analysis (PCA) is typically applied first to capture the main axes of variation [15]. Subsequently, non-linear methods like UMAP (Uniform Manifold Approximation and Projection) or t-SNE (t-Distributed Stochastic Neighbor Embedding) create two-dimensional representations for visualization [18].
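For intuition about the PCA step, the sketch below computes principal component scores with power iteration and deflation on the gene-gene covariance matrix, using only the standard library. It is meant for toy inputs only: real pipelines use truncated or randomized SVD on sparse matrices. The function name and example values are assumptions.

```python
import math
import random

def pca_scores(X, n_components=2, n_iter=200, seed=0):
    """PCA via power iteration with deflation on the gene-gene covariance matrix.

    X: cells x genes list of lists (e.g. log-normalized HVG expression).
    Returns each cell's scores on the top `n_components` principal components.
    """
    n, p = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(p)]
    Xc = [[row[j] - means[j] for j in range(p)] for row in X]     # center each gene
    cov = [[sum(r[a] * r[b] for r in Xc) / (n - 1) for b in range(p)] for a in range(p)]
    rng = random.Random(seed)
    components = []
    for _ in range(n_components):
        v = [rng.random() for _ in range(p)]
        for _ in range(n_iter):                                   # power iteration
            w = [sum(cov[a][b] * v[b] for b in range(p)) for a in range(p)]
            norm = math.sqrt(sum(x * x for x in w))
            v = [x / norm for x in w]
        # Rayleigh quotient = variance along v; remove it before the next component.
        lam = sum(v[a] * cov[a][b] * v[b] for a in range(p) for b in range(p))
        cov = [[cov[a][b] - lam * v[a] * v[b] for b in range(p)] for a in range(p)]
        components.append(v)
    return [[sum(r[j] * c[j] for j in range(p)) for c in components] for r in Xc]

# Six toy cells x three genes: two groups with opposite expression patterns.
X = [[5, 4, 0], [6, 5, 1], [5, 5, 0], [0, 1, 6], [1, 0, 5], [0, 0, 6]]
scores = pca_scores(X)
print([round(s[0], 2) for s in scores])   # PC1 separates the two groups (sign is arbitrary)
```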

Cell clustering groups cells with similar expression profiles, potentially corresponding to distinct cell types or states. Graph-based clustering methods (as implemented in Seurat and Scanpy) are widely used, with resolution parameters controlling the granularity of the clusters identified [18].
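The resolution parameter's effect on granularity can be illustrated with the modularity score that Louvain- and Leiden-style optimizers try to maximize. In the resolution-scaled form used below, Q = (1/2m) Σ_ij [A_ij − γ k_i k_j / 2m] δ(c_i, c_j). Here A is the cell-cell adjacency matrix (for example, a kNN graph), k_i are node degrees, m is the number of edges, and γ is the resolution. The function only scores a given partition; it does not search for one. The function name and toy graph are assumptions.

```python
import numpy as np


def modularity(adj: np.ndarray, labels, resolution: float = 1.0) -> float:
    """Resolution-scaled modularity of a partition of an undirected graph."""
    adj = np.asarray(adj, dtype=float)
    labels = np.asarray(labels)
    degree = adj.sum(axis=1)
    two_m = degree.sum()  # each undirected edge is counted twice
    same_cluster = labels[:, None] == labels[None, :]
    # Null-model expectation of edges, scaled by the resolution parameter.
    expected = resolution * np.outer(degree, degree) / two_m
    return float(((adj - expected) * same_cluster).sum() / two_m)


# Two triangles (cells 0-2 and 3-5) joined by a single edge between cells 2 and 3.
adj = np.zeros((6, 6))
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]:
    adj[i, j] = adj[j, i] = 1.0

split = [0, 0, 0, 1, 1, 1]
merged = [0] * 6
for gamma in (0.1, 1.0):
    print(gamma, modularity(adj, split, gamma), modularity(adj, merged, gamma))
```

At γ = 0.1 the merged partition scores 0.9, against about 0.81 for the split. At γ = 1 the split scores about 0.36, against 0 for the merge. Raising the resolution therefore tips the balance toward more, smaller clusters.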

Advanced Analytical Approaches for Exploratory Analysis

Table 2: Advanced Analytical Methods for scRNA-seq Data Exploration

| Method Category | Representative Tools | Biological Application | Key Output |
|---|---|---|---|
| Trajectory Inference | Monocle 3, Velocyto | Developmental processes, cell differentiation | Pseudotime ordering, lineage trajectories |
| Cell-Cell Communication | Squidpy, CellChat | Intercellular signaling networks | Ligand-receptor interaction networks |
| Regulatory Network Inference | SCENIC, DoRothEA | Transcription factor activity | Regulon activity, key transcriptional drivers |
| Multi-omic Integration | Seurat v5, scvi-tools | Combined RNA+ATAC, RNA+protein data | Unified cell state definitions |
| Sample-Level Analysis | GloScope | Population-scale sample comparisons | Sample-level embeddings and visualizations |

For population-scale studies, the GloScope framework provides an innovative approach by representing each sample as a probability distribution of its cells, enabling sample-level visualization and quality control assessment [19]. This method is particularly valuable for exploring phenotypic differences or batch effects across large cohorts.
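The idea of treating each sample as a distribution of its cells can be sketched under a strong simplifying assumption. Each sample's cells in a shared low-dimensional embedding (for example, PCA scores) are summarized by a single multivariate Gaussian, and samples are compared with the symmetrized Kullback-Leibler divergence. GloScope's own density estimation is more flexible, so treat this as an illustration of the concept rather than of its method. All function names and the toy samples are hypothetical.

```python
import numpy as np


def gaussian_kl(mu0, cov0, mu1, cov1) -> float:
    """KL divergence KL(N(mu0, cov0) || N(mu1, cov1)) between two Gaussians."""
    d = mu0.shape[0]
    inv1 = np.linalg.inv(cov1)
    diff = mu1 - mu0
    _, logdet0 = np.linalg.slogdet(cov0)
    _, logdet1 = np.linalg.slogdet(cov1)
    return 0.5 * (np.trace(inv1 @ cov0) + diff @ inv1 @ diff - d + logdet1 - logdet0)


def sample_divergence(cells_a, cells_b, ridge: float = 1e-6) -> float:
    """Symmetrized KL between Gaussian fits of two samples' cell embeddings."""
    def fit(cells):
        cells = np.asarray(cells, dtype=float)
        # Small ridge keeps the covariance invertible for near-degenerate samples.
        cov = np.cov(cells, rowvar=False) + ridge * np.eye(cells.shape[1])
        return cells.mean(axis=0), cov

    mu_a, cov_a = fit(cells_a)
    mu_b, cov_b = fit(cells_b)
    return float(gaussian_kl(mu_a, cov_a, mu_b, cov_b) + gaussian_kl(mu_b, cov_b, mu_a, cov_a))


rng = np.random.default_rng(0)
sample_1 = rng.normal(0.0, 1.0, size=(500, 2))  # toy 2-D embeddings per sample
sample_2 = rng.normal(0.0, 1.0, size=(500, 2))
sample_3 = rng.normal(3.0, 1.0, size=(500, 2))  # shifted cell-state composition
```

A matrix of such pairwise divergences across all samples could then be embedded with multidimensional scaling to produce a sample-level plot.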

Visualization and Interpretation: The Final Frontier

Effective visualization transforms analytical results into biological insights. Standard visualization approaches include:

- UMAP/t-SNE Plots: Visualize cell clusters in two dimensions, with coloring by cluster identity, sample origin, or expression of key genes.

- Feature Plots: Visualize expression levels of specific genes across the reduced-dimensionality space.

- Violin Plots: Display expression distributions of marker genes across clusters.

- Heatmaps: Compare expression patterns of key marker genes across clusters or conditions.

For trajectory analysis, tools like Monocle 3 create visualizations that place cells along inferred developmental paths, often with branching points representing cell fate decisions [18]. Spatial transcriptomics data can be visualized with tools like Squidpy to explore expression patterns within tissue architecture [18].
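Below is a heavily simplified stand-in for pseudotime ordering. It assumes cells are already embedded in a low-dimensional space and that a root cell is known. The sketch builds a symmetric k-nearest-neighbour graph and takes each cell's shortest-path (geodesic) distance from the root. Monocle 3 instead learns a principal graph and orders cells along it, so this is not its algorithm. `knn_graph` and `geodesic_pseudotime` are hypothetical names.

```python
import heapq

import numpy as np


def knn_graph(x: np.ndarray, k: int) -> dict:
    """Symmetric kNN graph as {cell: {neighbour: euclidean distance}}."""
    x = np.asarray(x, dtype=float)
    dist = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)  # a cell is not its own neighbour
    graph = {i: {} for i in range(len(x))}
    for i in range(len(x)):
        for j in np.argsort(dist[i])[:k]:
            j = int(j)
            graph[i][j] = graph[j][i] = float(dist[i, j])
    return graph


def geodesic_pseudotime(x: np.ndarray, root: int, k: int = 3) -> np.ndarray:
    """Dijkstra shortest-path distance from `root`, rescaled to [0, 1]."""
    graph = knn_graph(x, k)
    best = {root: 0.0}
    heap = [(0.0, root)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > best[node]:
            continue  # stale heap entry
        for neighbour, w in graph[node].items():
            candidate = d + w
            if candidate < best.get(neighbour, np.inf):
                best[neighbour] = candidate
                heapq.heappush(heap, (candidate, neighbour))
    pseudotime = np.full(len(x), np.inf)  # unreachable cells stay at inf
    for node, d in best.items():
        pseudotime[node] = d
    finite_max = pseudotime[np.isfinite(pseudotime)].max()
    return pseudotime / finite_max if finite_max > 0 else pseudotime


# Ten cells spaced evenly along a 1-D differentiation axis, rooted at cell 0.
cells = np.arange(10, dtype=float).reshape(-1, 1)
pt = geodesic_pseudotime(cells, root=0, k=2)
```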

Essential Bioinformatics Tools for scRNA-seq Analysis

The scRNA-seq bioinformatics landscape in 2025 features specialized tools operating within a broadly compatible ecosystem. The table below summarizes core tools that anchor modern analytical workflows.

Table 3: Essential Bioinformatics Tools for scRNA-seq Analysis in 2025

| Tool Name | Primary Function | Language | Key Features | Ideal Use Case |
|---|---|---|---|---|
| Cell Ranger | Raw data processing | - | FASTQ to count matrix; STAR aligner | Processing 10x Genomics data |
| Seurat | Comprehensive analysis | R | Data integration, spatial transcriptomics | Versatile analysis for R users |
| Scanpy | Comprehensive analysis | Python | Scalable to millions of cells | Large-scale datasets in Python |
| scvi-tools | Probabilistic modeling | Python | Deep generative models, batch correction | Complex integration, imputation |
| Harmony | Batch correction | R/Python | Fast, preserves biological variation | Multi-sample, multi-batch studies |
| CellBender | Ambient RNA removal | Python | Deep learning-based background removal | Droplet-based data cleaning |
| Monocle 3 | Trajectory inference | R | Graph-based trajectory modeling | Developmental processes, dynamics |
| Squidpy | Spatial analysis | Python | Spatial neighborhood analysis | Spatial transcriptomics data |

Based on [18]

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 4: Essential Research Reagents and Materials for scRNA-seq Workflows

| Reagent/Material | Function | Example Applications | Technical Considerations |
|---|---|---|---|
| Gel Beads | Delivery of barcoded oligonucleotides | 10x Genomics platforms | Store desiccated, protect from light |
| Partitioning Oil | Immiscible phase for droplet generation | Microfluidic droplet formation | Viscosity and stability critical |
| Lysis Buffer | Cell membrane disruption, RNA release | Cell partitioning step | Inhibitor removal, RNA stability |
| Reverse Transcriptase | cDNA synthesis from mRNA templates | Universal 3'/5' assays | Processivity, temperature optimum |
| Template Switch Oligo (TSO) | cDNA amplification initiation | Universal 5' assay | Enhances full-length transcript capture |
| Poly(dT) Primers | mRNA capture via poly-A tail binding | Universal 3' assay | Specificity for eukaryotic mRNA |
| Nucleotide Mix (dNTPs) | cDNA synthesis and amplification | Library preparation throughout | Quality affects error rates |
| PCR Primers | Library amplification and indexing | Sample index PCR | Design critical for specificity |
| Solid Tissue Dissociation Kits | Single-cell suspension preparation | Tumor, brain, complex tissues | Enzyme composition affects viability |
| Viability Stains | Live/dead cell discrimination | Pre-sequencing QC | DNA-binding dyes (e.g., DAPI, PI) |

Based on [16]

Successful scRNA-seq experiments require careful selection and handling of specialized reagents. The molecular biology reagents must be of high quality to ensure efficient reverse transcription, amplification, and library preparation. For sample preparation, tissue-specific dissociation protocols and enzymes are critical for obtaining high-viability single-cell suspensions without inducing significant stress responses [17]. Platform-specific reagent kits from commercial providers like 10x Genomics, Singleron, and others offer standardized workflows but require strict adherence to storage and handling specifications [17] [16].

Single-cell RNA sequencing (scRNA-seq) has revolutionized biomedical research by enabling the investigation of cellular heterogeneity, lineage dynamics, and spatial architecture at unprecedented resolution. As the scale and complexity of datasets have increased, with studies now routinely comprising millions of cells, the sophistication of computational tools has similarly advanced [18]. The scRNA-seq bioinformatics landscape in 2025 reflects a mature ecosystem of specialized tools operating within broadly compatible frameworks, allowing researchers to address questions previously beyond reach through integrated workflows that combine spatial, epigenetic, and transcriptomic data [18].

This evolving landscape presents both opportunities and challenges for researchers. Foundational platforms such as Scanpy and Seurat anchor analytical workflows, while advanced tools like scvi-tools and CellBender enable modeling of latent structures, correction of technical variance, and data denoising with increasing granularity [18]. The integration of spatial context through frameworks like Squidpy, coupled with refined trajectory inference using Monocle 3 and Velocyto, signals a shift toward dynamic, context-aware representations of cell state [18]. This technical guide provides a comprehensive overview of the current scRNA-seq tool ecosystem, structured to help researchers navigate the complex landscape of available platforms and methodologies for exploratory analysis of single-cell RNA-seq data.

Foundational Analytical Frameworks

Programming-Based Platforms

For researchers with computational expertise, programming-based platforms offer maximum flexibility and analytical power. These frameworks dominate large-scale single-cell analysis and method development.

Table 1: Foundational Programming-Based scRNA-seq Analysis Platforms

| Tool | Language | Primary Strengths | Core Functionality | Integration & Ecosystem |
|---|---|---|---|---|
| Scanpy [18] | Python | Scalability for millions of cells, memory optimization | Comprehensive preprocessing, clustering, visualization, pseudotime analysis | scverse ecosystem (scvi-tools, Squidpy), AnnData objects |
| Seurat [18] [20] | R | Versatility, multi-modal integration, spatial transcriptomics | Robust data integration, label transfer, clustering, differential expression | Bioconductor, Monocle ecosystems |
| scvi-tools [18] | Python (PyTorch) | Deep generative modeling, superior batch correction | Probabilistic modeling of gene expression, imputation, transfer learning | Built on AnnData, extensible to multiple data modalities |
| SingleCellExperiment [18] | R (Bioconductor) | Reproducibility, method benchmarking | Standardized data structure, robust normalization, quality control | Compatibility with Seurat and Monocle, many Bioconductor packages |

Scanpy continues to dominate large-scale scRNA-seq analysis, particularly for datasets exceeding millions of cells. Its architecture, built around the AnnData object, optimizes memory use and enables scalable workflows [18]. As part of the broader scverse ecosystem, Scanpy integrates seamlessly with other Python tools for statistical modeling and visualization, creating a cohesive analytical environment.

Seurat remains the most mature and flexible toolkit for R users, with its anchoring method enabling robust data integration across batches, tissues, and even modalities [18]. In 2025, Seurat has expanded to natively support spatial transcriptomics, multiome data (e.g., RNA + ATAC), and protein expression via CITE-seq [18]. Its modular workflows and integration with Bioconductor and Monocle ecosystems make it indispensable for many research pipelines, particularly in neuroscience where its structured approach facilitates reproducible analysis [20].

Specialized Analytical Tools

Beyond foundational frameworks, specialized tools address specific analytical challenges within the scRNA-seq workflow, from data preprocessing to dynamic modeling.

Table 2: Specialized scRNA-seq Analytical Tools

| Tool | Primary Function | Key Algorithms/Methods | Integration | Unique Capabilities |
|---|---|---|---|---|
| Cell Ranger [18] [10] | Preprocessing of 10x data | STAR aligner, cell calling | Direct pipeline to Scanpy/Seurat | Supports single-cell and multiome workflows |
| CellBender [18] | Ambient RNA removal | Deep probabilistic modeling | Seurat, Scanpy | Distinguishes real cellular signals from background noise |
| Harmony [18] | Batch effect correction | Iterative refinement algorithm | Direct Seurat/Scanpy integration | Preserves biological variation while aligning datasets |
| Velocyto [18] | RNA velocity | Spliced/unspliced transcript quantification | Scanpy workflows, .loom files | Infers future transcriptional states |
| Monocle 3 [18] | Trajectory inference | Graph-based abstraction, UMAP reduction | Seurat compatibility | Models lineage branching and temporal dynamics |
| Squidpy [18] | Spatial analysis | Neighborhood graphs, ligand-receptor analysis | Built on Scanpy | Spatial patterns, cell-cell communication |

Cell Ranger remains the gold standard for preprocessing raw sequencing data from 10x Genomics platforms, reliably transforming raw FASTQ files into gene-barcode count matrices using the STAR aligner [18] [10]. Its latest versions support both single-cell and multiome workflows, including RNA + ATAC and Feature Barcode technology, defining the foundational layer for many downstream analyses [18].

For advanced modeling, scvi-tools brings deep generative modeling into the mainstream through variational autoencoders (VAEs) to model the noise and latent structure of single-cell data [18]. This provides superior batch correction, imputation, and annotation compared to conventional methods, with extensibility across scRNA-seq, scATAC-seq, spatial transcriptomics, and CITE-seq data [18].

Commercial & User-Friendly Platforms

Cloud-Based Analysis Platforms

For researchers preferring graphical interfaces or without extensive programming expertise, numerous cloud-based platforms provide user-friendly access to sophisticated analytical capabilities.

Table 3: Commercial and Cloud-Based scRNA-seq Analysis Platforms

| Platform | Target Users | Key Features | AI/ML Capabilities | Data Compatibility | Pricing Model |
|---|---|---|---|---|---|
| Nygen [21] | All researchers, especially no-code needs | AI-powered cell annotation, batch correction, intuitive dashboards | LLM-augmented insights, automated annotation | Seurat, Scanpy, multiple formats | Freemium tier, subscription from $99/month |
| BBrowserX [21] [22] | Researchers needing AI-assisted analysis | BioTuring Single-Cell Atlas access, customizable plots, GSEA | AI-powered cell annotation, predictive modeling | CellRanger, Seurat, Scanpy objects | Paid software, custom pricing |
| Trailmaker [21] [22] | Parse Biosciences users, academic researchers | Direct pipeline integration, trajectory analysis, automated workflow | Automated annotation using ScType | Parse Biosciences, 10x Genomics, Seurat objects | Free for academics and Parse customers |
| CytoAnalyst [23] | Teams requiring collaboration and customization | Grid-layout visualization, parallel analysis instances, real-time collaboration | AI-powered inference tools | 10X Cell Ranger output, AnnData objects | Free web platform |
| Partek Flow [21] [22] | Labs needing modular, scalable workflows | Drag-and-drop workflow builder, pathway analysis | Automated analytics | Multiple NGS data types | Subscription from $249/month |
| ROSALIND [21] [22] | Collaborative teams focusing on interpretation | GO enrichment, automated cell annotation, interactive reports | Automated analysis pipelines | Optimized for 10x Genomics | From $149/month |
| Loupe Browser [21] [22] [10] | 10x Genomics users needing visualization | Integrates with 10x pipelines, t-SNE/UMAP, spatial analysis | Basic analytical features | 10x Genomics .cloupe files | Free for 10x data |

Nygen exemplifies the trend toward AI-enhanced analysis, offering LLM-augmented insights for disease impact analysis and automated cell type annotation with confidence scores [21]. Its no-code design lowers the barrier to entry for researchers without programming skills while maintaining comprehensive workflow integration that reduces the need for multiple tools.

CytoAnalyst, a recently introduced web-based platform, advances workflow flexibility through custom pipeline configuration and parallel analysis instances that facilitate comparison of different methods or parameter settings [23]. Its grid-layout visualization system supports simultaneous displays of different data aspects, allowing comparison of multiple labels and plots side-by-side for comprehensive data insights [23]. The platform also features an advanced sharing system that facilitates real-time synchronization among team members, addressing the growing need for collaborative analysis in large-scale single-cell studies.

Platform Selection Considerations

Choosing the appropriate analytical platform depends on several factors that directly impact research outcomes and efficiency:

Data Compatibility: Ensure support for common data formats (FASTQ, CSV, H5AD) and interoperability with popular frameworks like Seurat or Scanpy, plus compatibility with multimodal data if needed [21] [22].

Usability and Accessibility: Evaluate the learning curve, with tools offering intuitive interfaces or no-code functionality enabling faster onboarding for biologists without programming expertise [21] [22].

Feature Set: Prioritize tools providing end-to-end solutions, including data preprocessing, clustering, dimensionality reduction, visualization, and differential expression analysis [21].

Performance and Scalability: Consider optimization for large datasets, with fast processing times and ability to handle thousands of cells without compromising accuracy [21].

Cost and Licensing: Balance budget constraints against needs, noting that open-source tools are cost-effective while premium platforms often offer added support, enhanced security, and unique features [21] [22].

Community and Support: Prefer tools with active user communities, robust documentation, and dedicated support teams to ensure smooth troubleshooting and knowledge-sharing [21].

Experimental Workflows and Best Practices

End-to-End Analytical Workflow

A standardized workflow for scRNA-seq analysis encompasses multiple stages from raw data to biological interpretation, with tool selection critical at each step.

Diagram 1: Comprehensive scRNA-seq Analysis Workflow

Quality Control and Preprocessing Protocol

Robust quality control is essential for reliable downstream analysis. The following protocol outlines key steps based on best practices for 10x Genomics data [10]:

Initial Quality Assessment: Begin with the Cell Ranger web_summary.html file to evaluate critical metrics including:

- Number of cells recovered versus targeted

- Percentage of confidently mapped reads in cells (should be >90%)

- Median genes per cell (varies by sample type)

- Barcode rank plot showing characteristic "cliff-and-knee" shape [10]

Cell Filtering in Loupe Browser: For 10x Genomics data, use Loupe Browser to filter cell barcodes based on:

- UMI counts: Remove extreme outliers with very high UMI counts (potential multiplets) and very low UMI counts (potential ambient RNA)

- Number of features: Filter barcodes with unusually high or low numbers of detected genes

- Mitochondrial reads: Remove cells with high percentage of mitochondrial UMIs (>10% for PBMCs, varies by cell type) [10]

Ambient RNA Removal: For droplet-based technologies, apply computational methods such as CellBender or SoupX to address background noise.

Batch Effect Correction: When integrating multiple datasets, apply Harmony or scvi-tools to align datasets while preserving biological variation [18]. For Harmony:

- Implementation: Direct integration into Seurat and Scanpy pipelines

- Advantage: Scalable and preserves biological variation while aligning datasets

- Application: Particularly useful for large consortia data like the Human Cell Atlas [18]

Data Integration and Clustering Methodology

For integrative analysis across multiple samples or conditions:

Normalization Approach: Select appropriate normalization based on data characteristics:

- Log-normalization: Standard approach for most datasets

- SCTransform: Improved normalization for UMI-based data addressing technical noise

Feature Selection: Identify highly variable genes using method-specific approaches:

- Seurat: vst algorithm

- Scanpy: Highly variable gene selection with flavor options

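Dimensionality Reduction: Implement sequential reduction approaches, applying PCA first to capture the main axes of variation and then non-linear embeddings such as UMAP or t-SNE for visualization.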

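Clustering Analysis: Apply graph-based clustering algorithms (as implemented in Seurat and Scanpy), tuning the resolution parameter to control cluster granularity.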

Successful scRNA-seq analysis requires both computational infrastructure and access to reference data for annotation and interpretation.

Table 4: Essential Research Reagents and Resources for scRNA-seq Analysis

| Resource Type | Specific Tools/Databases | Primary Function | Access Method |
|---|---|---|---|
| Public Data Repositories | GEO/SRA [24] | Repository for raw and processed data | Web interface, API |
| | Single Cell Portal [24] | scRNA-seq specific data exploration | Web portal |
| | CZ Cell x Gene Discover [24] | Curated single-cell data collection | Web interface |
| | PanglaoDB [24] | scRNA-seq marker gene database | Web portal, R package |
| Reference Atlases | Human Cell Atlas | Comprehensive human cell references | Multiple access points |
| | Allen Brain Cell Atlas [24] | Brain-specific cell taxonomy | Web portal |
| | BioTuring Single-Cell Atlas [21] | Commercial reference database | BBrowserX integration |
| Analysis Formats | AnnData (.h5ad) [18] [23] | Standardized Python data structure | Scanpy, scvi-tools |
| | Seurat Object (.rds) [22] | R-based data structure | Seurat ecosystem |
| | 10X Cell Ranger Output [10] [23] | Processed count matrices | Loupe Browser, most platforms |
| Quality Control Tools | FastQC [25] | Sequence quality assessment | Standalone application |
| | MultiQC [25] | Aggregate QC reports | Command line |
| | Cell Ranger QC [10] | Platform-specific quality metrics | 10x Genomics Cloud |

Public Data Utilization Strategies

Leveraging public data resources enhances single-cell research through comparative analysis and biological context:

Dataset Discovery: Use specialized portals such as GEO/SRA, the Single Cell Portal, and CZ Cell x Gene Discover to identify relevant datasets efficiently.

Reference-Based Annotation: Employ annotated datasets for cell type identification:

- Method: Transfer learning from reference to query datasets

- Tools: Seurat label transfer, scvi-tools conditional VAE

- Resources: Human Cell Atlas, Tabula Sapiens, tissue-specific references
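Reference-to-query transfer can be sketched as a k-nearest-neighbour majority vote. The sketch assumes reference and query cells already share a batch-corrected embedding, for example from an integration step. Seurat label transfer and the scvi-tools models mentioned above are considerably more elaborate, with anchor weighting and probabilistic modeling. The function name, labels, and coordinates here are hypothetical.

```python
from collections import Counter

import numpy as np


def knn_label_transfer(ref_emb, ref_labels, query_emb, k: int = 5):
    """Predict a label for each query cell from its k nearest reference cells."""
    ref_emb = np.asarray(ref_emb, dtype=float)
    query_emb = np.asarray(query_emb, dtype=float)
    # Pairwise Euclidean distances: query cells x reference cells.
    dist = np.linalg.norm(query_emb[:, None, :] - ref_emb[None, :, :], axis=-1)
    neighbours = np.argsort(dist, axis=1)[:, :k]
    predicted, confidence = [], []
    for row in neighbours:
        votes = Counter(ref_labels[j] for j in row)
        label, count = votes.most_common(1)[0]
        predicted.append(label)
        confidence.append(count / k)  # fraction of neighbours agreeing
    return predicted, np.array(confidence)


rng = np.random.default_rng(1)
ref_emb = np.vstack([rng.normal(0, 0.5, (5, 2)), rng.normal(10, 0.5, (5, 2))])
ref_labels = ["T cell"] * 5 + ["B cell"] * 5
query_emb = np.array([[0.2, -0.1], [9.8, 10.3]])
labels, conf = knn_label_transfer(ref_emb, ref_labels, query_emb, k=3)
```

The per-cell agreement fraction gives a rough confidence score. Query cells with low agreement are candidates for manual review or for cell states absent from the reference.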

Cross-Study Validation: Validate findings against public datasets to assess robustness:

- Approach: Compare cluster markers with established cell type signatures

- Databases: PanglaoDB for marker genes, CellMarker for curated signatures

- Implementation: Automated querying through platforms like BBrowserX [21]

The scRNA-seq analytical landscape in 2025 offers researchers diverse tools ranging from programmable frameworks for maximal flexibility to user-friendly platforms enhancing accessibility through AI and visualization. Foundational tools like Scanpy and Seurat continue to evolve, supporting increasingly complex multi-modal analyses while maintaining robust performance at scale. Simultaneously, commercial platforms are lowering barriers to entry through intuitive interfaces and automated workflows without sacrificing analytical depth.

The future trajectory of scRNA-seq analysis points toward greater integration of spatial and dynamic modeling, with tools like Squidpy and Velocyto becoming standard components of analytical workflows. As single-cell technologies continue to advance, generating increasingly complex and multi-modal datasets, the tools and platforms overviewed in this guide provide the foundation for extracting meaningful biological insights from these powerful data resources. By selecting tools aligned with their technical capabilities and research objectives, researchers can effectively navigate the complex single-cell tool landscape to advance our understanding of cellular biology in health and disease.

The scRNA-Seq Analysis Pipeline: A Step-by-Step Methodological Guide

Quality control (QC) and filtering of cells and genes constitute the critical first step in the exploratory analysis of single-cell RNA-sequencing (scRNA-seq) data. This process is foundational to all subsequent biological interpretations, as it aims to remove technical artifacts and low-quality data that can confound downstream analyses [26]. Single-cell RNA-sequencing technologies have revolutionized biomedical science by enabling comprehensive exploration of cellular heterogeneity, individual cell characteristics, and cell lineage trajectories at unprecedented resolution [27]. However, scRNA-seq data have two properties that make careful QC necessary: they contain an excessive number of zeros (dropout events) because of limiting amounts of mRNA, and technical artifacts can be confounded with genuine biology, which limits how far the data can be corrected [28].

The fundamental goal of QC is to filter the data to include only true cells of high quality, making it easier to identify distinct cell type populations during clustering [29]. This process involves addressing multiple technical challenges, including delineating poor-quality cells from less complex cells of biological interest, choosing appropriate filtering thresholds to retain high-quality cells without removing biologically relevant cell types, and accounting for platform-specific artifacts [29] [30]. The impact of QC filtering can only be fully judged based on the performance of downstream analyses, making this often an iterative process that may require revisiting filtering parameters if subsequent analysis results prove difficult to interpret [30]. A recommended approach is to begin with permissive filtering strategies, particularly when analyzing novel cell types or biological systems where the expected QC metrics may not be fully established [30].

Key QC Metrics and Their Biological Significance

Core Quality Control Metrics

Quality control in scRNA-seq analysis primarily relies on three fundamental metrics that help distinguish high-quality cells from technical artifacts and compromised cells. The table below summarizes these core QC metrics, their measurement, and biological significance:

Table 1: Core QC Metrics for Single-Cell RNA-Sequencing Data

| QC Metric | What It Measures | Indication of Low Quality | Biological Confounder |
|---|---|---|---|
| Count Depth (Library Size) | Total number of UMIs or reads per cell [29] [31] | Low counts indicate poor cDNA capture or amplification efficiency; unexpectedly high counts may indicate multiplets [30] [26] | Quiescent cells or small cell types naturally have low RNA content; large cells may have higher counts [26] |
| Number of Detected Genes | Number of genes with positive counts per cell [28] [29] | Low number indicates limited transcript diversity captured [31] | Less complex cell types (e.g., platelets, red blood cells) naturally express fewer genes [29] |
| Mitochondrial Read Percentage | Fraction of counts mapping to mitochondrial genes [28] [31] | High percentage suggests broken cell membrane and cytoplasmic mRNA leakage [26] | Cells involved in respiratory processes (e.g., cardiomyocytes) may naturally have high mitochondrial gene expression [28] [30] |

These three QC covariates should be considered jointly when making thresholding decisions, as considering them in isolation can lead to misinterpretation of cellular signals [26]. For example, cells with a relatively high fraction of mitochondrial counts may be involved in respiratory processes rather than being low quality, while cells with low counts may represent quiescent cell populations rather than compromised cells [26]. The distributions of these metrics are examined for outlier peaks that are filtered out by thresholding, with the goal of setting thresholds as permissive as possible to avoid unintentionally filtering out viable cell populations [26].

Additional QC metrics provide further insights into data quality. The number of genes detected per UMI offers information about dataset complexity, with higher values indicating more complex data [29]. Ribosomal gene percentages can also be calculated, though there is less consensus on their use for filtering [28]. In plate-based protocols without UMIs, the proportion of reads mapped to spike-in transcripts provides a valuable alternative QC metric, where high proportions indicate poor-quality cells that have lost endogenous RNA [31].

Advanced QC Considerations

Beyond the core metrics, several advanced QC considerations are essential for robust analysis:

Doublet Detection: Doublets or multiplets occur when two or more cells are partitioned into a single droplet or well, creating artificial hybrid expression profiles [32]. The multiplet rate is influenced by the scRNA-seq platform and the number of loaded cells [27]. For example, 10x Genomics reports that when 7,000 target cells are loaded, 378 multiplets are identified (5.4% of total cells), increasing to 7.6% with 10,000 target cells [27]. Specialized tools such as DoubletFinder, Scrublet, and Solo have been developed to identify multiplets by generating artificial doublets and comparing gene expression profiles of barcodes against these in silico doublets [30] [32]. However, a benchmarking study found that even the most accurate method reached a multiplet-detection accuracy of only 0.537, with substantial variation across datasets, and recommended combining automated tools with manual inspection [27].
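The artificial-doublet strategy can be sketched on a small dense count matrix. First, simulate doublets by summing the profiles of randomly paired observed cells. Then embed observed and simulated cells together with PCA. Finally, score each observed cell by the fraction of its nearest neighbours that are simulated doublets. This mirrors the shared idea behind Scrublet and DoubletFinder's proportion of artificial nearest neighbours. It omits their feature selection, parameter tuning, and thresholding, and every name and parameter below is illustrative.

```python
import numpy as np


def doublet_scores(counts, k: int = 10, n_pcs: int = 5, seed: int = 0) -> np.ndarray:
    """Fraction of each observed cell's k nearest neighbours that are simulated doublets."""
    rng = np.random.default_rng(seed)
    counts = np.asarray(counts, dtype=float)
    n_obs = counts.shape[0]
    # One simulated doublet per observed cell: sum two randomly chosen profiles.
    pairs = rng.integers(0, n_obs, size=(n_obs, 2))
    simulated = counts[pairs[:, 0]] + counts[pairs[:, 1]]
    combined = np.vstack([counts, simulated])
    # Library-size normalize and log-transform so depth alone does not drive distances.
    combined = np.log1p(combined / combined.sum(axis=1, keepdims=True) * 1e4)
    combined -= combined.mean(axis=0)
    u, s, _ = np.linalg.svd(combined, full_matrices=False)
    embedding = u[:, :n_pcs] * s[:n_pcs]
    obs = embedding[:n_obs]
    dist = np.linalg.norm(obs[:, None, :] - embedding[None, :, :], axis=-1)
    dist[np.arange(n_obs), np.arange(n_obs)] = np.inf  # exclude each cell itself
    neighbours = np.argsort(dist, axis=1)[:, :k]
    # Indices >= n_obs refer to simulated doublets.
    return (neighbours >= n_obs).mean(axis=1)


rng = np.random.default_rng(42)
type_a = rng.poisson([20] * 10 + [1] * 10, size=(40, 20))
type_b = rng.poisson([1] * 10 + [20] * 10, size=(40, 20))
true_doublets = type_a[:4] + type_b[:4]  # four hybrid A+B profiles
scores = doublet_scores(np.vstack([type_a, type_b, true_doublets]))
```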

Ambient RNA Contamination: Ambient RNAs originate from transcripts of damaged or apoptotic cells that leak out during single-cell isolation and become encapsulated in droplets along with other cells [27]. This contamination can distort UMI counting and downstream gene expression analysis, causing cell-type-specific markers to be detected in inappropriate cell types [30] [27]. Tools such as SoupX and CellBender have been developed to remove ambient RNA signal, with CellBender noted in particular for estimating background noise more accurately than other tools [30] [27].
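A naive version of ambient RNA correction works in two steps and assumes the contamination fraction `rho` is already known. First, estimate the "soup" profile from barcodes classified as empty droplets. Then subtract each cell's expected soup counts (rho × library size × soup profile) and clip at zero. SoupX estimates `rho` from the data and redistributes counts more carefully, and CellBender fits a deep generative model; neither is reproduced here. The function names and toy matrices are hypothetical.

```python
import numpy as np


def estimate_soup_profile(empty_counts) -> np.ndarray:
    """Gene-wise fraction of all counts found in empty droplets."""
    total = np.asarray(empty_counts, dtype=float).sum(axis=0)
    return total / total.sum()


def subtract_ambient(cell_counts, soup_profile, rho: float) -> np.ndarray:
    """Remove the expected ambient contribution from each cell's counts."""
    cell_counts = np.asarray(cell_counts, dtype=float)
    library_size = cell_counts.sum(axis=1, keepdims=True)
    expected_soup = rho * library_size * soup_profile[None, :]
    # Negative values are not meaningful counts, so clip at zero.
    return np.clip(cell_counts - expected_soup, 0.0, None)


# Hypothetical example: empty droplets contain only gene 1.
empty = np.array([[0, 5, 0], [0, 5, 0]])
cells = np.array([[50, 10, 0], [0, 10, 40]])
soup = estimate_soup_profile(empty)
corrected = subtract_ambient(cells, soup, rho=0.1)
```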

Empty Droplet Identification: In droplet-based methods, most droplets (>90%) do not contain an actual cell [32]. Algorithms like barcodeRanks and EmptyDrops from the DropletUtils package help distinguish cell-containing droplets from empty ones by deriving an "ambient profile" based on gene expression from droplets with small UMI counts and identifying barcodes with significantly different profiles [32].
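The barcode-rank "knee" can be located with a simple geometric heuristic. Sort barcodes by total UMI count, work on the log-log rank curve, and pick the point farthest from the straight line joining its first and last points. This heuristic is an assumption for illustration; it is not the algorithm used by barcodeRanks or EmptyDrops. The synthetic counts are made up.

```python
import numpy as np


def knee_threshold(umi_counts):
    """Return (UMI count, rank) at the knee of the log-log barcode rank curve."""
    counts = np.sort(np.asarray(umi_counts, dtype=float))[::-1]
    counts = counts[counts > 0]  # log10 is undefined for zero-count barcodes
    x = np.log10(np.arange(1, len(counts) + 1))
    y = np.log10(counts)
    # Unit vector along the chord from the first to the last point of the curve.
    start = np.array([x[0], y[0]])
    chord = np.array([x[-1], y[-1]]) - start
    chord /= np.linalg.norm(chord)
    rel_x, rel_y = x - start[0], y - start[1]
    # Perpendicular distance of each point from the chord (2-D cross product).
    distance = np.abs(rel_x * chord[1] - rel_y * chord[0])
    knee = int(np.argmax(distance))
    return counts[knee], knee + 1


rng = np.random.default_rng(0)
cell_barcodes = rng.poisson(5000, size=500)  # barcodes with a cell
empty_barcodes = rng.poisson(50, size=20000)  # ambient-only droplets
threshold, rank = knee_threshold(np.concatenate([cell_barcodes, empty_barcodes]))
```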

Methodologies and Experimental Protocols

QC Metric Calculation Workflow

The process of calculating QC metrics follows a systematic workflow that transforms raw sequencing data into filtered high-quality cells ready for downstream analysis. The following diagram illustrates the key steps and decision points in this workflow:

Diagram 1: QC Metric Calculation Workflow

The initial QC metric calculation begins with importing the count matrix, which can be a "Droplet" matrix (containing all barcodes including empty droplets), "Cell" matrix (empty droplets excluded), or "FilteredCell" matrix (poor quality cells also excluded) [32]. The calculate_qc_metrics function in Scanpy or similar functions in other packages computes the essential metrics [28]. For mitochondrial gene identification, genes with prefixes "MT-" (human) or "mt-" (mouse) are typically selected, though this varies by species [28]. Ribosomal and hemoglobin genes can also be identified for additional QC insights [28].
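The helper below is a library-free sketch of what such functions compute. It assumes a dense cells × genes count matrix with human or mouse gene symbols. It returns per-cell total counts, the number of detected genes, and the mitochondrial percentage (genes prefixed `MT-` or `mt-`). It also returns a log10-scaled version of the genes-per-UMI complexity metric described earlier. The first three dictionary keys mirror the `.obs` columns that Scanpy writes when `sc.pp.calculate_qc_metrics` is called with `qc_vars=["mt"]` after setting `adata.var["mt"]`. The helper itself and the fourth key are hypothetical.

```python
import numpy as np


def qc_metrics(counts, gene_names, mito_prefixes=("MT-", "mt-")) -> dict:
    """Per-cell QC covariates from a dense cells x genes count matrix."""
    counts = np.asarray(counts, dtype=float)
    # str.startswith accepts a tuple, covering human (MT-) and mouse (mt-) symbols.
    is_mito = np.array([name.startswith(mito_prefixes) for name in gene_names])
    total_counts = counts.sum(axis=1)
    n_genes = (counts > 0).sum(axis=1)
    mito_counts = counts[:, is_mito].sum(axis=1)
    pct_mito = np.zeros_like(total_counts)
    complexity = np.zeros_like(total_counts)
    has_counts = total_counts > 0
    pct_mito[has_counts] = 100 * mito_counts[has_counts] / total_counts[has_counts]
    # log10(genes) / log10(UMIs) needs more than one UMI to avoid dividing by log10(1) = 0.
    many = total_counts > 1
    complexity[many] = np.log10(n_genes[many]) / np.log10(total_counts[many])
    return {
        "total_counts": total_counts,
        "n_genes_by_counts": n_genes,
        "pct_counts_mt": pct_mito,
        "log10_genes_per_umi": complexity,
    }


genes = ["CD3E", "MT-CO1", "ACTB"]
counts = np.array([[10, 10, 0], [0, 0, 0], [5, 0, 15]])
metrics = qc_metrics(counts, genes)
```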

Threshold Determination Strategies

Two primary approaches exist for determining filtering thresholds, each with distinct advantages and limitations:

Table 2: Approaches for Determining QC Filtering Thresholds

| Approach | Methodology | Advantages | Limitations | Best Suited For |
|---|---|---|---|---|
| Fixed Thresholds | Apply predetermined cutoffs (e.g., >5% mitochondrial reads, <200 genes) [31] | Simple to implement, consistent across analyses | Requires prior experience, may not adapt to different protocols or cell types [31] | Standardized protocols with well-established expectations |
| Adaptive Thresholds (MAD) | Identify outliers using Median Absolute Deviation (typically 3-5 MADs from median) [28] [30] | Data-driven, adapts to specific dataset characteristics | May not perform well with highly heterogeneous cell populations [30] | Novel cell types or when dataset characteristics are unknown |

The adaptive thresholding approach using MAD is increasingly recommended as it provides a robust statistical method for outlier detection that adapts to the specific characteristics of each dataset [28] [30]. The MAD is calculated as MAD = median(|X_i - median(X)|) with X_i being the respective QC metric, and cells are typically flagged as outliers if they deviate by more than 3-5 MADs from the median [28]. This approach is particularly valuable for novel cell types or experimental conditions where established fixed thresholds may not apply.
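The MAD rule above translates directly into code. This sketch flags values more than `n_mads` MADs from the median in either direction. It uses the unscaled MAD exactly as defined in the text; some implementations multiply the MAD by about 1.4826 to make it comparable to a standard deviation. The optional `log1p` transform is a common choice for count-like metrics but is an assumption here, as is the function name.

```python
import numpy as np


def is_mad_outlier(values, n_mads: float = 5.0, log: bool = False) -> np.ndarray:
    """Boolean mask of values lying more than n_mads MADs from the median."""
    x = np.asarray(values, dtype=float)
    if log:
        x = np.log1p(x)  # compress the right tail of count-like metrics
    median = np.median(x)
    mad = np.median(np.abs(x - median))
    # Note: if mad == 0, every value that differs from the median is flagged.
    return (x < median - n_mads * mad) | (x > median + n_mads * mad)


total_counts = np.array([100, 105, 98, 102, 101, 99, 103, 5000, 1])
outliers = is_mad_outlier(total_counts, n_mads=5)
```

Here the median is 101 and the MAD is 2, so the 5-MAD window is [91, 111]. Only the extreme values 5000 and 1 are flagged.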

Implementation in Analysis Environments

The calculation of QC metrics is implemented across major single-cell analysis platforms. In Scanpy (Python), the sc.pp.calculate_qc_metrics function computes key metrics and stores them in the .obs and .var data frames [28]. In Seurat (R), similar functionality is provided through the PercentageFeatureSet function and metadata manipulation [29]. The singleCellTK package provides a comprehensive QC pipeline that integrates multiple tools across R and Python environments, generating standardized HTML reports for quality assessment [32].

Computational Tools and Software Packages

A robust ecosystem of computational tools has been developed to address the various QC challenges in scRNA-seq analysis. The table below highlights essential tools and their specific functions in the QC process:

Table 3: Essential Computational Tools for scRNA-seq Quality Control

| Tool/Package | Programming Environment | Primary QC Function | Key Algorithm/Approach |
|---|---|---|---|
| Scanpy [28] | Python | Comprehensive preprocessing and QC | calculate_qc_metrics, visualizations, MAD-based filtering |
| Seurat [29] | R | QC metric calculation and visualization | PercentageFeatureSet, diagnostic plots, variable feature selection |
| singleCellTK [32] | R | Integrated QC pipeline | Empty droplet detection, doublet scoring, ambient RNA estimation |
| DoubletFinder [27] | R | Doublet detection | Artificial doublet generation and classification by the proportion of artificial nearest neighbors (pANN) |
| Scrublet [29] | Python | Doublet detection | In silico doublet simulation and scoring |
| SoupX [27] | R | Ambient RNA correction | Estimation of background contamination profile |
| CellBender [27] | Python | Ambient RNA removal | Deep learning-based background model |

These tools can be integrated into comprehensive workflows that address the full spectrum of QC challenges. For example, the SCTK-QC pipeline within the singleCellTK package incorporates empty droplet detection, standard QC metric calculation, doublet prediction with multiple algorithms, and ambient RNA estimation [32]. This pipeline supports importing data from 11 different preprocessing tools, highlighting the importance of interoperability in scRNA-seq analysis ecosystems.
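The in silico simulation idea behind Scrublet can be illustrated compactly. DoubletFinder uses the same idea with a different classifier. The procedure has three parts: simulate doublets by summing the counts of random pairs of observed cells, embed observed and simulated profiles together, and score each observed cell by the fraction of its nearest neighbors that are simulated. The function below is a simplified, hypothetical sketch of that idea, not Scrublet's code. It omits Scrublet's expected-doublet-rate adjustment, sparse-matrix handling, and automatic threshold selection, and its parameter defaults are arbitrary.

```python
import numpy as np

def simulated_doublet_scores(counts, n_sim=None, n_pcs=5, k=10, seed=0):
    """Fraction of each observed cell's k nearest neighbours that are simulated doublets."""
    rng = np.random.default_rng(seed)
    counts = np.asarray(counts, dtype=float)
    n_cells = counts.shape[0]
    n_sim = 2 * n_cells if n_sim is None else n_sim

    # 1. Simulate doublets by summing the counts of random pairs of observed cells
    i = rng.integers(0, n_cells, n_sim)
    j = rng.integers(0, n_cells, n_sim)
    both = np.vstack([counts, counts[i] + counts[j]])

    # 2. Library-size normalise (guarding empty rows) and log-transform
    lib = np.maximum(both.sum(axis=1, keepdims=True), 1.0)
    x = np.log1p(both / lib * 1e4)

    # 3. PCA fitted on observed cells only, then applied to all profiles
    mu = x[:n_cells].mean(axis=0)
    _, _, vt = np.linalg.svd(x[:n_cells] - mu, full_matrices=False)
    emb = (x - mu) @ vt[:n_pcs].T

    # 4. Brute-force kNN from each observed cell to every profile, excluding itself
    d = np.linalg.norm(emb[:n_cells, None, :] - emb[None, :, :], axis=2)
    d[np.arange(n_cells), np.arange(n_cells)] = np.inf
    nn = np.argsort(d, axis=1)[:, :k]
    return (nn >= n_cells).mean(axis=1)  # indices >= n_cells are simulated

# Toy data: two cell types with opposite gene programs, plus five A+B doublets
rng = np.random.default_rng(1)
type_a = rng.poisson(np.r_[np.full(10, 50.0), np.full(10, 5.0)], size=(50, 20))
type_b = rng.poisson(np.r_[np.full(10, 5.0), np.full(10, 50.0)], size=(50, 20))
counts = np.vstack([type_a, type_b, type_a[:5] + type_b[:5]])
scores = simulated_doublet_scores(counts)
```

Summed same-type pairs look like ordinary singlets after library-size normalization, so singlets also receive nonzero scores. The useful signal is the contrast: real doublets sit among simulated mixtures and score close to 1.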

Laboratory Reagents and Experimental Solutions

While computational methods are essential for QC, the foundation of quality single-cell data begins with proper sample preparation. Key reagents and solutions include:

Viable Single-Cell Suspensions: The starting material for most single-cell protocols, requiring minimization of cellular aggregates, dead cells, and non-cellular nucleic acids [33]. Proper tissue dissociation is critical, though aggressive dissociation can induce stress responses that manifest as technical artifacts in the data [27].

Unique Molecular Identifiers (UMIs): Short nucleotide sequences that label individual mRNA molecules during reverse transcription, enabling correction for amplification biases and more accurate transcript quantification [34]. UMIs are incorporated into many modern scRNA-seq protocols including CEL-Seq, MARS-Seq, Drop-Seq, inDrop-Seq, and 10x Genomics [34].

Spike-In RNAs: External RNA controls added in known quantities across all cells, enabling normalization and detection of cells with poor RNA capture efficiency [31]. In the absence of spike-ins, mitochondrial read percentages serve as an alternative QC metric [31].

Cell Viability Dyes: Critical for assessing sample quality before loading onto single-cell platforms, helping to ensure that input samples meet minimum viability requirements (typically >80% viability for 10x Genomics protocols) [33].
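The UMI-based correction described above works by collapsing reads: reads that share a cell barcode, UMI, and gene are treated as PCR copies of one original molecule. The sketch below is a deliberately simplified illustration under that assumption. The function name and the toy barcodes are hypothetical, and production pipelines such as Cell Ranger additionally correct sequencing errors in barcodes and UMIs, which this sketch omits.

```python
from collections import defaultdict

def umi_count_matrix(reads):
    """Collapse aligned reads into UMI counts per (cell barcode, gene).

    `reads` is an iterable of (cell_barcode, umi, gene) tuples, one per read.
    Reads with an identical triple count once, as a single molecule.
    """
    molecules = defaultdict(set)
    for barcode, umi, gene in reads:
        molecules[(barcode, gene)].add(umi)
    return {key: len(umis) for key, umis in molecules.items()}

reads = [
    ("AAAC", "GGTT", "ACTB"),
    ("AAAC", "GGTT", "ACTB"),  # PCR duplicate of the read above
    ("AAAC", "GGTT", "ACTB"),  # another PCR duplicate
    ("AAAC", "CCAA", "ACTB"),  # a second ACTB molecule in the same cell
    ("TTTG", "GGTT", "ACTB"),  # same UMI sequence, but a different cell
    ("AAAC", "ACGT", "GAPDH"),
]
counts = umi_count_matrix(reads)
```

Four ACTB reads from cell `AAAC` yield two molecules. This is the amplification-bias correction that raw read counts cannot provide.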

Proper implementation of both laboratory and computational QC measures creates a foundation for reliable single-cell analysis, enabling researchers to distinguish technical artifacts from biological signals and ultimately draw meaningful conclusions from their data.

Quality control and filtering represent the critical gateway to biologically meaningful single-cell RNA-sequencing analysis. By systematically addressing low-quality cells, doublets, and ambient RNA contamination through the methodical application of established QC metrics and thresholds, researchers can ensure that their downstream analyses build upon a foundation of high-quality data. The integration of both experimental best practices and computational QC tools creates a robust framework for exploratory scRNA-seq analysis that maximizes biological insights while minimizing technical artifacts. As the field continues to evolve with new protocols and analysis methods, the fundamental principles of rigorous quality control will remain essential for generating reliable, reproducible single-cell research with potential implications for drug development and personalized medicine.

In the exploratory analysis of single-cell RNA-sequencing (scRNA-seq) data, accounting for technical variation is a critical prerequisite for uncovering meaningful biological insights. Technical artifacts, such as differences in sequencing depth, capture efficiency, and the presence of ambient RNA, can obscure true biological heterogeneity and lead to misinterpretation of cell types and states [35] [26]. This guide details the methodologies for normalizing and scaling scRNA-seq data to correct for these non-biological variations, framed within the broader context of a robust exploratory analysis workflow.

Why Normalization is Essential

In scRNA-seq protocols, including those that use Unique Molecular Identifiers (UMIs), the raw molecular counts reflect a combination of true biological signal and unwanted technical noise [35]. Key sources of technical variation include:

- Sequencing Depth: The total number of sequenced reads per cell can vary significantly, making direct comparisons of gene expression between cells unreliable [35] [26].

- Capture Efficiency: Differences in the efficiency of mRNA capture and reverse transcription during library preparation can affect the number of transcripts detected per cell [35].

- Gene-Level Biases: Technical variability is not uniform across all genes; high-abundance genes often exhibit disproportionately higher variance, especially in cells with low UMI counts [35].

The goal of normalization is to remove or minimize these technical effects, enabling downstream analyses—such as dimensionality reduction, clustering, and differential expression—to be driven by biological heterogeneity rather than technical artifacts [35] [26].

A Landscape of Normalization Methods

Numerous normalization methods have been developed, each with distinct underlying models and assumptions. The table below summarizes some commonly used approaches in the single-cell community.

| Method Name | Underlying Model / Approach | Key Features | Programming Language |
|---|---|---|---|
| SCTransform [35] | Regularized Negative Binomial Regression | Models gene expression with sequencing depth as a covariate; outputs Pearson residuals that are independent of sequencing depth. | R |
| BASiCS [35] | Bayesian Hierarchical Model | Jointly models spike-in genes and biological genes to quantify technical and biological variation; requires spike-ins or technical replicates. | R |
| SCnorm [35] | Quantile Regression | Groups genes with similar dependence on sequencing depth and estimates scale factors for each group; robust for complex data. | R |
| Scran [35] | Pooling and Deconvolution | Pools cells to compute pool-based size factors, then deconvolves them to obtain cell-specific size factors; effective for data with many zero counts. | R |
| Linnorm [35] | Linear Model and Transformation | Transforms data to minimize deviation from homoscedasticity and normality; can also be used for data transformation. | R |
| PsiNorm [35] | Power-Law Pareto Distribution | Estimates a shape parameter for each cell using maximum likelihood; highly scalable for large datasets. | R |
| Log-Normalize [35] | Size Factor and Log-Transform | Divides counts by total cellular UMI count, scales by a factor (e.g., 10,000), and log-transforms (log1p). Implemented in Seurat (NormalizeData) and Scanpy. | R, Python |

Table 1: A comparison of single-cell RNA-seq data normalization methods.

There is no universal "best" normalization method. The choice depends on the dataset characteristics and the specific biological questions. For instance, while the simple log-normalization method is widely used and performs satisfactorily in many clustering tasks, it may fail to adequately normalize high-abundance genes and can leave a residual correlation between cellular sequencing depth and low-dimensional embedding [35]. It is considered good practice to test multiple normalization methods and compare their performance in downstream tasks like clustering and differential expression [35].
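The log-normalization row in Table 1 reduces to three array operations. Below is a minimal NumPy sketch, assuming a dense cells x genes matrix; the function name is hypothetical. On real objects, Seurat's `NormalizeData` (default `scale.factor = 10000`) performs the equivalent step, as does Scanpy's `sc.pp.normalize_total(adata, target_sum=1e4)` followed by `sc.pp.log1p(adata)`.

```python
import numpy as np

def log_normalize(counts, scale_factor=1e4):
    """Library-size normalise each cell, scale, then apply natural log1p."""
    counts = np.asarray(counts, dtype=float)
    totals = counts.sum(axis=1, keepdims=True)
    # Empty cells should already be removed by QC; avoid dividing by zero anyway
    size = np.where(totals > 0, totals, 1.0)
    return np.log1p(counts / size * scale_factor)

# Two hypothetical cells with identical composition but a 10-fold depth difference
counts = np.array([[1, 3, 0, 6],
                   [10, 30, 0, 60]])
norm = log_normalize(counts)
```

Both rows become identical after normalization. This removes the depth difference here only because the two cells have the same composition. With real data, the residual depth effects noted above for high-abundance genes can remain.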

The Normalization Workflow in Context

Data normalization is not a standalone step but an integral part of a larger analytical pipeline. The following diagram illustrates how normalization fits into the broader workflow of scRNA-seq exploratory analysis.

Diagram 1: The role of normalization in the single-cell RNA-seq analysis workflow.

Foundational Pre-Normalization Steps

The quality of the input data is paramount. Before normalization, rigorous quality control (QC) must be performed to remove low-quality cells and uninformative genes [7] [26] [10].

Cell-Level QC: This involves filtering out cells based on the following metrics, typically inspected in diagnostic plots such as violin plots [7] [10]:

- Low total UMI counts: Indicates poor capture or damaged cells.

- Low number of detected genes: Suggests compromised cell integrity.

- High fraction of mitochondrial reads: A marker for apoptotic or dying cells (common thresholds are 5-10%, though this is sample-dependent) [7] [26].

- High UMI counts/genes: Can indicate multiplets (doublets/triplets) where multiple cells were captured together [7] [10].

Gene-Level Filtering: To reduce noise, researchers often filter out genes detected in only a few cells or genes with consistently low counts across the dataset. This step also sometimes involves removing genes from specific classes, like ribosomal genes, unless they are the subject of study [7].
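The cell-level and gene-level filters above can be expressed as boolean masks over the count matrix. The sketch below uses fixed thresholds. The default values (200 genes per cell, 10% mitochondrial reads, genes detected in at least 3 cells) are familiar from introductory tutorials and are assumptions to adjust per dataset. The function name and the optional `max_genes` multiplet cutoff are likewise hypothetical.

```python
import numpy as np

def filter_cells_and_genes(counts, gene_names, min_genes=200, max_genes=None,
                           max_pct_mt=10.0, min_cells=3, mt_prefix="MT-"):
    counts = np.asarray(counts, dtype=float)
    gene_names = np.asarray(gene_names)
    is_mt = np.array([g.upper().startswith(mt_prefix) for g in gene_names])

    # Cell-level metrics
    total = counts.sum(axis=1)
    n_genes = (counts > 0).sum(axis=1)
    pct_mt = np.divide(100.0 * counts[:, is_mt].sum(axis=1), total,
                       out=np.zeros_like(total), where=total > 0)

    # Cell-level filters: too few detected genes or too many mitochondrial reads
    keep_cells = (n_genes >= min_genes) & (pct_mt <= max_pct_mt)
    if max_genes is not None:
        # Optional upper bound to remove likely multiplets
        keep_cells &= n_genes <= max_genes

    # Gene-level filter, evaluated on the retained cells only
    kept = counts[keep_cells]
    keep_genes = (kept > 0).sum(axis=0) >= min_cells
    return kept[:, keep_genes], gene_names[keep_genes], keep_cells

genes = ["MT-CO1", "ACTB", "GAPDH", "CD3E", "RARE1"]
counts = np.array([[1, 20, 10, 5, 0],   # healthy cell
                   [30, 5, 5, 0, 0],    # 75% mitochondrial reads
                   [0, 0, 3, 0, 0],     # too few detected genes
                   [2, 15, 12, 0, 1],   # healthy cell
                   [0, 10, 8, 3, 0]])   # healthy cell
filtered, kept_genes, keep_cells = filter_cells_and_genes(
    counts, genes, min_genes=2, max_pct_mt=10.0, min_cells=2)
```

The toy thresholds are lowered so that a five-gene example is meaningful. `RARE1` is dropped because it is detected in only one retained cell.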

The Act of Normalization

After QC, the filtered count matrix is used as input for the chosen normalization method (as described in Table 1). This step adjusts the counts to eliminate the dominant effect of technical variability, creating a "normalized" expression matrix.

Post-Normalization Scaling and Downstream Analysis

Following normalization, a scaling step (often called "standardization") is typically applied. This centers each gene to a mean of zero and rescales it to a standard deviation of one across all cells, so that highly expressed genes do not dominate dimensionality reduction techniques like Principal Component Analysis (PCA) merely because of their larger numeric range [26]. The scaled data then feed the core exploratory analyses of clustering and visualization, ultimately leading to biological interpretation.
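Per-gene standardization is a column-wise z-score. Seurat's `ScaleData` and Scanpy's `sc.pp.scale` both perform this step and expose a cap on extreme scaled values (`scale.max` and `max_value`, respectively). The NumPy sketch below is hypothetical and does not reproduce either tool exactly. It uses the sample standard deviation (ddof=1), leaves constant genes at zero, and clips symmetrically when a cap is given. The clipping choice is an assumption of this sketch.

```python
import numpy as np

def scale_genes(x, max_value=None):
    """Z-score each gene (column) across cells, optionally clipping extremes."""
    x = np.asarray(x, dtype=float)
    mean = x.mean(axis=0)
    std = x.std(axis=0, ddof=1)
    std[std == 0] = 1.0  # constant genes become all zeros after centering
    z = (x - mean) / std
    if max_value is not None:
        z = np.clip(z, -max_value, max_value)
    return z

# Gene 2 has ten times the magnitude of gene 1; gene 3 is constant
x = np.array([[1, 10, 5],
              [2, 20, 5],
              [3, 30, 5],
              [4, 40, 5]])
z = scale_genes(x)
```

After scaling, the first two genes have identical values. Without this step, the second gene would contribute 100 times more variance to PCA despite carrying the same pattern.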

Successfully implementing a normalization strategy requires both computational tools and conceptual resources. The table below lists key reagents, software, and data sources.

| Tool/Resource | Type | Function in Normalization & Analysis |
|---|---|---|
| Seurat [35] [20] | R Software Package | Provides an integrated environment for scRNA-seq analysis; includes functions like NormalizeData (log-normalization) and SCTransform. |
| Scanpy [35] | Python Software Package | Python-based toolkit for analyzing single-cell gene expression data; includes normalize_total and log1p functions. |
| Scran [35] | R/Bioconductor Package | Implements the pooling-based size factor estimation method for normalization. |
| Spike-In RNAs [35] | Laboratory Reagent | Exogenous RNA molecules added in known quantities to help calibrate and measure technical variation. |
| Cell Ranger [10] | Data Processing Pipeline | 10x Genomics' proprietary software for processing raw sequencing data into a count matrix, which is the starting point for normalization. |
| Loupe Browser [35] [10] | Visualization Software | Allows for initial data exploration, quality control (e.g., visualizing UMI distributions), and filtering before normalization. |
| scRNA-tools.org [36] | Online Database | A curated database of software tools for scRNA-seq analysis, helping researchers navigate the available normalization methods. |

Table 2: Key research reagents and software solutions for scRNA-seq normalization and analysis.

Effective normalization and scaling are foundational to the accurate exploratory analysis of single-cell RNA-sequencing data. By systematically correcting for technical variation, researchers can ensure that the observed heterogeneity and differential expression patterns in their data reflect underlying biology rather than experimental artifact. As the field continues to mature with an ever-expanding toolkit [36], understanding the principles and practical implementations of these methods remains essential for all researchers, scientists, and drug development professionals leveraging this transformative technology.

Single-cell RNA sequencing (scRNA-seq) has revolutionized biomedical research by enabling the measurement of gene expression at single-cell resolution, facilitating the study of cellular heterogeneity, identification of rare populations, and inference of developmental trajectories [37] [4]. However, the resulting datasets are extremely high-dimensional: each of thousands of cells is measured across thousands of genes, creating a complex data structure that poses significant analytical challenges [37] [38]. The data are also sparse, with many zero counts arising from so-called dropout events [38]. Dimensionality reduction is therefore an indispensable step in scRNA-seq analysis workflows, transforming complex gene expression profiles into interpretable low-dimensional embeddings that preserve biologically meaningful structures [37] [39].
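Principal component analysis is the most common first, linear reduction applied to the scaled matrix. Below is a minimal sketch via singular value decomposition, assuming a dense cells x genes array. The function name and the simulated two-population data are hypothetical, and library implementations add sparse-aware and randomized solvers that this sketch omits.

```python
import numpy as np

def pca(x, n_components=2):
    """PCA by SVD of the column-centred matrix; returns scores, loadings, variance ratios."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean(axis=0)
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    scores = u[:, :n_components] * s[:n_components]  # cell coordinates on each PC
    explained = s**2 / (s**2).sum()                   # fraction of total variance per PC
    return scores, vt[:n_components], explained[:n_components]

# Hypothetical data: 100 cells x 50 genes, two populations differing in 10 genes
rng = np.random.default_rng(0)
group_a = rng.normal(0.0, 0.5, size=(50, 50))
group_b = rng.normal(0.0, 0.5, size=(50, 50))
group_b[:, :10] += 5.0
x = np.vstack([group_a, group_b])
scores, loadings, explained = pca(x, n_components=2)
```

Here the first component captures the difference between the two populations, and the remaining components mostly capture noise. This is why downstream clustering is usually run on a limited number of leading PCs rather than on all genes.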