Batch Effect Correction for RNA-seq: A 2025 Guide for Robust Genomic Analysis

Abstract

This article provides a comprehensive guide for researchers and drug development professionals on managing batch effects in RNA-seq data analysis. It covers the foundational concepts of batch effects, from their sources to their impact on differential expression and clustering. The guide details current methodologies, including established algorithms like ComBat-Seq and Harmony, and explores practical implementation in R. It further addresses critical troubleshooting and optimization strategies for complex scenarios, such as confounded designs and data integration. Finally, it presents a framework for the rigorous validation and benchmarking of correction performance using established metrics, empowering scientists to ensure the reliability of their genomic findings.

What Are Batch Effects and Why Do They Threaten Your RNA-seq Analysis?

In molecular biology, a batch effect occurs when non-biological factors in an experiment introduce systematic changes in the produced data. These effects can lead to inaccurate conclusions when their causes are correlated with one or more outcomes of interest, and they are particularly common in high-throughput sequencing experiments like RNA sequencing (RNA-seq) [1]. Batch effects arise from various technical sources, including differences in laboratory conditions, reagent lots, personnel, processing times, and the specific instruments used for the experiment [1]. In RNA-seq studies, these effects can obscure true biological differences, such as differentially expressed genes, and significantly reduce the statistical power of an analysis [2] [3]. This document outlines the principles, detection methods, and correction protocols for managing batch effects within the context of RNA-seq research.

Core Principles and Key Causes

Batch effects represent systematic technical variations unrelated to any biological variation recorded in a microarray or RNA-seq experiment [1]. They are a form of confounding variable that, if unaddressed, can compromise data integrity and lead to spurious scientific findings.

The following table summarizes the primary technical sources of batch effects in RNA-seq workflows:

Table 1: Common Causes of Batch Effects in RNA-seq Experiments

| Category | Specific Examples |

|---|---|

| Reagents & Kits | Different lots of sequencing kits, reverse transcriptase, or other reagents [1]. |

| Personnel & Workflow | Variations in technique between different researchers handling samples [1]. |

| Laboratory Conditions | Fluctuations in ambient temperature, humidity, or atmospheric ozone levels [1]. |

| Instrumentation | Different sequencing platforms (e.g., Illumina HiSeq vs. NovaSeq) or calibrations [1]. |

| Experimental Timing | Samples processed at different times (e.g., different days or months) [1] [4]. |

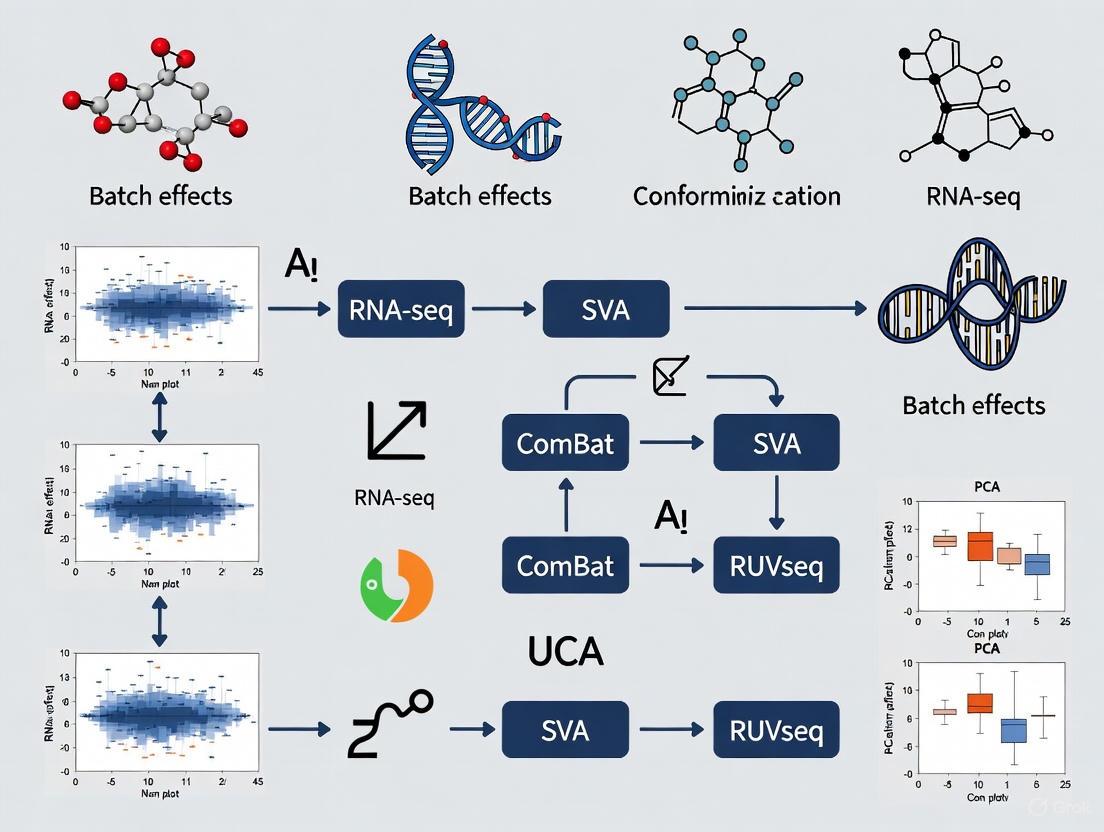

The diagram below illustrates how these technical factors introduce variation that is confounded with the biological signal of interest in a typical RNA-seq data generation workflow.

Detection and Quality Control

Before correction, batch effects must be detected and their impact assessed. A combination of visual and quantitative methods is recommended.

Visual Assessment

Principal Component Analysis (PCA) is the most common method for visualizing batch effects. In an uncorrected PCA plot, samples often cluster by technical batch rather than by biological group when a strong batch effect is present [4].

Quality Score Analysis: Machine-learning-derived quality scores (e.g., P_low, the predicted probability that a sample is of low quality) can be used to detect batches. Significant differences in quality scores between processing groups indicate a batch effect that is correlated with technical quality [4].
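The association between quality scores and batch can be tested with a Kruskal-Wallis test, as listed in Table 2. The following is a minimal sketch in plain Python, not the implementation used in [4]: the function names and the example scores are hypothetical, and the closed-form p-value holds only for exactly three batches (a chi-square distribution with two degrees of freedom).

```python
import math

def kruskal_wallis_h(groups):
    """Tie-corrected Kruskal-Wallis H statistic for a list of score lists (one per batch)."""
    pooled = sorted((value, g) for g, values in enumerate(groups) for value in values)
    n = len(pooled)
    rank_sums = [0.0] * len(groups)
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0          # mean of the ranks i+1 .. j shared by tied values
        for k in range(i, j):
            rank_sums[pooled[k][1]] += avg_rank
        t = j - i
        tie_term += t ** 3 - t
        i = j
    h = 12.0 / (n * (n + 1)) * sum(r * r / len(g) for r, g in zip(rank_sums, groups)) - 3 * (n + 1)
    # Assumes the scores are not all identical (the correction would be zero).
    return h / (1.0 - tie_term / (n ** 3 - n))

def p_value_three_groups(h):
    """Chi-square survival function with 2 degrees of freedom; valid for three batches only."""
    return math.exp(-h / 2.0)

# Hypothetical P_low scores for three processing batches.
scores_by_batch = [
    [0.10, 0.12, 0.15, 0.11],
    [0.14, 0.13, 0.16, 0.18],
    [0.55, 0.60, 0.48, 0.52],
]
h_stat = kruskal_wallis_h(scores_by_batch)
p_val = p_value_three_groups(h_stat)
```

With these made-up scores H is about 8.77 and the p-value about 0.012, so the third batch's higher P_low values would be flagged as a quality-associated batch effect.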

Quantitative Metrics

The following quantitative measures help in evaluating the extent of batch effects and the success of correction methods:

- Clustering Metrics: Metrics such as the Gamma, Dunn1, and Within-Between Ratio (WbRatio) can be calculated from PCA plots to evaluate the separation of biological groups versus technical batches [4].

- Differentially Expressed Genes (DEGs): An implausibly low or high number of DEGs between biological groups known to be different can indicate that batch effects are confounding the analysis. A successful correction should lead to a more biologically plausible number of DEGs [4].

- Dispersion Factors: In methods like ComBat-ref, the dispersion of the count distribution within each batch is a key parameter. Batches with markedly different dispersions indicate a need for correction [3].
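As an illustration of the clustering metrics above, the sketch below computes the classic Dunn index (smallest between-group distance divided by largest within-group diameter) on principal-component coordinates, once with biological labels and once with batch labels. It assumes that "Dunn1" in [4] refers to this classic definition; the coordinates, labels, and function name are hypothetical.

```python
import math
from itertools import combinations

def dunn_index(points, labels):
    """Smallest distance between groups divided by the largest distance within a group."""
    min_between = math.inf
    max_within = 0.0
    for (p, label_p), (q, label_q) in combinations(zip(points, labels), 2):
        d = math.dist(p, q)
        if label_p == label_q:
            max_within = max(max_within, d)
        else:
            min_between = min(min_between, d)
    # Assumes at least one group has two or more distinct points.
    return min_between / max_within

# Hypothetical (PC1, PC2) coordinates for four samples.
pcs = [(0.0, 0.0), (0.0, 1.0), (5.0, 0.0), (5.0, 1.0)]
condition = ["ctrl", "ctrl", "trt", "trt"]
batch = ["b1", "b2", "b1", "b2"]

dunn_condition = dunn_index(pcs, condition)
dunn_batch = dunn_index(pcs, batch)
```

A high value for the condition labels together with a low value for the batch labels is the desired pattern; the reverse suggests that batch, not biology, drives the main axes of variation.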

Table 2: Summary of Key Batch Effect Detection Methods

| Method | Description | Key Output/Statistic |

|---|---|---|

| Principal Component Analysis (PCA) | Unsupervised visualization of the largest sources of variation in the dataset. | 2D/3D plot showing clustering by batch or biological group. |

| Quality Score Analysis | Uses a machine-learning model to predict sample quality and tests for association with batch. | P_low score; Kruskal-Wallis test for differences between batches [4]. |

| Data-Adaptive Shrinkage & Clustering (DASC) | A non-parametric algorithm to detect hidden batch factors without prior knowledge of batches [5]. | Estimated batch factors for use in downstream correction. |

| Dispersion Analysis | Compares the overdispersion of read counts across different batches. | Batch-specific dispersion parameters (e.g., λi) [3]. |

Correction Methods and Experimental Design

Once detected, batch effects can be mitigated through careful experimental design and statistical correction.

Statistical Correction Methods

Multiple computational methods have been developed to remove batch effects from RNA-seq count data. The choice of method depends on the data structure and whether batch information is known.

For Known Batches:

- ComBat-seq: Uses an empirical Bayes framework within a negative binomial (NB) model to adjust for batch effects, preserving the integer nature of count data [3] [6].

- ComBat-ref: An advanced version of ComBat-seq that selects the batch with the smallest dispersion as a reference and adjusts all other batches toward it, improving sensitivity and specificity in differential expression analysis [2] [3].

- Covariate Inclusion: Batch can be included as a covariate in the generalized linear models (GLMs) of differential expression packages like DESeq2 and edgeR [3].

For Unknown Batches:

- Surrogate Variable Analysis (sva): Estimates hidden sources of variation (surrogate variables) from the data, which can then be used in downstream models to correct for unmodeled batch effects [1] [5].

- Data-Adaptive Shrinkage and Clustering (DASC): A non-parametric algorithm that uses fusion penalties and semi-non-negative matrix factorization (semi-NMF) to detect hidden batch factors without assuming all genes are affected equally [5].

Performance Comparison of Correction Methods

The performance of batch effect correction methods can be evaluated based on their ability to recover true biological signal while minimizing artifacts. Recent evaluations highlight trade-offs between different approaches.

Table 3: Comparison of Select Batch Effect Correction Methods for RNA-seq Data

| Method | Underlying Model | Key Feature | Considerations |

|---|---|---|---|

| ComBat-seq | Empirical Bayes / Negative Binomial | Preserves integer count data; good for downstream DE analysis with edgeR/DESeq2 [3]. | Statistical power can be lower when batch dispersions vary greatly [3]. |

| ComBat-ref | Negative Binomial / GLM | Selects lowest-dispersion batch as reference; high sensitivity & controlled FPR [2] [3]. | Potential for a slight increase in false positives, but often acceptable [3]. |

| sva | Linear Model / SVD | Does not require known batches; estimates latent surrogate variables [1] [5]. | Orthogonality assumptions may not hold in real data; can remove biological signal [5]. |

| DASC | Non-parametric / semi-NMF | Detects hidden batches without regression; data-adaptive per-gene adjustment [5]. | Demonstrates power in identifying subtle batch effects missed by other methods [5]. |

| Harmony | (Not covered in detail for RNA-seq) | - | While highly effective for single-cell RNA-seq [6], its performance for bulk RNA-seq is less documented. |

Important Note on Over-Correction: A significant risk in batch effect correction is the unintentional removal of true biological variation. Methods should be applied carefully, and results should be validated biologically where possible [1] [4].

The Role of Experimental Design

Proactive experimental design is the most effective strategy for managing batch effects.

- Randomization: Whenever possible, samples from different biological conditions should be randomly distributed across processing batches [7].

- Balancing: Ensure that each batch contains a balanced representation of all biological groups of interest.

- Advanced Designs: When a completely randomized design is not feasible, reference panel designs (where a control sample is included in every batch) or chain-type designs (where batches are linked by shared biological samples) can be valid alternatives that allow for batch effect correction post-hoc [7].
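Randomization and balancing can be combined in a few lines. The sketch below is one possible scheme, not a prescribed procedure: it shuffles the samples of each condition and deals them round-robin across batches. The function names, sample identifiers, and seed are hypothetical.

```python
import random
from collections import Counter, defaultdict

def assign_batches(sample_conditions, n_batches, seed=0):
    """Randomly spread the samples of every condition as evenly as possible over batches."""
    rng = random.Random(seed)
    by_condition = defaultdict(list)
    for sample, condition in sample_conditions.items():
        by_condition[condition].append(sample)
    assignment = {}
    for condition in sorted(by_condition):
        samples = sorted(by_condition[condition])
        rng.shuffle(samples)                   # random order within the condition
        offset = rng.randrange(n_batches)      # random starting batch for the round-robin
        for i, sample in enumerate(samples):
            assignment[sample] = (offset + i) % n_batches
    return assignment

def batch_composition(assignment, sample_conditions):
    """Count samples per (batch, condition) pair to verify the balance."""
    return Counter((assignment[s], c) for s, c in sample_conditions.items())

# Hypothetical study: six controls and six treated samples, three batches.
samples = {f"ctrl_{i}": "ctrl" for i in range(6)}
samples.update({f"trt_{i}": "trt" for i in range(6)})
layout = assign_batches(samples, n_batches=3, seed=1)
composition = batch_composition(layout, samples)
```

Every batch ends up with two controls and two treated samples, so batch is not confounded with condition.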

The Scientist's Toolkit: Research Reagent Solutions

The following table lists key materials and computational tools essential for conducting batch effect-aware RNA-seq research.

Table 4: Essential Reagents and Tools for Batch Effect Management in RNA-seq

| Item | Function/Description | Example/Note |

|---|---|---|

| Stable Reagent Lots | Minimizes technical variation from differing chemical compositions. | Request a single, large lot for a multi-batch study [1]. |

| Spike-In Controls | Exogenous RNA added to samples to monitor technical performance. | ERCC (External RNA Controls Consortium) RNA Spike-In Mixes. |

| UMI (Unique Molecular Identifier) | Tags individual mRNA molecules to correct for PCR amplification bias. | Incorporated in library preparation kits (e.g., from 10x Genomics). |

| sva R Package | Bioconductor package for identifying and correcting for surrogate variables. | Used for correcting unknown batch effects [1] [4]. |

| ComBat-seq/ComBat-ref | R functions for batch effect correction of known batches in count data. | ComBat-seq is available as `ComBat_seq` in the 'sva' package; ComBat-ref is used through a separate implementation of the published algorithm [2] [3]. |

| DASC R Package | Bioconductor package for detecting hidden batch factors. | Implements the Data-Adaptive Shrinkage and Clustering algorithm [5]. |

| DESeq2 / edgeR | R packages for differential expression analysis. | Allow inclusion of "batch" as a covariate in the statistical model [3]. |

Detailed Protocol: Applying ComBat-ref for Batch Correction

This protocol provides a step-by-step guide for using the ComBat-ref method to correct for known batch effects in an RNA-seq count matrix.

Background and Principle

ComBat-ref builds on ComBat-seq by modeling RNA-seq counts with a negative binomial distribution. Its innovation lies in estimating a pooled dispersion parameter for each batch, selecting the batch with the smallest dispersion as a reference, and adjusting all other batches toward this reference. This approach maintains high statistical power for differential expression analysis, even when batch dispersions vary significantly [3].

Materials and Software Requirements

- Input Data: A raw count matrix (genes x samples) from an RNA-seq experiment. Sample information must include known batch labels and the biological condition of interest.

- Software: R statistical environment (version 4.0 or higher).

- Required R Packages: edgeR (for dispersion estimation and GLM fitting), and a compatible implementation of the ComBat-ref algorithm (as described in the research literature [3]).

Step-by-Step Procedure

Data Preparation and Normalization:

- Load the raw count matrix and sample metadata into R.

- Perform standard normalization for sequencing depth (e.g., using TMM normalization in edgeR). This is for the purpose of initial dispersion estimation.
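To make the idea of depth normalization concrete, the sketch below computes counts per million (CPM). This is a deliberately simpler stand-in for TMM, which additionally rescales library sizes by a trimmed-mean factor that is not reproduced here. The function names and toy matrix are hypothetical.

```python
def library_sizes(counts):
    """counts[g][j] is the raw count of gene g in sample j; returns one total per sample."""
    n_samples = len(counts[0])
    return [sum(row[j] for row in counts) for j in range(n_samples)]

def counts_per_million(counts):
    """Scale each sample so that its counts sum to one million."""
    sizes = library_sizes(counts)
    return [[c * 1e6 / n for c, n in zip(row, sizes)] for row in counts]

# Toy matrix: sample 2 was sequenced twice as deeply as sample 1.
raw = [
    [10, 20],
    [90, 180],
]
cpm = counts_per_million(raw)
```

After scaling, both samples show identical values for each gene, so the twofold difference in sequencing depth no longer looks like a difference in expression.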

Dispersion Estimation and Reference Batch Selection:

- Use edgeR to estimate a dispersion parameter ($\lambda_i$) for each batch separately by pooling genes within the batch.

- Compare the dispersion values across all batches.

- Select the batch with the smallest dispersion parameter as the reference batch.
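The reference-selection logic can be sketched as follows. This example substitutes a crude method-of-moments estimate (from the negative binomial relation variance = mean + dispersion × mean²) for edgeR's likelihood-based estimation, so it illustrates the selection rule only. The function names and toy counts are hypothetical.

```python
from statistics import mean, variance

def pooled_dispersion(batch_counts):
    """Average per-gene moment estimate of the NB dispersion within one batch.

    batch_counts[g] holds depth-normalized counts of gene g across the batch's samples.
    """
    estimates = []
    for gene_counts in batch_counts:
        m = mean(gene_counts)
        if m > 0 and len(gene_counts) > 1:
            v = variance(gene_counts)                        # sample variance
            estimates.append(max((v - m) / (m * m), 0.0))    # clamp underdispersed genes at 0
    return mean(estimates)

def select_reference(batches):
    """Return the name of the batch with the smallest pooled dispersion, plus all estimates."""
    dispersions = {name: pooled_dispersion(counts) for name, counts in batches.items()}
    return min(dispersions, key=dispersions.get), dispersions

# Toy data: two genes, three samples per batch; batch B is much noisier than batch A.
toy_batches = {
    "A": [[100, 102, 98], [50, 49, 51]],
    "B": [[100, 150, 50], [50, 80, 20]],
}
reference, dispersion_by_batch = select_reference(toy_batches)
```

Here batch A has the smaller pooled dispersion and would serve as the reference toward which batch B is adjusted.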

Generalized Linear Model (GLM) Fitting:

- A GLM is fit for each gene. The model is typically specified as:

$$\log(\mu_{ijg}) = \alpha_g + \gamma_{ig} + \beta_{c_j g} + \log(N_j)$$

where:

- $\mu_{ijg}$ is the expected count for gene g in sample j from batch i.

- $\alpha_g$ is the global background expression for gene g.

- $\gamma_{ig}$ is the effect of batch i on gene g.

- $\beta_{c_j g}$ is the effect of the biological condition $c_j$ of sample j on gene g.

- $N_j$ is the library size for sample j [3].

Data Adjustment:

- For all batches i that are not the reference batch, the adjusted gene expression level is calculated as:

$$\log(\tilde{\mu}_{ijg}) = \log(\mu_{ijg}) + \gamma_{\mathrm{ref},g} - \gamma_{ig}$$

where $\gamma_{\mathrm{ref},g}$ is the batch effect parameter for the reference batch [3].

- The dispersion parameter for the adjusted data is set to match that of the reference batch ($\tilde{\lambda}_i = \lambda_{\mathrm{ref}}$).
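Because the model is log-linear, the adjustment is a multiplicative rescaling of the fitted mean: the sample's own batch effect is swapped for that of the reference batch. The sketch below checks this numerically. The function names and parameter values are hypothetical, and in practice the coefficients come from the fitted GLM.

```python
import math

def expected_count(alpha_g, gamma_ig, beta_cg, lib_size):
    """Fitted mean from log(mu) = alpha_g + gamma_ig + beta_cg + log(N_j)."""
    return lib_size * math.exp(alpha_g + gamma_ig + beta_cg)

def adjust_mean(mu, gamma_ig, gamma_ref_g):
    """Apply log(mu_adj) = log(mu) + gamma_ref_g - gamma_ig on the count scale."""
    return mu * math.exp(gamma_ref_g - gamma_ig)

# Hypothetical coefficients for one gene in one non-reference sample.
alpha_g, beta_cg, lib_size = -9.0, 0.3, 2_000_000
gamma_ig, gamma_ref_g = 0.4, -0.1

mu = expected_count(alpha_g, gamma_ig, beta_cg, lib_size)
mu_adjusted = adjust_mean(mu, gamma_ig, gamma_ref_g)
mu_if_in_reference = expected_count(alpha_g, gamma_ref_g, beta_cg, lib_size)
```

The adjusted mean equals the mean the sample would have had if it had been processed in the reference batch, while the condition effect and library size are left untouched.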

Count Adjustment:

- The final adjusted counts $\tilde{n}_{ijg}$ are calculated by matching the cumulative distribution function (CDF) of the original negative binomial distribution $\mathrm{NB}(\mu_{ijg}, \lambda_i)$ at the original count $n_{ijg}$ with the CDF of the adjusted distribution $\mathrm{NB}(\tilde{\mu}_{ijg}, \tilde{\lambda}_i)$ at the new count $\tilde{n}_{ijg}$ [3].

- This step ensures the output remains as integers, suitable for tools like DESeq2 and edgeR.
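The CDF-matching step can be sketched in plain Python as follows. This is a simplified illustration rather than the published implementation: it parameterizes the negative binomial by mean and dispersion (variance = mean + dispersion × mean²), sums probabilities directly, and returns the smallest adjusted count whose CDF reaches the original one. All function names and numbers are hypothetical.

```python
import math

def nb_pmf(k, mu, disp):
    """Negative binomial probability of count k given mean mu and dispersion disp."""
    r = 1.0 / disp
    log_p = (math.lgamma(k + r) - math.lgamma(r) - math.lgamma(k + 1)
             + r * math.log(r / (r + mu)) + k * math.log(mu / (r + mu)))
    return math.exp(log_p)

def nb_cdf(k, mu, disp):
    """P(X <= k) by direct summation (adequate for moderate counts)."""
    total = 0.0
    for x in range(k + 1):
        total += nb_pmf(x, mu, disp)
    return total

def match_count(n, mu, disp, mu_adj, disp_adj, max_count=100_000):
    """Smallest integer whose CDF under NB(mu_adj, disp_adj) reaches the CDF of n under NB(mu, disp)."""
    target = nb_cdf(n, mu, disp)
    total = 0.0
    for k in range(max_count + 1):
        total += nb_pmf(k, mu_adj, disp_adj)
        if total >= target - 1e-12:        # small tolerance for floating-point round-off
            return k
    return max_count

# Hypothetical gene: observed count 40 in a batch whose fitted mean is 50.
unchanged = match_count(40, mu=50.0, disp=0.1, mu_adj=50.0, disp_adj=0.1)
shifted_up = match_count(40, mu=50.0, disp=0.1, mu_adj=100.0, disp_adj=0.1)
tightened = match_count(100, mu=50.0, disp=0.5, mu_adj=50.0, disp_adj=0.05)
```

When the adjusted distribution equals the original, the count is returned unchanged. A larger adjusted mean moves the count up, and a smaller adjusted dispersion pulls an extreme count back toward the mean. The result is always an integer.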

Validation and Downstream Analysis

- Visual Inspection: Generate PCA plots on the corrected count matrix. Successful correction should show samples clustering primarily by biological condition, not by batch.

- Differential Expression: Perform differential expression analysis on the corrected data using standard pipelines. The results should be biologically interpretable and, if possible, validated with external data.

- Clustering Metrics: Calculate internal clustering metrics (e.g., Gamma, Dunn1) to quantitatively assess the improvement in biological group separation post-correction [4].

Batch effects are systematic technical variations in RNA sequencing (RNA-seq) data that are unrelated to the biological subject of the study. These non-biological variations compromise data reliability, obscure true biological differences, and can lead to misleading outcomes and irreproducible research [2] [8]. In clinical and drug development contexts, failure to address batch effects has even led to incorrect patient classifications and treatment regimens [8]. This Application Note details the common sources of batch effects—sequencing runs, reagents, protocols, and personnel—and provides standardized protocols for their mitigation within a comprehensive batch effect correction framework for RNA-seq research.

Batch effects arise from technical inconsistencies throughout the RNA-seq workflow. A "batch" refers to a group of samples processed differently from other groups in the experiment [9]. The table below summarizes the primary sources, their specific causes, and measurable impacts on data.

Table 1: Common Sources of Batch Effects in RNA-Seq and Their Data Impacts

| Source Category | Specific Examples of Variation | Potential Impact on RNA-Seq Data |

|---|---|---|

| Sequencing Runs | Different flow cells, sequencing lanes, or machines [9] [8]. | Systematic shifts in read depth, base quality scores, or sequence composition. |

| Reagents | Different lots of reverse transcriptase, Tn5 transposase, or oligo-dT primers [10] [8]. | Variations in library complexity, insert size, and 3'-end bias. |

| Protocols | Use of different RNA extraction kits, library prep protocols (e.g., SHERRY vs. standard), or single-cell vs. single-nuclei protocols [11] [10]. | Significant differences in gene detection sensitivity, intronic vs. exonic read coverage, and overall expression profiles. |

| Personnel | Different technicians handling samples, with minor variations in pipetting, incubation timing, or tissue dissociation techniques [9]. | Increased technical noise and variability, particularly in complex protocols. |

Experimental Protocols for Batch Effect Mitigation

A multi-layered strategy combining rigorous experimental design with computational correction is essential for robust RNA-seq data generation and analysis.

Pre-Analysis: Experimental Design and Wet-Lab Protocols

Preventing batch effects at the source is the most effective strategy.

A. Proactive Experimental Design

- Randomization: Assign samples from different biological groups (e.g., treatment and control) across different batches in a randomized manner.

- Blocking: If full randomization is impossible, use a blocked design where each batch contains a complete set of biological conditions, making the batch effect orthogonal to the biological effect of interest [8].

- Replication: Include technical replicates (the same sample processed in different batches) to empirically estimate the batch effect [8].
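Technical replicates give a direct empirical estimate of the batch effect. As a minimal sketch, assuming each replicate sample was processed in both batches and that counts are already depth-normalized, the per-gene shift can be summarized as a mean log2 ratio. The function name, pseudocount, and toy values are hypothetical.

```python
import math
from statistics import mean

def batch_log_ratio(counts_batch1, counts_batch2, pseudo=1.0):
    """Per-gene mean log2(batch2 / batch1) over paired technical replicates.

    Both arguments map gene -> list of normalized counts, where position k
    refers to the same biological sample processed in each batch.
    """
    return {
        gene: mean(
            math.log2((b + pseudo) / (a + pseudo))
            for a, b in zip(counts_batch1[gene], counts_batch2[gene])
        )
        for gene in counts_batch1
    }

# Toy data: two replicate samples, each processed in both batches.
batch1 = {"geneA": [99, 199], "geneB": [49, 79]}
batch2 = {"geneA": [199, 399], "geneB": [49, 79]}
shift = batch_log_ratio(batch1, batch2)
```

In this toy example geneA is systematically doubled in the second batch (log2 ratio of 1), while geneB is unaffected (log2 ratio of 0). Because the same samples appear in both batches, the shift cannot be biological.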

B. Standardized Laboratory Protocols

The following protocol for SHERRY-based RNA-seq library preparation minimizes batch effects through consistency and the use of internal controls.

Table 2: Key Research Reagent Solutions for RNA-Seq Library Prep

| Reagent / Material | Function | Critical Control for Batch Effects |

|---|---|---|

| RQ1 RNase-Free DNase | Digests residual genomic DNA to prevent false signals. | Ensures consistent removal of gDNA across all samples and batches, preventing technical bias [10]. |

| VAHTS RNA Clean Beads | Purifies RNA and cDNA; performs size selection. | Using the same bead lot and strict 1.8x purification ratios ensures reproducible recovery and library yield [10]. |

| Tn5 Transposase (Loaded) | Enzymatically fragments and tags cDNA with sequencing adapters. | Using a single, pre-assembled lot of Tn5 transposome ensures uniform tagmentation efficiency, a major source of variability [10]. |

| Oligo-dT Primer | Captures polyadenylated RNA during reverse transcription. | Consistent primer quality and lot are critical for maintaining stable 3'-end coverage profiles across batches [10]. |

Protocol: SHERRY Library Preparation from Low-Volume Total RNA Input

This protocol is optimized for 200 ng of total RNA and highlights steps critical for batch-to-batch consistency [10].

Genomic DNA Digestion and RNA Purification

- Objective: Remove gDNA without degrading RNA.

- Steps:

a. Set up a 10 μL digestion reaction with 1x Reaction Buffer, 0.2 U/μL RQ1 RNase-Free DNase, and 1 μg of total RNA.

b. Incubate at 37°C for 30 minutes.

c. Terminate the reaction with 1 μL of RQ1 DNase Stop Solution and incubate at 65°C for 10 minutes.

d. Purify the RNA using RNA Clean Beads at a 1.8x ratio. Elute in 10 μL nuclease-free water.

- Batch Effect Mitigation: Using the same DNase and bead lots across all batches is critical. Adhere strictly to incubation times and temperatures.

Reverse Transcription and Hybrid Tagmentation

- Objective: Generate RNA-cDNA hybrids and fragment them in a consistent manner.

- Steps:

a. Perform reverse transcription using an oligo-dT primer.

b. Directly tagment the RNA-cDNA hybrid using a pre-assembled, loaded Tn5 transposome.

- Batch Effect Mitigation: The use of a single, large batch of in-house assembled or commercially sourced Tn5 transposome is highly recommended to minimize a major source of reagent-based batch effects [10].

Library Generation and QC

- Objective: Amplify and complete the sequencing library.

- Steps:

a. Amplify the tagmented product via PCR with a low cycle number.

b. Perform a final bead-based clean-up.

c. Assess library quality and quantity using methods like Agilent Bioanalyzer.

- Batch Effect Mitigation: Process all samples for the entire project using the same PCR reagents and thermocycler program. Pool libraries from different conditions and batches before sequencing.

Diagram 1: RNA-seq library prep workflow. Key reagent-controlled steps highlighted.

Post-Analysis: Computational Batch Effect Correction

When batch effects persist despite careful experimental design, computational correction is required. The choice of method depends on the data type (bulk vs. single-cell) and the nature of the batch effect.

Table 3: Selection Guide for Batch Effect Correction Methods

| Method | Best For | Principle | Key Considerations |

|---|---|---|---|

| ComBat-ref [2] | Bulk RNA-seq count data with a clear reference batch. | Empirical Bayes framework with a negative binomial model; adjusts batches towards a low-dispersion reference. | Preserves biological signal in the reference. Superior performance in differential expression analysis. |

| Harmony [6] | Single-cell RNA-seq (scRNA-seq) data. | Iteratively removes batch-specific effects in a low-dimensional embedding while preserving biological structure. | In a 2025 benchmark, Harmony was the only method that performed well without introducing detectable artifacts [6]. |

| sysVI (VAMP + CYC) [11] | scRNA-seq with substantial batch effects (e.g., cross-species, different protocols). | Conditional Variational Autoencoder (cVAE) using VampPrior and cycle-consistency constraints. | Specifically designed for strong "system"-level confounders where other methods fail or remove biological signal. |

| Order-Preserving Methods [12] | Analyses where maintaining original gene-gene correlation structures is critical. | Monotonic deep learning networks that maintain the relative ranking of gene expression levels during correction. | Prevents disruption of biologically meaningful patterns like differential expression and co-expression. |

Diagram 2: Decision workflow for selecting a batch effect correction method.

Mitigating batch effects originating from sequencing runs, reagents, protocols, and personnel requires a holistic strategy. This involves proactive experimental design, stringent wet-lab protocols with standardized reagents, and the careful application of computational correction methods tailored to the data type and research question. By systematically addressing these sources, researchers can significantly enhance the reliability, reproducibility, and biological interpretability of their RNA-seq data, thereby strengthening the foundation for scientific discovery and drug development.

Batch effects are technical variations introduced during different experimental runs that are unrelated to the biological signals of interest. In RNA-seq research, these effects represent a formidable challenge, particularly as studies grow in scale and complexity. When unaddressed, batch effects systematically compromise downstream analyses by introducing false discoveries in differential expression analysis and creating misleading clusters in cell type identification [8]. The consequences extend beyond reduced statistical power, potentially leading to incorrect biological conclusions and irreproducible findings that can misdirect entire research fields [13] [8]. This application note examines the mechanistic basis for how batch effects generate these analytical artifacts and provides validated protocols to mitigate their impact within the broader context of batch effect correction methodologies for RNA-seq research.

The Mechanisms of Misleading Analyses

How Batch Effects Induce False Discoveries in Differential Expression

Batch effects confound differential expression analysis by introducing systematic technical variation that can be misattributed to biological phenomena. The core issue arises when batch effects become correlated with biological conditions of interest, making it statistically challenging to disentangle technical artifacts from true biological signals [8]. For instance, if all control samples are processed in one batch and all treatment samples in another, the technical differences between batches will be indistinguishable from treatment-induced expression changes.
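The fully confounded case can be demonstrated with a design matrix: when batch and condition coincide, their indicator columns are identical and the model cannot estimate both effects. The sketch below builds a treatment-coded design matrix and checks its rank with Gaussian elimination. The function names and sample layouts are hypothetical.

```python
def design_matrix(batches, conditions):
    """Intercept plus indicator columns for every non-baseline batch and condition level."""
    batch_levels = sorted(set(batches))[1:]
    condition_levels = sorted(set(conditions))[1:]
    return [
        [1.0]
        + [float(b == level) for level in batch_levels]
        + [float(c == level) for level in condition_levels]
        for b, c in zip(batches, conditions)
    ]

def matrix_rank(rows, tol=1e-9):
    """Rank by Gaussian elimination with partial pivoting."""
    m = [row[:] for row in rows]
    rank = 0
    for col in range(len(m[0])):
        if rank == len(m):
            break
        pivot = max(range(rank, len(m)), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < tol:
            continue                          # no usable pivot in this column
        m[rank], m[pivot] = m[pivot], m[rank]
        for r in range(rank + 1, len(m)):
            factor = m[r][col] / m[rank][col]
            m[r] = [x - factor * y for x, y in zip(m[r], m[rank])]
        rank += 1
    return rank

def effects_separable(batches, conditions):
    """True when batch and condition effects can be estimated simultaneously."""
    x = design_matrix(batches, conditions)
    return matrix_rank(x) == len(x[0])

conditions = ["ctrl", "ctrl", "trt", "trt"]
confounded = effects_separable(["b1", "b1", "b2", "b2"], conditions)
balanced = effects_separable(["b1", "b2", "b1", "b2"], conditions)
```

The confounded layout yields a rank-deficient matrix (`confounded` is `False`), whereas the balanced layout is full rank (`balanced` is `True`). No statistical correction can recover the treatment effect in the first case.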

In single-cell RNA-seq (scRNA-seq) analyses, a prevalent source of false discoveries stems from improperly accounting for biological replicates. Methods that treat individual cells as independent observations, rather than aggregating cells within the same biological replicate into "pseudobulks," dramatically increase false discovery rates (FDR) because they ignore the inherent statistical dependence between cells from the same donor or sample [14] [13]. This pseudoreplication problem artificially inflates confidence in differentially expressed genes (DEGs), as demonstrated in a reanalysis of Alzheimer's disease data where a pseudobulk approach identified 549 times fewer DEGs at an FDR of 0.05 compared to the original cell-level analysis [13].

Single-cell DE methods also exhibit a systematic bias toward highly expressed genes, incorrectly identifying them as differentially expressed even when their expression remains unchanged. This phenomenon was experimentally validated using spike-in RNAs, where single-cell methods falsely identified many abundant spike-ins as differentially expressed while pseudobulk methods avoided this bias [14].

The Generation of Misleading Clusters in Cell Type Identification

In clustering analyses, batch effects can cause cells of the same type from different batches to separate artificially while potentially causing biologically distinct cell types to merge if their batch-specific technical signatures overwhelm subtle biological differences. The fundamental challenge is that clustering algorithms, which typically rely on distance measures in high-dimensional space, cannot distinguish between technical and biological sources of variation [15].

This problem is exacerbated by the practice of "double dipping": using the same dataset both to identify clusters and to test for differential expression between them. When algorithms over-cluster, downstream differential expression analyses can produce misleading results because the same data is used to define groups and test hypotheses about them [16]. Batch effects compound this problem by introducing systematic variations that clustering algorithms may interpret as biologically meaningful population structure.

Table 1: Performance Comparison of Differential Expression Methods in Ground-Truth Tests

| Method Category | Representative Tools | Concordance with Bulk RNA-seq (AUCC) | False Discovery Rate Control | Bias Toward Highly Expressed Genes |

|---|---|---|---|---|

| Pseudobulk Methods | edgeR, DESeq2, limma | High (0.80-0.95) [14] | Appropriate control [14] | Minimal bias [14] |

| Single-Cell Methods | Wilcoxon rank-sum test, etc. | Low (0.40-0.65) [14] | Inflated (hundreds of false DEGs) [14] | Strong bias [14] |

| Mixed Models | Poisson mixed models | Variable | Moderate inflation [13] | Moderate bias [13] |

Diagram 1: Mechanism of how batch effects confound downstream analysis when correlated with biological conditions of interest.

Quantitative Evidence of Impact

The impact of batch effects on analytical validity is not merely theoretical. A comprehensive benchmark study evaluating differential expression methods across eighteen "gold standard" datasets with known ground truths demonstrated that the most widely used single-cell methods discovered hundreds of differentially expressed genes in the absence of any biological differences [14]. This systematic false discovery problem was universal across 46 scRNA-seq datasets encompassing disparate species, cell types, technologies, and biological perturbations.

The Alzheimer's disease research example provides a sobering case study of batch effect consequences. The original analysis identified 1,031 DEGs associated with Alzheimer's pathology using a method that treated individual cells as independent replicates [13]. However, when reanalyzed with a pseudobulk approach that properly accounted for biological replicates, only 26 DEGs were identified, a reduction of roughly 97% in claimed findings [13]. This dramatic discrepancy highlights how analytical approaches that ignore batch effects and proper replicate structure can generate large numbers of false discoveries that misdirect subsequent research.

In batch correction benchmarking studies, the choice of correction method significantly impacts the ability to recover true biological signals. Methods like Harmony and Seurat RPCA consistently rank among top performers for removing batch effects while preserving biological variation [17]. Novel approaches like Adversarial Information Factorization have shown promise in complex scenarios with low signal-to-noise ratios or batch-specific cell types [15].

Table 2: Performance of Batch Correction Methods Across Different Scenarios

| Method | Underlying Approach | Optimal Use Case | Limitations |

|---|---|---|---|

| Harmony [17] | Mixture model | Multiple batches from single laboratory | Requires batch labels |

| Seurat RPCA [17] | Reciprocal PCA | Multiple laboratories with different microscopes | Allows more heterogeneity between datasets |

| ComBat-ref [3] | Negative binomial model with reference batch | RNA-seq with dispersion differences | Selects batch with smallest dispersion as reference |

| Adversarial Information Factorization [15] | Deep learning with conditional VAE | Low signal-to-noise ratio, batch-specific cell types | Computational intensity |

| MNN Correct [17] | Mutual nearest neighbors | Batches with shared cell types | Requires at least one shared cell type across batches |

Recommended Experimental Protocols

Pseudobulk Differential Expression Analysis

Purpose: To properly account for biological replicates and minimize false discoveries in single-cell RNA-seq differential expression analysis.

Materials:

- Single-cell RNA-seq count matrix

- Sample metadata including biological replicate identifiers

- Statistical software (R/Bioconductor)

Procedure:

- Aggregate counts by biological replicate: Sum raw counts across all cells belonging to the same biological replicate (e.g., same patient, same animal) within each experimental condition to create a pseudobulk count matrix [14] [13].

- Quality filtering: Remove genes with low expression across replicates (e.g., genes with fewer than 10 counts across all samples).

- Normalization: Apply standard bulk RNA-seq normalization (e.g., TMM in edgeR or median-of-ratios in DESeq2) to the pseudobulk count matrix [14].

- Model fitting: Use established bulk RNA-seq tools (edgeR, DESeq2, or limma) to test for differential expression between conditions while accounting for any remaining batch effects as covariates in the design matrix [14].

- Result interpretation: Focus on genes that show both statistical significance and biological relevance, considering effect sizes (fold changes) in addition to p-values.
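The aggregation and filtering steps of this procedure can be sketched in a few lines. The example below uses nested dictionaries as a stand-in for a sparse count matrix. The function names, cell and donor identifiers, and counts are hypothetical, and the resulting matrix would then be passed to a bulk tool such as edgeR or DESeq2.

```python
from collections import defaultdict

def pseudobulk(cell_counts, cell_to_replicate):
    """Sum raw counts over all cells that belong to the same biological replicate.

    cell_counts: cell id -> {gene: raw count}
    cell_to_replicate: cell id -> replicate id (e.g., donor)
    """
    totals = defaultdict(lambda: defaultdict(int))
    for cell, genes in cell_counts.items():
        replicate = cell_to_replicate[cell]
        for gene, count in genes.items():
            totals[replicate][gene] += count
    return {rep: dict(genes) for rep, genes in totals.items()}

def filter_low_counts(pseudobulk_counts, min_total=10):
    """Drop genes whose summed count across all replicates is below min_total."""
    gene_totals = defaultdict(int)
    for genes in pseudobulk_counts.values():
        for gene, count in genes.items():
            gene_totals[gene] += count
    keep = {g for g, total in gene_totals.items() if total >= min_total}
    return {rep: {g: c for g, c in genes.items() if g in keep}
            for rep, genes in pseudobulk_counts.items()}

# Toy data: four cells from two donors.
cells = {
    "cell1": {"geneA": 5, "geneB": 1},
    "cell2": {"geneA": 7, "geneB": 0},
    "cell3": {"geneA": 3, "geneB": 2},
    "cell4": {"geneA": 6, "geneB": 1},
}
donor_of = {"cell1": "donor1", "cell2": "donor1", "cell3": "donor2", "cell4": "donor2"}

pb = pseudobulk(cells, donor_of)
pb_filtered = filter_low_counts(pb, min_total=10)
```

After aggregation the unit of replication is the donor rather than the cell, which is what restores valid false discovery rate control in the downstream test.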

Validation: When possible, validate key findings using orthogonal methods such as proteomics, spike-in controls, or bulk RNA-seq from purified cell populations [14].

Diagram 2: Pseudobulk differential expression workflow for proper false discovery rate control by aggregating cells by biological replicate.

Batch Effect Correction Prior to Clustering

Purpose: To remove technical variation that would otherwise confound cell type identification through clustering.

Materials:

- Normalized single-cell RNA-seq data

- Batch metadata

- Computational resources for batch correction

Procedure:

- Batch effect assessment: Visualize data using PCA or UMAP colored by batch to identify whether batch effects are present [8].

- Method selection: Choose an appropriate batch correction method based on dataset characteristics (for example, Harmony or Seurat RPCA [17]; see Table 3).

- Correction application: Apply the selected method following developer guidelines, ensuring proper parameter specification.

- Quality control: Verify that batch effects have been removed by:

- Visual inspection of PCA/UMAP plots post-correction

- Quantitative metrics such as ASW (Average Silhouette Width) for batch mixing [17]

- Confirming that biological conditions remain separable

- Clustering: Perform clustering on the batch-corrected data using a preferred method (e.g., Louvain, Leiden).

- Validation: Use known marker genes to validate that clusters correspond to biologically meaningful cell types rather than batch artifacts.

Troubleshooting: If biological signal is lost during correction, adjust correction strength parameters or try an alternative method. If over-correction persists, consider using a method that allows for explicit specification of biological covariates to preserve.
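The ASW check in the quality-control step can be made concrete with a small sketch. It computes the average silhouette width of an embedding using batch labels and Euclidean distance: values near 1 mean batches form separate groups, while values near or below 0 mean batches are well mixed. The function name and toy coordinates are assumptions for illustration, and benchmarking packages may rescale the score differently.

```python
import math

def average_silhouette_width(points, labels):
    """Mean silhouette over all points, using Euclidean distance.

    points: list of coordinate tuples (e.g., PCA or UMAP coordinates)
    labels: list of group labels (here: batch); at least two groups required
    """
    groups = {}
    for idx, lab in enumerate(labels):
        groups.setdefault(lab, []).append(idx)

    scores = []
    for i, lab in enumerate(labels):
        own = [j for j in groups[lab] if j != i]
        if not own:  # singleton group: silhouette is defined as 0
            scores.append(0.0)
            continue
        # a: mean distance to the other members of the same group
        a = sum(math.dist(points[i], points[j]) for j in own) / len(own)
        # b: smallest mean distance to the members of any other group
        b = min(
            sum(math.dist(points[i], points[j]) for j in members) / len(members)
            for other, members in groups.items()
            if other != lab
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return sum(scores) / len(scores)

# Hypothetical 2-D embeddings of four cells from batches A and B
batch = ["A", "A", "B", "B"]
separated = [(0, 0), (0, 1), (10, 0), (10, 1)]   # batches far apart
mixed = [(0, 0), (10, 1), (0, 1), (10, 0)]       # batches interleaved
asw_separated = average_silhouette_width(separated, batch)
asw_mixed = average_silhouette_width(mixed, batch)
```

Running the same function with cell-type labels instead of batch labels gives the complementary check that biological groups remain separable after correction.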

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions for Batch Effect Management

| Tool/Category | Specific Examples | Function | Considerations |

|---|---|---|---|

| Differential Expression Tools | edgeR, DESeq2, limma [14] | Pseudobulk differential expression analysis | Use after cell aggregation by biological replicate |

| Batch Correction Algorithms | Harmony, Seurat RPCA [17] | Remove technical variation while preserving biology | Performance varies by scenario; no one-size-fits-all |

| Spike-in Controls | ERCC RNA spike-in mixes [14] | Distinguish technical from biological variation | Add to cell lysis buffer before library preparation |

| Quality Control Metrics | Mitochondrial percentage, detected genes per cell [13] | Identify low-quality cells | Establish thresholds based on data quality |

| Clustering Validation | Recall [16] | Control for double dipping in clustering | Applicable to various clustering algorithms |

| Reference-Based Correction | ComBat-ref [3] | Adjust batches toward low-dispersion reference | Particularly effective for RNA-seq count data |

Batch effects represent a fundamental challenge in RNA-seq research that directly impacts the validity of downstream analyses. When unaddressed, they systematically generate false discoveries in differential expression testing and create misleading clusters in cell type identification, potentially directing research efforts down unproductive paths. The protocols and methodologies presented here provide a framework for recognizing, addressing, and mitigating these effects. By implementing pseudobulk approaches for differential expression, selecting appropriate batch correction strategies for clustering, and maintaining rigorous analytical practices throughout, researchers can substantially improve the reliability and reproducibility of their RNA-seq findings. As batch effect correction methodologies continue to evolve, maintaining focus on these core principles will ensure that biological signals remain distinct from technical artifacts in increasingly complex experimental designs.

In high-throughput RNA sequencing (RNA-seq) research, batch effects represent one of the most challenging technical hurdles. These are systematic variations that arise not from biological differences but from technical factors throughout the experimental process, including different sequencing runs, reagent lots, personnel, time-related factors, or environmental conditions [18] [19]. If undetected, these artifacts can confound downstream analysis, leading to false discoveries in differential expression, erroneous clustering, and compromised meta-analyses [18] [19].

Principal Component Analysis (PCA) serves as a powerful, unsupervised dimensionality reduction technique for initial data exploration and quality control. It transforms high-dimensional gene expression data into a lower-dimensional space composed of principal components (PCs), where the first component (PC1) explains the most variance, the second (PC2) the next most, and so on [20] [21]. By visualizing the first two or three PCs, researchers can rapidly diagnose batch effects before applying corrective algorithms, observing whether samples cluster by technical batches rather than by biological conditions of interest [22] [23]. This diagnostic step is crucial for ensuring the reliability and reproducibility of subsequent findings in translational research and drug development [24].

Theoretical Foundation: How PCA Reveals Batch Effects

The Mechanics of Principal Component Analysis

PCA operates by identifying the principal components—orthogonal linear combinations of the original genes—that capture the maximum possible variance in the dataset. Each subsequent component is constrained to be uncorrelated with previous ones and captures the next highest amount of remaining variance [21]. The resulting components provide a lower-dimensional representation that preserves the major patterns within the data. When applied to RNA-seq data (typically a samples × genes matrix), PCA summarizes the predominant patterns of gene expression variation across all samples [20] [25].
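To make the mechanics concrete, the following sketch extracts the first principal component by power iteration on the gene covariance matrix and reports the fraction of variance it explains. It is a teaching example in plain Python under the assumption of a tiny, already normalized samples × genes matrix; real analyses would use `prcomp()` or an SVD-based routine, and the function name `first_pc` and the toy values are hypothetical.

```python
import math

def first_pc(matrix, n_iter=200):
    """Return (loadings, scores, fraction_of_variance) for PC1.

    matrix: list of samples, each a list of normalized expression values.
    """
    n, p = len(matrix), len(matrix[0])
    means = [sum(row[j] for row in matrix) / n for j in range(p)]
    X = [[row[j] - means[j] for j in range(p)] for row in matrix]  # center each gene

    # Sample covariance matrix of the genes (p x p)
    cov = [[sum(X[i][a] * X[i][b] for i in range(n)) / (n - 1) for b in range(p)]
           for a in range(p)]

    # Power iteration converges to the eigenvector with the largest eigenvalue
    v = [float(j + 1) for j in range(p)]
    for _ in range(n_iter):
        w = [sum(cov[a][b] * v[b] for b in range(p)) for a in range(p)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]

    eigenvalue = sum(v[a] * sum(cov[a][b] * v[b] for b in range(p)) for a in range(p))
    total_var = sum(cov[a][a] for a in range(p))
    scores = [sum(X[i][j] * v[j] for j in range(p)) for i in range(n)]
    return v, scores, eigenvalue / total_var

# Hypothetical data: samples 1-2 from batch 1, samples 3-4 from batch 2,
# with a batch shift of about +4 in the first two genes
expr = [
    [1.0, 2.0, 3.0],
    [1.2, 2.1, 3.0],
    [5.0, 6.0, 3.1],
    [5.1, 6.2, 2.9],
]
loadings, scores, frac = first_pc(expr)
```

Here PC1 captures almost all of the variance, and the scores of the two batches fall on opposite sides of zero, which is the pattern a PCA plot would reveal as a batch effect.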

Interpreting PCA Plots for Batch Effect Diagnosis

In a typical PCA plot for batch effect diagnosis, each point represents a single sample positioned according to its scores on two principal components (e.g., PC1 vs. PC2). The spatial arrangement reveals underlying sample similarities and differences [22]. When batch effects are present, samples processed together technically (e.g., in the same sequencing run) will exhibit similar expression patterns driven by these non-biological factors, causing them to cluster together distinctly from samples in other batches [18] [23]. Conversely, in the absence of strong batch effects, samples should primarily group by their biological conditions (e.g., treatment vs. control, different tissue types) if those conditions account for the greatest expression variance [22].

Experimental Protocol: PCA Workflow for Batch Effect Detection

Sample Preparation and Experimental Design

Table 1: Key Research Reagent Solutions for RNA-seq Batch Effect Analysis

| Item | Function/Description | Example/Note |

|---|---|---|

| RNA Extraction Kit | Isolate high-quality RNA from biological samples | Consistent kit lot across batches minimizes variation [18] |

| Library Prep Kit | Prepare sequencing libraries; polyA vs. ribo-depletion | Different methods can cause major batch effects [22] |

| Sequencing Platform | Generate raw read data; different instruments/flow cells | Platform-specific technical signatures [19] |

| R/Bioconductor | Statistical programming environment | Foundation for analysis packages [18] [21] |

| sva Package | Contains ComBat-seq for count-based batch correction | Essential for batch effect correction post-diagnosis [18] |

| limma Package | Provides removeBatchEffect function | Normalization and linear model adjustment [18] |

| ggplot2 Package | Create publication-quality PCA visualizations | Customize colors, point shapes, labels [18] [22] |

| PCAtools Package | Streamlined PCA visualization and analysis | Horn's parallel analysis for optimal PC selection [21] |

Computational Requirements and Software Setup

The following protocol utilizes the R programming environment and Bioconductor packages, which are standard for genomic analysis. Begin by installing and loading the packages listed in Table 1 (sva, limma, ggplot2, and PCAtools).

Step-by-Step Protocol for PCA-Based Batch Effect Diagnosis

Step 1: Data Preparation and Preprocessing Load your RNA-seq count data and associated metadata. The metadata must include both the biological conditions and the potential batch variables (e.g., sequencing date, lab technician, reagent lot).

Step 2: Data Transformation and Normalization Raw count data requires normalization before PCA to address differences in library size and distribution. The voom method or variance-stabilizing transformation is recommended.

Step 3: Perform Principal Component Analysis

Execute PCA on the normalized expression matrix. The prcomp() function is commonly used, or the pca() function from the PCAtools package for enhanced functionality.

Step 4: Visualize PCA Results Generate PCA plots colored by both batch and biological condition to diagnose whether batch effects are present.

Step 5: Interpretation and Quantitative Assessment Examine the proportion of variance explained by each principal component, which indicates how much of the total expression variability each component captures. Components with high batch-related variance often require correction before downstream analysis.
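One simple way to quantify "batch-related variance" for a given component is the share of that component's variance explained by batch membership (a one-way ANOVA R²). The sketch below is an illustration in plain Python, not part of the R protocol; the function name `batch_r_squared` and the toy scores are assumptions.

```python
def batch_r_squared(scores, batches):
    """Fraction of a PC's variance explained by batch (one-way ANOVA R^2)."""
    grand = sum(scores) / len(scores)
    total_ss = sum((s - grand) ** 2 for s in scores)
    groups = {}
    for s, b in zip(scores, batches):
        groups.setdefault(b, []).append(s)
    # Between-batch sum of squares: group size times squared mean offset
    between_ss = sum(len(v) * (sum(v) / len(v) - grand) ** 2 for v in groups.values())
    return between_ss / total_ss

# Hypothetical PC scores for four samples from two batches
batches = ["batch1", "batch1", "batch2", "batch2"]
pc1 = [-2.1, -1.9, 2.0, 2.0]   # separates the batches
pc2 = [1.0, -1.0, 1.0, -1.0]   # unrelated to batch
r2_pc1 = batch_r_squared(pc1, batches)
r2_pc2 = batch_r_squared(pc2, batches)
```

A value close to 1 for an early component, as for `pc1` here, suggests that the component is dominated by batch and that correction or covariate adjustment should be considered.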

Results Interpretation and Quantitative Metrics

Qualitative Assessment of PCA Plots

In the generated PCA plots, specific patterns indicate different scenarios. Strong batch effects are evident when samples form distinct clusters based on technical batches rather than biological conditions [18] [22]. For example, all samples from "Batch 1" might cluster separately from "Batch 2" along PC1, while biological conditions are intermixed. Ideal patterns show clear separation by biological condition with batches well-mixed within conditions. Complex scenarios might show both batch and biological effects influencing different principal components, such as batch separation along PC1 and condition separation along PC2 [20].

Quantitative Metrics for Batch Effect Assessment

Table 2: Key Quantitative Metrics for PCA and Batch Effect Evaluation

| Metric | Calculation/Description | Interpretation |

|---|---|---|

| Variance Explained | Percentage of total data variance captured by each PC [20] | Higher values in early PCs indicate stronger patterns (batch or biological) |

| Cluster Separation Metrics | Gamma, Dunn1, WbRatio based on PCA coordinates [19] | Evaluate distinctness of batch vs. condition clusters |

| Design Bias | Correlation between quality scores (Plow) and sample groups [19] | Values >0.4 suggest potential technical confounds |

| Kruskal-Wallis Test | Non-parametric test for quality score differences between batches [19] | p-value <0.05 indicates significant batch-quality association |

Advanced Applications and Integration with Correction Methods

PCA in the Broader Context of Batch Effect Management

PCA visualization represents just the initial diagnostic phase within a comprehensive batch effect management strategy. Following detection, researchers can employ various correction methods, such as ComBat-seq (specifically designed for RNA-seq count data) [18] [2], limma's removeBatchEffect (for normalized expression data) [18], or mixed linear models (for complex experimental designs) [18]. After applying these methods, PCA should be repeated to verify that the batch effect has been removed while the biological signal has been preserved [18] [22].

Special Considerations for Single-Cell RNA-seq

While this protocol focuses on bulk RNA-seq, single-cell RNA-seq (scRNA-seq) presents additional challenges for batch effect diagnosis, including extreme sparsity (approximately 80% dropout rate) and greater technical variability [23]. For scRNA-seq data, PCA remains valuable for initial exploration, but researchers often complement it with t-SNE or UMAP visualizations and specialized metrics like kBET or graph iLISI for more robust batch effect assessment in high-dimensional single-cell data [23].

Visualizing the Experimental Workflow

The following diagram illustrates the complete workflow for PCA-based batch effect diagnosis and correction in RNA-seq studies:

Workflow for PCA-based batch effect diagnosis and correction in RNA-seq data.

PCA remains an indispensable first-line diagnostic tool for detecting batch effects in RNA-seq data analysis. The protocol outlined here provides researchers and drug development professionals with a standardized approach to visualize technical artifacts, interpret patterns, and make informed decisions about subsequent correction strategies. By integrating PCA-based diagnosis into routine RNA-seq workflows, scientists can enhance the reliability of their differential expression results, ensure valid clustering outcomes, and ultimately produce more robust and reproducible transcriptomic findings. As sequencing technologies evolve and multi-omics integration becomes more prevalent, PCA will continue to serve as a fundamental quality control measure in high-throughput genomic research.

A Practical Toolkit: Implementing Major Batch Correction Algorithms

In high-throughput RNA sequencing (RNA-Seq) experiments, technical variation is an unavoidable challenge that can confound biological interpretation. Batch effects are systematic technical variations arising from differences in experimental conditions such as sequencing runs, reagent lots, personnel, or instrumentation [26] [9]. These effects manifest as shifts in gene expression profiles that are unrelated to the biological phenomena under investigation. Without proper handling, batch effects can lead to false positives in differential expression analysis, misclassification of cell types in single-cell studies, and reduced statistical power [27] [28].

The terms "normalization" and "batch effect correction" are often used interchangeably but address distinct aspects of technical variation. Normalization primarily adjusts for cell- or sample-specific technical biases such as differences in sequencing depth (total number of reads or unique molecular identifiers per cell) and RNA capture efficiency [28] [29]. It operates under the assumption that any technical bias affects all genes similarly within a sample. In contrast, explicit batch effect correction specifically targets systematic differences between groups of samples processed in different batches, requiring prior knowledge (or discovery) of batch variables [26] [27]. Understanding when and how to apply these complementary approaches is crucial for generating biologically meaningful results from RNA-Seq data.

Normalization: Foundation of Data Preprocessing

Core Principles and Methods

Normalization serves as the critical first step in RNA-Seq data preprocessing, aiming to make expression measurements comparable across samples by removing technical artifacts. The fundamental assumption underlying most normalization methods is that the majority of genes are not differentially expressed across samples [29]. Methods vary in their computational approaches and underlying assumptions about the data structure.

Library size normalization methods, such as Counts Per Million (CPM) and Trimmed Mean of M-values (TMM), scale raw counts by sample-specific factors to account for differences in sequencing depth [29]. The CPM approach divides each count by the total number of counts for that sample and multiplies the result by one million, providing a simple readout of relative abundance. TMM normalization, implemented in the edgeR package, goes further by calculating a weighted trimmed mean of the log expression ratios between samples, making it robust to outliers and highly differentially expressed genes [29]. For bulk RNA-seq data, the Transcripts Per Million (TPM) metric extends this concept by additionally accounting for gene length, enabling more appropriate cross-gene comparisons within samples.
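The arithmetic behind CPM and TPM is short enough to write out directly. The following sketch uses plain Python with hypothetical counts and gene lengths; it illustrates the formulas only and is not a replacement for the edgeR functions used in practice.

```python
def cpm(counts):
    """Counts per million: each count divided by the sample total, times 1e6."""
    total = sum(counts.values())
    return {g: c / total * 1e6 for g, c in counts.items()}

def tpm(counts, lengths_kb):
    """Transcripts per million: length-normalize first, then scale to 1e6."""
    rate = {g: counts[g] / lengths_kb[g] for g in counts}  # reads per kilobase
    scale = sum(rate.values())
    return {g: r / scale * 1e6 for g, r in rate.items()}

sample = {"GeneA": 500, "GeneB": 1500, "GeneC": 8000}    # hypothetical raw counts
lengths_kb = {"GeneA": 1.0, "GeneB": 3.0, "GeneC": 4.0}  # hypothetical gene lengths (kb)

sample_cpm = cpm(sample)
sample_tpm = tpm(sample, lengths_kb)
```

GeneB has three times the raw count of GeneA but is also three times longer, so the two genes receive the same TPM while their CPM values differ by a factor of three. This is why TPM is better suited to comparing genes within a sample.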

Advanced Normalization Techniques

More sophisticated normalization approaches have been developed to address specific challenges in different RNA-Seq modalities. For single-cell RNA-seq (scRNA-seq) data, methods like SCTransform model gene expression using regularized negative binomial regression, simultaneously accounting for sequencing depth and technical covariates while stabilizing variance [28]. Scran's pooling-based normalization employs a deconvolution strategy that estimates size factors by pooling cells with similar expression profiles, making it particularly effective for heterogeneous datasets containing diverse cell types [28].

The Centered Log Ratio (CLR) normalization is especially valuable for multi-modal datasets such as CITE-seq, where it transforms antibody-derived tags (ADTs) by log-transforming the ratio of each feature's expression to the geometric mean across all features in a cell [28]. This approach effectively handles the compositional nature of such data but requires pseudocount addition to manage zero values.
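A generic CLR transform for a single cell can be sketched as follows. The pseudocount of 1 and the marker names are assumptions for illustration, and specific packages may implement CLR with slightly different conventions (for example, a different pseudocount or normalizing across cells rather than features).

```python
import math

def clr(adt_counts, pseudocount=1.0):
    """Centered log ratio for one cell.

    Equivalent to log((x + pseudocount) / geometric_mean(x + pseudocount)).
    """
    logs = {f: math.log(c + pseudocount) for f, c in adt_counts.items()}
    mean_log = sum(logs.values()) / len(logs)  # log of the geometric mean
    return {f: v - mean_log for f, v in logs.items()}

cell = {"CD3": 99, "CD4": 9, "CD8": 0}  # hypothetical ADT counts for one cell
cell_clr = clr(cell)
```

The transformed values sum to zero within the cell, which reflects the compositional nature of the data. The zero count for CD8 is handled by the pseudocount instead of producing an undefined logarithm.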

Explicit Batch Effect Correction: When and How

Methodological Landscape

Explicit batch effect correction becomes necessary when samples processed in different batches exhibit systematic technical differences that could confound biological signals. These algorithms require either known batch variables or strategies to discover latent batch factors. The methodological approaches vary considerably in their underlying assumptions and correction strategies.

Empirical Bayes methods like ComBat and ComBat-seq use parametric empirical Bayes frameworks to adjust for batch effects, assuming these effects follow a parametric distribution across genes [6] [30]. ComBat operates on normalized continuous data, while ComBat-seq works directly with raw count matrices using a negative binomial model [30]. Mutual Nearest Neighbors (MNN)-based methods, implemented in tools like Seurat, identify pairs of cells across batches that are mutual nearest neighbors in expression space, then estimate and remove the batch effect [30] [9]. Iterative clustering approaches such as Harmony perform batch correction by integrating datasets through an iterative process of clustering and correction in low-dimensional embedding spaces like PCA [6] [30] [28].

Deep learning methods including SCVI (single-cell variational inference) employ variational autoencoders to learn low-dimensional embeddings that explicitly model batch effects, from which corrected count matrices can be imputed [30]. Graph-based correction approaches like BBKNN (Batch Balanced K-Nearest Neighbors) modify the k-NN graph construction to create connections between similar cells across batches without altering the underlying count matrix [30] [28].
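The mutual-nearest-neighbor idea described above can be illustrated with a small sketch. It finds cross-batch pairs of cells that are in each other's k nearest neighbors and then averages the pair differences into a single shift vector. This is a deliberate simplification: published MNN methods use locally weighted correction vectors and additional normalization, and the function name and toy coordinates are hypothetical.

```python
import math

def mutual_nearest_neighbors(batch_a, batch_b, k=1):
    """Return index pairs (i, j) where a_i and b_j are in each other's k nearest neighbors."""
    def knn(query, reference):
        order = sorted(range(len(reference)),
                       key=lambda j: math.dist(query, reference[j]))
        return set(order[:k])

    a_to_b = [knn(a, batch_b) for a in batch_a]
    b_to_a = [knn(b, batch_a) for b in batch_b]
    return [(i, j) for i in range(len(batch_a))
            for j in sorted(a_to_b[i]) if i in b_to_a[j]]

# Hypothetical 2-D expression profiles. Batch B is batch A shifted by (+1, +1);
# the third cell in batch A belongs to a cell type absent from batch B.
batch_a = [(0, 0), (5, 5), (9, 0)]
batch_b = [(1, 1), (6, 6)]

pairs = mutual_nearest_neighbors(batch_a, batch_b)
# Global batch shift estimated as the mean difference over MNN pairs
shift = [sum(batch_b[j][d] - batch_a[i][d] for i, j in pairs) / len(pairs)
         for d in range(2)]
```

The cell type present in only one batch does not form a mutual pair, so it does not distort the estimated shift. This is the property that makes MNN-based approaches tolerant of differences in cell type composition between batches.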

Comparative Performance of Batch Correction Methods

Recent systematic evaluations have revealed significant differences in performance among batch correction methods. A comprehensive 2025 assessment of eight widely used scRNA-seq batch correction methods found that most introduce measurable artifacts during the correction process [6] [30]. The study demonstrated that MNN, SCVI, and LIGER often perform poorly, substantially altering the data, while ComBat, ComBat-seq, BBKNN, and Seurat introduce detectable artifacts [30]. Harmony emerged as the only method consistently performing well across all tests, making it the currently recommended approach for scRNA-seq data [6] [30].

For differential expression analysis with known batch variables, incorporating batch as a covariate in a regression model (e.g., in DESeq2 or edgeR) generally outperforms approaches using a pre-corrected matrix [31]. When dealing with unknown/latent batch variables, surrogate variable analysis (SVA) methods generally control false discovery rates well while maintaining good power, particularly with small group effects [31].
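The benefit of modeling batch as a covariate can be seen in a toy linear model for one gene. The sketch below fits ordinary least squares by solving the normal equations in plain Python. It is an assumption-laden illustration on noise-free, log-scale values, whereas DESeq2 and edgeR fit negative binomial generalized linear models. All names and numbers are hypothetical.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def ols(design, y):
    """Least-squares coefficients via the normal equations (X'X) beta = X'y."""
    p = len(design[0])
    xtx = [[sum(row[a] * row[b] for row in design) for b in range(p)] for a in range(p)]
    xty = [sum(row[a] * yi for row, yi in zip(design, y)) for a in range(p)]
    return solve(xtx, xty)

# One gene, six samples: baseline 5, condition effect +2, batch effect +3.
# The design is unbalanced: treated samples are over-represented in batch 1.
condition = [0, 0, 0, 1, 1, 1]
batch = [0, 0, 1, 0, 1, 1]
y = [5 + 2 * c + 3 * b for c, b in zip(condition, batch)]

treated = [v for v, c in zip(y, condition) if c == 1]
control = [v for v, c in zip(y, condition) if c == 0]
naive_effect = sum(treated) / len(treated) - sum(control) / len(control)

design = [[1, c, b] for c, b in zip(condition, batch)]  # intercept, condition, batch
intercept, cond_effect, batch_effect = ols(design, y)
```

The naive difference in means absorbs part of the batch effect and overstates the condition effect (3 instead of 2), whereas the model with a batch column recovers both effects separately.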

Table 1: Comparison of Major Batch Effect Correction Methods

| Method | Input Data | Correction Approach | Strengths | Limitations |

|---|---|---|---|---|

| Harmony [6] [30] [28] | Normalized count matrix | Iterative clustering in low-dimensional space | Fast, scalable, preserves biological variation | Limited native visualization tools |

| ComBat/ComBat-seq [6] [30] | Normalized counts (ComBat) or raw counts (ComBat-seq) | Empirical Bayes framework | Established, widely used | Can introduce artifacts; performance varies |

| Seurat Integration [30] [28] [9] | Normalized count matrix | CCA and MNN correction | High biological fidelity, comprehensive workflow | Computationally intensive for large datasets |

| BBKNN [30] [28] | k-NN graph | Graph-based batch balancing | Computationally efficient, lightweight | Less effective for non-linear batch effects |

| SCVI [30] [28] | Raw count matrix | Variational autoencoder | Handles complex, non-linear batch effects | Requires GPU, deep learning expertise |

Practical Protocols and Implementation

Bulk RNA-Seq Normalization and Batch Correction Protocol

For bulk RNA-Seq analysis, the following protocol outlines a robust workflow for normalization and batch correction using established R packages:

Step 1: Data Input and Preprocessing

Step 2: Normalization

Step 3: Batch Effect Correction with Known Batches

Step 4: Differential Expression Analysis

Single-Cell RNA-Seq Normalization and Integration Protocol

For scRNA-seq data, the following protocol demonstrates a complete workflow using the Seurat package with Harmony integration:

Step 1: Data Preprocessing and Normalization

Step 2: Feature Selection and Scaling

Step 3: Dimensionality Reduction and Integration

Decision Framework: Normalization, Correction, or Both?

Strategic Selection Guide

Choosing the appropriate approach for handling technical variation depends on multiple factors including experimental design, data structure, and research objectives. The following decision framework provides guidance for selecting the optimal strategy:

Table 2: Decision Framework for Handling Technical Variation

| Scenario | Recommended Approach | Rationale | Implementation Example |

|---|---|---|---|

| Single batch, uniform samples | Normalization only | No cross-batch variation to correct | TMM for bulk RNA-seq; LogNormalize for scRNA-seq |

| Multiple batches with balanced design | Normalization + explicit batch correction | Balanced designs enable robust batch effect estimation | ComBat after TMM normalization (bulk); Harmony integration (single-cell) |

| Strong confounding between batch and condition | Caution in correction; covariate inclusion | Batch correction may remove biological signal | Include batch as covariate in DESeq2/edgeR models |

| Unknown batch effects suspected | Latent factor discovery | Address unrecorded technical variation | SVA for bulk RNA-seq; Harmony with inferred batches |

| Downstream machine learning applications | Conservative correction | Preserve data structure for classification | Harmony or BBKNN that minimally alters original space |

The most critical consideration is the degree of confounding between batch and the biological conditions of interest. When batch and condition are perfectly confounded (e.g., all control samples in one batch and all treatment samples in another), no batch correction method can reliably distinguish technical from biological variation [26], and a batch term in a regression model cannot be estimated separately from the condition term. When confounding is strong but incomplete, the safer approaches are including batch as a covariate in statistical models or using conservative correction methods that minimize overcorrection.

Experimental design plays a crucial role in determining the appropriate analytical strategy. Balanced designs, where each batch contains samples from all experimental conditions, enable more robust batch effect estimation and correction [26]. For strongly confounded designs, where batch and condition are perfectly correlated, batch correction is not recommended as it may remove biological signal of interest [26].
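Before choosing a strategy, it helps to check the sample metadata for confounding. The following sketch classifies a design from its batch and condition labels. The function name and the three category labels are assumptions introduced for this example, and the check covers only a single batch variable and a single condition variable.

```python
from collections import Counter

def design_status(batches, conditions):
    """Classify how batch and condition overlap in the sample metadata."""
    table = Counter(zip(batches, conditions))  # batch-by-condition cross-tabulation
    all_conditions = set(conditions)
    per_batch = {b: {c for (bb, c) in table if bb == b} for b in set(batches)}
    if all(len(cs) == 1 for cs in per_batch.values()):
        # Every batch holds a single condition: effects cannot be separated
        return "perfectly confounded"
    if all(cs == all_conditions for cs in per_batch.values()):
        # Every batch contains every condition
        return "fully crossed"
    return "partially confounded"

confounded = design_status(["b1", "b1", "b2", "b2"], ["ctrl", "ctrl", "trt", "trt"])
crossed = design_status(["b1", "b1", "b2", "b2"], ["ctrl", "trt", "ctrl", "trt"])
partial = design_status(["b1", "b1", "b2", "b2"], ["ctrl", "trt", "ctrl", "ctrl"])
```

A "fully crossed" result with equal group sizes corresponds to the balanced designs described above. A "perfectly confounded" result indicates that the problem needs to be addressed at the design stage instead of through computational correction.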

Table 3: Essential Research Reagents and Computational Tools

| Item | Function | Application Context |

|---|---|---|

| Spike-in RNA controls (e.g., SIRVs) | Technical controls for normalization | Assess dynamic range, sensitivity, and reproducibility in large-scale experiments [32] |

| UMI barcodes | Molecular tagging to correct PCR amplification bias | scRNA-seq protocols to distinguish biological from technical variation [28] |

| edgeR | Statistical analysis of digital gene expression data | Bulk RNA-seq normalization (TMM) and differential expression [29] |

| DESeq2 | Differential expression analysis based on negative binomial distribution | Bulk RNA-seq analysis with built-in normalization and batch covariate inclusion [33] |

| Harmony | Fast, scalable integration of single-cell data | scRNA-seq batch correction in low-dimensional embedding space [6] [30] [28] |

| Seurat | Comprehensive toolkit for single-cell genomics | scRNA-seq normalization, integration, and downstream analysis [28] [9] |

| Scanpy | Python-based single-cell analysis infrastructure | scRNA-seq analysis with BBKNN for efficient batch correction [28] |

Workflow Visualization

Diagram Title: RNA-Seq Normalization and Batch Correction Decision Workflow

Normalization and explicit batch correction address complementary aspects of technical variation in RNA-Seq data analysis. While normalization is an essential first step that adjusts for sample-specific biases, batch correction specifically targets systematic differences between experimental batches. The optimal approach depends critically on experimental design, particularly the degree of confounding between batch and biological variables. Recent evaluations suggest that Harmony currently outperforms other methods for single-cell data integration, while including batch as a covariate in regression models often works well for bulk RNA-seq differential expression analysis. Regardless of the method chosen, careful experimental design with balanced batches remains the most effective strategy for minimizing the confounding effects of technical variation.

In high-throughput RNA sequencing (RNA-seq) experiments, batch effects represent a significant technical challenge, introducing non-biological variation that can compromise data integrity and lead to spurious scientific conclusions. These systematic biases arise from various technical sources, including different sequencing runs or instruments, variations in reagent lots, changes in sample preparation protocols, different personnel handling samples, and environmental conditions such as temperature and humidity [18]. When not properly accounted for, these effects can cause clustering algorithms to group samples by batch rather than biological similarity and can lead to the false identification of differentially expressed genes that actually differ between batches rather than biological conditions [18].

The Empirical Bayes framework provides a powerful statistical approach for addressing these technical artifacts, particularly when dealing with the limited sample sizes common in sequencing experiments. This methodology leverages information across all features (genes) in a dataset to improve parameter estimation for individual features, making it especially valuable for stabilizing estimates when per-feature data is sparse. The ComBat method (named for "Combating Batch Effects When Combining Batches of Gene Expression Microarray Data"), initially developed for microarray data, employs this approach by assuming a normal distribution for expression values and using empirical Bayes to estimate batch-effect parameters, which are then removed from the data [34]. RNA-seq count data are overdispersed integers that are typically modeled with a negative binomial distribution rather than a normal distribution, so ComBat-Seq was developed as an extension that maintains the count-based nature of the data while applying similar empirical Bayes principles for batch effect correction [34].
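The idea of borrowing information across genes can be illustrated with a simplified normal-normal shrinkage of per-gene batch-effect estimates. This sketch is not the ComBat estimator as implemented in sva: it covers only the additive (location) effect for one batch, uses the observed spread of the estimates as the prior variance, and all names and numbers are assumptions.

```python
def shrink_batch_means(gene_batch_means, n_samples, within_var):
    """Shrink per-gene batch-effect estimates toward their across-gene mean.

    gene_batch_means: per-gene estimate of the additive batch effect
    n_samples: number of samples in the batch
    within_var: per-gene residual variance within the batch
    """
    g = len(gene_batch_means)
    prior_mean = sum(gene_batch_means) / g
    prior_var = sum((m - prior_mean) ** 2 for m in gene_batch_means) / (g - 1)
    shrunk = []
    for m, s2 in zip(gene_batch_means, within_var):
        # Weight on the gene's own estimate: high when the gene is measured precisely
        w = n_samples * prior_var / (n_samples * prior_var + s2)
        shrunk.append(w * m + (1 - w) * prior_mean)
    return shrunk

estimates = [0.5, 1.5, 4.0]  # hypothetical per-gene batch effects
shrunk_equal = shrink_batch_means(estimates, n_samples=3, within_var=[1.0, 1.0, 1.0])
shrunk_noisy = shrink_batch_means(estimates, n_samples=3, within_var=[1.0, 1.0, 10.0])
```

Every estimate moves toward the across-gene mean of 2.0, and the third gene moves further when its within-batch variance is larger. This behavior is what stabilizes batch-effect estimates when only a few samples per batch are available.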

Comparative Analysis of Batch Effect Correction Methods

The landscape of batch effect correction methods has expanded considerably, with ComBat and ComBat-Seq occupying distinct positions within this ecosystem. Recent evaluations have systematically compared these methods, particularly in the context of single-cell RNA sequencing (scRNA-seq) data. A 2025 comparative study examined eight widely used batch correction methods, including ComBat and ComBat-Seq, using a novel approach to measure the degree to which these methods alter data during the correction process [6] [30]. The findings revealed that many published methods are poorly calibrated, creating measurable artifacts during correction. Specifically, this evaluation found that ComBat, ComBat-seq, BBKNN, and Seurat introduce artifacts detectable through their testing methodology, while Harmony was the only method that consistently performed well across all tests [30].

The performance differences between methods can be attributed to their underlying statistical approaches and the specific data structures they target. ComBat-Seq specifically addresses the characteristics of RNA-seq count data by utilizing a negative binomial regression framework, which better reflects the statistical behavior of raw count data than normal distribution-based approaches do [34]. This methodological distinction makes ComBat-Seq particularly suitable for sequencing count data, as it preserves the integer nature of counts during correction, unlike standard ComBat, which operates on continuous transformed data [34].

Computational Implementations and Performance

Recent developments have expanded the computational accessibility of these methods through multiple implementations. The pyComBat implementation, introduced in 2023, provides a Python tool for batch effect correction in high-throughput molecular data using empirical Bayes methods, offering similar correction power to the original R implementations with improved computational efficiency [34]. Benchmarking tests demonstrate that the parametric version of pyComBat performs 4-5 times faster than the original R implementation of ComBat, and approximately 1.5 times faster than the Scanpy implementation of ComBat [34]. For the non-parametric version, which is inherently more computationally intensive, pyComBat maintains this 4-5 times speed advantage, reducing computation time from over an hour to approximately 15 minutes for typical datasets [34].

Table 1: Comparison of ComBat and ComBat-Seq Characteristics

| Characteristic | ComBat | ComBat-Seq |

|---|---|---|

| Data Type | Microarray/continuous data | RNA-seq count data |

| Distribution Assumption | Normal distribution | Negative binomial distribution |

| Input Data | Normalized count matrix | Raw count matrix |

| Correction Object | Count matrix | Count matrix |

| Implementation | R (sva), Python (pyComBat, Scanpy) | R (sva), Python (pyComBat) |

| Key Reference | Johnson et al., 2007 [34] | Zhang et al., 2020 [30] |

Detailed Experimental Protocols

ComBat-Seq Protocol for Bulk RNA-seq Data

The following protocol provides a step-by-step methodology for implementing ComBat-Seq correction in bulk RNA-seq analysis, using R and the sva package.

Environment Setup and Data Preparation

Begin by installing and loading the required R packages, then import your raw count data and filter out low-count genes.

This initial setup ensures the proper computational environment and prepares the count data for downstream analysis. The filtering step is crucial as low-count genes can introduce substantial noise in batch effect correction [18].

Batch Effect Correction with ComBat-Seq

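Apply the ComBat-Seq algorithm (the ComBat_seq function in the sva package) directly to the filtered count matrix, supplying the batch labels and, where available, the biological group labels.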

This implementation utilizes the core ComBat-Seq function, which applies a negative binomial regression framework to estimate and remove batch effects while preserving biological signals of interest [34].

Alternative Workflow: Covariate Adjustment in Differential Expression

For comparison, batch information can instead be incorporated directly into the differential expression analysis with edgeR and limma by adding batch to the design matrix, as an alternative to pre-correction with ComBat-Seq.

This approach maintains the original count structure while statistically accounting for batch effects during differential expression testing, which some studies suggest may be preferable to pre-correction methods in certain experimental designs [18].

Workflow Visualization and Conceptual Framework

ComBat-Seq Algorithm Workflow

The following diagram illustrates the core computational workflow of the ComBat-Seq algorithm for batch effect correction:

ComBat-Seq Algorithm Workflow

The ComBat-Seq workflow begins with the raw count matrix and proceeds through model specification using the negative binomial distribution, empirical Bayes parameter estimation that borrows information across genes, and finally batch effect adjustment to produce the corrected count matrix suitable for downstream analyses [34].

Batch Effect Correction Decision Framework

Researchers must select appropriate batch correction strategies based on their data characteristics and research goals. The following decision framework guides method selection:

[Figure: Batch Correction Decision Framework]

This decision framework highlights the position of ComBat-Seq within the broader ecosystem of batch effect correction methods, emphasizing its specific applicability to raw count data from bulk RNA-seq experiments where batch information is known [18] [34].

Table 2: Essential Computational Tools for Batch Effect Correction

| Tool/Package | Implementation | Primary Function | Application Context |
|---|---|---|---|
| sva | R | Contains ComBat and ComBat-Seq functions | Bulk RNA-seq, microarray data |
| pyComBat | Python | Python implementation of ComBat/ComBat-Seq | High-throughput molecular data |
| edgeR | R | Differential expression analysis with normalization | RNA-seq count data |
| limma | R | Linear models for microarray and RNA-seq data | Differential expression with batch correction |
| inmoose | Python | Python package containing pyComBat | Batch effect correction in Python workflows |

The sva package in R represents the original implementation of both ComBat and ComBat-Seq algorithms and remains the most widely used tool for applying these methods [34]. For researchers working primarily in Python, the pyComBat implementation offers equivalent functionality with improved computational efficiency, serving as the sole Python implementation of ComBat-Seq currently available [34]. The edgeR and limma packages provide complementary functionality for normalization and differential expression analysis that can be integrated with batch correction approaches, either through pre-correction or by including batch as a covariate in linear models [18] [29].

When choosing among these tools, note that recent comparative evidence indicates Harmony may outperform ComBat and ComBat-Seq in scRNA-seq applications, particularly in minimizing the artifacts introduced during correction [30]. However, ComBat-Seq remains a robust choice for bulk RNA-seq count data where preserving the integer nature of counts is prioritized.

Harmony is a computationally efficient algorithm for integrating single-cell RNA sequencing (scRNA-seq) datasets to address the critical challenge of batch effects. Batch effects are systematic technical discrepancies arising from differences in experimental conditions, protocols, or sequencing platforms that can obscure true biological variation [35] [30]. By projecting cells into a shared embedding where they group by cell type rather than dataset-specific conditions, Harmony enables accurate joint analysis across multiple studies and biological contexts [36]. Its robust performance and scalability make it particularly valuable for large-scale atlas-level integration, facilitating the identification of cell types and states across diverse clinical and biological conditions [36] [30].

The ability to profile thousands of individual cells through scRNA-seq has revolutionized the study of cellular heterogeneity. However, integrating datasets from different sources remains challenging due to batch effects—systematic technical variations that confound biological signals [35]. These effects can arise from differences in sample preparation, sequencing protocols, platforms, or laboratory conditions [12]. Without proper correction, batch effects can lead to misleading conclusions in downstream analyses, including cell type identification, differential expression, and trajectory inference [30].

Several computational methods have been developed to address batch effects in scRNA-seq data, including anchor-based approaches (e.g., Seurat, MNN Correct, Scanorama), clustering-based methods (e.g., Harmony, LIGER), and deep learning techniques (e.g., SCVI) [35] [30]. A recent comprehensive evaluation demonstrated that Harmony consistently performs well across multiple testing methodologies and is the only method recommended for batch correction of scRNA-seq data due to its calibrated performance and minimal introduction of artifacts [30].

Algorithmic Principles of Harmony

Harmony operates through an iterative process that combines soft clustering and dataset-specific correction to align multiple datasets in a low-dimensional embedding. The algorithm begins with a low-dimensional representation of cells, typically from Principal Component Analysis (PCA), that meets key criteria for downstream processing [36].

Core Computational Workflow

The Harmony algorithm proceeds through four iterative steps until convergence:

1. Clustering: Cells are grouped into multi-dataset clusters using a soft k-means clustering approach that favors clusters containing cells from multiple datasets. This soft clustering accounts for smooth transitions between cell states and avoids local minima [36].

2. Centroid Calculation: For each cluster, dataset-specific centroids are computed, representing the average position of cells from each dataset within that cluster [36] [37].

3. Correction Factor Estimation: Cluster-specific linear correction factors are computed based on the displacement between dataset-specific centroids and the overall cluster centroid [36].

4. Cell-Specific Correction: Each cell receives a cluster-weighted average of these correction terms and is adjusted by its cell-specific linear factor [36].
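The four steps above can be sketched as a single simplified correction round in plain Python. This is a conceptual illustration under stated assumptions, not the Harmony implementation: cluster centroids are supplied rather than learned, soft memberships come from a plain softmax over squared distances with an assumed bandwidth `sigma`, the preference for multi-dataset clusters is omitted, and all function names and toy coordinates are hypothetical.

```python
import math


def soft_assign(cell, centroids, sigma=1.0):
    """Soft cluster memberships: softmax over negative squared distances."""
    scores = [-sum((c - m) ** 2 for c, m in zip(cell, mu)) / sigma
              for mu in centroids]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]


def weighted_mean(points, weights):
    """Weighted average position of a set of points."""
    total = sum(weights)
    dim = len(points[0])
    return [sum(w * p[d] for p, w in zip(points, weights)) / total
            for d in range(dim)]


def correction_round(cells, datasets, centroids, sigma=1.0):
    """One simplified round: soft cluster, compute centroids, shift cells."""
    resp = [soft_assign(cell, centroids, sigma) for cell in cells]
    dim = len(cells[0])
    corrected = [list(cell) for cell in cells]
    for k in range(len(centroids)):
        overall = weighted_mean(cells, [r[k] for r in resp])
        for label in sorted(set(datasets)):
            w_ds = [r[k] if d == label else 0.0 for r, d in zip(resp, datasets)]
            if sum(w_ds) == 0.0:
                continue
            # Displacement of this dataset's centroid from the cluster centroid.
            ds_centroid = weighted_mean(cells, w_ds)
            shift = [a - b for a, b in zip(ds_centroid, overall)]
            # Each cell is moved by its membership-weighted share of the shift.
            for i, d in enumerate(datasets):
                if d == label:
                    for j in range(dim):
                        corrected[i][j] -= resp[i][k] * shift[j]
    return corrected


# Hypothetical embedding: two cell populations, dataset B offset by +1 on axis 0.
cells = [[0.0, 0.0], [0.2, 0.0], [10.0, 0.0], [10.2, 0.0],
         [1.0, 0.0], [1.2, 0.0], [11.0, 0.0], [11.2, 0.0]]
datasets = ["A"] * 4 + ["B"] * 4
centroids = [[0.6, 0.0], [10.6, 0.0]]


def dataset_gap(points):
    """Difference between the mean axis-0 position of dataset B and dataset A."""
    a = [p[0] for p, d in zip(points, datasets) if d == "A"]
    b = [p[0] for p, d in zip(points, datasets) if d == "B"]
    return sum(b) / len(b) - sum(a) / len(a)


corrected = correction_round(cells, datasets, centroids)
print("gap before:", dataset_gap(cells))
print("gap after:", dataset_gap(corrected))
```

In this toy case the offset between datasets is removed within each cluster while the two populations stay about ten units apart, which mirrors the stated goal of grouping cells by type rather than by dataset.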

A key innovation in Harmony is the use of cosine distance for clustering, achieved by L₂ normalization of cells in the embedding space. This normalization scales each cell to have unit length, inducing cosine distance, which is more robust for measuring cell-to-cell similarity in high-dimensional space [37].
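The link between L₂ normalization and cosine distance can be checked numerically: for unit-length vectors, the squared Euclidean distance equals 2 × (1 − cosine similarity), so clustering normalized cells by Euclidean distance ranks neighbors the same way cosine distance does. The short Python sketch below verifies this identity on two toy vectors; the function names and values are illustrative only.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def squared_euclidean(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


# Two toy cells in a two-dimensional embedding.
cell_a = [3.0, 4.0]
cell_b = [4.0, 3.0]

cos_sim = cosine_similarity(cell_a, cell_b)
sq_dist = squared_euclidean(l2_normalize(cell_a), l2_normalize(cell_b))

print("cosine similarity:", round(cos_sim, 6))
print("squared distance after normalization:", round(sq_dist, 6))
print("2 * (1 - cosine similarity):", round(2 * (1 - cos_sim), 6))
```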

Table 1: Key Parameters in the Harmony Algorithm

| Parameter | Description | Default/Range |
|---|---|---|
| theta | Diversity enforcement parameter controlling strength of integration | Typically 1-2 |
| nclust (K) | Number of clusters in Harmony model | User-defined (default ~5-30) |
| max.iter.harmony | Maximum number of iterations to run | Until convergence (default ~10-20) |
| vars_use | Variables to integrate out (e.g., dataset, batch) | User-specified |

Performance Evaluation and Comparative Analysis

Quantitative Performance Metrics

Harmony's performance can be quantitatively assessed using several metrics designed to evaluate both integration effectiveness (mixing of batches) and biological conservation (separation of cell types):

- Local Inverse Simpson's Index (LISI): Measures the effective number of datasets or cell types in a local neighborhood. Integration LISI (iLISI) quantifies batch mixing, while cell-type LISI (cLISI) assesses cell type separation [36] [35].

- Adjusted Rand Index (ARI): Evaluates clustering accuracy against known cell type labels [35].

- Average Silhouette Width (ASW): Measures cluster compactness and separation for both batch labels (ASWbatch) and cell types (ASWcelltype) [35].

- kBET: Tests whether batch labels are randomly distributed in local neighborhoods [35].
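The intuition behind LISI can be shown with a simplified sketch: compute the inverse Simpson's index of the batch labels among each cell's nearest neighbors. The version below weights the k nearest neighbors uniformly, which is an assumption made for brevity (the published metric weights neighbors by distance), and the function names and toy coordinates are hypothetical.

```python
from collections import Counter


def inverse_simpson(labels):
    """Effective number of distinct labels: 1 / sum of squared proportions."""
    n = len(labels)
    return 1.0 / sum((count / n) ** 2 for count in Counter(labels).values())


def knn_indices(points, i, k):
    """Indices of the k nearest neighbors of point i, excluding itself."""
    dists = sorted(
        (sum((a - b) ** 2 for a, b in zip(points[i], p)), j)
        for j, p in enumerate(points) if j != i
    )
    return [j for _, j in dists[:k]]


def local_inverse_simpson(points, labels, k):
    """Uniform-weight simplification of LISI, one score per cell."""
    return [
        inverse_simpson([labels[j] for j in knn_indices(points, i, k)])
        for i in range(len(points))
    ]


# Poorly mixed toy embedding: each batch forms its own tight group.
separated = [[0.0, 0.0], [0.0, 0.1], [0.1, 0.0],
             [10.0, 10.0], [10.0, 10.1], [10.1, 10.0]]
separated_batches = ["A", "A", "A", "B", "B", "B"]
separated_scores = local_inverse_simpson(separated, separated_batches, k=2)

# Well mixed toy embedding: both batches occupy the same small region.
mixed = [[0.0, 0.0], [0.0, 0.1], [0.1, 0.0], [0.1, 0.1]]
mixed_batches = ["A", "B", "B", "A"]
mixed_scores = local_inverse_simpson(mixed, mixed_batches, k=3)

print("separated:", separated_scores)
print("mixed:", mixed_scores)
```

Applied to batch labels, scores near 1 indicate neighborhoods dominated by a single batch, and scores approaching the number of batches indicate good mixing. Applied to cell-type labels, the same calculation should stay near 1 when cell types remain well separated.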

Comparative Performance

In systematic evaluations, Harmony has demonstrated superior performance compared to other batch correction methods:

Table 2: Performance Comparison of Batch Correction Methods

| Method | Integration Score (iLISI) | Accuracy (cLISI) | Runtime (minutes) | Memory (GB) |
|---|---|---|---|---|
| Harmony | 1.96 | 1.00 | 68 | 7.2 |
| MNN Correct | Lower than Harmony | Lower than Harmony | >200 | >30 |
| Seurat | Lower than Harmony | Lower than Harmony | >200 | >30 |
| BBKNN | Lower than Harmony | Lower than Harmony | Comparable for <125k cells | Higher than Harmony |
| Scanorama | Lower than Harmony | Lower than Harmony | Comparable for <125k cells | >30 |

Note: Performance metrics based on analysis of 500,000 cells from HCA data [36]. Integration and accuracy scores are relative comparisons with higher iLISI and lower cLISI indicating better performance.

A recent comprehensive analysis found that Harmony was the only method that consistently performed well across all evaluation criteria and did not introduce measurable artifacts during the correction process [30]. Methods including MNN, SCVI, LIGER, ComBat, and Seurat were found to alter the data considerably in ways that could affect downstream analysis [30].

Experimental Protocols

Standard Harmony Integration Workflow

[Figure: Harmony Computational Workflow]

Detailed Protocol for PBMC Dataset Integration

Objective: Integrate three PBMC datasets assayed with different 10X Chromium protocols (3-prime end v1, 3-prime end v2, and 5-prime end chemistries) to identify shared cell populations across protocols.

Materials and Reagents:

- Datasets: scRNA-seq data from PBMCs generated using different 10X protocols

- Software: R environment with required packages

- Computational Resources: Standard personal computer sufficient for datasets of ~50,000 cells

Procedure:

Data Preprocessing

- Load count matrices for each dataset

- Perform quality control to remove low-quality cells and genes

- Normalize counts using library size normalization and log transformation

- Identify highly variable genes for downstream analysis

Dimensionality Reduction

- Perform PCA on the concatenated dataset using highly variable genes

- Retain top principal components (typically 20-50) for Harmony integration

Harmony Integration

- Initialize Harmony object with PCA embedding and metadata indicating dataset source

- Run Harmony integration with appropriate parameters

Downstream Analysis

- Use Harmony-corrected embeddings for clustering and visualization

- Perform differential expression analysis to identify marker genes

- Annotate cell types based on canonical markers
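The library size normalization and log transformation listed under Data Preprocessing can be sketched in a few lines of Python. This is an illustration rather than the code used in the protocol: the function name `log_normalize` and the scale factor of 10,000 are assumptions, and the toy counts are invented.

```python
import math


def log_normalize(cell_counts, scale=10_000):
    """Divide by the cell's total count, rescale, then apply log(1 + x).

    `scale` is an assumed constant; it only sets the units of the
    normalized values before the log transform.
    """
    total = sum(cell_counts)
    return [math.log1p(c / total * scale) for c in cell_counts]


# Two hypothetical cells with identical composition but different depth.
shallow_cell = [10, 30, 60]
deep_cell = [20, 60, 120]

shallow_norm = log_normalize(shallow_cell)
deep_norm = log_normalize(deep_cell)

print([round(v, 4) for v in shallow_norm])
print([round(v, 4) for v in deep_norm])
```

Because both cells have the same relative composition, they receive the same normalized profile even though one was sequenced twice as deeply, which is the purpose of library size normalization.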

Troubleshooting Tips:

- If integration appears incomplete, increase the theta parameter to enforce greater diversity

- For large datasets (>100,000 cells), adjust the nclust parameter upward to capture finer structure

- Monitor convergence through iteration history; increase max.iter.harmony if needed

Research Reagent Solutions

Table 3: Essential Computational Tools for Harmony Implementation

| Resource | Type | Function | Availability |
|---|---|---|---|
| Harmony R Package | Software Package | Core algorithm implementation | https://github.com/immunogenomics/harmony |
| Seurat with Harmony | Software Integration | Harmony integration within Seurat workflow | Seurat v3.0+ |
| Single-cell Datasets | Data Resources | PBMC, cell line, and tissue atlases for method validation | 10X Genomics, Human Cell Atlas |
| Presto | Software Package | Efficient differential expression analysis | https://github.com/immunogenomics/presto |
| MUDAN Package | Software Package | Single-cell analysis utilities including clustering | https://github.com/jefworks/MUDAN |

Applications and Case Studies

Cell Line Validation

In a controlled experiment using three datasets from two cell lines (Jurkat and 293T), including one 50:50 mixture dataset, Harmony successfully integrated Jurkat cells from the mixture dataset with cells from the pure Jurkat dataset, and similarly for 293T cells, while maintaining clear separation between cell types. This demonstrated Harmony's ability to achieve both high integration (median iLISI = 1.59) and high accuracy (median cLISI = 1.00) [36].

Pancreatic Islet Cell Meta-analysis

Harmony has been applied to integrate five independent studies of pancreatic islet cells, successfully aligning similar cell types across studies while preserving subtle cell states and transitions. This meta-analysis demonstrated Harmony's utility for combining data from diverse experimental conditions to identify conserved cell populations [36].

Cross-Modality Spatial Integration

Harmony has shown promise in integrating dissociated single-cell data with spatially resolved expression datasets, enabling the mapping of cell types to tissue locations while accounting for technical differences between measurement modalities [36].

Technical Considerations and Limitations

While Harmony demonstrates robust performance across diverse datasets, several technical considerations should be noted:

Input Requirements: Harmony operates on a low-dimensional embedding (typically PCA) rather than raw count data, which means it doesn't directly correct the count matrix itself [30].

Cluster Number Sensitivity: The algorithm's performance can be sensitive to the number of clusters specified, requiring careful parameter tuning for optimal results [36].

Order-Preserving Limitation: Unlike some methods (e.g., ComBat), Harmony's output is an integrated embedding rather than a corrected count matrix, which means it doesn't preserve the original order of gene expression levels [12].

Scalability: Harmony is notably efficient, capable of integrating ~1 million cells on a personal computer, making it accessible without high-performance computing resources [36].

Harmony represents a significant advancement in batch correction methodology, offering researchers a robust, scalable, and accurate tool for integrating diverse scRNA-seq datasets to uncover biologically meaningful patterns across experimental conditions.