Breaking the Detection Barrier: Advanced Strategies for Low-Abundance Proteomics in Biomedical Research

Abstract

Low-abundance proteins are critical regulators in biology and promising biomarkers for disease, yet their analysis is hindered by the immense dynamic range of complex proteomes. This article provides a comprehensive guide for researchers and drug development professionals on overcoming these sensitivity challenges. We explore the foundational principles of proteomic complexity, detail cutting-edge methodological solutions from sample preparation to instrumentation, offer practical troubleshooting and optimization protocols, and present a comparative analysis of validation frameworks and technology platforms. By synthesizing the latest advancements, this resource aims to equip scientists with the knowledge to deepen proteomic coverage and accelerate discoveries in basic biology and clinical translation.

Understanding the Low-Abundance Proteome: Why It Matters and What Makes It Challenging

The Biological Significance of Low-Abundance Proteins in Signaling and Disease

Technical Support Center: Troubleshooting Low-Abundance Proteomics

Frequently Asked Questions (FAQs)

Q: My mass spectrometry analysis of plasma is dominated by albumin and immunoglobulins, masking potential low-abundance biomarkers. What depletion or enrichment strategies can I use?

A: The dominance of high-abundance proteins is a common challenge. The following table summarizes the primary strategies to overcome this:

| Strategy | Mechanism | Key Consideration |

|---|---|---|

| Immunodepletion [1] [2] | Uses antibodies to remove top 7-14 abundant proteins (e.g., albumin, IgG). | Risk of off-target removal of proteins bound to carriers (the "sponge effect") [1]. |

| Proteome Equalization (e.g., ProteoMiner) [3] | Uses a hexapeptide library to normalize protein concentrations; high-abundance proteins saturate their ligands. | Enriches low-abundance proteins by increasing their relative concentration [3]. |

| Preparative Gel Electrophoresis [1] | Physically separates and removes abundant proteins like albumin in the presence of strong ionic detergents (SDS). | Avoids the "sponge effect" by performing separation under denaturing conditions [1]. |
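
The saturation behaviour behind proteome equalization can be illustrated with a toy model. The sketch below assumes every protein sees the same fixed binding capacity on the bead library, which is a deliberate simplification. The abundance values and the `equalize` and `dynamic_range_log10` helpers are hypothetical and serve only to show how capping the captured amount compresses dynamic range.

```python
import math

def equalize(abundances, capacity):
    """Toy model: each protein is captured only up to a fixed ligand capacity."""
    return {name: min(amount, capacity) for name, amount in abundances.items()}

def dynamic_range_log10(abundances):
    """Orders of magnitude between the most and least abundant protein."""
    values = list(abundances.values())
    return math.log10(max(values) / min(values))

# Hypothetical input amounts in arbitrary units (not measured data).
sample = {"albumin": 1e9, "IgG": 1e8, "mid_abundance": 1e4, "cytokine": 1e1}
captured = equalize(sample, capacity=1e3)

print(round(dynamic_range_log10(sample), 1))    # 8.0 orders before capture
print(round(dynamic_range_log10(captured), 1))  # 2.0 orders after capture
```

In this toy case the abundant species are truncated at the capacity while the rare species is captured in full, so the spread shrinks from eight to two orders of magnitude. Real ligand libraries have protein-specific capacities and affinities, so actual compression will differ.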

Q: I am not detecting my low-abundance protein of interest, even after enrichment. What are the potential causes?

A: This can result from several issues in the sample preparation workflow:

- Sample Loss: Low-abundance proteins can be lost during preparation steps. Always monitor critical steps by western blot or Coomassie staining [4].

- Protein Degradation: Ensure you are using protease inhibitor cocktails (EDTA-free recommended) in all buffers during preparation to prevent degradation [4].

- Adsorption to Surfaces: Peptides can adsorb to vial walls and pipette tips. Use "high-recovery" vials, limit sample transfers, and consider "one-pot" preparation methods to minimize surface contact [5].

- Polymer Contamination: Contaminants like polyethylene glycol (PEG) from skin creams or detergents can obscure MS signals. Avoid surfactants in lysis buffers and always wear gloves (though be aware gloves themselves can be a source of polymers) [5].

Q: How can I improve the sensitivity of my mass spectrometry for trace-level proteins?

A: Beyond sample preparation, advances in MS data acquisition can significantly enhance sensitivity.

- Use Zeno Trap-Enabled Scanning Data-Independent Acquisition (ZT Scan DIA): This method uses a continuously scanning quadrupole for precursor isolation and is reported to improve the identification and quantitation of proteins at sub-nanogram sample loads by up to 50% compared to conventional DIA methods [6].

- Optimize Mobile Phase: While TFA improves chromatographic peak shape, it strongly suppresses ionization. Use formic acid in the mobile phase for better sensitivity [5].

- Ensure High Water Purity: Use fresh, MS-grade water for mobile phases and samples. Avoid using water that has been stored for more than a few days and never wash MS glassware with detergent [5].

Experimental Protocols for Key Methodologies

Protocol 1: Depletion of Serum IgG Using a Protein G Column [1]

- Column Equilibration: Equilibrate a protein G column (e.g., HiTrap) with 5 column volumes of binding buffer (20 mM sodium phosphate, pH 7.0) at a flow rate of 1 mL/min.

- Sample Application: Dilute the serum sample 5-fold and load onto the column at 1 mL/min. Collect the flow-through as the IgG-depleted serum.

- Elution: Elute the bound IgG fraction using a low-pH elution buffer.

- Assessment: Analyze the efficiency of depletion using SDS-PAGE and measure protein concentration with a Bradford assay.

Protocol 2: Denaturing Preparative Gel Electrophoresis for Albumin Reduction [1]

- Gel Casting: Prepare a 6 cm separating gel (e.g., a stepwise gel of 15%, 12%, and 10% polyacrylamide layers) topped with a 1 cm 4% stacking gel in a PrepCell apparatus. Seal the bottom with a 5 kDa cut-off dialysis membrane.

- Sample Preparation: Mix up to 15 mg of IgG-depleted serum protein with loading dye and incubate at 62°C for 20 minutes.

- Electrophoresis: Load the sample and run the gel initially at 25 mA for 20 minutes, then increase to 45 mA until completion.

- Fraction Collection: Collect eluted fractions automatically. Use SDS-PAGE and silver staining to identify and pool the albumin-reduced fractions.

- Concentration: Concentrate and buffer-exchange the pooled fractions using a stirred cell with a 5 kDa cut-off membrane.

Quantitative Data on Enrichment Efficacy

The following tables quantify the gains in proteome coverage reported for sensitive acquisition methods and for low-abundance protein enrichment strategies:

Table 1: Gains in Protein Identification with ZT Scan DIA at Low Loadings [6]

| On-Column Loading | Gradient | Gain in Total Protein Groups | Gain in Protein Groups (CV<20%) |

|---|---|---|---|

| 5 ng | 15-min Microflow | >25% | >25% |

| 0.25 ng | 30-min Nanoflow | ~20% | ~50% |

Table 2: Impact of Low-Abundance Enrichment on Blood Proteome Coverage [2]

| Workflow | Typical Proteins Identified | Key Characteristics of Detected Proteins |

|---|---|---|

| Without Enrichment | A few hundred | Dominated by high-abundance proteins (e.g., complement, coagulation factors). |

| With Optimized Enrichment | 3,000 - 5,000 | Includes cytokines, tissue-specific markers, and signaling molecules; reveals broader biological processes (e.g., inflammation, cell communication). |

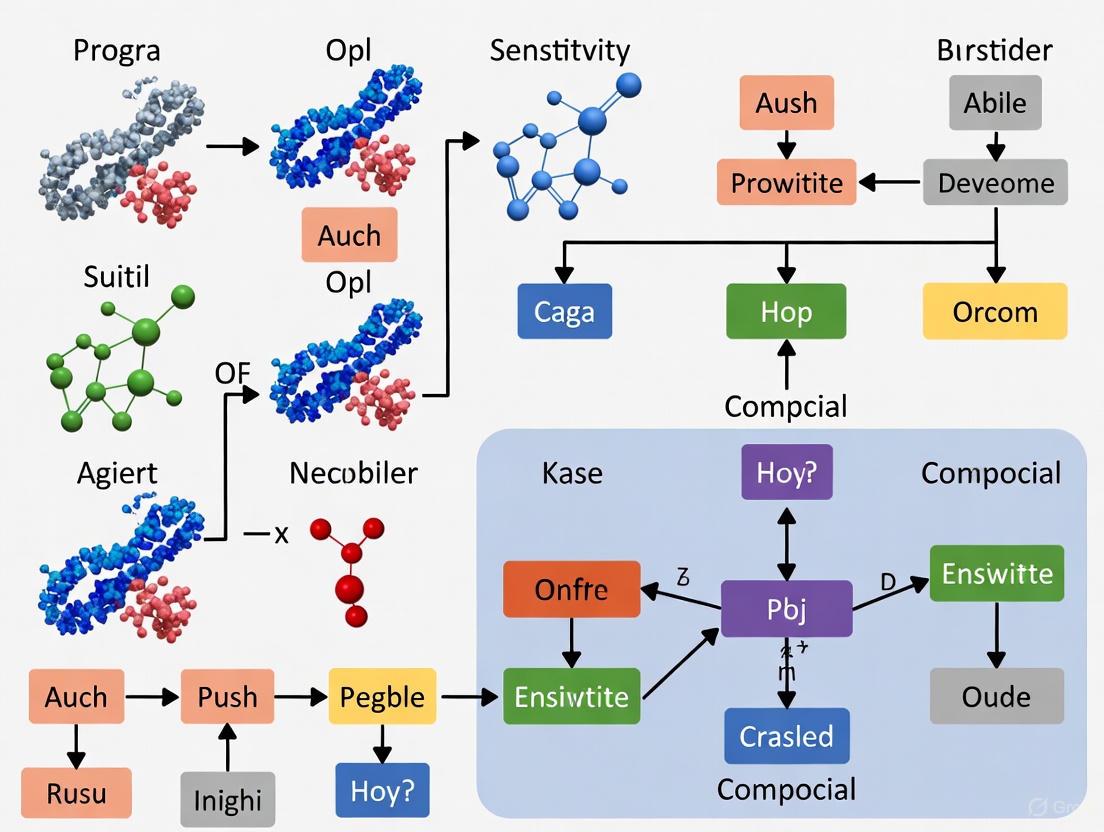

Visualizing Workflows and Signaling Pathways

Diagram: Experimental Workflow for Serum Proteomics

Diagram: Signaling Pathways of Identified Low-Abundance Proteins

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Low-Abundance Proteomics Workflows

| Item | Function in Experiment |

|---|---|

| Protein G Column [1] | Immunoaffinity depletion of immunoglobulins from serum/plasma. |

| PrepCell Apparatus [1] | Preparative gel electrophoresis for physical separation and removal of abundant proteins like albumin under denaturing conditions. |

| ProteoMiner (Hexapeptide Library) [3] | Proteome equalization beads to compress dynamic range by enriching low-abundance proteins. |

| Trypsin [1] | Protease for digesting proteins into peptides for LC-MS/MS analysis. |

| Mass Spectrometry Grade Water [5] | Prevents contamination from polymers or ions that interfere with chromatography and ionization. |

| High-Recovery LC Vials [5] | Engineered surfaces minimize adsorption and loss of low-abundance peptides. |

| Iodoacetamide (IAA) [1] | Alkylating agent for cysteine residues during sample preparation. |

| Protease Inhibitor Cocktail [4] | Prevents protein degradation during sample extraction and preparation. |

In mass spectrometry-based proteomics, the dynamic range problem represents a fundamental challenge, particularly when analyzing complex biological fluids like plasma and serum. The protein concentration in these samples can vary by more than ten orders of magnitude, where highly abundant proteins such as albumin and immunoglobulins can constitute over 90% of the total protein content, effectively masking the detection of low-abundance proteins that often have high biological and clinical relevance [7] [8]. This technical brief provides targeted troubleshooting guidance and FAQs to help researchers overcome these barriers and improve sensitivity in low-abundance proteomic analysis.
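
To make the scale of this problem concrete, the short sketch below computes the span between an abundant and a trace protein in orders of magnitude. The two concentrations are illustrative round numbers chosen for the example (roughly 40 mg/mL for albumin and 1 pg/mL for a cytokine); they are assumptions, not values taken from the cited sources, and `orders_of_magnitude` is a hypothetical helper.

```python
import math

def orders_of_magnitude(high_g_per_ml, low_g_per_ml):
    """Return log10 of the concentration ratio between two proteins."""
    return math.log10(high_g_per_ml / low_g_per_ml)

# Illustrative, assumed concentrations (grams per millilitre).
ALBUMIN_G_PER_ML = 40e-3    # about 40 mg/mL
CYTOKINE_G_PER_ML = 1e-12   # about 1 pg/mL

span = orders_of_magnitude(ALBUMIN_G_PER_ML, CYTOKINE_G_PER_ML)
print(f"{span:.1f} orders of magnitude")  # 10.6 orders of magnitude
```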

Understanding the Dynamic Range Challenge

The vast concentration difference between high-abundance and low-abundance proteins in plasma and serum creates significant analytical interference [9]. Key challenges include:

- Signal Masking: High-abundance proteins dominate the MS signal, preventing detection of less abundant species [7].

- Ion Suppression: Low-abundance peptides ionize inefficiently in the presence of highly abundant counterparts [8].

- Sample Capacity Limits: The analytical system becomes saturated by abundant proteins, reducing its ability to detect rare analytes [7].

The following diagram illustrates the core strategy for navigating the dynamic range challenge:

Methodological Approaches & Workflows

Bead-Based Enrichment for Low-Abundance Proteins

Bead-based enrichment uses paramagnetic beads coated with specific binders to selectively isolate target proteins from complex samples [9]. This technique specifically addresses the dynamic range challenge by reducing background interference from highly abundant proteins.

Detailed Protocol:

- Binding: Incubate the plasma/serum sample with coated beads, allowing target proteins to bind via specific interactions [9].

- Washing: Wash beads thoroughly to remove non-specifically bound material and contaminants [9].

- Lysis: Denature, reduce, and alkylate bound proteins using LYSE reagent with incubation in a thermal shaker for 10 minutes [9].

- Digestion: Digest proteins into peptides using trypsin under optimized conditions for complete digestion [9].

- Purification: Clean digested peptides using solid-phase extraction (SPE) to remove contaminants [9].

- MS Analysis: Reconstitute peptides in appropriate solvent for mass spectrometry analysis [9].

Fractionation Techniques for Dynamic Range Compression

Fractionation separates the complex protein mixture into simpler subsets, reducing complexity and increasing proteome coverage [7].

Table 1: Fractionation Techniques for Dynamic Range Management

| Technique | Principle | Application | Impact on Dynamic Range |

|---|---|---|---|

| Liquid Chromatography (LC) | Separates peptides based on hydrophobicity | Pre-fractionation before MS analysis | Reduces sample complexity by spreading it over time [7] |

| Solid-Phase Extraction (SPE) | Selective retention using sorbents (C18, silica, ion-exchange) | Sample clean-up and concentration | Removes interfering substances; concentrates analytes [10] |

| Immunoaffinity Depletion | Antibodies remove top abundant proteins | Pre-processing of plasma/serum samples | Removes ~90% of dominant proteins [8] |

Troubleshooting Guide: FAQs

FAQ 1: How can I improve recovery of low-abundance proteins during sample preparation?

Issue: Low recovery of low-abundance proteins leading to poor detection sensitivity.

Solutions:

- Implement Bead-Based Enrichment: Use specialized kits (e.g., ENRICH-iST) designed specifically for enriching low-abundance proteins from plasma and serum. These kits provide standardized, automatable protocols that improve reproducibility and reduce processing time to approximately 5 hours [9].

- Optimize Solid-Phase Extraction: Select appropriate sorbents for your target analytes. Use reversed-phase sorbents like C18 for hydrophobic compounds, normal-phase sorbents like silica for polar analytes, or ion-exchange sorbents like quaternary amine for charged molecules. Incorporate a weak wash step to remove weakly bound impurities before eluting analytes with a strong wash solvent [10].

- Control Sample Handling: Maintain consistent temperature during processing, use protease inhibitors, and avoid excessive heating during preparation steps to prevent protein degradation [11].

FAQ 2: How can I prevent contamination from masking low-abundance signals?

Issue: Contamination introducing artifacts and masking low-abundance signals.

Solutions:

- Implement Rigorous Contamination Control: Use syringe filters or membrane filters to remove particulate matter. Maintain a clean workspace with proper lab hygiene practices [11].

- Employ Proper Filtration Techniques: Utilize membrane filtration, glass fiber filtration, or centrifugation to remove particulate matter from liquid samples [10].

- Address Common Contamination Sources:

  - Human Keratins: Wear gloves and use clean lab coats

  - Plasticizers: Use high-quality plasticware and filters

  - Carryover Between Samples: Implement proper cleaning protocols and use dedicated equipment [11]

FAQ 3: Why do I get inconsistent results when analyzing multiple plasma samples?

Issue: High variability between technical and biological replicates.

Solutions:

- Standardize Sample Collection: Implement uniform protocols for blood collection, anticoagulant use, and processing timelines [8].

- Automate Sample Preparation: Utilize automated systems for dilution, filtration, solid-phase extraction, and liquid-liquid extraction to minimize human error and variability, especially in high-throughput environments [12].

- Implement Quality Control Measures:

  - Establish standard operating procedures (SOPs)

  - Verify reagent purity and stability

  - Perform regular instrument calibration and maintenance

  - Conduct continuous training and competency assessment for personnel [10]

FAQ 4: How can I reduce matrix effects that suppress ionization of low-abundance peptides?

Issue: Matrix effects causing ion suppression in mass spectrometry.

Solutions:

- Enhance Sample Cleanup: Utilize comprehensive purification steps including solid-phase extraction or liquid-liquid extraction to remove phospholipids and other interfering substances [10] [9].

- Combine Protein Precipitation with Phospholipid Removal: Use products that allow simultaneous removal of proteins and phospholipids for improved MS results [10].

- Implement Extensive Fractionation: Combine multiple separation dimensions (e.g., high-pH reversed-phase fractionation) to reduce sample complexity and minimize co-elution of interfering substances [7].

Research Reagent Solutions

Table 2: Essential Materials for Dynamic Range Challenge in Plasma Proteomics

| Reagent/Kit | Function | Application Context |

|---|---|---|

| ENRICH-iST Kit | Enriches low-abundance proteins onto paramagnetic beads | Plasma/Serum proteomics; compatible with human samples and mammalian species [9] |

| SPE Sorbents (C18, Silica, Ion-Exchange) | Selective retention of target analytes | Sample clean-up and concentration; reversed-phase for hydrophobic compounds [10] |

| Immunoaffinity Depletion Columns | Remove top 7-14 abundant plasma proteins | Sample pre-fractionation; removes ~90% of dominant proteins [8] |

| QuEChERS Kits | Quick, Easy, Cheap, Effective, Rugged, Safe extraction | Food and environmental analyses; complex matrices [10] |

| Trypsin | Proteolytic enzyme for protein digestion | Digests proteins into peptides for MS analysis [9] |

| LYSE Reagent | Denatures, reduces, and alkylates proteins | Protein preparation for digestion [9] |

Successfully navigating the dynamic range challenge in plasma and serum proteomics requires a multifaceted approach combining strategic sample preparation, appropriate fractionation techniques, and rigorous troubleshooting protocols. By implementing the bead-based enrichment methodologies, optimization strategies, and contamination control measures outlined in this technical guide, researchers can significantly improve their detection of low-abundance proteins. These advances in overcoming the dynamic range obstacle are crucial for unlocking the full potential of plasma proteomics in biomarker discovery, disease mechanism elucidation, and drug development.

Frequently Asked Questions (FAQs)

Q: What is the "masking effect" in proteomics?

A: The masking effect refers to the analytical challenge in mass spectrometry where a small number of highly abundant proteins (such as albumin and immunoglobulins in blood plasma) account for approximately 90% of the total protein mass. This vast concentration difference, which can exceed 10 orders of magnitude, causes high-abundance proteins to dominate the MS signal, effectively preventing the detection of many low-abundance proteins that often have critical biological functions [2].

Q: Why are low-abundance proteins important if they are present in such small amounts?

A: Despite their low concentration, many low-abundance proteins, such as cytokines, hormones, and growth factors, carry significant biological importance. They play pivotal roles in regulating immune responses, cell growth, and tissue homeostasis. Deviations in their concentrations often serve as early indicators of disease onset or progression, making them promising candidates for novel drug targets and mechanistic biomarkers in pharmaceutical R&D [2].

Q: What are the main technological approaches to overcome this masking effect?

A: There are two primary approaches. First, affinity-based platforms like Olink's PEA and SomaLogic's SomaScan use antibody- or aptamer-based binding for predefined targets, offering exceptional sensitivity for large-scale biomarker panels. Second, mass spectrometry (MS)-based workflows provide unbiased exploration of thousands of proteins and are highly adaptable. Within MS, key strategies include immunodepletion of top abundant proteins, specific enrichment of low-abundance proteins using kits like ProteoMiner, and multidimensional fractionation to reduce sample complexity [2].

Q: How much can enrichment improve protein detection?

A: The improvement can be substantial. Direct analysis without enrichment typically identifies only a few hundred proteins, predominantly high-abundance ones. Optimized low-abundance protein enrichment protocols can raise identifications to well over a thousand, often reaching 3,000–5,000 proteins per sample. This multi-fold increase reveals previously hidden cytokines and tissue-specific markers [2].

Troubleshooting Common Experimental Issues

Issue: Low number of protein identifications in plasma/serum samples.

- Potential Cause: The dynamic range of the sample exceeds the detection capabilities of the standard MS workflow, with high-abundance proteins masking the signal of low-abundance ones.

- Solution: Implement a pre-fractionation or enrichment step prior to MS analysis.

  - Immunodepletion: Use commercial multi-affinity removal columns (e.g., MARS) to simultaneously deplete the top 7–14 most abundant proteins, removing up to 97–99% of total protein mass [2].

  - Ligand-Based Enrichment: Use technologies like ProteoMiner, which leverages hexapeptide ligand libraries to normalize protein concentrations. High-abundance proteins saturate their ligands, while low-abundance proteins are selectively retained [2].

- Verification: After implementing enrichment, check that the number of identified proteins has significantly increased. Functional pathway analysis should reveal a broader spectrum of biological processes (e.g., inflammation, cell communication) beyond the basic pathways (e.g., coagulation, complement) seen in unenriched samples [2].
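
As a rough worked example of why depletion helps, the sketch below computes how much the relative share of an undepleted protein rises when a given fraction of total protein mass is removed. It assumes the protein of interest is fully recovered in the flow-through, which ignores any losses of carrier-bound proteins. `relative_enrichment` is a hypothetical helper, not part of any vendor software.

```python
def relative_enrichment(fraction_removed):
    """Fold increase in the relative share of a fully retained protein
    after a given fraction of total protein mass has been depleted."""
    if not 0 <= fraction_removed < 1:
        raise ValueError("fraction_removed must be in [0, 1)")
    return 1.0 / (1.0 - fraction_removed)

# Depletion levels of the order quoted for immunoaffinity columns.
for removed in (0.90, 0.97, 0.99):
    fold = relative_enrichment(removed)
    print(f"{removed:.0%} removed -> ~{fold:.0f}-fold relative enrichment")
```

Under these assumptions, removing 90%, 97%, and 99% of protein mass corresponds to roughly 10-, 33-, and 100-fold relative enrichment of the remaining proteins, which is why the last few percent of depletion efficiency matters so much.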

Issue: High technical variability and poor reproducibility in large-scale studies.

- Potential Cause: Traditional multi-step sample preparation processes are often manual and prone to variability.

- Solution:

  - Automate Workflows: Where possible, automate sample preparation steps to minimize manual handling.

  - Use Reproducible MS Platforms: Adopt advanced MS platforms like Bruker timsTOF instruments with PASEF and dual TIMS technologies, which are noted for high reproducibility [2].

  - Use Scanning DIA: For label-free quantification, consider Zeno trap-enabled scanning data-independent acquisition (ZT Scan DIA) on instruments like the ZenoTOF 7600+ system. This method has been shown to improve the detection of quantifiable protein groups by up to 50% at sub-nanogram levels and delivers better quantitative precision (lower CV%) compared to conventional discrete-window DIA [6].

Issue: A significant number of missing values in the data after MS analysis.

- Potential Cause: Missing values are abundant in label-free proteomics, especially for low-abundance peptides near the instrument's detection limit.

- Solution: Apply a sophisticated imputation strategy. Do not simply ignore missing values.

  - Recommended Method: Consider using deep learning-based imputation approaches, such as the Proteomics Imputation Modeling Mass Spectrometry (PIMMS) workflow, which uses variational autoencoders (VAE). In one study, after 20% of intensities were removed, imputation with PIMMS-VAE recovered 15 of 17 significantly abundant protein groups. When applied to the full dataset, it identified 30 additional proteins (+13.2%) that were significantly differentially abundant compared to no imputation [13].

  - Comparison: This method can outperform traditional imputation methods like random draws from a down-shifted normal (RSN) distribution or k-nearest neighbors (KNN) [13].
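
For orientation, the traditional baseline that deep-learning imputation is compared against can be sketched in a few lines. The block below illustrates imputation by random draws from a down-shifted normal distribution; it is not PIMMS. The shift of 1.8 standard deviations and width of 0.3 are commonly used defaults and are assumptions here, as are the function name and the toy log2 intensities.

```python
import random
import statistics

def impute_downshifted_normal(log_intensities, shift=1.8, width=0.3, seed=0):
    """Replace None values with draws from a normal distribution centred
    below the observed mean (values are assumed to be log-transformed)."""
    observed = [v for v in log_intensities if v is not None]
    mean = statistics.mean(observed)
    sd = statistics.stdev(observed)
    rng = random.Random(seed)  # fixed seed so the example is reproducible
    return [
        v if v is not None else rng.gauss(mean - shift * sd, width * sd)
        for v in log_intensities
    ]

# Toy log2 intensities for one sample; None marks a missing value.
sample = [24.1, 22.8, None, 26.3, None, 23.5, 25.0]
imputed = impute_downshifted_normal(sample)
print([round(v, 2) for v in imputed])
```

The design choice encoded here is that missing values are assumed to arise mostly from signals near the detection limit, so they are filled with low values. A learned model instead predicts each missing value from patterns across proteins and samples.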

Table 1: Performance Comparison of Key Proteomics Technologies for Low-Abundance Protein Detection

| Technology / Method | Key Principle | Reported Protein Identifications | Advantages | Limitations |

|---|---|---|---|---|

| Standard LC-MS/MS (No Enrichment) | Direct digestion and analysis of plasma [2] | A few hundred proteins [2] | Simple, fast workflow | Overwhelmed by dynamic range; misses critical low-abundance signals |

| MS with Low-Abundance Enrichment | Immunodepletion or ProteoMiner to compress dynamic range [2] | 3,000 - 5,000+ proteins [2] | Dramatically increases depth; reveals signaling molecules | Adds complexity and cost to sample preparation |

| Olink PEA / SomaScan | Affinity-based (antibody/aptamer) binding to predefined targets [2] | Varies by panel size | Exceptional sensitivity and dynamic range | Limited to predefined targets; potential specificity issues |

| ZenoTOF 7600+ with ZT Scan DIA | Scanning quadrupole DIA with trap pulsing for enhanced sensitivity [6] | Up to 50% more quantifiable proteins at sub-nanogram loads [6] | Excellent for limited samples; high quantitative precision (<20% CV) [6] | Requires specific instrumentation |

Table 2: Impact of Data Imputation Methods on Protein Discovery

| Imputation Method | Type | Reported Performance |

|---|---|---|

| No Imputation | N/A | Baseline; misses proteins due to missing values [13] |

| Random Forest (RF) | Machine Learning | Fails to scale well on high-dimensional data [13] |

| k-Nearest Neighbors (KNN) | Machine Learning | Can be applied to larger datasets [13] |

| PIMMS (VAE) | Self-Supervised Deep Learning | Recovered 15/17 significant proteins in validation; found +30 (+13.2%) significant proteins in full analysis [13] |

Detailed Experimental Protocols

Protocol 1: Immunodepletion of High-Abundance Plasma Proteins

This protocol is for depleting the top 14 most abundant proteins from human plasma or serum using a commercial immunoaffinity column (e.g., MARS 14) to enhance the detection of low-abundance proteins [2].

Materials:

- Research Reagent: Multi-Affinity Removal System (MARS) Column (e.g., Hu-14)

- Equipment: HPLC or FPLC system compatible with the column

- Buffers: Buffer A (equilibration buffer) and Buffer B (stripping buffer) as specified by the column manufacturer

Procedure:

- Sample Preparation: Dilute the plasma or serum sample with the recommended Buffer A according to the manufacturer's instructions (often a 1:5 dilution). Centrifuge the diluted sample at high speed (e.g., 15,000 x g) for 10 minutes to remove any particulates.

- System Setup: Install the MARS column into the HPLC/FPLC system. Equilibrate the column with Buffer A at the recommended flow rate until a stable baseline is achieved (typically 5-10 column volumes).

- Sample Injection: Inject the clarified, diluted plasma sample onto the column.

- Flow-Through Collection: The low-abundance and mid-abundance proteins, which are not bound by the antibodies on the column, will flow through. Collect this fraction, which represents the enriched proteome.

- Column Regeneration: After the flow-through is collected, switch to 100% Buffer B to elute the bound, high-abundance proteins (e.g., albumin, IgG, etc.) from the column.

- Re-equilibration: Re-equilibrate the column with Buffer A for at least 5-10 column volumes before the next injection.

- Desalting/Concentration: The collected flow-through fraction (enriched proteome) must be desalted and concentrated (e.g., using a centrifugal filter with a 3-5 kDa cutoff) before proceeding to downstream steps like digestion and LC-MS/MS.

Troubleshooting Note: Be aware that proteins bound to depleted carriers (like albumin) may be unintentionally co-removed. The enrichment effect is profound, but not perfect [2].

Protocol 2: Zeno Trap-Enabled Scanning DIA (ZT Scan DIA) for Sensitive Detection

This protocol outlines the setup for a ZT Scan DIA method on a ZenoTOF 7600+ system for analyzing low-input protein samples, such as sub-nanogram digests [6].

Materials:

- Research Reagent: Trypsin-digested protein sample (e.g., K562 cell line digest)

- Equipment: ZenoTOF 7600+ mass spectrometer coupled to a nanoflow or microflow LC system

- Software: SCIEX OS software and DIA-NN (v1.8.1 or later) for data processing

Procedure:

- LC Separation:

  - For nanoflow LC, use an analytical column (e.g., IonOpticks Aurora Elite SX, 15 cm x 75 µm) with a flow rate of 150 nL/min. Employ a 30-minute active gradient from 3% to 35% acetonitrile.

  - For microflow LC, use a column (e.g., Phenomenex XB-C18, 15 cm x 300 µm) at a flow rate of 5 µL/min with a 15-minute active gradient.

- Mass Spectrometer Method Setup:

  - In the method editor, select the ZT Scan DIA acquisition mode.

  - Set the MS and MS/MS mass ranges (e.g., 400-900 Da for MS, 100-1750 Da for MS/MS).

  - Define the Estimated Peak Width at Half Height (PWHH) based on your LC method. For a 30-minute nanoflow gradient, a PWHH of > 0.5 sec is typical, which automatically configures a 7.5 Da-wide Q1 isolation window scanned at 375 Da/sec [6].

  - Ensure that Zeno trap pulsing is enabled to maximize MS/MS sensitivity.

- Data Acquisition: Inject the low-load sample (e.g., 0.5 ng on-column) and run the method in technical replicate (n=3) for robust quantitation.

- Data Processing:

  - Process the raw data files using DIA-NN software.

  - Use the --scanning-swath command line option when processing ZT Scan DIA data.

  - Search against an appropriate spectral library (e.g., a K562/HeLa library).

  - In the report, filter protein groups based on quantification quality, using a coefficient of variation (CV) < 20% as a good benchmark for reliable quantification [6].
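
The CV-based filtering step can be sketched as follows. This is a minimal illustration that assumes replicate quantities have already been exported into a plain dictionary; it does not parse any particular report format, and the protein group names, quantities, and helper functions are hypothetical.

```python
import statistics

def coefficient_of_variation(values):
    """CV in percent: sample standard deviation divided by the mean."""
    return 100.0 * statistics.stdev(values) / statistics.mean(values)

def filter_by_cv(quantities, max_cv=20.0):
    """Keep protein groups whose replicate CV is below max_cv percent."""
    kept = {}
    for protein, replicates in quantities.items():
        cv = coefficient_of_variation(replicates)
        if cv < max_cv:
            kept[protein] = round(cv, 1)
    return kept

# Hypothetical quantities from three technical replicates per protein group.
replicates = {
    "PG_A": [1000.0, 1050.0, 980.0],
    "PG_B": [200.0, 320.0, 150.0],
    "PG_C": [55.0, 60.0, 52.0],
}
print(filter_by_cv(replicates))  # PG_B is dropped because its CV exceeds 20%
```

With only three replicates the CV estimate is itself noisy, so the 20% threshold is best treated as a screening benchmark rather than a strict acceptance criterion.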

Essential Visualizations

Diagram 1: High-Abundance Protein Masking Effect and Solutions

High-Abundance Masking and Resolution Pathways

Diagram 2: Low-Abundance Proteomics Experimental Workflow

Low-Abundance Proteomics Workflow

The Scientist's Toolkit: Key Research Reagents & Materials

Table 3: Essential Research Reagents and Platforms for Low-Abundance Proteomics

| Item / Reagent | Function / Principle | Key Application Note |

|---|---|---|

| MARS Immunodepletion Columns | Immunoaffinity removal of the top 7-14 abundant plasma proteins (e.g., albumin, IgG) to compress dynamic range [2]. | Depletes up to 97-99% of total protein mass, enabling detection of thousands more proteins [2]. |

| ProteoMiner Kit | Uses a hexapeptide ligand library to normalize protein concentrations; binds and enriches low-abundance species [2]. | An alternative to depletion; retains low-abundance proteins that might be bound to carriers like albumin [2]. |

| ZenoTOF 7600+ System | Mass spectrometer employing Zeno trap pulsing and scanning DIA for ultra-sensitive MS/MS detection [6]. | Identifies up to 50% more quantifiable protein groups at sub-nanogram sample loads [6]. |

| DIA-NN Software | Computational tool for processing DIA mass spectrometry data, supporting advanced methods like ZT Scan DIA [6]. | Essential for decoding complex DIA data; used with --scanning-swath flag for ZT Scan DIA data [6]. |

| PIMMS Workflow | A self-supervised deep learning tool (using Variational Autoencoders) for imputing missing values in proteomics data [13]. | Recovers significant protein hits that would be lost without imputation, increasing analytical depth [13]. |

Proteomic analysis of unique biological matrices, such as human milk and single cells, presents distinct challenges that extend far beyond those encountered with plasma. While plasma proteomics must contend with an extreme dynamic range of protein concentrations, human milk contains complex lipid globules and extracellular vesicles, and single-cell analysis deals with vanishingly small starting material. This technical support center addresses the specific experimental hurdles in these domains, providing targeted troubleshooting guidance to enhance sensitivity, quantification accuracy, and overall data quality in low-abundance proteomic research.

Technical FAQs and Troubleshooting Guides

Human Milk Proteomics

Q: What are the primary challenges when analyzing the human milk proteome, and how can I improve protein recovery?

Human milk is a complex fluid containing proteins, lipids, extracellular vesicles (mEVs), and other bioactive molecules. Its composition varies by lactation stage and individual donor [14]. Key challenges include:

- Sample Complexity: The high fat content can interfere with protein extraction and subsequent LC-MS analysis.

- Dynamic Range: A wide range of protein abundances can obscure lower-abundance signaling molecules.

- Vesicle Isolation: Efficiently isolating extracellular vesicles, which carry important functional cargo, is technically challenging.

Troubleshooting Guide:

| Symptom | Possible Cause | Recommended Action |

|---|---|---|

| Low protein yield/coverage | Inefficient defatting; suboptimal extracellular vesicle (mEV) isolation | Perform sequential centrifugation: 300 × g for 10 min, then 3,000 × g for 10 min at 4°C to remove fat globules [14]. |

| High background noise in MS | Polymer or keratin contamination; lipid carryover | Use filter tips and HPLC-grade water. Avoid autoclaving plastics and using detergents to clean glassware [4]. |

| Inconsistent mEV isolation | Using a method with low reproducibility for milk | Consider the ExoGAG isolation method, which has demonstrated higher efficiency, concentration of vesicle-related proteins, and reproducibility compared to ultracentrifugation (UC) and other techniques [14]. |

| Poor peptide detection | Over- or under-digestion of proteins; unsuitable peptide sizes | Optimize trypsin digestion time. Consider double digestion with two different proteases to generate a more ideal peptide population for MS detection [4]. |

Q: How does the protein composition of alternative milk sources compare to human milk?

Donkey milk has been identified as a closer match to human milk in its protein and endogenous peptide profile compared to cow's milk. Donkey milk proteins are less likely to cause allergic reactions and are being investigated as a novel raw material for infant formula [15].

Single-Cell Proteomics (SCP)

Q: My single-cell proteomics experiment shows low protein identifications. What steps can I take to optimize sensitivity?

Low protein counts are common in SCP due to the minute starting material. Losses at any step can drastically impact outcomes.

Troubleshooting Guide:

| Symptom | Possible Cause | Recommended Action |

|---|---|---|

| Low protein/peptide detection | Sample loss during processing; protein degradation | Scale up the number of cells sorted. Add a broad-spectrum, EDTA-free protease inhibitor cocktail (or a single inhibitor such as PMSF) to all buffers during sample prep [4]. |

| Inability to detect low-abundance proteins | Signal overwhelmed by high-abundance proteins; limited MS sensitivity | Use a carrier channel in a multiplexed design (e.g., SCoPE-MS, TMTPro). A 200-cell equivalent carrier can boost signal for identification without severely compromising quantification [16]. |

| "Missing" proteins in specific samples | Protein not expressed or lost during lysis | Verify protein presence in the input sample by Western Blot. Use a more efficient, chaotropic lysis buffer (e.g., Trifluoroethanol-based) instead of pure water to improve lysis and peptide recovery [16]. |

| Poor quantitative accuracy | Insufficient ion sampling; low signal-to-noise | Increase MS injection times and AGC targets to improve ion counting statistics, balancing this against a potential reduction in proteome depth due to slower scan speeds [16]. |

Q: What are the critical considerations for designing a robust single-cell proteomics workflow?

The fundamental challenge is the analytical barrier posed by the small amount of protein in a single cell [17]. A robust SCP workflow must address:

- Cell Isolation: Using FACS to sort individual cells into lysis plates. Index-sorting, which records FACS parameters for each cell, allows for integration of surface marker data with proteomic data [16].

- Sample Preparation: Employing miniaturized, high-efficiency protocols like single-pot solid-phase-enhanced sample preparation (SP3) to minimize losses [17].

- Multiplexing: Using isobaric tags (e.g., TMTPro) to simultaneously analyze multiple single cells, increasing throughput and incorporating a carrier channel for signal enhancement [16].

- LC-MS/MS Analysis: Utilizing advanced instruments with ion mobility separation (e.g., FAIMS) to reduce interference and increase proteome depth [16].

- Data Analysis: Applying specialized computational tools (e.g., SCeptre) that can normalize batch effects, integrate index-sorting data, and handle the unique statistical nature of SCP data [16].
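As one illustration of the data-analysis step, the sketch below median-centers log2 reporter intensities within each cell so that cells with different total signal become comparable. It is a generic normalization, not the SCeptre algorithm. The function name, input layout, and example intensities are assumptions made for illustration. It corrects for loading differences only and does not model batch effects.

```python
import math
from statistics import median


def median_center_log2(cell_intensities):
    """Median-center log2 intensities within each cell.

    cell_intensities: dict mapping cell id -> dict of protein -> raw intensity.
    Zero or negative intensities are treated as missing and dropped.
    """
    normalized = {}
    for cell, proteins in cell_intensities.items():
        logs = {p: math.log2(v) for p, v in proteins.items() if v > 0}
        if not logs:
            normalized[cell] = {}
            continue
        center = median(logs.values())
        normalized[cell] = {p: x - center for p, x in logs.items()}
    return normalized


# Hypothetical reporter intensities: cell_b has twice the signal of cell_a.
example = {
    "cell_a": {"P1": 100.0, "P2": 400.0, "P3": 1600.0},
    "cell_b": {"P1": 200.0, "P2": 800.0, "P3": 3200.0},
}
for cell, values in median_center_log2(example).items():
    print(cell, {p: round(x, 3) for p, x in values.items()})
```

After centering, the two example cells have matching profiles, which is the intended effect when the only difference between cells is total signal.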

Experimental Protocols for Key Techniques

Protocol 1: Isolation of Extracellular Vesicles from Human Milk for Proteomics

Objective: To isolate mEVs from human milk with high purity and yield for downstream proteomic analysis, adapted from [14].

Reagents: PBS, ExoGAG reagent (or alternatives for UC, SEC), SDS buffer.

Procedure:

- Sample Collection & Defatting: Collect human milk and perform sequential centrifugation.

- Centrifuge at 300 g for 10 min at 4°C. Remove the fat globule layer.

- Centrifuge the supernatant at 3,000 g for 10 min at 4°C. Retain the supernatant.

- EV Isolation (ExoGAG Method):

- Incubate the defatted sample with ExoGAG reagent in a 1:2 ratio for 15 min at 4°C.

- Centrifuge at 16,000 g for 15 min at 4°C.

- Discard the supernatant and resuspend the pellet (containing mEVs) in 1% SDS for proteomics.

- Validation: Characterize vesicle size and concentration using Dynamic Light Scattering (DLS) [14].
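Many benchtop centrifuges are set in rpm rather than in multiples of g, so the forces in the procedure above need to be converted for the rotor in use. The sketch below applies the standard relation RCF = 1.118 × 10⁻⁵ × r × rpm² (r in cm). The rotor radius is a hypothetical value and should be replaced with the figure from the rotor's documentation.

```python
import math

# Standard relation: RCF (x g) = 1.118e-5 * radius_cm * rpm**2
RCF_CONSTANT = 1.118e-5


def rcf_to_rpm(rcf_g, rotor_radius_cm):
    """Return the rotor speed in rpm that produces the requested RCF."""
    if rcf_g <= 0 or rotor_radius_cm <= 0:
        raise ValueError("RCF and rotor radius must be positive")
    return math.sqrt(rcf_g / (RCF_CONSTANT * rotor_radius_cm))


def rpm_to_rcf(rpm, rotor_radius_cm):
    """Return the RCF (x g) produced at a given rotor speed."""
    return RCF_CONSTANT * rotor_radius_cm * rpm ** 2


# Hypothetical rotor radius; read the real value from the rotor manual.
ROTOR_RADIUS_CM = 8.0

for step, rcf in [("defatting 1", 300), ("defatting 2", 3000), ("mEV pellet", 16000)]:
    print(f"{step}: {rcf} x g -> {rcf_to_rpm(rcf, ROTOR_RADIUS_CM):.0f} rpm")
```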

Protocol 2: Multiplexed Single-Cell Proteomics Workflow Using TMTPro

Objective: To prepare and analyze proteomes from hundreds of individual cells, enabling the study of cellular heterogeneity, based on [16].

Reagents: FACS buffer, Trifluoroethanol (TFE)-based lysis buffer, TMTPro 16-plex kit, C18 StageTips.

Procedure:

- Cell Sorting and Lysis:

- Use FACS to sort single cells into individual wells of a 384-well PCR plate containing TFE-based lysis buffer. Enable index-sorting to record cell parameters.

- Lyse cells through a freeze-boil cycle.

- Digestion and Labeling:

- Digest proteins overnight with trypsin.

- Label the peptides in each well with a unique TMTPro channel.

- Carrier Channel Preparation:

- Sort 500-cell aliquots into a separate plate, digest, and label to create a "booster" channel.

- Clean up the booster peptide mix using C18 StageTips.

- Sample Pooling and MS Analysis:

- Pool the 14 single-cell samples and combine them with a 200-cell equivalent of the booster.

- Analyze the pooled sample via LC-MS/MS on an Orbitrap instrument equipped with FAIMS, using multiple compensation voltages to increase depth.
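The pooling step can be planned ahead of time. The sketch below groups sorted cells into pools of 14 single cells plus a 200-cell-equivalent booster, as described in the procedure. The function name, the well-style cell identifiers, and the assumption that all 384 wells hold single cells are illustrative. Which TMTPro channels carry the booster or are left empty is not specified here and should follow the labeling scheme in [16].

```python
def plan_tmt_pools(cell_ids, cells_per_pool=14, booster_cell_equivalents=200):
    """Group single cells into pools that each receive one booster aliquot."""
    pools = []
    for start in range(0, len(cell_ids), cells_per_pool):
        members = cell_ids[start:start + cells_per_pool]
        pools.append({
            "pool": len(pools) + 1,
            "single_cells": members,
            "booster_cell_equivalents": booster_cell_equivalents,
            # Booster material relative to all single cells in the pool.
            "booster_to_single_cell_total": booster_cell_equivalents / len(members),
        })
    return pools


# Hypothetical identifiers for one fully used 384-well plate.
cells = [f"well_{i:03d}" for i in range(1, 385)]
pools = plan_tmt_pools(cells)
print(f"{len(pools)} pools; last pool holds {len(pools[-1]['single_cells'])} cells")
```

A partially filled final pool has a higher booster-to-cell ratio than the others, so it may be worth flagging such pools before quantitative comparison.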

Comparative Data Tables

Table 1: Performance Comparison of Human Milk Extracellular Vesicle Isolation Methods

This table compares four common mEV isolation techniques based on a 2025 study, evaluating their performance for omics studies [14].

| Isolation Method | Principle | Relative Protein/Peptide Yield | Vesicle Purity | Reproducibility | Suitability for Omics |

|---|---|---|---|---|---|

| ExoGAG | Glycosaminoglycan binding | High | High | High | Excellent |

| Ultracentrifugation (UC) | Size/Density | Medium | High | Medium | Good |

| Size Exclusion Chromatography (SEC) | Size | Low | Medium | Medium | Moderate |

| Immunoprecipitation (IP_CD9) | Surface marker (CD9) | Low | High (for CD9+ EVs) | Low | Limited |

Table 2: Impact of MS Instrument Settings on Single-Cell Proteomics Performance

Data derived from testing different ion injection times on quantitative performance, using a booster-based workflow [16].

| MS Parameter Setting | Proteome Depth (Proteins/Cell) | Quantitative Accuracy | Quantitative Precision | Recommended Use Case |

|---|---|---|---|---|

| Injection Time: 150 ms | ~1100 | Lower | Lower | Maximum discovery depth |

| Injection Time: 300 ms | ~1000 | Medium | Medium | Balanced depth and quality |

| Injection Time: 500 ms | ~900 | High | High | High-quality quantification |

| Injection Time: 1000 ms | ~800 | Very High | Very High | Targeted/validation studies |
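The trend in Table 2 is consistent with ion-counting statistics: if the number of ions collected grows in proportion to injection time and noise is dominated by Poisson counting error, the relative error falls with the square root of the injection time. The sketch below works through that arithmetic under those assumptions (constant ion flux, AGC target not reached). It is an idealized model, not a reproduction of the measurements in [16].

```python
import math


def relative_shot_noise_cv(injection_time_ms, reference_ms=150.0):
    """Counting-noise CV relative to a reference injection time.

    Assumes ion count is proportional to injection time and Poisson noise,
    so CV scales as 1 / sqrt(ion count).
    """
    if injection_time_ms <= 0 or reference_ms <= 0:
        raise ValueError("injection times must be positive")
    return math.sqrt(reference_ms / injection_time_ms)


for injection_time in (150, 300, 500, 1000):
    cv = relative_shot_noise_cv(injection_time)
    print(f"{injection_time:>4} ms: relative CV = {cv:.2f}")
```

Under this model, quadrupling the injection time halves the counting-noise CV, while the slower scan rate reduces the number of precursors sampled per run.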

Workflow Visualizations

General Workflow for Low-Abundance Proteomics

Single-Cell Proteomics with Multiplexing

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 3: Key Reagents for Advanced Proteomic Studies

| Item | Function | Example Application |

|---|---|---|

| ExoGAG | Isolates glycosylated extracellular vesicles via GAG binding. | Efficient isolation of mEVs from human milk for proteomic and transcriptomic analysis [14]. |

| TMTPro 16-plex | Isobaric mass tags for multiplexing samples. | Labeling and pooling up to 16 samples (e.g., single cells) for simultaneous LC-MS analysis, improving throughput [16]. |

| Trifluoroethanol (TFE) Lysis Buffer | Chaotropic agent for efficient cell lysis and protein denaturation. | Superior lysis of individual cells in low-volume wells compared to pure water, increasing peptide identifications [16]. |

| FAIMS Pro Interface | Gas-phase fractionation device that filters ions by mobility. | Reduces sample complexity and co-isolation interference during MS analysis, increasing proteome depth [16]. |

| Combinatorial Ligand Libraries | Libraries of diverse peptide baits to capture low-abundance proteins. | Concentrating the "low-abundance" proteome while reducing high-abundance compounds in complex samples like plasma [18]. |

Practical Strategies for Enhanced Sensitivity: From Sample Prep to Instrumentation

The analysis of low-abundance proteins is a significant challenge in proteomic research, particularly when they are potential biomarkers for disease. Biological fluids like plasma and serum possess a vast dynamic range of protein concentrations, often exceeding 10 orders of magnitude, where a small number of highly abundant proteins (HAPs) can constitute over 90% of the total protein mass [19] [20]. This dominance masks the detection of less abundant, but biologically critical, proteins. To overcome this, sample pre-fractionation is an essential first step. Two principal strategies are immunodepletion, which removes specific HAPs, and ProteoMiner-based equalization, which compresses the dynamic range by reducing high-abundance signals and simultaneously enriching low-abundance ones [20]. This guide explores these techniques to help you select and troubleshoot the optimal approach for your research on low-abundance proteomics.

Technology Deep Dive: Mechanisms and Comparisons

Immunodepletion utilizes antibodies immobilized on a solid support to selectively remove a predefined set of high-abundance proteins from a sample. The process involves passing the sample over the antibody-coated resin, where target HAPs bind, allowing the low-abundance proteins (LAPs) to be collected in the flow-through [21] [19].

- Mechanism: Antibody-antigen affinity capture.

- Outcome: Selective subtraction of HAPs (e.g., 6, 7, 14, or 20 proteins) to reveal LAPs.

ProteoMiner (Hexapeptide Library) technology uses a vast combinatorial library of hexapeptides bound to a chromatographic support. Each unique bead carries a different peptide sequence that can bind to a specific protein. Because the binding capacity for each protein is limited, HAPs quickly saturate their ligands, and the excess is washed away. Conversely, LAPs are concentrated on their specific ligands and then eluted [3] [20].

- Mechanism: Combinatorial hexapeptide ligand library binding with limited capacity.

- Outcome: Dynamic range compression; reduction of HAPs and concurrent enrichment of LAPs.
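The two mechanisms can be contrasted with a toy numerical model. In the sketch below, immunodepletion removes a fixed fraction of the targeted proteins, while equalization caps every protein at the same per-ligand binding capacity. The protein amounts, the capacity value, and the function names are invented for illustration and are not measured data; real ligand capacities and affinities vary from bead to bead.

```python
import math


def dynamic_range_log10(amounts):
    """Orders of magnitude between the most and least abundant protein."""
    positive = [v for v in amounts.values() if v > 0]
    return math.log10(max(positive) / min(positive))


def immunodeplete(amounts, targets, efficiency=0.98):
    """Remove a fixed fraction of each targeted protein; leave others untouched."""
    return {name: amount * (1.0 - efficiency) if name in targets else amount
            for name, amount in amounts.items()}


def equalize(amounts, capacity_per_ligand):
    """Cap each protein at the ligand capacity; the excess is washed away."""
    return {name: min(amount, capacity_per_ligand) for name, amount in amounts.items()}


# Illustrative amounts in arbitrary units (not measured values).
sample = {"albumin": 4e10, "IgG": 1e10, "transferrin": 2.5e9,
          "mid_abundance": 1e6, "cytokine": 1e1}

depleted = immunodeplete(sample, targets={"albumin", "IgG"})
equalized = equalize(sample, capacity_per_ligand=1e5)

print(f"untreated:      {dynamic_range_log10(sample):.1f} orders of magnitude")
print(f"immunodepleted: {dynamic_range_log10(depleted):.1f} orders of magnitude")
print(f"equalized:      {dynamic_range_log10(equalized):.1f} orders of magnitude")
```

In this model the untargeted transferrin becomes the dominant species after immunodepletion, which illustrates why depletion is limited to its predefined targets, whereas the capacity cap compresses every abundant protein at once.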

The following diagram illustrates the fundamental operational differences between these two technologies.

Quantitative Performance Comparison

The choice between immunodepletion and ProteoMiner has significant implications for the outcome of your proteomic analysis. The table below summarizes a direct comparative study of these methods.

Table 1: Direct Comparison of Immunodepletion and ProteoMiner Performance

| Feature | Immunodepletion (e.g., ProteoPrep20) | ProteoMiner (Hexapeptide Library) |

|---|---|---|

| Primary Action | Targeted removal of specific HAPs (e.g., 6, 12, 14, 20) [19] [20] | Non-targeted dynamic range compression [3] [20] |

| HAP Removal Efficiency | Very high (>98% for targeted proteins) [19] | High (saturates and removes excess HAPs) [20] |

| LAP Enrichment | Indirect, by reducing background [20] | Direct, by concentrating LAPs on the beads [3] [20] |

| Number of Proteins Identified | Identifies a high number of medium-abundance proteins [20] | Identifies more unique, very low-abundance proteins [20] |

| Sample Throughput | Faster; suitable for spin columns or HPLC automation [19] [22] | Slower; involves incubation and multiple steps [20] |

| Cost & Reusability | Columns can be expensive; some can be regenerated [19] | Initial kit cost; beads are typically for single use [20] |

| Key Advantage | Deep, specific removal of known HAPs | Discovery-oriented; can reveal novel LAPs without predefining targets |

| Main Limitation | Limited to predefined targets; potential for non-specific binding [21] [20] | Does not completely remove any single protein; sample dilution possible [19] [20] |

Troubleshooting Guides & FAQs

Common Issues and Solutions for Immunodepletion

Problem: Inefficient Depletion of Target Proteins

- Potential Cause: Insufficient antibody amount or poor antibody affinity [21].

- Solution: Ensure you are using a purified antibody and increase the antibody concentration. The recommended range can be from a few µg/mL up to 100 µg/mL, which is much higher than the concentrations typically used for Western blotting [21]. Use a significant excess (3-4 fold) of the bead conjugate over the estimated target protein load [21].

Problem: Co-depletion of Non-Target (Low-Abundance) Proteins

- Potential Cause: Non-specific binding to the support matrix or antibodies. In serum/plasma, target biomarkers can also be associated with carrier HAPs like albumin [19] [22].

- Solution: Optimize binding and wash buffers to minimize non-specific interactions. Using IgY antibodies (from egg yolks) can reduce cross-reactivity with human proteins (e.g., complement, rheumatoid factors) compared to mammalian IgG-based systems [19].

Problem: Low Recovery of Low-Abundance Proteins

- Potential Cause: Sample dilution during the process or incomplete elution of LAPs from the column.

- Solution: Concentrate the flow-through fraction after depletion. For methods assessing bound proteins, ensure the elution buffer is efficient and compatible with downstream MS analysis.

Common Issues and Solutions for ProteoMiner

Problem: Incomplete Dynamic Range Compression

- Potential Cause: Overloading the column with too much total protein, leading to inadequate capture of LAPs.

- Solution: Do not exceed the recommended protein load for the column size. The technology relies on limited binding sites; overloading will allow HAPs to pass through without being "normalized" [20].

Problem: High Background of HAPs in Final Eluate

- Potential Cause: Inadequate washing after sample loading, leaving excess HAPs in the column.

- Solution: Follow the manufacturer's washing protocol rigorously. Increase wash volumes or number of washes if necessary, ensuring the wash buffer is optimized to remove unbound and weakly bound proteins without eluting the captured LAPs.

Problem: Poor Reproducibility Between Experiments

- Potential Cause: Inconsistent sample loading, incubation times, or washing steps.

- Solution: Precisely standardize the protocol. Use consistent sample volumes, ensure thorough but gentle mixing during incubation, and strictly adhere to timing for each step. Automated liquid handlers can improve reproducibility.

Frequently Asked Questions (FAQs)

Q1: Which method is better for the discovery of novel biomarkers?

A1: For truly novel biomarker discovery where target proteins are unknown, ProteoMiner has a potential advantage. It enriches for a broader spectrum of low-abundance proteins without being biased towards pre-defined HAP targets [20]. For studies focused on a specific pathway where the interfering HAPs are well-known, high-specificity immunodepletion may be more efficient.

Q2: Can I combine these two methods?

A2: Yes, a multi-step approach can be highly effective. One common strategy is to first perform immunodepletion to remove the top 12-14 HAPs, followed by ProteoMiner treatment on the flow-through to further compress the dynamic range of the remaining proteins. This has been shown to enable detection of proteins at lower concentrations than either method alone [19].

Q3: What is a realistic expectation for depletion efficiency?

A3: For immunodepletion, do not expect 100% removal. A reduction of 90% or more for each target HAP is considered quite good [21]. Efficiency should always be confirmed, for example by Western blotting, comparing the original and depleted samples side-by-side [21].
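Depletion efficiency can be estimated from any paired measurement of a target protein before and after depletion, for example densitometry of Western blot bands. The sketch below computes percent removal and flags targets under the 90% benchmark mentioned above. The band intensities and function names are hypothetical, and the calculation assumes both lanes were loaded with equivalent sample fractions.

```python
def depletion_efficiency(signal_before, signal_after):
    """Percent of a target protein removed, from paired signal measurements."""
    if signal_before <= 0:
        raise ValueError("signal_before must be positive")
    return 100.0 * (1.0 - signal_after / signal_before)


def flag_poor_depletion(measurements, threshold_percent=90.0):
    """Return targets whose removal falls below the threshold."""
    return [name for name, (before, after) in measurements.items()
            if depletion_efficiency(before, after) < threshold_percent]


# Hypothetical densitometry values (arbitrary units): (before, after).
bands = {"albumin": (1000.0, 50.0), "IgG": (800.0, 200.0)}
for name, (before, after) in bands.items():
    print(f"{name}: {depletion_efficiency(before, after):.1f}% removed")
print("below 90%:", flag_poor_depletion(bands))
```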

Q4: How does the choice of pre-fractionation impact downstream Mass Spectrometry analysis?

A4: Both methods significantly improve the sensitivity of LC-MS/MS by reducing spectral crowding and allowing the instrument to sample peptides from LAPs rather than being overwhelmed by HAP-derived peptides [6] [3]. This can lead to a 50% increase in the identification of quantifiable protein groups at sub-nanogram levels when using advanced DIA methods like ZenoTOF with scanning DIA [6]. The following workflow integrates pre-fractionation with state-of-the-art MS analysis.

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 2: Key Commercial Kits and Reagents for Sample Pre-Fractionation

| Product Name | Type | Key Features / Targets | Primary Function |

|---|---|---|---|

| Multiple Affinity Removal System (MARS) [19] | Immunodepletion | Removes 6 (Albumin, IgG, IgA, etc.), 7, or 14 human HAPs. Also available for mouse (3 targets). | High-specificity removal of major HAPs via polyclonal antibodies. |

| ProteoPrep20 [19] [20] | Immunodepletion | Removes 20 human HAPs (Albumin, Ig complexes, Fibrinogen, etc.). | Deep depletion of a wider range of HAPs in a single step. |

| Seppro IgY-based Kits [19] | Immunodepletion | Removes 6, 12, or 14 HAPs. Uses chicken IgY antibodies to reduce cross-reactivity. | Specific depletion with minimal non-specific binding to human proteins. |

| ProteoMiner [3] [20] | Protein Equalization | Combinatorial hexapeptide library. | Dynamic range compression via non-targeted enrichment of LAPs. |

| ProteoSpin Abundant Serum Protein Depletion Kit [19] | Ion-Exchange Depletion | Depletes albumin, α1-antitrypsin, transferrin, and haptoglobin. | Cost-effective, non-antibody-based depletion for various mammalian samples. |

Optimized Experimental Protocols

Standard Protocol for Immunodepletion (Spin Column Format)

This protocol is adapted for a typical commercial spin column kit like the ProteoPrep20 [20].

- Sample Preparation: Dilute your plasma/serum sample with the provided PBS buffer (e.g., 8 µL plasma to 100 µL total volume). Filter through a 0.2 µm centrifugal filter to prevent column clogging.

- Column Equilibration: Centrifuge the spin column briefly to remove storage buffer. Add recommended equilibration buffer (e.g., PBS), incubate, and centrifuge to waste. Repeat.

- Sample Loading & Incubation: Apply the prepared sample to the column. Incubate at room temperature for 20 minutes with gentle agitation.

- Collect Flow-Through: Centrifuge the column (e.g., 1500 RCF for 1 min) and collect the depleted plasma (flow-through). Perform additional wash steps with PBS buffer, pooling the flow-throughs.

- Concentrate Sample: Use a centrifugal concentrator with an appropriate molecular weight cutoff to concentrate the pooled flow-through to the desired volume for downstream processing.

- Column Regeneration (Optional): For reusable columns, elute the bound HAPs with a low-pH elution buffer (e.g., 0.1 M Glycine-HCl, pH 2.5). Re-equilibrate immediately with storage buffer.

Standard Protocol for ProteoMiner Enrichment

This protocol outlines the key steps for using the ProteoMiner kit [20].

- Sample Preparation: Dilute or mix your protein sample (e.g., plasma) with a suitable binding buffer.

- Sample Loading & Incubation: Add the sample to the ProteoMiner beads packed in a spin column. Incubate with gentle mixing for a specified time (e.g., 2-3 hours) to allow proteins to bind their cognate ligands.

- Washing: Centrifuge to collect the "wash-through" which contains the excess, unbound HAPs. Perform several washes with binding or wash buffer to remove all non-specifically bound proteins.

- Elution of Enriched Proteins: Elute the captured proteins using a small volume of a denaturing elution buffer (e.g., 5% acetic acid, 8 M urea, 2% CHAPS). This step releases the LAPs concentrated on the beads.

- Sample Preparation for MS: The eluted protein fraction can now be processed using standard protocols for reduction, alkylation, and tryptic digestion before LC-MS/MS analysis.

Advanced Enrichment Techniques for Low-Abundance and Low-Molecular-Weight Proteins

Frequently Asked Questions (FAQs)

Q1: Why is specialized enrichment necessary for studying low-abundance and low-molecular-weight proteins?

Biological samples like plasma, serum, or tissue extracts contain a small number of proteins at very high concentrations, which can dominate analysis and mask the signal of less abundant species. The dynamic concentration range in human plasma, for example, can span over 12 orders of magnitude. Without enrichment, the detection of low-abundance proteins (LAPs) and low-molecular-weight proteins (LMWPs) by mass spectrometry is often impossible as they fall below the detection limit of the instrumentation [23] [24].

Q2: What are the primary strategic approaches for enriching these challenging proteins?

There are four main strategic pathways, each with its own advantages and limitations:

- Depletion/Subtraction: Removing high-abundance proteins (e.g., via immunoaffinity columns) to reveal the underlying LAPs [23] [25].

- Enrichment/Capture: Using physical/chemical properties (e.g., size, charge, specific motifs) to directly concentrate the target protein group [26] [25].

- Protein Equalization: Using combinatorial peptide ligand libraries (CPLL) to compress the dynamic range by saturating and limiting the binding of abundant proteins while concentrating LAPs [23] [25].

- Fractionation: Separating the complex protein mixture into simpler fractions via chromatography or other methods to reduce complexity and increase depth of analysis [23].

Q3: My target protein is a cytokine present at trace levels in cell culture supernatant. What is a reliable method for sample preparation?

For secreted proteins like cytokines, a two-pronged approach is recommended. First, you can inhibit secretion using Brefeldin A, causing the protein to accumulate inside the cell for easier lysis and detection. Alternatively, or in combination, you can concentrate the protein from the serum-free culture medium using centrifugal ultrafiltration with a molecular weight cutoff (MWCO) membrane at least two times smaller than your target protein's molecular weight [27].
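The MWCO rule of thumb above (cutoff no larger than half the target's molecular weight) is easy to apply programmatically when choosing among stocked devices. In the sketch below, the list of available cutoffs and the 17 kDa example target are assumptions for illustration. Check your supplier's catalogue for the sizes actually offered.

```python
# Hypothetical set of stocked cutoffs in kDa; adjust to your supplier's range.
AVAILABLE_MWCO_KDA = (3, 5, 10, 30, 50, 100)


def choose_mwco(target_mw_kda, available=AVAILABLE_MWCO_KDA):
    """Return the largest cutoff that is at most half the target's mass.

    Returns None when no stocked device satisfies the rule.
    """
    if target_mw_kda <= 0:
        raise ValueError("target molecular weight must be positive")
    limit = target_mw_kda / 2.0
    candidates = [cutoff for cutoff in available if cutoff <= limit]
    return max(candidates) if candidates else None


# Example: a hypothetical 17 kDa cytokine.
print(choose_mwco(17))   # largest cutoff <= 8.5 kDa
print(choose_mwco(60))   # largest cutoff <= 30 kDa
```

Picking the largest cutoff that still satisfies the rule keeps filtration fast while retaining the target in the concentrate.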

Q4: I work with plant or seed extracts dominated by storage proteins. What are my options?

Plant tissues and seeds are often dominated by a few very abundant proteins, such as RuBisCO in leaves or storage globulins in seeds. Effective methods for this context include protamine sulfate precipitation, which is a simple and low-cost way to deplete abundant storage proteins, or isopropanol extraction, which has been shown to significantly enrich LAPs in soybean seeds for subsequent gel electrophoresis and MS analysis [23] [25].

Q5: What are common pitfalls that lead to poor recovery of LAPs, and how can I avoid them?

Common issues and their solutions include:

- Protein Degradation: Always add protease inhibitor cocktails (EDTA-free if followed by trypsin digestion) to all buffers during sample preparation [4].

- Sample Loss: Low-abundance proteins are easily lost. Monitor each step of your protocol by Western Blot, scale up the starting material, or use carrier proteins where compatible [4].

- Inefficient Depletion: Standard depletion columns can cause significant co-depletion of non-target proteins. Consider alternatives like CPLL or optimized precipitation [23].

- Contamination: Use filter tips, single-use plastics, and HPLC-grade water to avoid contaminants like keratin that interfere with MS detection [4].

Troubleshooting Guides

Table 1: Troubleshooting Low-Abundance Protein Enrichment

| Problem | Potential Cause | Solution |

|---|---|---|

| No signal for target LAP in MS | Protein lost during preparation or below detection limit | Include process controls; use Western Blot to monitor steps; increase starting material; employ an enrichment method like CPLL [4] [25]. |

| High background in MS chromatogram | Incomplete removal of high-abundance proteins (HAPs) | Optimize depletion protocol (e.g., buffer, incubation time); consider a sequential or orthogonal depletion strategy (e.g., precipitation followed by chromatography) [28] [23]. |

| Poor reproducibility between replicates | Inconsistent sample handling or technique | Automate sample preparation where possible; use color-coded and pre-measured reagents; strictly adhere to standardized protocols for incubation times and temperatures [24]. |

| Low protein yield after ultrafiltration | Protein adsorption to membrane or incorrect MWCO | Select a membrane with a different chemistry (e.g., Vivaspin tangential flow membrane); ensure the MWCO is no more than 50% of the target protein's molecular weight [26] [27]. |

| Protein degradation | Protease activity during isolation | Keep samples at 4°C; add a broad-spectrum, EDTA-free protease inhibitor cocktail immediately upon lysis or collection [4]. |

Table 2: Troubleshooting Low-Molecular-Weight Protein/Peptide Analysis

| Problem | Potential Cause | Solution |

|---|---|---|

| LMWPs masked by HAP fragments | Ex vivo proteolysis of abundant proteins | Include protease inhibitors during blood collection and plasma processing; use enrichment methods specific for intact LMWPs like optimized perchloric acid precipitation [28] [26]. |

| Failure to detect small peptides | Peptides are lost during buffer exchange or desalting | Use magnetic bead-based clean-up protocols which can offer higher recovery for small volumes and low-abundance peptides [24] [25]. |

| Insufficient enrichment of LMF | Incorrect ultrafiltration parameters | Optimize centrifugal force, duration, and temperature. For plasma, a condition of 4000× g for 35 min at 20°C in 10% acetonitrile has been shown to be effective [26] [29]. |

Experimental Protocols

Protocol 1: Centrifugal Ultrafiltration for Enriching the Low-Molecular-Weight Plasma Proteome

This protocol is optimized for enriching proteins and peptides ≤ 25 kDa from human plasma, ideal for biomarker discovery [26] [29].

- Principal Materials: Vivaspin centrifugal ultrafiltration device (20 kDa MWCO, Sartorius), acetonitrile, formic acid, low-protein-binding microcentrifuge tubes.

- Step-by-Step Procedure:

- Sample Preparation: Dilute 100 µL of plasma (e.g., from EDTA-treated blood) with a buffer containing 10% acetonitrile and 0.1% formic acid.

- Loading: Transfer the diluted plasma to the Vivaspin ultrafiltration device.

- Centrifugation: Centrifuge at 4000× g for 35 minutes at 20°C.

- Collection: The filtrate (low-molecular-weight fraction, LMF) is collected into a fresh tube. This fraction can now be processed for 1D-SDS-PAGE and nano-LC-MS/MS analysis.

- Key Tips: The addition of acetonitrile helps reduce membrane fouling and improves the specificity of the separation. Always use the specified membrane type (tangential flow) for optimal recovery [26].

Protocol 2: Combinatorial Peptide Ligand Library (CPLL) Enrichment

CPLL technology is used to compress the dynamic range of proteomes, enriching LAPs while reducing the concentration of abundant proteins [23].

- Principal Materials: Combinatorial peptide ligand library beads (e.g., ProteoMiner), binding buffer (physiological conditions, e.g., PBS), elution buffer (e.g., containing urea, thiourea, and CHAPS).

- Step-by-Step Procedure:

- Equilibration: Wash the CPLL beads with binding buffer.

- Loading: Incubate the complex protein extract (e.g., serum, tissue lysate) with the beads under gentle agitation to allow binding to saturation.

- Washing: Wash the beads extensively to remove unbound and loosely bound proteins.

- Elution: Elute the captured proteins using a strong denaturing eluent. Alternatively, perform on-bead digestion of the captured proteins followed by LC-MS/MS analysis of the resulting peptides [23].

- Key Tips: For maximal LAP enrichment, use a large sample volume to ensure low-abundance species have sufficient opportunity to find and saturate their specific ligands on the beads [23].

Workflow Visualization

LAP and LMWP Enrichment Pathways

Centrifugal Ultrafiltration Workflow

Research Reagent Solutions

Table 3: Essential Reagents and Kits for Enrichment Techniques

| Reagent / Kit | Primary Function | Example Application |

|---|---|---|

| Combinatorial Peptide Library (e.g., ProteoMiner) | Compresses the dynamic range of proteomes by equalizing protein concentrations. | Enrichment of LAPs from diverse samples like human serum, synovial fluid, and plant extracts [23] [25]. |

| Immunoaffinity Depletion Columns (e.g., Seppro IgY14) | Removes a defined set of high-abundance proteins (e.g., 14-60 proteins) via antibody binding. | Rapid depletion of dominant proteins from human serum or plasma to uncover LAPs [23] [25]. |

| Centrifugal Ultrafiltration Devices (e.g., Vivaspin) | Separates and enriches low-molecular-weight components based on size exclusion. | Isolation of the ≤25 kDa fraction from human plasma for biomarker discovery [26] [29]. |

| ENRICH-iST Kit | Paramagnetic bead-based enrichment of LAPs integrated with digestion and purification. | High-throughput, automated preparation of plasma or CSF samples for in-depth proteomic profiling [24]. |

| Protamine Sulfate | Precipitates abundant proteins based on charge, leaving LAPs in solution. | Depletion of storage proteins from legume seed extracts to enhance LAP concentration [25]. |

Performance and Quantitative Analysis

The table below summarizes the key quantitative performance characteristics of the Astral and timsTOF platforms, which are critical for low-abundance proteomic analysis.

| Feature | Orbitrap Astral MS [30] | timsTOF SCP & Systems [31] [32] |

|---|---|---|

| Core Technology | Asymmetric Track Lossless analyzer with high-resolution accurate mass (HRAM) detection [30] | Trapped Ion Mobility Spectrometry (TIMS) coupled with quadrupole time-of-flight (QTOF) [32] |

| Key Acquisition Method | Data Independent Acquisition (DIA) and dynamic DIA (dDIA) [30] | Parallel Accumulation Serial Fragmentation (PASEF) [31] [32] |

| Quantitative Sensitivity | Quantifies 5x more peptides per unit time than previous Orbitrap systems; demonstrated quantification of 5,163 plasma proteins in a single 70-min run [30] | Ultra-sensitive detection optimized for single-cell proteomics and low-abundance cellular proteins [31] |

| Sequencing Speed | Not explicitly stated, but high acquisition rate enables deep proteome coverage in short gradients [30] | >100 Hz MS/MS sequencing speed enabled by PASEF [32] |

| Ion Utilization | Nearly lossless ion transfer for high sensitivity [30] | Near 100% duty cycle for high sensitivity in shotgun proteomics [32] |

Troubleshooting FAQs and Guides

Astral Analyzer Troubleshooting

Q: My experiment is suffering from a high rate of missing values for low-abundance peptides. What steps can I take?

- Verify Sample Preparation: Ensure the use of robust, scalable protocols like protein aggregation capture (PAC) to minimize sample loss. For cell lysates, use lysis buffer with 2% SDS and sonication for efficient extraction [30].

- Optimize LC Gradient: For deep plasma proteome coverage, use a 60-minute or longer gradient on a 110 cm μPAC Neo HPLC column to enhance separation [30].

- Check DIA Parameters: Employ dynamic DIA (dDIA) methods to maximize injection times across the mass range, which improves the detection of low-level signals [30].

- System Suitability Test: Regularly run a complex matrix-matched calibration curve (e.g., SILAC-labeled HeLa digests) to benchmark instrument quantification limits and identify sensitivity drops [30].

Q: How can I improve the depth of my plasma proteome coverage on the Astral system?

- Implement Enrichment Protocols: Use a magnetic bead-based membrane particle enrichment protocol, such as Mag-Net, to deplete high-abundance proteins and enrich for extracellular vesicles and low-abundance targets prior to LC-MS analysis [30].

- Spike-in Internal Standard: Include a retention time calibrant peptide cocktail (e.g., Pierce PRTC) at a consistent concentration (e.g., 50 fmol/μL) to monitor LC and MS performance [30].

timsTOF Troubleshooting

Q: I am not achieving the expected sequencing depth for single-cell proteomics. What should I check?

- Confirm PASEF Settings: Ensure the PASEF method is active. This technology is essential for achieving the >100 Hz sequencing speed and high sensitivity required for low-input samples [31] [32].

- Review Ion Mobility Separation: The TIMS device adds a separation dimension. Verify that the method is optimized for the ion mobility range of your peptide sample to increase peak capacity [32].

- Check Sample Loading: The system is optimized for nano-flow LC. Ensure your sample handling is designed for enhanced ion transfer from low sample amounts [31].

- Data Analysis Pipeline: Export data via MaxQuant to CSV and use compatible software (e.g., Sourcetable, Perseus) that supports the analysis of single-cell protein quantification and cellular heterogeneity data [31].

Q: My data shows inconsistent quantification. What are potential sources?

- Instrument Calibration: Ensure the TIMS and QTOF sections are properly calibrated according to the manufacturer's specifications.

- Data Processing: For differential expression analysis, use workflows designed for the low-input data generated by the timsTOF SCP to ensure accurate quantification [31].

Experimental Protocols for Maximizing Sensitivity

Sample Preparation for Deep Plasma Proteome Analysis (Astral Focus)

This protocol is designed for quantifying over 5,000 plasma proteins and is critical for high-sensitivity research [30].

1. Membrane Particle Enrichment (Mag-Net Protocol)
   - Input: 100 μL of plasma.
   - Inhibitors: Add HALT cocktail (protease and phosphatase inhibitors).
   - Binding Buffer: Mix plasma 1:1 with 100 mM Bis-Tris Propane (pH 6.3), 150 mM NaCl.
   - Beads: Use MagReSyn strong anion exchange (SAX) beads. Equilibrate beads twice in 50 mM Bis-Tris Propane (pH 6.5), 150 mM NaCl.
   - Enrichment: Combine beads with the plasma-Binding Buffer mixture in a 1:4 ratio (beads:starting plasma). Incubate for 45 minutes at room temperature with gentle agitation.
   - Washing: Wash beads three times for 5 minutes each with Equilibration/Wash Buffer (50 mM Bis-Tris Propane, pH 6.5, 150 mM NaCl) with gentle agitation [30].

2. Protein Solubilization, Reduction, and Alkylation
   - Solubilization/Reduction: Solubilize the enriched particles on the beads in 50 mM Tris (pH 8.5), 1% SDS, and 10 mM Tris(2-carboxyethyl)phosphine (TCEP). Add a process control (e.g., 800 ng enolase) at this stage.
   - Alkylation: Alkylate with 15 mM iodoacetamide in the dark for 30 minutes.
   - Quenching: Quench the reaction with 10 mM DTT for 15 minutes [30].

3. Protein Aggregation Capture (PAC) Digestion
   - Precipitation: Adjust the sample to 70% acetonitrile to precipitate proteins onto the bead surface. Incubate for 10 minutes at room temperature.
   - Washing: Wash beads three times in 95% acetonitrile and twice in 70% ethanol.
   - Digestion: Digest with trypsin (20:1 protein:enzyme ratio) in 100 mM ammonium bicarbonate for 1 hour at 47 °C.
   - Quenching & Storage: Quench digestion with 0.5% formic acid. Spike in a retention time calibrant peptide cocktail (to 50 fmol/μL). Lyophilize peptides and store at -80 °C [30].
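The ratios in the protocol above translate into simple volume arithmetic when scaling to a different plasma input. The sketch below assumes that the 1:4 beads:plasma ratio is a volume ratio, that the sample contains no acetonitrile before precipitation, and that volumes are additive; the function names and the example inputs (30 μL sample, 10 μg protein) are illustrative only.

```python
def magnet_volumes(plasma_ul=100.0):
    """Binding buffer and bead volumes implied by the step 1 ratios."""
    if plasma_ul <= 0:
        raise ValueError("plasma volume must be positive")
    return {
        "plasma_ul": plasma_ul,
        "binding_buffer_ul": plasma_ul,   # plasma mixed 1:1 with binding buffer
        "bead_ul": plasma_ul / 4.0,       # 1:4 beads:starting plasma (assumed v/v)
    }


def acetonitrile_to_add_ul(sample_ul, target_fraction=0.70):
    """Volume of 100% acetonitrile needed to reach the target fraction.

    Assumes the sample starts with no acetonitrile and volumes are additive:
    v_acn / (sample + v_acn) = target  =>  v_acn = sample * target / (1 - target)
    """
    if not 0 < target_fraction < 1:
        raise ValueError("target fraction must be between 0 and 1")
    return sample_ul * target_fraction / (1.0 - target_fraction)


def trypsin_ug(protein_ug, protein_to_enzyme=20.0):
    """Trypsin mass for a given protein:enzyme ratio (20:1 in step 3)."""
    return protein_ug / protein_to_enzyme


volumes = magnet_volumes(100.0)
print(volumes)                                  # 100 uL buffer, 25 uL beads
print(round(acetonitrile_to_add_ul(30.0), 1))   # uL of acetonitrile for a 30 uL sample
print(trypsin_ug(10.0))                         # ug trypsin for 10 ug protein
```

Because the protein yield of the enrichment step is not stated in the protocol, the trypsin amount has to be based on a measured or estimated protein quantity.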

Sample Preparation for High-Sensitivity Cellular Proteomics

1. SILAC Labeling for Quantitative Benchmarking
   - Culture: Grow HeLa S3 cells in SILAC DMEM containing either light (L-lysine, L-arginine) or heavy (¹³C₆ ¹⁵N₂ L-lysine, ¹³C₆ ¹⁵N₄ L-arginine) isotopes, supplemented with 10% FBS and GlutaMAX [30].
   - Labeling Efficiency: Harvest cells after 8 doublings at ~85% confluence [30].

2. Cell Lysis and Digestion
   - Lysis: Lyse cell pellets using probe sonication in a buffer containing 2% SDS.
   - Quantification: Determine protein concentration with a BCA assay and dilute lysates to 2 μg/μL in 1% SDS.
   - Reduction/Alkylation: Reduce with 20 mM DTT and alkylate with 40 mM iodoacetamide.
   - PAC Digestion: Dilute samples to 70% acetonitrile and bind to hydroxylated magnetic beads. Wash, then digest with trypsin (1:33 enzyme-to-protein ratio) in 50 mM ammonium bicarbonate for 4 hours at 47 °C [30].
   - Sample Pooling: For calibration curves, combine light and heavy digests to create known ratios (e.g., 0%, 0.5%, 1%, 5%, 10%, 25%, 50%, 70%, 100% light) [30].
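The pooling step above reduces to a simple mixing table. The sketch below assumes the light and heavy digests have been adjusted to the same peptide concentration, so that volume fractions equal mass fractions; the 20 μL total volume per calibration point is an arbitrary illustrative choice.

```python
# Percent-light points listed in the pooling step
LIGHT_PERCENTS = [0, 0.5, 1, 5, 10, 25, 50, 70, 100]


def mixing_plan(total_ul=20.0, percents=LIGHT_PERCENTS):
    """Return (percent_light, light_ul, heavy_ul) for each calibration point.

    Assumes light and heavy digests are at equal peptide concentration.
    """
    plan = []
    for pct in percents:
        light_ul = total_ul * pct / 100.0
        heavy_ul = total_ul - light_ul
        plan.append((pct, round(light_ul, 3), round(heavy_ul, 3)))
    return plan


plan = mixing_plan()
for pct, light_ul, heavy_ul in plan:
    print(f"{pct:>5}% light: {light_ul:6.2f} uL light + {heavy_ul:6.2f} uL heavy")
```

The lowest non-zero points need very small light volumes (0.1 μL at 0.5% of 20 μL), so in practice an intermediate dilution of the light digest or a larger total volume may be preferable.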

Liquid Chromatography-Mass Spectrometry Methods

Astral DIA Method

- LC System: Vanquish Neo UHPLC with a 110 cm μPac Neo HPLC column.

- Gradient: 24-minute to 60-minute gradient from 4% to 45% B at 750 nL/min (Mobile phase A: 0.1% FA in water; B: 80% ACN, 0.1% FA) [30].

- MS Acquisition: Data-independent acquisition mode with normalized collision energy of 25%. For benchmarking, MS1 (Orbitrap) at 30,000 resolution every 0.6 s; MS/MS (Orbitrap) at 15,000 resolution with a maximum injection time of 23 ms. The Astral analyzer itself provides high-resolution accurate mass detection [30].

timsTOF PASEF Method

- Core Principle: The PASEF method synchronizes the quadrupole with the elution of mobility-separated ions from the TIMS tunnel. The quadrupole isolates a specific ion species during its elution and immediately shifts to the next precursor, enabling high-speed sequencing [32].

- Benefit: This process allows parent and fragment spectra to be aligned by mobility values, increasing confidence in identifications and the sensitivity for low-abundance peptides [32].

Essential Research Reagent Solutions

The table below lists key reagents and materials used in sensitive proteomic workflows.

| Reagent/Material | Function | Example Use Case |
|---|---|---|
| MagReSyn Hydroxyl Beads [30] | Magnetic beads for protein aggregation capture (PAC), enabling efficient digestion and low sample loss. | Protein clean-up and digestion for cell lysates and plasma samples [30]. |
| MagReSyn SAX Beads [30] | Strong anion exchange beads for enriching membrane particles and extracellular vesicles from plasma. | Depletion of high-abundance proteins and enrichment of low-abundance targets in plasma proteomics [30]. |
| SILAC DMEM Kit [30] | Metabolic labeling for accurate, multiplexed quantitative proteomics. | Creating internal standards for quantitative benchmarking (e.g., calibration curves) [30]. |
| Pierce Retention Time Calibrant (PRTC) [30] | A cocktail of synthetic peptides that elute across the LC gradient to monitor system performance. | Spiked into every sample to monitor LC retention time stability and MS sensitivity [30]. |
| Trypsin [30] | Protease for digesting proteins into peptides for LC-MS/MS analysis. | Standard protein digestion following reduction and alkylation [30]. |

Workflow Visualization

Astral Analyzer DIA Quantitative Proteomics Workflow

timsTOF PASEF Method for Enhanced Sensitivity

The Chip-Tip protocol represents a significant breakthrough in single-cell proteomics (SCP), enabling researchers to identify over 5,000 proteins from individual cells, a substantial increase over the 1,000-2,000 protein groups typically identified previously [33]. This nearly lossless workflow addresses the core challenges that have historically limited SCP: low protein identification numbers, low sensitivity, and difficult sample preparation [34]. By minimizing sample loss through integrated design, the method provides the sensitivity required for low-abundance proteomic analysis, allowing researchers to directly investigate cellular heterogeneity, disease mechanisms, and developmental processes with unprecedented depth [34] [33].

The Chip-Tip workflow integrates specialized instrumentation and methods to achieve high-sensitivity analysis. The following diagram illustrates the complete experimental process, from single-cell preparation to data analysis.

The workflow's performance is quantified in the table below, demonstrating its exceptional sensitivity and coverage across different sample sizes.

Table 1: Quantitative Performance Metrics of the Chip-Tip Workflow [33]

| Sample Type | Median Proteins Identified | Median Peptides Identified | Protein Sequence Coverage | Key Instrumentation |
|---|---|---|---|---|
| Single HeLa Cell | 5,204 | 41,700 | 12.9% | Evosep One LC, Orbitrap Astral, nDIA |
| 20-Cell Sample | >7,000 | 98,054 | 25.0% | Evosep One LC, Orbitrap Astral, nDIA |

The Scientist's Toolkit: Essential Research Reagents and Materials

Successful implementation of the Chip-Tip protocol requires the following specialized reagents and equipment, which form the core of this standardized workflow.

Table 2: Key Research Reagent Solutions for the Chip-Tip Workflow [34] [33] [35]

| Item Name | Function/Application |
|---|---|
| cellenONE X1 Platform | Automated platform for precise single-cell isolation and dispensing into the proteoCHIP. |
| proteoCHIP EVO 96 | A chip designed for parallel processing of 96 single cells in nanoliter volumes, minimizing sample loss. |
| Evotip | Disposable trap column that captures the sample directly from the proteoCHIP, eliminating transfer losses. |
| Evosep One LC System | Liquid chromatography system using optimized "Whisper" flow gradients for high-sensitivity separation. |
| Aurora Elite XT UHPLC Column | UHPLC column (e.g., 15 cm × 75 µm, C18) that provides high-resolution peptide separation. |
| Orbitrap Astral Mass Spectrometer | High-sensitivity mass spectrometer for detecting low-abundance peptides from single cells. |
| Narrow-Window DIA (nDIA) | Data-independent acquisition method (e.g., 4 Th windows) that boosts identification rates in SCP. |

Troubleshooting Common Experimental Issues

FAQ 1: Low Protein Identification Rates

Q: My experiment is identifying significantly fewer than 5,000 proteins per cell. What are the primary factors I should investigate?

- A: The following decision diagram outlines a systematic approach to diagnosing the most common causes of low identification rates: the database search strategy, the MS instrument method, and sample preparation.

Solution Details:

- Carrier Proteome Effect: The database search strategy is critical. When using Spectronaut, perform the search alongside matched higher-quantity samples (e.g., a 1-ng digest or a 20-cell sample). When using DIA-NN, ensure the "match-between-runs" (MBR) feature is enabled in conjunction with these carrier samples. This strategy can increase identifications from ~4,000 to over 5,000 proteins [33].

- MS Instrument Method: The use of narrow-window DIA (nDIA) on the Orbitrap Astral is a key differentiator. The recommended method uses 4-Th DIA windows with a 6-ms maximum injection time (4Th6ms). Wider windows or longer injection times can increase chemical noise and reduce identifications [33].

- Sample Preparation: The workflow is designed to be nearly lossless. Adhere strictly to the protocol and avoid manual pipetting steps, which can lead to sample loss. The direct transfer from the proteoCHIP to the Evotip is a crucial step for maintaining high sensitivity [35].
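To see what the 4Th6ms setting implies for acquisition speed, the window scheme can be laid out explicitly. The sketch below is a rough estimate under stated assumptions: the 400-800 m/z precursor range is a hypothetical placeholder (the range used in [33] is not given here), windows are assumed not to overlap, and MS1 scans and instrument overhead are ignored.

```python
import math


def ndia_windows(mz_start, mz_end, width_th=4.0):
    """Non-overlapping isolation windows of fixed width covering [mz_start, mz_end]."""
    if mz_end <= mz_start or width_th <= 0:
        raise ValueError("need mz_end > mz_start and a positive window width")
    n = math.ceil((mz_end - mz_start) / width_th)
    return [
        (mz_start + i * width_th, min(mz_start + (i + 1) * width_th, mz_end))
        for i in range(n)
    ]


def max_ms2_accumulation_ms(n_windows, max_injection_ms=6.0):
    """Upper bound on MS2 ion accumulation time per cycle.

    Every window is assumed to use its full maximum injection time;
    MS1 scans and scan overhead are not included.
    """
    return n_windows * max_injection_ms


# Assumed precursor range, for illustration only
windows = ndia_windows(400.0, 800.0, width_th=4.0)
cycle_ms = max_ms2_accumulation_ms(len(windows), max_injection_ms=6.0)
print(len(windows), "windows,", cycle_ms, "ms per cycle at most")
```

Under these assumptions, doubling the window width halves the number of windows per cycle but widens each isolation window, which is the trade-off described for the MS instrument method above.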

FAQ 2: Challenges with Data Analysis and Search Strategies

Q: What is the impact of different database search tools and strategies on my final protein count, and how do I choose?

- A: The choice of search software and strategy significantly impacts protein identification numbers in SCP. A systematic evaluation of Spectronaut and DIA-NN reveals that incorporating a "carrier proteome" (data from larger samples like 20-cell pools) substantially boosts identifications in single-cell samples [33]. While both tools show a large overlap in identifications, Spectronaut may provide marginally higher protein quantification correlations (R = 0.91) between technical replicates [33]. For the most reliable error-rate estimation, employ an entrapment database approach during your search to control for false discoveries [33].
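An entrapment check can be summarized with a short calculation. The sketch below uses two estimators that appear in the entrapment literature, a simple lower bound and a "combined" estimate that scales entrapment hits by the database size ratio; whether [33] used either of these exact forms is not stated here, and the example counts are hypothetical.

```python
def entrapment_fdp(n_target, n_entrapment, r=1.0):
    """Estimate the false discovery proportion from entrapment hits.

    n_target:     reported identifications from the real (target) database
    n_entrapment: reported identifications from the entrapment database
    r:            size ratio of entrapment database to target database

    Returns a lower bound and a combined estimate, both as fractions.
    """
    total = n_target + n_entrapment
    if total == 0:
        raise ValueError("no identifications to evaluate")
    if r <= 0:
        raise ValueError("r must be positive")
    lower_bound = n_entrapment / total
    # Each entrapment hit is taken to imply roughly 1/r unseen false target hits
    combined = n_entrapment * (1.0 + 1.0 / r) / total
    return {"lower_bound": lower_bound, "combined": combined}


# Hypothetical counts for illustration only
estimate = entrapment_fdp(n_target=5000, n_entrapment=25, r=1.0)
print({name: round(value, 4) for name, value in estimate.items()})
```

If the combined estimate clearly exceeds the FDR threshold set in the search software, the reported protein count is likely inflated and the search settings deserve a second look.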

FAQ 3: Applying the Protocol to Complex Sample Types

Q: Can the Chip-Tip workflow be applied to solid tissues or complex 3D models like spheroids?

- A: Yes, but it requires an optimized initial dissociation step. The developers successfully applied the method to cancer cell spheroids by using a newly developed tissue dissociation buffer that enables effective single-cell disaggregation without compromising protein integrity [33]. For tissues, establish a robust dissociation protocol that yields a high-viability single-cell suspension before proceeding with the standard Chip-Tip dispensing and preparation steps.

FAQ 4: Detecting Post-Translational Modifications (PTMs)

Q: Does the high sensitivity of this workflow allow for the detection of PTMs like phosphorylation in single cells?

- A: Yes. A major advantage of the Chip-Tip protocol is that it allows direct detection of PTMs, including phosphorylation and glycosylation, in single cells without specific enrichment protocols [33]. The deep coverage at the peptide level (over 40,000 peptides per cell) enables the identification of these modified peptides, opening new avenues for studying cell signaling and regulatory mechanisms at single-cell resolution.

Selecting the appropriate data acquisition mode is a critical decision in quantitative proteomics, directly impacting the depth, accuracy, and reproducibility of your results, especially when targeting low-abundance proteins. This guide provides a detailed comparison of two prominent techniques: Label-Free Quantification using Data-Independent Acquisition (DIA-LFQ) and Tandem Mass Tag multiplexing using Data-Dependent Acquisition (DDA-TMT). We focus on their application in improving sensitivity for low-abundance proteomic analysis, helping researchers, scientists, and drug development professionals make an informed choice based on their specific project goals, sample type, and resource constraints.

Frequently Asked Questions (FAQs)

1. Which method is more sensitive for detecting low-abundance proteins, and why?