Improving Loop Modeling Accuracy in Homology Models: A Guide for Biomedical Researchers


Abstract

Accurate loop modeling remains a critical challenge in homology modeling, directly impacting the reliability of protein structures used in drug design and functional analysis. This article provides a comprehensive guide for researchers and drug development professionals, exploring the foundational principles behind loop modeling difficulties and presenting a detailed examination of current methodologies, from data-based fragment assembly to advanced machine learning techniques. We offer practical troubleshooting strategies for refining models and a rigorous framework for validation and comparative assessment, synthesizing the latest advances in the field to empower scientists in building more trustworthy structural models for their biomedical research.

Why Loops Are the Critical Challenge in Protein Structure Prediction

The Critical Role of Loops in Protein Function and Drug Binding

Frequently Asked Questions (FAQs)

FAQ 1: Why is loop prediction so critical in structure-based drug design?

Flexible loop regions are often directly involved in ligand binding and molecular recognition. If a loop can contact the ligand, modeling it in the wrong configuration can mislead drug design studies. Because loops are flexible, they can adopt alternative configurations upon ligand binding, a process described by the conformational selection model: the apo (ligand-free) protein transiently samples higher-energy conformations, including holo-like states, and the ligand selectively binds to and stabilizes a holo-like conformation [1].

FAQ 2: What are the main challenges in accurately modeling loop regions?

Accurately modeling loops remains difficult for several key reasons [1]:

- Inherent Flexibility: Loops often lack regular secondary structure, making their conformation hard to predict.

- Scoring Function Limitations: No single scoring function consistently ranks native-like loop configurations accurately across different protein systems. The optimal choice often depends on the specific system being studied.

- Ligand-Induced Changes: Modeling how loop conformations change upon ligand binding adds a layer of complexity.

- Data Gaps: Due to their flexibility, loop regions are frequently missing from experimentally solved protein structures, creating gaps in homology models.

FAQ 3: How can I improve the ranking of native-like loop configurations in my predictions?

Physics-based scoring functions can help. Studies comparing scoring methods have found that single-snapshot Molecular Mechanics/Generalized Born Surface Area (MM/GBSA) scoring often ranks native-like loop configurations better than statistically based functions such as DFIRE. Re-ranking predicted loops in the presence of the bound ligand can also yield more accurate results in some systems [1].
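One common way to compare scoring functions on a loop decoy ensemble is to ask how native-like the top-ranked decoy is under each function. The sketch below assumes that every decoy already has a loop RMSD to the native structure and a score from each function, with lower scores treated as better. The helper names, decoy names, and numbers are hypothetical toy inputs chosen for illustration, not results from the cited study.

```python
from typing import Dict


def top1_rmsd(rmsd: Dict[str, float], scores: Dict[str, float]) -> float:
    """RMSD of the decoy that a scoring function ranks first (lowest score)."""
    best_decoy = min(scores, key=scores.get)
    return rmsd[best_decoy]


def best_achievable_rmsd(rmsd: Dict[str, float]) -> float:
    """Lowest RMSD present in the ensemble: the ceiling set by sampling."""
    return min(rmsd.values())


# Hypothetical three-decoy ensemble (RMSD in angstroms; energies in arbitrary units).
loop_rmsd = {"decoy_a": 0.9, "decoy_b": 2.7, "decoy_c": 4.1}
dfire = {"decoy_a": -41.0, "decoy_b": -45.5, "decoy_c": -39.2}
mmgbsa = {"decoy_a": -310.4, "decoy_b": -298.7, "decoy_c": -301.9}

print("DFIRE top-1 RMSD:", top1_rmsd(loop_rmsd, dfire))
print("MM/GBSA top-1 RMSD:", top1_rmsd(loop_rmsd, mmgbsa))
print("Best in ensemble:", best_achievable_rmsd(loop_rmsd))
```

Comparing the top-1 RMSD with the best RMSD in the ensemble separates scoring failures (a good decoy exists but is not ranked first) from sampling failures (no good decoy was generated).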

FAQ 4: Are there new computational methods that help with modeling flexible loops in specific conformations?

Yes, recent advances allow the integration of experimental data or biological hypotheses to guide loop and protein conformation. Distance-AF is one such method built upon AlphaFold2. It allows users to specify distance constraints between residues, which are incorporated into the loss function during structure prediction. This is particularly useful for [2]:

- Fitting protein structures into cryo-electron microscopy density maps.

- Modeling specific functional states (e.g., active vs. inactive conformations of GPCRs).

- Generating ensembles that satisfy NMR-derived distance restraints.
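To make the idea of distance constraints concrete, the sketch below scores predicted Cα coordinates against user-specified residue-pair distances with a simple mean squared-deviation penalty. This is a generic illustration under stated assumptions: the exact loss term that Distance-AF adds during AlphaFold2 prediction may take a different form, and the function name, residue indices, and target distances are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Coord = Tuple[float, float, float]


def distance_restraint_loss(
    ca_coords: List[Coord],
    restraints: Dict[Tuple[int, int], float],
) -> float:
    """Mean squared deviation between predicted and target Ca-Ca distances.

    ca_coords: one (x, y, z) tuple per residue, in angstroms.
    restraints: maps a (residue_i, residue_j) index pair to a target distance.
    This generic penalty is an assumption, not Distance-AF's published loss.
    """
    total = 0.0
    for (i, j), target in restraints.items():
        predicted = math.dist(ca_coords[i], ca_coords[j])
        total += (predicted - target) ** 2
    return total / len(restraints)


# Hypothetical 4-residue model and two restraints (e.g. from cross-linking data).
model = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0), (11.4, 0.0, 0.0)]
restraints = {(0, 3): 8.0, (1, 2): 3.8}
print(distance_restraint_loss(model, restraints))
```

When a term of this kind is minimized alongside the usual structure-prediction loss, conformations that violate the restraints are penalized, which steers the model toward the hypothesized state.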

Troubleshooting Guides

Issue 1: Low Accuracy in Predicted Loop Conformations

Problem: Your homology model has a loop region that is predicted with low accuracy, and you suspect it is impacting your analysis of a binding site.

Solution:

- Generate an Ensemble: Use a specialized loop prediction program like CorLps to generate an ensemble of hundreds to thousands of possible loop conformations [1].

- Apply Multiple Scoring Functions: Do not rely on a single scoring function. The original DFIRE score in CorLps may not rank the best loop highly. Re-rank the top loop decoys using alternative functions like MM/GBSA or the optimized MM/GBSA-dsr [1].

- Consider the Ligand: If the ligand is known, re-score the loop ensembles in its presence to identify conformations that are complementary to it.

- Incorporate Experimental Data: If available, use experimental constraints (e.g., from cross-linking mass spectrometry, NMR, or cryo-EM maps) with tools like Distance-AF to guide the prediction towards a biologically relevant conformation [2].

Issue 2: Modeling Loop-Ligand Interactions

Problem: You are studying a protein-ligand complex where a flexible loop is known to interact with the ligand, but your static model doesn't capture this interaction well.

Solution:

- Adopt an Ensemble Docking Approach: Instead of docking into a single rigid structure, use an ensemble of low-energy loop conformations generated from the apo protein structure. This helps mimic the conformational selection mechanism of binding [1].

- Explore De Novo Loop Design: For creating entirely new binders, consider designs that incorporate structured, buttressed loops. Recent methods allow for the de novo design of proteins with multiple long loops (9-14 residues) that are stabilized by extensive hydrogen-bonding networks. These designed loops can form one side of an extended binding pocket for peptides or other ligands [3].

- Identify "Hot Loops": Use comprehensive analysis tools like LoopFinder to identify interface loops that contribute significantly to binding energy (so-called "hot loops"). Mimicking these critical loops with synthetic macrocycles or constrained peptides is a promising strategy for inhibitor design [4].

Experimental Protocols

Protocol 1: Loop Prediction and Ranking Using CorLps

This protocol outlines the steps for predicting and selecting loop conformations for a region of interest [1].

1. Input Preparation:

- Obtain your protein structure (e.g., a homology model) and identify the loop residues to be predicted.

- Remove all co-factors, water molecules, and co-crystallized ligands from the structure file.

2. Loop Conformation Generation:

- Use the CorLps program, which employs the ab initio predictor loopy.

- Initialize the run to generate a large ensemble of loop conformations (e.g., 10,000).

- Output the top several hundred energetically favorable loops.

3. Clustering and Uniqueness:

- Use a Quality Threshold (QT) clustering algorithm to remove redundant conformations.

- Set a maximum cluster diameter (e.g., 1-2 Å) to produce a set of several hundred unique loop conformations.

4. Side-Chain Optimization:

- Repack the side chains of residues within a 6 Å zone around the predicted loop region using a tool like SCAP.

5. Initial Ranking:

- Rank the top 100 predicted loop configurations using the DFIRE scoring function. This is your DFIRE ensemble.

6. Advanced Re-ranking:

- Re-rank the DFIRE ensemble using more sophisticated scoring functions like MM/GBSA or Optimized MM/GBSA-dsr.

- Perform this re-ranking both with and without the bound ligand present to identify the best candidate.
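Step 3 relies on Quality Threshold (QT) clustering. The plain-Python sketch below operates on a precomputed pairwise RMSD matrix, which is assumed to come from your loop ensemble; it illustrates the algorithm and is not the implementation used inside CorLps. The function name and the example matrix are hypothetical.

```python
from typing import List, Sequence


def qt_cluster(dist: Sequence[Sequence[float]], max_diameter: float) -> List[List[int]]:
    """Quality Threshold (QT) clustering on a precomputed distance matrix.

    dist[i][j] is a symmetric pairwise distance, e.g. backbone RMSD (angstroms)
    between loop conformations i and j. Every returned cluster has a diameter
    (largest intra-cluster distance) <= max_diameter. Clusters are returned in
    the order they are extracted, i.e. largest first.
    """
    remaining = set(range(len(dist)))
    clusters: List[List[int]] = []
    while remaining:
        best: List[int] = []
        # Build a candidate cluster around every unassigned point.
        for seed in sorted(remaining):
            candidate = [seed]
            pool = remaining - {seed}
            while pool:
                # Largest distance from each pool member to the current members;
                # adding p keeps the diameter within bounds iff this is small enough.
                spread = {p: max(dist[p][q] for q in candidate) for p in pool}
                nxt = min(spread, key=spread.get)
                if spread[nxt] > max_diameter:
                    break
                candidate.append(nxt)
                pool.remove(nxt)
            if len(candidate) > len(best):
                best = candidate
        # Keep the largest candidate, then repeat on the points left over.
        clusters.append(sorted(best))
        remaining -= set(best)
    return clusters


# Hypothetical pairwise RMSD matrix (angstroms) for five loop conformations.
rmsd_matrix = [
    [0.0, 0.5, 0.8, 3.0, 3.2],
    [0.5, 0.0, 0.6, 2.9, 3.1],
    [0.8, 0.6, 0.0, 3.3, 3.0],
    [3.0, 2.9, 3.3, 0.0, 0.7],
    [3.2, 3.1, 3.0, 0.7, 0.0],
]
print(qt_cluster(rmsd_matrix, max_diameter=1.5))
```

With a 1.5 Å diameter, conformations 0-2 and 3-4 fall into two clusters. Picking one member per cluster (for instance the lowest-energy one, an assumption here) then yields the non-redundant set of loops. Note that QT clustering evaluates a candidate cluster around every point, so its cost grows quickly with ensemble size.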

Protocol 2: Identifying Critical Binding Loops with LoopFinder

This protocol describes how to use LoopFinder to systematically identify loops that are critical for protein-protein interactions, which can be prime targets for inhibitor design [4].

1. Data Collection:

- Acquire multi-chain protein structures from the PDB, filtered by resolution (e.g., ≤ 4 Å) and sequence identity (e.g., < 90%).

2. Loop Identification:

- Run LoopFinder with customizable parameters. A standard setup includes:

- Loop length: 4 to 8 consecutive amino acids.

- Interface proximity: At least 80% of loop residues must have an atom within 6.5 Å of the binding partner.

- Loop geometry: A distance cutoff between Cα atoms of the loop termini (e.g., 6.2 Å) to ensure a true loop conformation and exclude extended secondary structures.

3. Energy Analysis:

- Perform computational alanine scanning on all identified loops using Rosetta or PyRosetta.

- Calculate the predicted ΔΔG for each residue upon mutation to alanine.

4. "Hot Loop" Selection:

- Identify "hot loops" by applying filters such as:

- Loops containing two consecutive hot spot residues (ΔΔG ≥ 1 kcal/mol).

- Loops with at least three hot spot residues.

- Loops where the average ΔΔG per residue is greater than 1 kcal/mol.
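The hot-loop filters in step 4 can be applied directly to the per-residue ΔΔG values from alanine scanning. The sketch below treats the three filters as alternatives (a loop qualifies if any one holds), which is our reading of the list above rather than a confirmed detail of LoopFinder; the function name and example values are hypothetical.

```python
from typing import Sequence

HOT_SPOT_DDG = 1.0  # kcal/mol; hot-spot threshold used in the protocol above


def is_hot_loop(ddg: Sequence[float], threshold: float = HOT_SPOT_DDG) -> bool:
    """Apply the example hot-loop filters to per-residue alanine-scan ddG values.

    Assumption: a loop qualifies if ANY of the following holds:
      1. two consecutive residues are hot spots (ddG >= threshold);
      2. at least three residues are hot spots;
      3. the average ddG per residue exceeds the threshold.
    """
    if not ddg:
        raise ValueError("ddG list is empty")
    hot = [value >= threshold for value in ddg]
    consecutive = any(a and b for a, b in zip(hot, hot[1:]))
    at_least_three = sum(hot) >= 3
    high_average = sum(ddg) / len(ddg) > threshold
    return consecutive or at_least_three or high_average


# Hypothetical ddG profiles (kcal/mol) for two 5-residue interface loops.
print(is_hot_loop([0.2, 1.3, 1.1, 0.0, 0.4]))  # two consecutive hot spots
print(is_hot_loop([1.2, 0.1, 1.5, 0.3, 0.0]))  # two isolated hot spots, low average
```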

Data Presentation

Table 1: Comparison of Scoring Functions for Loop Prediction

This table summarizes the performance of different scoring functions evaluated for ranking native-like loop configurations in a scientific study [1].

| Scoring Function | Type | Key Principle | Reported Performance in Loop Ranking |
|---|---|---|---|
| DFIRE | Statistical | Knowledge-based potential derived from known protein structures. | Did not accurately rank native-like loops in tested systems. |
| MM/GBSA | Physics-based | Molecular Mechanics combined with Generalized Born and Surface Area solvation. | Provided the best ranking of native-like loop configurations in general. |
| Optimized MM/GBSA-dsr | Physics-based | MM/GBSA optimized for decoy structure refinement. | Performance was system-dependent; not consistently superior to standard MM/GBSA. |

Table 2: Research Reagent Solutions for Loop Modeling

This table lists key computational tools and resources essential for researching protein loops.

| Tool/Resource Name | Type | Primary Function in Loop Research |
|---|---|---|
| CorLps [1] | Software Suite | Performs ab initio loop prediction and generates ensembles of loop conformations. |
| Rosetta/PyRosetta [4] | Software Suite | Used for computational alanine scanning and energy calculations to identify critical "hot spot" residues in loops. |
| AlphaFold2 & Variants [5] [2] | AI Structure Prediction | Provides highly accurate initial models; variants like Distance-AF can incorporate constraints for modeling specific loop conformations. |
| LoopFinder [4] | Analysis Algorithm | Comprehensively identifies and analyzes peptide loops at protein-protein interfaces within the entire PDB. |
| PDB | Database | Source of experimental protein structures for analysis and use as templates in homology modeling [1] [6]. |
| ATLAS, GPCRmd | Specialized Database | Databases of molecular dynamics trajectories for analyzing loop and protein dynamics [6]. |

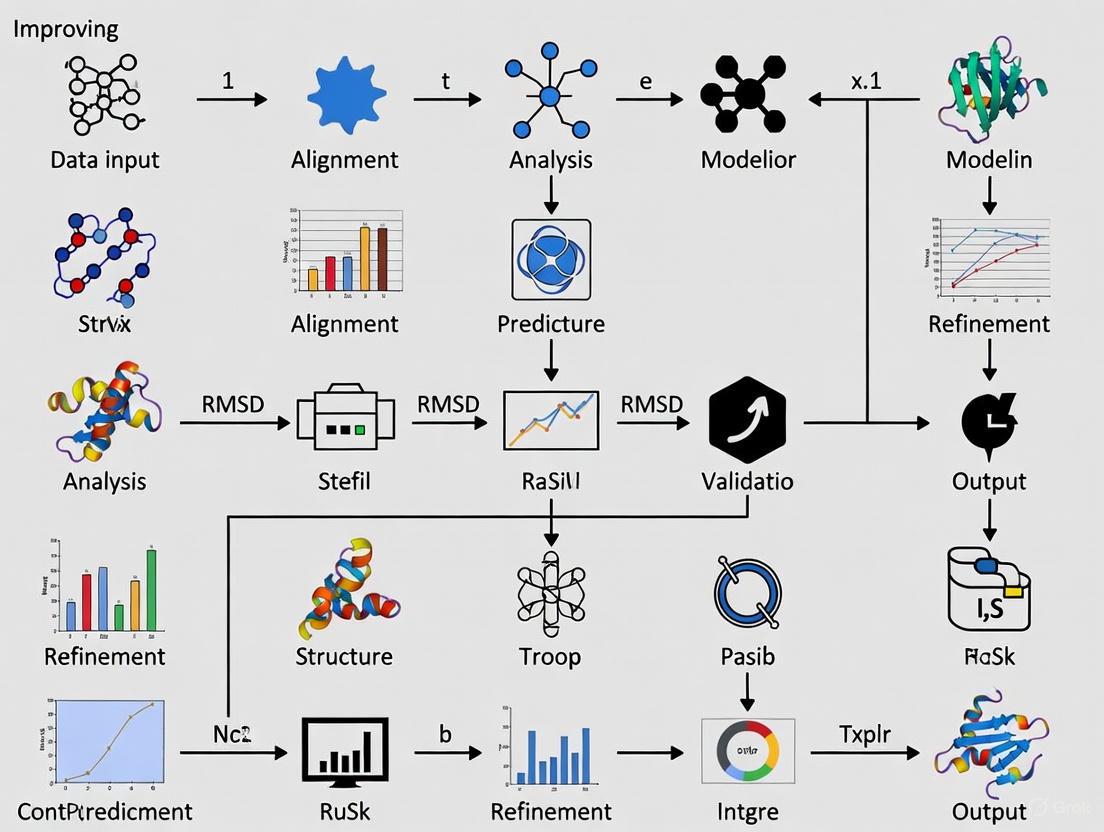

Workflow and Relationship Visualizations

Loop Prediction and Validation Workflow

Scoring Function Decision Guide

In protein structure prediction, the "conformational sampling problem" refers to the significant challenge of efficiently exploring the vast number of possible three-dimensional structures that flexible protein regions can adopt. This problem is particularly acute in loop modeling, where even short loops can exhibit remarkable flexibility, making them difficult to predict accurately in homology models. Loops often play critical functional roles in ligand binding, catalysis, and molecular recognition, making their accurate modeling essential for reliable structure-based drug design [7] [8].

The core of the problem lies in the astronomical number of possible conformations a protein loop can sample. With each residue having multiple torsion angles that can rotate, the conformational space grows exponentially with loop length. Computational methods must navigate this high-dimensional energy landscape to identify biologically relevant structures from among countless possibilities [9]. While molecular dynamics (MD) simulations can, in principle, characterize conformational states and transitions, the energy barriers between states can be high, preventing efficient sampling without substantial computational resources [9].
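A back-of-the-envelope count shows why the search space explodes. Assume, purely for illustration, that each loop residue's backbone can adopt one of k coarse torsion-angle states; an n-residue loop then has N(n) conformations:

```latex
N(n) = k^{n}, \qquad
k = 3:\quad N(8) = 3^{8} = 6\,561, \qquad N(12) = 3^{12} = 531\,441 .
```

Under this assumption, adding four residues multiplies the count by k^4 = 81, and practical backbone discretizations often use more than three states per residue, so exhaustive enumeration quickly becomes impractical for long loops.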

Frequently Asked Questions (FAQs)

Q1: Why is loop modeling particularly challenging in homology modeling?

Loop modeling represents a "mini protein folding problem" under geometric constraints. The challenge arises from the high flexibility of loops compared to structured secondary elements, the exponential increase in possible conformations with loop length, and the difficulty in accurately scoring which conformations are biologically relevant. Even in high-identity homology modeling, loops often differ significantly between homologous proteins, necessitating ab initio prediction methods [7] [8].

Q2: What are the key limitations in current conformational sampling methods?

The primary limitations include:

- Computational expense: Comprehensive sampling requires significant resources, especially for longer loops (>8 residues)

- Force field accuracy: Inaccurate energy functions may favor non-native conformations

- Sampling barriers: High energy barriers between conformational states impede efficient exploration

- Timescale gap: Biologically relevant conformational changes often occur on timescales (microseconds to milliseconds) that are challenging for standard molecular dynamics [7] [9]

Q3: How can I improve sampling for long loops (≥12 residues)?

For longer loops, consider:

- Implementing enhanced sampling techniques like replica-exchange molecular dynamics

- Using specialized loop modeling software like PLOP or LoopBuilder that combine extensive sampling with sophisticated scoring

- Incorporating experimental restraints from NMR or cryo-EM when available

- Applying collective variable biasing to focus sampling on relevant conformational subspaces [7] [9] [10]
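Replica-exchange MD helps long loops escape local minima by periodically attempting to swap configurations between simulations running at neighboring temperatures. The sketch below shows the standard Metropolis criterion for such a swap; the function name and the example energies and temperatures are hypothetical, and production MD engines implement this step internally.

```python
import math

K_B = 0.0019872041  # Boltzmann constant in kcal/(mol*K)


def exchange_probability(e_i: float, e_j: float, t_i: float, t_j: float) -> float:
    """Metropolis acceptance probability for swapping two temperature replicas.

    e_i, e_j: potential energies (kcal/mol) of the configurations currently
    simulated at temperatures t_i and t_j (kelvin).
    Returns min(1, exp[(beta_i - beta_j) * (e_i - e_j)]).
    """
    beta_i = 1.0 / (K_B * t_i)
    beta_j = 1.0 / (K_B * t_j)
    delta = (beta_i - beta_j) * (e_i - e_j)
    # Check the sign first so that exp() is only evaluated for delta < 0.
    return 1.0 if delta >= 0 else math.exp(delta)


# Hypothetical replicas at 300 K and 310 K.
print(exchange_probability(-100.0, -105.0, 300.0, 310.0))  # colder replica holds higher energy
print(exchange_probability(-105.0, -100.0, 300.0, 310.0))  # swap accepted only sometimes
```

A swap that moves the lower-energy configuration to the colder replica is always accepted; the reverse is accepted with a probability that shrinks as the temperature gap or energy difference grows, which is why replica temperatures are spaced closely enough for their energy distributions to overlap.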

Q4: What is the relationship between conformational sampling and scoring functions?

Sampling and scoring are intrinsically linked: extensive sampling is useless without accurate scoring to identify native-like conformations, and even a perfect scoring function cannot compensate for sampling that misses near-native conformations. The most successful protocols therefore apply hierarchical filtering, using fast statistical potentials first and more computationally expensive all-atom force fields for final ranking [7].

Troubleshooting Common Sampling Issues

Table 1: Common Conformational Sampling Problems and Solutions

| Problem | Possible Causes | Recommended Solutions |
|---|---|---|
| Sampling stuck in local minima | High energy barriers; Inadequate sampling algorithm | Implement replica-exchange MD; Use torsion angle dynamics; Apply biasing methods [9] [10] |
| Poor loop closure | Insufficient closure algorithms; Steric clashes | Employ advanced closure methods like Direct Tweak; Check for loop-protein interactions during closure [7] |
| Long computation times for sampling | Inefficient sampling method; Too many degrees of freedom | Use hierarchical filtering with DFIRE potential; Implement internal coordinate methods; Focus sampling on relevant collective variables [7] [11] [9] |
| Accurate sampling but poor final selection | Inadequate scoring function; Insufficient final refinement | Apply molecular mechanics force field minimization (e.g., OPLS); Use multiple scoring functions; Include solvation effects [7] |
| Difficulty sampling specific loop conformations | Limited template diversity; Incorrect initial alignment | Blend sequence- and structure-based alignments; Utilize multiple templates; Account for crystal contacts when available [7] [12] |

Table 2: Typical Loop Modeling Accuracy by Method and Loop Length

| Loop Length | LOOPY (Å) | RAPPER (Å) | Rosetta (Å) | PLOP (Å) | PLOP II (with crystal contacts) (Å) |
|---|---|---|---|---|---|
| 8 residues | 1.45 | 2.28 | 1.45 | 0.84 | NA |
| 10 residues | 2.21 | 3.48 | NA | 1.22 | NA |
| 12 residues | 3.42 | 4.99 | 3.62 | 2.28 | 1.15 |

Table 3: Performance Comparison of Enhanced Sampling Methods

| Method | Sampling Approach | Best Application | Computational Efficiency |
|---|---|---|---|
| Torsion Angle MD (GNEIMO) | Freezes bond lengths/angles; samples torsion space | Domain motions; conformational transitions | High (allows 5 fs timesteps) [10] |
| Replica-Exchange MD | Parallel simulations at different temperatures | Overcoming energy barriers; exploring substates | Moderate to high (parallelizable) [10] |
| Collective Variable Biasing | Bias potential along defined coordinates | Focused sampling of specific transitions | Variable (depends on CV quality) [9] |
| Metadynamics | History-dependent bias potential | Free energy calculations; barrier crossing | Moderate (requires careful parameterization) [9] |

Experimental Protocols for Enhanced Conformational Sampling

LoopBuilder Protocol for High-Accuracy Loop Modeling

The LoopBuilder protocol employs a hierarchical approach combining extensive sampling with sophisticated filtering and refinement:

Initial Sampling Phase:

- Use the Direct Tweak algorithm to generate sterically feasible backbone conformations

- Check for interactions between the loop and the rest of the protein during closure

- Generate an ensemble of at least 10,000 initial conformations for an 8-residue loop

Statistical Potential Filtering:

- Apply the DFIRE statistical potential and keep the best-scoring subset (typically 5-10%), i.e., the conformations predicted to be closest to the native structure

- This filtering step significantly reduces computational time for subsequent refinement

All-Atom Refinement:

- Perform energy minimization using the OPLS/SGB-NP force field

- Include generalized Born solvation terms

- Rank final conformations based on force field energy

This protocol has been shown to achieve prediction accuracies of 0.84 Å RMSD for 8-residue loops and maintains reasonable accuracy (<2.3 Å) for loops up to 12 residues [7].
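The filter-then-refine logic of this protocol can be expressed generically. In the sketch below, the two scoring callables are placeholders you would supply (for example, a DFIRE evaluation and an all-atom force-field energy after minimization); the function name, its arguments, and the toy scores are hypothetical. Lower scores are treated as better at both stages.

```python
from typing import Callable, List, Sequence, TypeVar

Decoy = TypeVar("Decoy")


def hierarchical_rank(
    decoys: Sequence[Decoy],
    cheap_score: Callable[[Decoy], float],
    expensive_score: Callable[[Decoy], float],
    keep_fraction: float = 0.05,
) -> List[Decoy]:
    """Two-stage ranking: filter with a fast score, re-rank survivors with a slow one.

    keep_fraction mirrors the 5-10% statistical-potential filter described above.
    The expensive score is evaluated only on the surviving decoys.
    """
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    n_keep = max(1, round(len(decoys) * keep_fraction))
    # Stage 1: fast statistical potential on every decoy.
    survivors = sorted(decoys, key=cheap_score)[:n_keep]
    # Stage 2: expensive all-atom energy on the survivors only.
    return sorted(survivors, key=expensive_score)


# Toy example: 200 "decoys" represented by integers, with made-up scores.
decoys = list(range(200))
ranked = hierarchical_rank(
    decoys,
    cheap_score=lambda d: abs(d - 50),
    expensive_score=lambda d: (d - 47) ** 2,
    keep_fraction=0.05,
)
print(ranked[:3])
```

The design choice is purely about cost: the expensive scorer runs on 5% of the ensemble instead of all of it, at the risk of discarding a near-native decoy that the fast potential happens to score poorly.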

Torsion Angle Molecular Dynamics with GNEIMO

The GNEIMO (Generalized Newton-Euler Inverse Mass Operator) method enhances sampling by focusing on torsional degrees of freedom:

System Preparation:

- Partition protein into rigid clusters connected by hinges

- Freeze bond lengths and bond angles via holonomic constraints

- Use implicit solvation model (Generalized Born) with interior dielectric constant of 4.0

Simulation Parameters:

- Integration timestep: 5 fs (compared to 1-2 fs for Cartesian MD)

- Temperature: 310 K maintained with Nosé-Hoover thermostat

- Nonbonded forces: switched off at 20 Å cutoff

- For enhanced sampling: Combine with replica-exchange (REXMD)

Analysis:

- Monitor torsional angle distributions

- Calculate RMSD to known experimental structures

- Identify conformational substates using cluster analysis

This approach has successfully sampled conformational transitions in flexible proteins like fasciculin and calmodulin that are rarely observed in conventional Cartesian MD simulations [10].
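RMSD appears throughout these protocols as the accuracy measure. The minimal sketch below computes RMSD for coordinates that are already in a common frame, which is typical for loop evaluation, where model and reference are first superimposed on the protein framework and the loop atoms are then compared in place. Whole-structure comparisons additionally need an optimal superposition (e.g., the Kabsch algorithm), which is omitted here; the function name and example coordinates are hypothetical.

```python
import math
from typing import Sequence, Tuple

Coord = Tuple[float, float, float]


def rmsd(model: Sequence[Coord], reference: Sequence[Coord]) -> float:
    """RMSD between two equally long, already-superimposed coordinate lists.

    No fitting is performed here; superimpose on the framework beforehand.
    """
    if len(model) != len(reference) or not model:
        raise ValueError("coordinate lists must be non-empty and equal in length")
    squared = sum(math.dist(a, b) ** 2 for a, b in zip(model, reference))
    return math.sqrt(squared / len(model))


# Hypothetical two-atom loop fragment shifted by 1 angstrom along z.
model_loop = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
native_loop = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0)]
print(rmsd(model_loop, native_loop))
```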

Multi-Template Hybridization for Low-Identity Homology Modeling

For modeling loops when template identity is below 40%:

Template Selection and Alignment:

- Select multiple templates (typically 3-5) covering different conformational states

- Create structure-based alignments focusing on conserved structural motifs

- Manually curate loop alignments based on Cα to Cβ vectors and secondary structure

Hybrid Model Building:

- Superimpose all templates in a common global reference frame

- Randomly swap template segments using Monte Carlo sampling

- Simultaneously incorporate peptide fragments from PDB-derived library

Loop Refinement:

- Close loops simultaneously during template swapping

- Apply specialized loop scoring terms

- Use iterative refinement cycles with increasing spatial restraints

This protocol has enabled accurate modeling of GPCRs from templates sharing as little as 20% sequence identity with the target [12].

Essential Research Reagent Solutions

Table 4: Key Software Tools for Conformational Sampling

| Tool Name | Primary Function | Application in Loop Modeling |
|---|---|---|
| PLOP | Systematic dihedral-angle build-up with OPLS force field | High-accuracy loop prediction; Long loops with crystal contacts [7] |
| LoopBuilder | Hierarchical sampling, filtering, and refinement | Balanced accuracy and efficiency for medium-length loops [7] |
| GNEIMO | Torsion angle molecular dynamics | Enhanced sampling of conformational transitions; Domain motions [10] |
| Rosetta | Fragment assembly with Monte Carlo | Multiple template hybridization; Low-identity homology modeling [12] |
| MDAnalysis | Trajectory analysis and toolkit development | Analysis of sampling completeness; Custom analysis scripts [13] |
| Modeller | Comparative modeling with spatial restraints | Standard homology modeling; Multiple template approaches [12] |

Table 5: Force Fields and Scoring Functions for Loop Modeling

| Scoring Method | Type | Best Use Case |
|---|---|---|
| DFIRE | Statistical potential | Initial filtering of loop conformations [7] |
| OPLS/SGB-NP | All-atom force field with implicit solvation | Final ranking and refinement [7] |
| AMBER ff99SB | All-atom force field | Cartesian and torsion dynamics simulations [10] |
| Rosetta Scoring Function | Mixed statistical/physico-chemical | Template hybridization and fragment assembly [12] |
| RAPDF | Statistical potential | Rapid screening of loop ensembles [7] |

Workflow and Pathway Visualizations

(Figures not reproduced: Loop Modeling Sampling Workflow; Enhanced Sampling Method Classification; Sampling Problem Troubleshooting Guide.)

Frequently Asked Questions (FAQs)

1. What is a "template gap" in homology modeling? A template gap occurs when regions of your target sequence, often loops or flexible domains, have no structurally similar or homologous counterpart in the available template structures. Gaps are most problematic in low-homology regions, where low sequence identity leads to alignment errors and inaccurate models [14] [15].

2. Why are loops, especially the H3 loop in antibodies, particularly hard to model? Loops are often surface-exposed and flexible, and they vary greatly in length, sequence, and structure. The H3 loop in antibodies is especially challenging because, unlike the other five complementarity-determining region (CDR) loops, its conformation is not captured by a robust canonical-structure model. Its high variability makes suitable templates hard to find, and ab initio methods are hampered by an incomplete understanding of the physicochemical principles governing its structure [16].

3. When should I consider using multiple templates? Using multiple templates can be beneficial when no single template provides good coverage for all regions of your target protein. It can help model different domains from their best structural representatives and extend model coverage. However, use it judiciously, as automatic inclusion of multiple templates does not guarantee improvement and can sometimes produce models worse than the best single-template model [14].

4. How can I improve the accuracy of my loop models? For critical loops, especially in applications like antibody engineering, consider specialized methods. One effective approach uses machine learning (e.g., Random Forest) to select structural templates based not only on sequence similarity but also on structural features and the likelihood of specific interactions between the loop and the rest of the protein framework [16].

5. What are the key steps in a standard homology modeling workflow? The classical steps are: (1) Template identification and selection, (2) Target-Template alignment, (3) Model building, (4) Loop modeling, (5) Side-chain modeling, (6) Model optimization, and (7) Model validation [15].

Troubleshooting Guides

Problem: Poor Model Quality in Specific Loop Regions

Symptoms: High root-mean-square deviation (RMSD) in loop regions when comparing your model to a later-determined experimental structure; steric clashes or unusual torsion angles in loops.

Solution: Implement a specialized loop modeling protocol.

Experimental Protocol: Machine Learning-Based Template Selection for Loops

This methodology is adapted from a successful approach for antibody H3 loops and can be generalized for other difficult loops [16].

Create a Non-Redundant Loop Structure Library

- Action: Scan the PDB for all proteins (or a specific subset like antibodies) using tools like HMMER with profile Hidden Markov Models (HMMs) [16] [15].

- Quality Control: Filter structures for resolution (e.g., better than 3.0 Å) using a server like PISCES [16].

- Clustering: Cluster the remaining loops based on sequence identity (e.g., 90% threshold) using a tool like CD-HIT. Select one representative structure from each cluster to create a non-redundant dataset [16].
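The clustering step follows the greedy idea behind CD-HIT: sequences are processed from longest to shortest, and each one either joins the first representative it matches above the identity threshold or starts a new cluster. The sketch below is a simplified stand-in, not CD-HIT itself: it assumes identity is computed without alignment and only between loops of equal length, and the function names and loop sequences are hypothetical.

```python
from typing import Dict, List


def identity(a: str, b: str) -> float:
    """Fraction of identical positions between two equal-length loop sequences.

    Simplifying assumption: loops of different length are never clustered
    together (identity 0.0), so no alignment is needed.
    """
    if len(a) != len(b) or not a:
        return 0.0
    return sum(x == y for x, y in zip(a, b)) / len(a)


def greedy_cluster(seqs: Dict[str, str], threshold: float = 0.9) -> Dict[str, List[str]]:
    """Greedy identity clustering; keys of the result are cluster representatives."""
    clusters: Dict[str, List[str]] = {}
    # Longest sequences are considered first and become representatives.
    for name in sorted(seqs, key=lambda n: len(seqs[n]), reverse=True):
        for rep in clusters:
            if identity(seqs[name], seqs[rep]) >= threshold:
                clusters[rep].append(name)
                break
        else:
            # No representative is similar enough: start a new cluster.
            clusters[name] = [name]
    return clusters


loops = {"loopA": "GSDKTYNGQE", "loopB": "GSDKTYNGQA", "loopC": "PLRWSE"}
print(greedy_cluster(loops, threshold=0.9))
```

Taking the representative (the dictionary key) of each cluster gives the non-redundant dataset.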

Feature Extraction for Machine Learning

- Action: For a target loop, calculate features against all loops in your library. Key features include:

- Sequence similarity scores.

- Loop length compatibility.

- Presence of key residue interactions (e.g., with the framework).

- Environment-specific substitution scores [16].


Train a Random Forest Model for Prediction

- Action: Use the R package randomForest to train a regression model. The input features (from the feature-extraction step) are used to predict the 3D structural similarity (e.g., TM-score) between loop pairs [16].

- Output: The model will predict the most structurally similar loop(s) from your library for your target sequence.

Build and Rank Models

- Action: Use the top-ranked template loops to build your loop model.

- Validation: Rank the resulting models using a scoring function that evaluates intramolecular interactions. The quality estimate provided by the Random Forest model can also be a reliable indicator [16].

Problem: Deciding When to Use Multiple Templates

Symptoms: Your single-template model has regions with no structural coordinates ("gaps") or regions that are known to be inaccurate.

Solution: A strategic multi-template approach.

Experimental Protocol: Strategic Multi-Template Modeling

Generate High-Quality Input Alignments

Build and Evaluate Single-Template Models

Build Multi-Template Models

- Action: Feed the alignments from Step 1 into a modeling program capable of using multiple templates (e.g., Modeller). Limit the number of templates to 2 or 3, as performance often degrades with more templates [14].

Compare and Select the Final Model

- Action: Critically compare the multi-template model to your best single-template model.

- Core Residue Analysis: Compare the quality only for the residues that were present in your best single-template model. This removes the "trivial" improvement from simply extending the model length and shows if the multi-template approach genuinely improved the core structure [14].

- Final Selection: Use a model quality assessment program (MQAP) such as ProQ to rank the models. If the multi-template model scores significantly higher, select it; otherwise, the single-template model may be your best option [14].
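The core-residue comparison can be illustrated with the TM-score formula, using the length-dependent scale d0 = 1.24·(L − 15)^(1/3) − 1.8. This sketch assumes a fixed superposition (the real TM-score program also searches for the superposition that maximizes the score), represents residues missing from a model by an infinite distance so that they contribute zero, clamps d0 at 0.5 Å for short chains, and normalizes a core-only score by the number of core residues; all of these are assumptions of the sketch, and the function name and distances are hypothetical.

```python
import math
from typing import Iterable, Optional, Sequence


def tm_score(distances: Sequence[float], residues: Optional[Iterable[int]] = None) -> float:
    """TM-score for a FIXED superposition from per-residue model-native distances.

    distances: one entry per target residue (angstroms); use math.inf for
    residues the model does not cover, so they contribute 0.
    residues: optional subset of indices to score (e.g. core residues);
    normalization then uses the subset size (an assumption of this sketch).
    """
    idx = sorted(residues) if residues is not None else list(range(len(distances)))
    n = len(idx)
    if n == 0:
        raise ValueError("no residues to score")
    # Length-dependent distance scale, floored at 0.5 A for short chains.
    d0 = 1.24 * (n - 15) ** (1.0 / 3.0) - 1.8 if n > 15 else 0.5
    d0 = max(d0, 0.5)
    return sum(1.0 / (1.0 + (distances[i] / d0) ** 2) for i in idx) / n


single = [1.0] * 30 + [math.inf] * 10  # single-template model covers residues 0-29
multi = [1.2] * 30 + [3.0] * 10        # multi-template model covers all 40 residues
core = range(30)                       # residues present in the single-template model

print(tm_score(single), tm_score(multi))
print(tm_score(single, core), tm_score(multi, core))
```

With these toy distances, the multi-template model scores higher over all residues only because it covers ten extra residues; restricted to the 30 core residues, the single-template model scores higher, which is exactly the "trivial improvement" the core-residue analysis is meant to expose.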

(Figure not reproduced: workflow diagram summarizing the decision process for addressing template gaps.)

Quantitative Data on Multi-Template Modeling

The table below summarizes findings from a systematic study on the impact of using multiple templates on model quality, measured by TM-score (a measure of structural similarity where 1.0 is a perfect match) [14].

Table 1: Impact of Multiple Templates on Model Quality (TM-score)

| Number of Templates | Modeling Program | Average TM-Score Change (All Residues) | Average TM-Score Change (Core Residues Only) | Notes |
|---|---|---|---|---|
| 1 | Nest | Baseline | Baseline | Slightly better single-template models than Modeller. |
| 2-3 | Modeller | +0.01 | Slight Improvement | Optimal range for Modeller. Most improved cases produced by Modeller. |
| >3 | Modeller, Nest | Gradual Decrease | Gets Worse | Lower-ranked alignments often of poor quality. |
| 3 | Pfrag (Average) | Largest Improvement | Gets Worse | - |
| All Available | Pfrag (Shotgun) | Continuous Improvement | Gets Worse | Less peak improvement than Modeller/Nest. |

Research Reagent Solutions

Table 2: Key Software Tools for Addressing Template Gaps

| Tool Name | Function | Application in Addressing Template Gaps |
|---|---|---|
| HHblits [15] | HMM-HMM-based lightning-fast iterative sequence search | Generates more accurate target-template alignments for low-homology regions compared to sequence-based methods. |
| Modeller [14] [15] | Homology modeling | A standard program that can utilize multiple templates for model building; performs best with 2-3 templates. |
| Rosetta Antibody [16] | Antibody-specific structure prediction | Combines template selection with ab initio CDR H3 loop modeling; a benchmark for antibody loop prediction. |
| FREAD [16] | Fragment-based loop prediction | Identifies best loop fragments using local sequence and geometric matches from a database. |
| Random Forest (R package) [16] | Machine Learning Algorithm | Can be trained to select the best structural templates for difficult loops based on sequence and structural features. |
| ProQ [14] | Model Quality Assessment Program | Ranks models and identifies the best one from a set of predictions; crucial for selecting between single and multi-template models. |
| TM-score [14] [16] | Structural Comparison Metric | Used to evaluate model quality; weights small residue deviations more heavily than large ones, so unlike RMSD it is not dominated by a few poorly modeled regions and gives a more global measure of similarity. |

Frequently Asked Questions (FAQs) and Troubleshooting Guide

This technical support resource addresses common challenges researchers face in protein structure modeling, with a specific focus on improving loop modeling accuracy within homology modeling projects.

The accuracy of loop modeling is highly dependent on the length of the loop and the methodological approach used. A major source of error is selecting an inappropriate method for a given loop length. The table below summarizes a quantitative performance comparison of different methods across various loop lengths, based on a multi-method study [17].

Table 1: Loop Modeling Method Performance by Loop Length

| Loop Length (residues) | Recommended Method | Relative Accuracy (RMSD) | Key Rationale |
|---|---|---|---|
| 4 - 8 | MODELLER | Lower RMSD | Superior for short loops via spatial restraints and energy minimization [17]. |
| 9 - 12 | Hybrid (MODELLER+CABS) | Intermediate RMSD | Combination improves accuracy over individual methods [17]. |
| 13 - 25 | CABS or ROSETTA | Higher RMSD (2-6 Å) | Coarse-grained de novo modeling more effective for conformational search of long loops [17]. |

FAQ 2: Why are my secondary structure predictions often inaccurate for specific regions, and could this indicate a problem?

Inconsistent or inaccurate secondary structure predictions can be a significant troubleshooting clue. While standard predictors like JPred, PSIPRED, and SPIDER2 are highly accurate for most single-structure proteins, they are designed to output one best guess. If a protein region can adopt two different folds (a Fold-Switching Region or FSR), the prediction will conflict with at least one of the experimental structures [18].

- Quantitative Evidence: One study found that secondary structure prediction accuracies (Q3 scores) for known FSRs were significantly lower (mean Q3 < 0.70) than for non-fold-switching regions (mean Q3 > 0.85) [18].

- Troubleshooting Action: If you observe a region with consistently poor secondary structure prediction across different algorithms, consult the literature for evidence of metamorphic behavior or intrinsic disorder. This region may require modeling in multiple conformations [18].
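Q3 is simply the fraction of residues whose predicted three-state secondary structure (helix H, strand E, coil C) matches the reference assignment. The sketch below computes it and shows why a fold-switching region must score poorly against at least one experimental structure. Reference strings are typically derived from DSSP assignments reduced to three states (the reduction is not shown), and the function name and strings are hypothetical.

```python
def q3(predicted: str, reference: str) -> float:
    """Three-state secondary-structure accuracy (Q3).

    Both strings use one character per residue: H (helix), E (strand), C (coil).
    """
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("strings must be non-empty and equal in length")
    allowed = set("HEC")
    if not set(predicted) <= allowed or not set(reference) <= allowed:
        raise ValueError("use only H, E and C")
    matches = sum(p == r for p, r in zip(predicted, reference))
    return matches / len(predicted)


# Hypothetical fold-switching region: helical in one structure, a strand in the other.
prediction = "CHHHHHHC"
fold_a = "CHHHHHHC"
fold_b = "CEEEEEEC"
print(q3(prediction, fold_a), q3(prediction, fold_b))
```

A single best-guess prediction can match at most one of the two folds, so a region that scores well against one structure and poorly against another is a candidate FSR.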

FAQ 3: How can I validate and refine side-chain packing in my model?

Inaccurate side-chain packing is a common issue that can affect the analysis of binding sites and protein interactions.

- Use of Advanced Suites: Leverage all-atom structure prediction tools like AlphaFold, which explicitly models the 3D coordinates of all heavy atoms, including side chains, with high accuracy [19]. Similarly, RoseTTAFold uses a "three-track" neural network that simultaneously reasons about sequence, distance, and 3D structure, leading to improved side-chain packing [20].

- Energy Minimization: Use refinement suites like Rosetta to repack side chains and perform energy minimization. This is a standard step in the third stage of classical homology modeling to improve the physical realism and local geometry of the model [21] [17].

Experimental Protocols for Loop Modeling

Protocol 1: Multi-Method Loop Modeling Workflow

This protocol is adapted from a comparative study that recommends methods based on loop length [17].

Objective: To accurately model a loop region of a protein using the most effective method for its length.

Materials:

- Template structure with the missing loop region.

- Target sequence.

- Software: MODELLER, ROSETTA, and/or CABS.

- Computing cluster or high-performance computer.

Methodology:

- Loop Definition: Identify the start and end residues of the loop to be modeled and its length.

- Method Selection: Refer to Table 1 above to choose the appropriate modeling method.

- Model Generation:

  - For short loops (4-8 residues): Use MODELLER with default parameters to generate multiple models (e.g., 500). Select the top model based on the Discrete Optimized Protein Energy (DOPE) score [17].

  - For medium loops (9-12 residues): Generate an initial set of models with MODELLER. Select the top 10 ranked models and use them as multiple templates for a subsequent CABS modeling run to refine the loop conformation [17].

  - For long loops (13-25 residues): Use a coarse-grained de novo method like CABS or the loop modeling protocols in ROSETTA for extensive conformational sampling [17].

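- Validation: Because the template lacks the loop, use a reference structure in which the loop is resolved, if one is available. Superimpose the model onto it using the framework residues, then calculate the Root-Mean-Square Deviation (RMSD) of the loop's Cα atoms. Assess steric clashes and the plausibility of side-chain rotamers.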
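The loop-definition and method-selection steps amount to a lookup on loop length. This Python sketch transcribes the length bands of Table 1. The function names and example residue ranges are hypothetical. Loops outside 4-25 residues are rejected because the table does not cover them.

```python
def loop_length(start: int, end: int) -> int:
    """Number of residues in an inclusive loop range, e.g. 101-111 -> 11."""
    if end < start:
        raise ValueError("end must not precede start")
    return end - start + 1


def recommend_loop_method(length: int) -> str:
    """Transcribe the loop-length bands of Table 1 into a lookup."""
    if 4 <= length <= 8:
        return "MODELLER: many models (e.g. 500), pick the best DOPE score"
    if 9 <= length <= 12:
        return "Hybrid: top-10 MODELLER models as templates for a CABS run"
    if 13 <= length <= 25:
        return "CABS or ROSETTA loop modeling with extensive sampling"
    raise ValueError(f"{length} residues lies outside the 4-25 range covered by Table 1")


# Hypothetical loop definitions (start, end) in target numbering.
for start, end in [(45, 50), (101, 111), (200, 219)]:
    n = loop_length(start, end)
    print(f"{start}-{end} ({n} residues): {recommend_loop_method(n)}")
```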

The following diagram illustrates this multi-method workflow:

Protocol 2: Identifying Fold-Switching Regions via Secondary Structure Prediction Inconsistencies

Objective: To identify protein regions that may adopt alternative secondary structures, which could complicate modeling efforts [18].

Materials:

- Protein amino acid sequence.

- Access to secondary structure prediction servers (e.g., JPred, PSIPRED, SPIDER2).

Methodology:

- Prediction: Run the target protein sequence through at least three different secondary structure prediction servers (e.g., JPred, PSIPRED, SPIDER2).

- Comparison: Align all prediction outputs and the secondary structure from your experimental template or reference structure.

- Analysis: Identify regions with significant discrepancies. Pay particular attention to HE (helix-to-strand) and EC (strand-to-coil) errors, as these are strong indicators of potential fold-switching [18].

- Interpretation: A protein region with a consensus Q3 accuracy score below 0.60 from multiple predictors is a strong candidate for being a Fold-Switching Region and warrants further literature investigation [18].
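The comparison and analysis steps can be sketched in Python. Two interpretations here are assumptions:

- An error type is written as the reference state followed by the predicted state, so "HE" means a reference helix predicted as strand.
- Consensus Q3 is computed over a sliding window. Its width (7 residues here) is an arbitrary illustrative choice.

The sequences are made up.

```python
from collections import Counter


def error_pairs(reference: str, predicted: str) -> Counter:
    """Tally mismatches as reference state + predicted state, e.g. 'HE' or 'EC'."""
    return Counter(r + p for r, p in zip(reference, predicted) if r != p)


def low_consensus_windows(reference, predictions, window=7, threshold=0.60):
    """Return (start, end) slices whose Q3, averaged over all predictors, is below threshold."""
    flagged = []
    for start in range(len(reference) - window + 1):
        end = start + window
        ref_win = reference[start:end]
        scores = [
            sum(r == p for r, p in zip(ref_win, pred[start:end])) / window
            for pred in predictions
        ]
        if sum(scores) / len(scores) < threshold:
            flagged.append((start, end))
    return flagged


# Hypothetical reference (from the template) and three predictor outputs.
reference = "CCHHHHHHCCEEEECC"
predictions = ["CCHHEEEECCCCEECC", "CCHHHEEECCCEEECC", "CCHHHHHHCCEEEECC"]

totals = Counter()
for pred in predictions:
    totals.update(error_pairs(reference, pred))
print("error types:", dict(totals))
print("low-consensus windows:", low_consensus_windows(reference, predictions))
```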

The Scientist's Toolkit: Key Research Reagents and Software

Table 2: Essential Computational Tools for Structure Modeling and Analysis

| Tool Name | Type | Primary Function in Modeling | Relevance to Loop/Inaccuracy Research |
|---|---|---|---|
| MODELLER [17] | Software Package | Comparative homology modeling by satisfaction of spatial restraints. | Method of choice for short loop modeling (≤8 residues) [17]. |
| ROSETTA [21] [17] | Software Suite | De novo structure prediction, loop modeling, and side-chain refinement. | Effective for long loop modeling and high-resolution refinement of side-chain packing [21] [17]. |
| CABS [17] | Software Tool | Coarse-grained de novo modeling for protein structure and dynamics. | Recommended for modeling long, challenging loops (≥13 residues) [17]. |
| AlphaFold [19] | Deep Learning Network | End-to-end 3D coordinate prediction from sequence. | Provides highly accurate backbone and side-chain models; useful as a reference or for de novo modeling [19]. |
| RoseTTAFold [20] | Deep Learning Network | Three-track neural network for integrated sequence-distance-structure prediction. | Accurate modeling of protein structures and complexes; accessible via the Robetta server [20] [22]. |
| Bio3D [23] | R Package | Analysis of ensembles of structures, PCA, and dynamics. | Comparative analysis of multiple models/conformers to assess variability and stability [23]. |

The logical relationships and data flow between these key tools in a structural bioinformatics pipeline can be visualized as follows:

The Impact of Loop Length on Prediction Difficulty and Accuracy

Frequently Asked Questions

Q1: Why are longer loops generally more difficult to predict accurately? Longer loops possess greater conformational flexibility and a larger number of degrees of freedom. This results in a more complex energy landscape with many local minima, making it challenging to identify the single, native conformation. Statistical analyses of loop banks reveal that prediction accuracy, measured by Root-Mean-Square Deviation (RMSD), systematically decreases as loop length increases [24].

Q2: What is the quantitative relationship between loop length and prediction error? Based on exhaustive analyses of loops from protein structures, the average RMSD between predicted and native loop structures shows a clear correlation with loop length. The following table summarizes the expected accuracy for canonical loop modeling approaches [24]:

| Loop Length (residues) | Average Prediction RMSD (Å) |
|---|---|
| 3 | 1.1 |
| 4 | ~1.5 |
| 5 | ~2.1 |
| 6 | ~2.7 |
| 7 | ~3.2 |
| 8 | 3.8 |
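To put a rough number on the trend, an ordinary least-squares line can be fitted to the table values. This is only an illustration. Several entries are approximate (~), and the fit covers only 3-8 residues, so it should not be extrapolated to longer loops.

```python
# Loop length (residues) vs. average prediction RMSD (Å), transcribed from the table above.
lengths = [3, 4, 5, 6, 7, 8]
rmsd = [1.1, 1.5, 2.1, 2.7, 3.2, 3.8]


def least_squares(x, y):
    """Slope and intercept of the ordinary least-squares line y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


slope, intercept = least_squares(lengths, rmsd)
print(f"slope = {slope:.2f} Å per residue, intercept = {intercept:.2f} Å")
```

With these values the slope is about 0.55 Å per additional residue. Over this range, each extra loop residue adds roughly half an ångström of expected error.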

Q3: Which amino acids are over-represented in loops and why? Loop sequences are not random. Statistical analysis of loop banks shows significant over-representation of specific amino acids: Glycine (most abundant, especially in short loops), Proline, Asparagine, Serine, and Aspartate [24]. Glycine's flexibility (lacks a side chain) and Proline's rigidity (restricts backbone torsion angles) are particularly important for loop structure and nucleation.

Q4: How do modern deep learning methods like AlphaFold2 handle CDR-H3 loops in antibodies? The Complementarity Determining Region H3 (CDR-H3) loop in antibodies is notoriously diverse and difficult to predict. Deep learning methods have made significant strides. Specialized tools like H3-OPT, which combines AlphaFold2 with protein language models, have achieved an average RMSD of 2.24 Å for CDR-H3 backbone atoms against experimentally determined structures, outperforming other computational methods [25]. The Ibex model further advances this by explicitly predicting both bound (holo) and unbound (apo) conformations from a single sequence [26].

Q5: How does loop length affect the stability of non-canonical structures like G-Quadruplexes? For RNA G-Quadruplexes, thermodynamic stability can be modulated by loop length. Biophysical studies using UV melting and circular dichroism spectroscopy have systematically investigated this relationship, finding that stability is strongly influenced by the length of the loops connecting the G-tetrad stacks [27].

Troubleshooting Guides

Issue: Poor Long Loop Prediction Accuracy

Problem: Your homology model has a long loop (e.g., >8 residues) that is poorly modeled, with high RMSD or steric clashes.

Solutions:

- Utilize Deep Learning Models: For the best accuracy, use state-of-the-art structure prediction tools like AlphaFold2, AlphaFold3, or ESMFold. For antibody-specific loops, employ specialized tools like H3-OPT [25], IgFold [25], or Ibex [26].

- Leverage Advanced Template Servers: Use web servers like Phyre2.2, which incorporate deep learning. Phyre2.2 can automatically identify the most suitable AlphaFold2 model from databases to use as a template for your query sequence, often improving loop regions [28].

- Consider Conformational States: If you are modeling an antibody for drug design and suspect induced fit upon binding, use a tool like Ibex. It allows you to specify whether you want to predict the apo (unbound) or holo (bound) conformation, which can be critical for accurately modeling flexible CDR-H3 loops [26].

Issue: Loop Closure Failures

Problem: The modeled loop does not connect properly to the main protein framework, resulting in broken backbone chains or unrealistic bond geometries.

Solutions:

- Fragment-Based Assembly: Follow protocols like that in Phyre2.2, which uses a library of fragments (2-15 residues long) from the PDB. The algorithm searches for fragments that match the loop sequence and can be geometrically melded onto the framework residues flanking the loop. The final model is achieved through energy minimization to ensure proper closure [28].

- Energy Minimization and Refinement: After initial loop building, always perform all-atom energy minimization with restraints on the stable framework regions. This allows the loop and its connection points to relax into a sterically favorable and chemically reasonable configuration [24] [28].
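A simple loop-closure diagnostic is to check every peptide bond across the rebuilt segment and its junctions with the framework. This Python sketch flags consecutive residues whose C(i)-N(i+1) distance strays from the ideal peptide bond length of about 1.33 Å. Two details are assumptions for illustration: the 0.2 Å tolerance, and the input layout (a residue number plus a dict of backbone atom coordinates).

```python
import math

PEPTIDE_C_N = 1.33  # approximate ideal peptide C-N bond length, Å


def chain_breaks(backbone, tolerance=0.2):
    """Return (res_i, res_j, distance) for consecutive residues with a distorted C(i)-N(i+1) bond.

    backbone: list of (residue_number, {"N": (x, y, z), "C": (x, y, z)}) in chain order.
    """
    breaks = []
    for (res_i, atoms_i), (res_j, atoms_j) in zip(backbone, backbone[1:]):
        d = math.dist(atoms_i["C"], atoms_j["N"])
        if abs(d - PEPTIDE_C_N) > tolerance:
            breaks.append((res_i, res_j, round(d, 2)))
    return breaks


# Toy backbone: residues 10-11 are properly bonded, 11-12 are not.
backbone = [
    (10, {"N": (0.0, 0.0, 0.0), "C": (1.0, 0.0, 0.0)}),
    (11, {"N": (2.33, 0.0, 0.0), "C": (3.33, 0.0, 0.0)}),
    (12, {"N": (6.0, 0.0, 0.0), "C": (7.0, 0.0, 0.0)}),
]
print(chain_breaks(backbone))
```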

Experimental Protocols & Data

Protocol: Benchmarking Loop Prediction Accuracy

This protocol is used in the development and evaluation of tools like H3-OPT and Ibex [25] [26].

- Dataset Curation: Compile a high-quality, non-redundant set of protein structures (e.g., from the PDB) with high-resolution X-ray crystal structures (<2.5 Å). For antibody-specific benchmarks, use datasets from SAbDab.

- Structure Prediction: Run the loop modeling or structure prediction algorithm of interest on all sequences in the benchmark dataset.

- Structural Alignment: Superimpose the predicted model onto the experimental (native) structure using the backbone atoms of the framework regions (everything except the loop being assessed).

- RMSD Calculation: Using the framework alignment from the previous step, calculate the Root-Mean-Square Deviation (RMSD) over the loop region only, on Cα atoms or on all backbone heavy atoms. This quantifies the local accuracy of the loop prediction.

- Statistical Analysis: Report the average RMSD and standard deviation across the entire dataset, and stratify results by loop length or type (e.g., CDR-H1, H2, H3).
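The alignment and RMSD steps can be sketched with NumPy using the Kabsch algorithm. The model is superimposed on framework Cα atoms only, and deviation is then measured over the loop without re-fitting. The function names are hypothetical. The synthetic data (a rigidly moved copy of a random structure, with the loop shifted by exactly 1 Å) only shows that the procedure recovers a known answer.

```python
import numpy as np


def kabsch(mobile: np.ndarray, target: np.ndarray):
    """Rotation R and translation t that best map mobile (N x 3) onto target (N x 3)."""
    mob_center, tgt_center = mobile.mean(axis=0), target.mean(axis=0)
    h = (mobile - mob_center).T @ (target - tgt_center)  # 3 x 3 covariance matrix
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))  # -1 would indicate a reflection; correct it
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    t = tgt_center - mob_center @ r.T
    return r, t


def loop_rmsd(model_ca, native_ca, framework_idx, loop_idx) -> float:
    """Superimpose on framework Cα atoms only, then measure RMSD over loop Cα atoms."""
    r, t = kabsch(model_ca[framework_idx], native_ca[framework_idx])
    moved = model_ca @ r.T + t
    diff = moved[loop_idx] - native_ca[loop_idx]
    return float(np.sqrt((diff ** 2).sum(axis=1).mean()))


# Synthetic check: the model is a rotated, translated copy of the native structure
# whose loop Cα atoms are additionally shifted by exactly 1 Å.
rng = np.random.default_rng(0)
native = rng.normal(scale=10.0, size=(30, 3))
theta = np.deg2rad(40.0)
rot = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                [np.sin(theta), np.cos(theta), 0.0],
                [0.0, 0.0, 1.0]])
model = native @ rot.T + np.array([5.0, -3.0, 2.0])
loop = np.arange(12, 20)
framework = np.setdiff1d(np.arange(30), loop)
model[loop] += np.array([1.0, 0.0, 0.0])
print(f"loop RMSD = {loop_rmsd(model, native, framework, loop):.3f} Å")
```

Fitting on the framework rather than on the loop is the key choice. A loop-only superposition would hide a loop that is internally correct but misplaced relative to the rest of the protein.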

Quantitative Benchmarks of Advanced Models

The table below summarizes the performance of various state-of-the-art models on antibody CDR-H3 loops, demonstrating the progress brought by deep learning. All values are RMSD in Ångströms (Å) [26].

| Model Type | Model Name | CDR-H3 Loop RMSD (Å) - Antibodies | CDR-H3 Loop RMSD (Å) - Nanobodies |
|---|---|---|---|
| General Protein | ESMFold | 3.15 | 3.60 |
| Antibody-Specific | ABodyBuilder3 | 2.86 | 3.31 |
| General Biomolecular | Chai-1 | 2.65 | 3.76 |
| General Biomolecular | Boltz-1 | 2.96 | 2.83 |
| Antibody-Specific | Ibex | 2.72 | 3.12 |

Workflow Visualization

The following diagram illustrates a robust, iterative workflow for loop modeling that integrates both traditional and modern deep learning approaches.

The Scientist's Toolkit: Research Reagent Solutions

| Tool / Resource Name | Type | Primary Function in Loop Modeling |
|---|---|---|
| AlphaFold2/3 | Software / Web Server | Highly accurate ab initio structure prediction; provides excellent initial models for entire proteins, including loops [25] [28]. |
| H3-OPT & Ibex | Specialized Software | Predict antibody/nanobody structures with state-of-the-art accuracy for the highly variable CDR-H3 loop; Ibex predicts both apo and holo states [25] [26]. |
| Phyre2.2 | Web Server | Template-based homology modeling server that automatically leverages AlphaFold2 models as templates and includes robust loop and side-chain modeling protocols [28]. |
| SCWRL4 | Software Algorithm | Fast and accurate side-chain conformation prediction, crucial for refining loop models after backbone placement [28]. |
| PDB (Protein Data Bank) | Database | Source of high-resolution experimental structures used for fragment libraries, template identification, and benchmark validation [24] [28]. |
| SAbDab | Specialized Database | The Structural Antibody Database; essential for benchmarking antibody-specific loop predictions and accessing known antibody structures [25] [26]. |

Modern Loop Modeling Techniques: From Fragment Assembly to AI-Driven Approaches

Troubleshooting Guides

Common Loop Modeling Failures and Solutions

Table 1: Troubleshooting Common Loop Modeling Issues

| Problem | Potential Causes | Solutions & Diagnostic Steps |
|---|---|---|
| Poor quality initial homology model | Template with low sequence identity; misalignments; poor flanking regions. | Verify that the TM-score of the initial model is >0.5 [29]. Ensure the flanking residues (4 on each side) are in stable secondary structures (helices/sheets) [29]. Re-evaluate template selection using multiple templates or PSI-BLAST [30]. |
| High candidate clash scores | Steric conflicts between the candidate loop and the protein core; inaccurate side-chain packing. | Apply clash filtering during candidate selection [31]. Use the server's clash report for multiple loops to select compatible candidates [32]. Consider side-chain repacking or a short energy minimization after modeling. |
| Low confidence scores | Lack of suitable fragments in the PDB; unusual loop length or sequence. | Check the predicted confidence score and level in the server output [32] [29]. For low-confidence loops, consider extending the loop definition to include more stable flanking regions [29]. For long loops (>12 residues), use a method such as DaReUS-Loop, which has been validated for loop lengths up to 30 residues [31] [32]. |
| Inconsistent results for multiple loops | Inter-loop clashes; poor combinations of independently built models. | Use the "remodeling" mode in DaReUS-Loop, which models each loop in parallel while keeping the others fixed and was reported as the most accurate mode [32] [29]. Consult the general clash report to find non-clashing combinations across different loops [32]. |
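The clash-filtering idea in the table can be illustrated with a brute-force distance check in NumPy. The 3.0 Å heavy-atom cutoff and the zero-clash tolerance are hypothetical parameters, not DaReUS-Loop settings. In practice, the environment should exclude the flanking residues bonded to the loop ends, because those atoms would otherwise always register as contacts.

```python
import numpy as np


def count_clashes(loop_xyz: np.ndarray, env_xyz: np.ndarray, cutoff: float = 3.0) -> int:
    """Number of loop/environment heavy-atom pairs closer than `cutoff` Å."""
    diff = loop_xyz[:, None, :] - env_xyz[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    return int((dist < cutoff).sum())


def filter_candidates(candidates: dict, env_xyz: np.ndarray, cutoff: float = 3.0, max_clashes: int = 0):
    """Keep candidates with at most `max_clashes` clashing pairs, fewest clashes first."""
    scored = [(name, count_clashes(xyz, env_xyz, cutoff)) for name, xyz in candidates.items()]
    kept = [item for item in scored if item[1] <= max_clashes]
    return sorted(kept, key=lambda item: item[1])


# Toy coordinates: two environment atoms and two candidate loop conformations.
env = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0]])
candidates = {
    "cand_a": np.array([[5.0, 0.0, 0.0], [6.0, 0.0, 0.0]]),
    "cand_b": np.array([[1.0, 0.0, 0.0], [5.0, 5.0, 0.0]]),
}
print(filter_candidates(candidates, env))
```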

Input and Data Preparation Issues

Table 2: Resolving Input and Technical Problems

| Issue | Resolution |
|---|---|
| Incorrect residue numbering | Ensure the protein structure (PDB file) and the input sequence follow the exact same numbering scheme. Residue "ALA 16" in the structure must correspond to the 16th character in the sequence [29]. |
| Handling non-standard residues | The server accepts only the 20 standard amino acids. Manually remove water molecules, co-factors, ions, and ligands from the input PDB file [29]. |
| Server performance and long run times | Typical runs take about one hour, depending on server load. For multiple loops, the server models them in parallel, which is more efficient than sequential modeling [32] [29]. |
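Both input problems can be checked locally before submission. This Python sketch keeps only ATOM records for the 20 standard residues, which drops HETATM lines such as waters, ions, and ligands. It then reports residues whose name does not match the 1-based sequence position implied by their residue number. The sketch assumes a single chain without insertion codes. It relies on the fixed PDB columns for residue name (18-20) and residue number (23-26). The demonstration records are synthetic.

```python
THREE_TO_ONE = {
    "ALA": "A", "ARG": "R", "ASN": "N", "ASP": "D", "CYS": "C",
    "GLN": "Q", "GLU": "E", "GLY": "G", "HIS": "H", "ILE": "I",
    "LEU": "L", "LYS": "K", "MET": "M", "PHE": "F", "PRO": "P",
    "SER": "S", "THR": "T", "TRP": "W", "TYR": "Y", "VAL": "V",
}


def standard_atom_lines(pdb_lines):
    """Keep ATOM records of the 20 standard residues; drops HETATM (waters, ions, ligands)."""
    return [line for line in pdb_lines
            if line.startswith("ATOM") and line[17:20] in THREE_TO_ONE]


def numbering_mismatches(pdb_lines, sequence):
    """Residues whose name disagrees with sequence[resSeq - 1] (single chain assumed)."""
    mismatches, seen = [], set()
    for line in standard_atom_lines(pdb_lines):
        res_name, res_num = line[17:20], int(line[22:26])  # PDB columns 18-20 and 23-26
        if res_num in seen:
            continue  # check each residue once, not once per atom
        seen.add(res_num)
        expected = sequence[res_num - 1] if 1 <= res_num <= len(sequence) else None
        if THREE_TO_ONE[res_name] != expected:
            mismatches.append((res_num, res_name, expected))
    return mismatches


def make_line(record, serial, res_name, res_num):
    """Minimal fixed-column record (CA atom, chain A) for this demonstration only."""
    return f"{record:<6}{serial:>5}  CA  {res_name:>3} A{res_num:>4}    {0.0:8.3f}{0.0:8.3f}{0.0:8.3f}"


sequence = "MKTAYIAK"
pdb = [
    make_line("ATOM", 1, "MET", 1),
    make_line("ATOM", 2, "LYS", 2),
    make_line("ATOM", 3, "ALA", 3),      # sequence position 3 is T, not A
    make_line("HETATM", 4, "HOH", 101),  # water: removed by the filter
]
print(numbering_mismatches(pdb, sequence))
```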

Frequently Asked Questions (FAQs)

Q1: What is the fundamental difference between data-based methods (like DaReUS-Loop, FREAD) and ab initio methods for loop modeling? Data-based methods identify candidate loop structures by mining existing protein structures in databases like the PDB based on the geometry of the flanking regions. These candidates are then filtered and scored [31]. In contrast, ab initio methods computationally explore the conformational space of the loop from scratch using energy functions, which is more time-consuming [31] [32]. Hybrid methods like Sphinx combine both approaches [31].

Q2: Why is my loop modeling accuracy low even when using a good template? The most common reason is poor quality of the flanking regions. In a homology model, the flanks are not as accurate as in a crystal structure and can deviate substantially from the native structure. Ensure that the residues immediately adjacent to the loop gap are accurate and part of a helix or sheet [31] [29]. Data-based methods are sensitive to the geometry imposed by these flanks, because candidate fragments are retrieved by matching that geometry.

Q3: Can I use DaReUS-Loop to model loops in an experimentally solved structure? While technically possible, the DaReUS-Loop protocol is specifically optimized for the non-ideal conditions of homology models, where flank regions are perturbed [32] [29]. Its performance on high-resolution crystal structures with perfect flanks has not been the focus of its validation.

Q4: How does DaReUS-Loop handle long loops, which are traditionally problematic? DaReUS-Loop was specifically designed and validated to handle long loops effectively. It can model loops of up to 30 residues [29]. The method outperforms other state-of-the-art approaches for loops of at least 15 residues, showing a significant increase in accuracy [31] [32].

Q5: How should I select the best model from the 10 candidates returned by DaReUS-Loop? DaReUS-Loop does not reliably predict the single best model among the 10 candidates [29]. The strategy is to achieve high accuracy in at least one of the returned models. You should rely on the provided confidence score, which correlates with the expected accuracy of the best loop, and inspect the candidates visually or using additional experimental data (e.g., SAXS) if available [32] [29].

Q6: What is the advantage of using a data-based method that mines the entire PDB? A key advantage is the ability to discover candidate loops from remote or unrelated proteins that are not homologous to your target. Strikingly, over 50% of successful loop models generated by DaReUS-Loop are derived from unrelated proteins. This indicates that protein fragments under similar spatial constraints can adopt similar structures beyond evolutionary homology [31].

Experimental Protocols & Workflows

DaReUS-Loop Protocol for Loop Remodeling

The following diagram illustrates the core workflow of the DaReUS-Loop method for remodeling a loop in a homology model.

Protocol Steps:

- Input Preparation: Provide the initial homology model in PDB format and the full protein sequence. For remodeling, the sequence should have the target loop regions indicated by gaps ("-") [32] [29].

- PDB Mining: The server uses BCLoopSearch to mine the entire Protein Data Bank for protein fragments whose flanking regions geometrically match the flanks of the loop to be modeled [31] [29].

- Candidate Filtering: The pool of candidates is progressively refined through several filters [31]:

  - Sequence Filter: Candidates are filtered based on sequence similarity using a positive BLOSUM62 score threshold.

  - Conformation Filter: The local conformational profile of candidates is compared to the target using Jensen-Shannon Divergence (JSD), filtering out candidates with high JSD.

  - Clash Filter: Candidates that cause steric clashes with the rest of the protein structure are eliminated.

- Clustering and Ranking: The remaining candidates are clustered to reduce redundancy. The best candidates are selected based on local conformation profile and flank Root-Mean-Square Deviation (RMSD) [31] [29].

- Model Building and Output: Complete 3D models are built by superimposing the candidate loops onto the flanks of the input structure. The server returns 10 models for each loop, along with a confidence score that correlates with expected accuracy [31] [32] [29].
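The conformation filter compares probability profiles using the Jensen-Shannon divergence. In this Python sketch, each loop position carries a probability distribution over a small set of local-conformation states. Two choices are assumptions: averaging the per-position JSD over the loop, and the toy three-state profiles. The 0.4 cut-off mirrors the JSD threshold reported in Table 4 below.

```python
import math


def jensen_shannon_divergence(p, q):
    """JSD (base 2, range 0-1) between two discrete probability distributions."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]

    def kl(a, b):
        # Kullback-Leibler divergence; terms with a_i = 0 contribute nothing.
        return sum(ai * math.log2(ai / bi) for ai, bi in zip(a, b) if ai > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def conformation_filter(target_profile, candidates, threshold=0.4):
    """Keep candidates whose mean per-position JSD to the target profile is below threshold."""
    kept = []
    for name, profile in candidates.items():
        scores = [jensen_shannon_divergence(t, c) for t, c in zip(target_profile, profile)]
        mean_jsd = sum(scores) / len(scores)
        if mean_jsd < threshold:
            kept.append((name, round(mean_jsd, 3)))
    return kept


# Hypothetical two-position loop, three local-conformation states per position.
target = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
candidates = {
    "similar": [[0.6, 0.3, 0.1], [0.2, 0.7, 0.1]],
    "different": [[0.0, 0.0, 1.0], [1.0, 0.0, 0.0]],
}
print(conformation_filter(target, candidates))
```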

Performance Validation Protocol

To validate the performance of a loop modeling method like DaReUS-Loop against other tools, follow this benchmarking workflow.

Validation Steps:

- Benchmark Selection: Use standardized test sets from critical assessment experiments like CASP11 and CASP12, which contain targets for template-based modeling [31] [32].

- Method Comparison: Run DaReUS-Loop alongside other state-of-the-art methods for comparison. Typical comparisons include [31] [32]:

  - Ab initio methods: Rosetta NGK, GalaxyLoop-PS2.

  - Data-based methods: LoopIng, FREAD.

  - Hybrid methods: Sphinx.

- Accuracy Metric: The primary metric is the loop RMSD (in Ångströms) after superimposing the model on the flanking residues of the native structure. The percentage of models achieving high accuracy (e.g., RMSD < 1.0 Å or < 2.0 Å) is also calculated [31] [32].

- Analysis: Analyze the results grouped by loop length (e.g., short loops of 3-12 residues vs. long loops of ≥15 residues) and by the method's confidence score to verify its predictive power [31] [32].
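The accuracy metric used in Table 3 is the best RMSD among the top 10 models per loop. Together with the fraction of loops under a cutoff, it can be computed as below. This sketch uses made-up RMSD values, and the function names are hypothetical.

```python
def best_of_top_n(rmsds_per_loop, n=10):
    """Lowest RMSD (Å) among the first n ranked models of each loop."""
    return {loop: min(values[:n]) for loop, values in rmsds_per_loop.items()}


def summarize(best, cutoffs=(1.0, 2.0)):
    """Mean best-of-n RMSD plus the fraction of loops below each cutoff."""
    values = list(best.values())
    summary = {"mean_rmsd": sum(values) / len(values)}
    for cutoff in cutoffs:
        summary[f"frac_below_{cutoff}"] = sum(v < cutoff for v in values) / len(values)
    return summary


# Hypothetical RMSDs for three benchmark loops, ordered by the method's own ranking.
rmsds = {
    "loop1": [2.5, 0.9, 3.1],
    "loop2": [1.8, 2.2],
    "loop3": [4.0, 3.5, 5.2],
}
best = best_of_top_n(rmsds)
print(best)
print(summarize(best))
```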

Table 3: Comparative Loop Modeling Accuracy (Average RMSD, Å)

Data represents the average RMSD of the best of the top 10 models on CASP11 and CASP12 test set loops. Lower values indicate better performance. Adapted from [32].

| Method | Type | CASP11 (set_ai) | CASP12 (set_ai) |
|---|---|---|---|
| DaReUS-Loop Server | Data-based | 2.00 Å | 2.35 Å |
| DaReUS-Loop (Original) | Data-based | 1.91 Å | 2.30 Å |
| Rosetta NGK | Ab initio | 2.59 Å | 2.99 Å |
| GalaxyLoop-PS2 | Ab initio | 2.34 Å | 2.88 Å |
| LoopIng | Data-based | > 3.28 Å | > 3.63 Å |
| Sphinx | Hybrid | > 2.89 Å | > 3.24 Å |
| MODELLER | Template-based | 2.94 Å | 3.29 Å |

Table 4: DaReUS-Loop Filtering Impact on Candidate Quality

Data from the CASP11 test set showing how each filtering step improves the fraction of high-accuracy loop candidates. Adapted from [31].

| Processing Stage | Key Filtering Action | Result / Fraction of Candidates with RMSD < 4 Å |
|---|---|---|
| Post-PDB Mining | Initial candidate set from BCLoopSearch | 49% |
| Post-Sequence Filtering | Keep fragments with positive BLOSUM62 score | 62% |
| Post-Clustering | Cluster candidates and select representatives | 70% |
| Post-Conformation Filtering | Filter by Jensen-Shannon Divergence (JSD < 0.4) | 74% |
| Post-Clash Filtering | Remove candidates with steric clashes | 84% |

The Scientist's Toolkit: Research Reagent Solutions

Table 5: Essential Resources for Data-Based Loop Modeling

| Resource Name | Type | Function in Experiment |
|---|---|---|
| DaReUS-Loop Web Server | Automated Web Server | Primary tool for (re-)modeling loops in homology models using a data-based approach. Accepts up to 20 loops in parallel [32] [29]. |
| Protein Data Bank (PDB) | Database | The primary source of experimental protein structures used as the fragment library for data-based mining by tools like DaReUS-Loop and FREAD [31] [33]. |
| BCLoopSearch | Algorithm / Software | The core search tool used by DaReUS-Loop to mine the PDB for fragments that geometrically match the loop's flanking regions [31]. |
| SWISS-MODEL / MODELLER | Homology Modeling Server / Software | Used to generate the initial homology model required as input for DaReUS-Loop [29] [30]. |
| NGL Viewer | Visualization Tool | Integrated into the DaReUS-Loop results page for interactive visual inspection and comparison of the generated loop candidates [32] [29]. |
| PDB-REDO Database | Refined Structure Database | A resource of re-refined and re-built crystal structures, which can provide improved templates or insights into "buildable" loop regions [33]. |

Frequently Asked Questions (FAQs)

Q1: What are the primary use cases for Rosetta NGK and GalaxyLoop-PS2? Both are state-of-the-art ab initio loop modeling methods used to predict the 3D structure of loop regions in proteins. They are particularly valuable for:

- Completing Homology Models: Accurately modeling loops in regions where template structures are unavailable or unreliable [34] [31].

- Refining Crystal Structures: Rebuilding missing or poorly resolved loops in experimental structures [34].

- Functional Site Prediction: Modeling loops involved in binding sites, which is critical for drug design and understanding protein function [34] [31].

Q2: My Rosetta NGK run failed to generate any models. What could be wrong? This common issue can often be traced to a few key areas:

- Incorrect Loop Definition File: Ensure your loop file follows the exact format. The cut point residue number must be within the start and end residues of the loop, or a segmentation fault can occur [35].

- Missing Flags: Confirm that the essential command-line flags to activate NGK are present: -loops:remodel perturb_kic and -loops:refine refine_kic [35].

- Residue Numbering Mismatch: Residue indices in the loop definition file must use Rosetta numbering (continuous numbering from 1). You may need to renumber your input PDB file to match [35].

Q3: How can I improve the sampling accuracy of Rosetta NGK for difficult loops? You can employ several advanced strategies to enhance sampling:

- Use Taboo Sampling: Promote conformational diversity by preventing repeated sampling of the same torsion bins with the -loops:taboo_sampling flag [35] [36] [37].

- Enable Neighbor-Dependent Sampling: Use more precise, neighbor-dependent Ramachandran distributions with -loops:kic_rama2b (note: this increases memory usage to ~6GB) [35].

- Apply Energy Function Ramping: Smooth the energy landscape by ramping repulsive and Ramachandran terms during refinement using -loops:ramp_fa_rep and -loops:ramp_rama [35] [36].

Q4: The side chains around my loop look poor in the model. How can I fix this?

By default, NGK may fix the side chains of residues neighboring the loop. To allow these side chains to be optimized during modeling, set the flag -loops:fix_natsc to false [35].

Q5: How do I choose between a knowledge-based method like DaReUS-Loop and an ab initio method like NGK or GalaxyLoop-PS2? The choice depends on your specific goal and the problem context, as shown in the table below.

| Method | Type | Best Application Context | Key Advantage |
|---|---|---|---|
| Rosetta NGK [35] [36] | Ab Initio | Accurate, high-resolution framework structures; de novo loop construction. | Robotics-inspired algorithm ensures local moves and exact chain closure. |
| GalaxyLoop-PS2 [34] | Ab Initio | Loops in inaccurate environments (e.g., homology models, low-resolution structures). | Hybrid energy function tolerates environmental errors in the framework. |
| DaReUS-Loop [31] | Knowledge-based | Fast prediction in homology modeling; long loops (≥15 residues). | Speed; confidence score correlates with prediction accuracy. |

Troubleshooting Guides

Common Rosetta NGK Errors and Solutions

| Error / Symptom | Probable Cause | Solution |
|---|---|---|
| Segmentation fault | Cut point in loop definition file is outside the loop start/end residues [35]. | Ensure the cut point residue number is ≥ startRes and ≤ endRes. |
| No output models generated | Missing critical -loops:remodel or -loops:refine flags [35]. | Include -loops:remodel perturb_kic and -loops:refine refine_kic in the command line. |
| Low memory error | Using the -loops:kic_rama2b flag on a system with insufficient RAM [35]. | Ensure at least 6GB of memory per CPU is available. |
| Loop residues are not connected | Starting loop conformation is disconnected (e.g., in de novo modeling) [35]. | Set the "Extend loop" column in the loop definition file to 1 to randomize and connect the loop. |

Performance Benchmarking and Validation

When evaluating your loop models, it is critical to compare their performance against established benchmarks. The following table summarizes the typical accuracy you can expect from these methods on standard test sets.

| Method | Typical Accuracy (Short Loops, ~12 residues) | Performance on Long Loops (≥15 residues) | Key Identifying Feature |
|---|---|---|---|
| Rosetta NGK | Median fraction of sub-Ångström models increased 4-fold over standard KIC [36] [37]. | Can model longer segments that previous methods could not [36]. | Combination of intensification and annealing strategies. |
| GalaxyLoop-PS2 | Comparable to force-field-based approaches in crystal structures [34]. | Not specifically highlighted. | Hybrid energy function combining physics-based and knowledge-based terms. |
| DaReUS-Loop | Significant increase in high-accuracy loops in homology models [31]. | Outperforms other approaches for long loops [31]. | Data-based approach using fragments from remote or unrelated PDB structures. |

Experimental Protocols

Core Protocol: Running a Rosetta NGK Experiment

Objective: Reconstruct a missing loop region in a protein structure.

Input Files Required:

- Starting PDB File: The protein structure with the missing loop.

- Loop Definition File: A text file specifying the loop(s) to be modeled. Example for a single loop from residue 88 to 95 with a cutpoint at 92:
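The loop definition file described above can be generated and sanity-checked in Python. The sketch assumes the common Rosetta layout "LOOP <start> <end> <cutpoint> <skip_rate> <extend>"; check this against the documentation for your Rosetta release. It rejects cut points outside the loop, which the troubleshooting table lists as the cause of segmentation faults. Setting extend to 1 for a missing loop is an assumption based on that table's "Extend loop" advice.

```python
def loop_file_line(start: int, end: int, cutpoint: int,
                   skip_rate: float = 0.0, extend: bool = False) -> str:
    """One line of a Rosetta loop definition file, in Rosetta (sequential, 1-based) numbering.

    Assumed layout: LOOP <start> <end> <cutpoint> <skip_rate> <extend>
    """
    if start >= end:
        raise ValueError("loop start must precede loop end")
    if not start <= cutpoint <= end:
        # A cut point outside the loop is the segmentation-fault case in the troubleshooting table.
        raise ValueError(f"cut point {cutpoint} must lie within {start}-{end}")
    return f"LOOP {start} {end} {cutpoint} {skip_rate:g} {int(extend)}"


# The example from the text: residues 88-95 with a cut point at 92, rebuilt from an extended start.
print(loop_file_line(88, 95, 92, extend=True))
```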

Example Command:
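This is a hedged sketch of an NGK command line, assembled in Python so it can be logged or passed to subprocess. The NGK-specific flags are the ones named earlier in this section. -in:file:s, -loops:loop_file, and -nstruct are standard Rosetta options. The executable name (which varies with platform and build) and the 500-model count are placeholders.

```python
import shlex


def ngk_command(executable, pdb_file, loop_file, nstruct=500, extra_flags=()):
    """Argument list for a Rosetta NGK loop-modeling run (executable name is build-specific)."""
    args = [
        executable,
        "-in:file:s", pdb_file,
        "-loops:loop_file", loop_file,
        "-loops:remodel", "perturb_kic",  # low-resolution (centroid) KIC sampling
        "-loops:refine", "refine_kic",    # high-resolution (full-atom) KIC refinement
        "-nstruct", str(nstruct),
    ]
    args.extend(extra_flags)
    return args


cmd = ngk_command(
    "loopmodel.default.linuxgccrelease",  # hypothetical; depends on platform and compiler
    "start.pdb",
    "loops.txt",
    extra_flags=["-loops:taboo_sampling", "-loops:ramp_fa_rep", "-loops:ramp_rama"],
)
print(shlex.join(cmd))
# To execute: subprocess.run(cmd, check=True)
```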

Workflow: Rosetta Next-Generation KIC (NGK)

The following diagram illustrates the integrated steps of the NGK protocol, showing how different sampling strategies are applied across the centroid and full-atom stages.

The Scientist's Toolkit

Key Research Reagent Solutions

| Item | Function in Experiment | Specification / Note |
|---|---|---|
| Rosetta Software | Primary modeling suite for NGK. | Academic licenses are free. Source code in C++ must be compiled on a Unix-like OS (Linux/MacOS) [38]. |
| Input Protein Structure | Framework for loop modeling. | PDB file format. Residues outside the loop must have real coordinates [35]. |
| Loop Definition File | Specifies the location and parameters of the loop to be modeled. | Critical to use correct Rosetta numbering to avoid errors [35]. |
| Fragment Libraries | Guide conformational search in some protocols. | Can be generated using the Robetta server [38]. |
| Computational Resources | Running Rosetta simulations. | Recommended: multi-processor cluster with at least 1GB memory per CPU. NGK with Rama2b requires ~6GB [35] [38]. |

Troubleshooting Guide: Common Experimental Issues

Problem 1: Homology model exhibits high energy loops despite data mining pre-filtering

- Symptoms: The target loop region, even when built from a template with high sequence similarity identified through data mining, shows unstable energy profiles, high torsion strain, or poor rotameric side-chain placement in the refined model.

- Impact: The overall model accuracy is compromised, potentially leading to incorrect conclusions about protein function or ligand binding sites in drug development research [39].

- Context: This frequently occurs when the selected template, though similar in sequence, originates from a different structural context (e.g., apo vs. holo form) or has a divergent backbone conformation [39].

| Step | Action | Expected Outcome |
|---|---|---|
| 1 | Verify Template Suitability: In your Phyre2.2 results, check if the template is listed as an "apo" or "holo" structure. Cross-reference the template's PDB entry to confirm its bound state and overall fold similarity beyond the local loop region [39]. | Confirmation that the template's structural context is appropriate for your target. |
| 2 | Run Energy Diagnostic Check: Use your refinement software's energy evaluation function on the isolated loop. Note specific energy terms that are outliers (e.g., van der Waals clashes, Ramachandran outliers). | Identification of the specific physical chemistry terms causing the high energy. |
| 3 | Apply Hybrid Strategy: Use the data-mined template as a starting conformation, then apply an energy-based refinement algorithm. Metaheuristic optimizers such as the Boosted Multi-Verse Optimizer (BMVO), reported to improve the optimization of model weights and their generalization [40], may assist in the search for a lower-energy conformation. | A refined loop structure with a lower overall energy score and improved stereochemistry. |

Problem 2: Energy minimization protocol causes distortion of the data-mined backbone

- Symptoms: After applying an energy-based refinement, the overall backbone geometry of the core protein structure, which was accurate from homology modeling, becomes distorted or shifts significantly.

- Impact: The global fold is lost, rendering the model unusable for its intended research applications, such as virtual screening.

- Context: This is common when the energy minimization parameters are too aggressive or when restraints are not properly applied to the well-conserved regions of the model.

| Step | Action | Expected Outcome |
|---|---|---|
| 1 | Apply Positional Restraints: Restrain the heavy atoms of the protein backbone and core side-chains during refinement. Allow only the target loop and immediate surrounding residues to move freely. | The global fold is preserved during the energy minimization process. |
| 2 | Use a Multi-Stage Protocol: Begin refinement with a strong force constant on the restraints, then gradually reduce it in subsequent stages. This allows the high-energy regions to relax without compromising the entire structure. | A stable minimization trajectory that corrects local issues without global distortion. |
| 3 | Validate with Multiple Metrics: After refinement, check global structure metrics such as the Root Mean Square Deviation (RMSD) of the core against the pre-refinement model, and verify that the Ramachandran plot for the core regions has not deteriorated. | Quantitative confirmation that model quality has been maintained or improved. |
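The restraint logic in steps 1 and 2 can be written down directly. This sketch assumes simple harmonic positional restraints, E = k Σ |r_i − r_i0|², applied only to the restrained (core) atom indices, with k reduced by a fixed factor at each stage. The starting force constant, the halving factor, and the function names are illustrative assumptions, and packages differ on whether a factor of 1/2 is included in the restraint term.

```python
# Assumptions: coordinates are (x, y, z) tuples in Å; k is in energy units per Å².


def restraint_energy(coords, reference, restrained, k):
    """Harmonic positional restraint energy: k * sum over restrained atoms of |r_i - r_i0|^2."""
    energy = 0.0
    for i in restrained:
        squared_shift = sum((c - r) ** 2 for c, r in zip(coords[i], reference[i]))
        energy += k * squared_shift
    return energy


def force_constant_schedule(k_start, n_stages, factor=0.5):
    """Force constant for each refinement stage, decreasing geometrically from k_start."""
    return [k_start * factor ** stage for stage in range(n_stages)]
```

For example, force_constant_schedule(10.0, 4) yields 10.0, 5.0, 2.5 and 1.25 for four successive minimization stages. Atoms left out of restrained (the loop) contribute nothing, so they can move freely while the core is held near its starting position.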

Problem 3: Inability to achieve target contrast ratio in visualization of loop conformational clusters

- Symptoms: When generating diagrams of different loop conformations sampled during refinement, the colors used for arrows or symbols lack sufficient contrast against the background, making them hard to distinguish.

- Impact: Reduces the clarity and professional presentation of research findings, hindering effective communication in publications or presentations.

- Context: This often occurs when using default color palettes without checking for accessibility or when using colors of similar luminance [41] [42].

| Step | Action | Expected Outcome |
|---|---|---|
| 1 | Select High-Contrast Colors: Choose foreground and background colors from your palette that have a high contrast ratio. Under WCAG 2.x, text needs a contrast ratio of at least 4.5:1 (3:1 for large text), and graphical objects such as arrows and symbols need at least 3:1 against adjacent colors [41] [42]. | Diagrams are legible for all audiences, including those with low vision or color blindness. |
| 2 | Use an Analyzer Tool: Before finalizing a figure, use a color contrast analysis tool to verify that the ratio between the arrow/symbol color and the background color meets WCAG guidelines [42]. | Objective verification that the color choices are sufficient. |
| 3 | Explicitly Set Colors in DOT Script: In your Graphviz DOT script, explicitly define the fontcolor for any node text to ensure high contrast against the node's fillcolor. Do not rely on default settings. | Rendered diagrams have clear, readable text labels on all shapes. |
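WCAG 2.x defines the contrast ratio as (L1 + 0.05) / (L2 + 0.05), where L1 and L2 are the relative luminances of the lighter and darker colors. The sketch below implements that formula and uses it to refuse to emit a Graphviz node whose fontcolor falls below a chosen threshold against its fillcolor. The helper names and the 4.5:1 default are assumptions for illustration; style, fillcolor, and fontcolor are standard Graphviz node attributes.

```python
def _linearize(channel_8bit):
    """Convert an 8-bit sRGB channel to linear light, as in the WCAG 2.x definition."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(color_a, color_b):
    la, lb = relative_luminance(color_a), relative_luminance(color_b)
    lighter, darker = max(la, lb), min(la, lb)
    return (lighter + 0.05) / (darker + 0.05)


def dot_node(name, label, fillcolor, fontcolor, min_ratio=4.5):
    """Emit a DOT node with explicit colors, rejecting combinations below min_ratio."""
    ratio = contrast_ratio(fillcolor, fontcolor)
    if ratio < min_ratio:
        raise ValueError(f"{name}: contrast {ratio:.2f}:1 is below {min_ratio}:1")
    return (f'{name} [label="{label}", style=filled, '
            f'fillcolor="{fillcolor}", fontcolor="{fontcolor}"];')
```

Black on white gives the maximum ratio of 21:1. Raising an error instead of silently emitting the node makes low-contrast choices fail early, before a figure reaches a manuscript.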

Frequently Asked Questions (FAQs)

Q1: What is the core advantage of a hybrid strategy over using data mining or energy-based refinement alone? A1: A hybrid strategy leverages the complementary strengths of both approaches. Data mining through tools like Phyre2.2 rapidly identifies structurally plausible starting conformations from known proteins by finding suitable templates from the AlphaFold database or PDB [39]. Energy-based refinement then acts as a physical chemistry filter, optimizing these starting points to find the most stable, energetically favorable conformation that data mining alone might miss. This combination addresses the shortcomings of methods that fail to capture complex nonlinear interactions, leading to more reliable and accurate models [40].

Q2: In the context of the BMVO algorithm mentioned for optimization, what does "generalization effectiveness" mean for my loop model? A2: Generalization effectiveness here refers to the optimized model's ability not only to perform well on the specific computational data it was trained on but also to produce a physically realistic, accurate loop structure consistent with the principles of protein stereochemistry. A model with high generalization effectiveness is less likely to be "overfitted" to the peculiarities of the template and more likely to represent a native-like conformation [40].

Q3: My refinement seems stuck in a high-energy local minimum. What strategies can help escape this? A3: This is a common challenge in energy minimization. Strategies include:

- Hybrid Optimizers: Employ algorithms such as the Boosted Multi-Verse Optimizer (BMVO), which are designed for stronger global search and can therefore escape local minima more readily than more traditional optimizers [40].

- Enhanced Sampling: Implement protocols such as replica exchange molecular dynamics, which simulates copies of the system at multiple temperatures and periodically exchanges configurations between them, so that barriers crossed at high temperature become accessible to the low-temperature simulation.

- Multi-Modal Search: Run multiple independent refinements from slightly different starting points (e.g., different loop conformations from data mining) to see if they converge on the same low-energy structure.
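The exchange step that lets replica exchange cross barriers is a Metropolis test: two replicas at inverse temperatures β_i and β_j with energies E_i and E_j swap with probability min(1, exp[(β_i − β_j)(E_i − E_j)]), where β = 1/(k_B T). The sketch below implements only that swap test for neighboring temperatures, alternating between even and odd pairs; the molecular dynamics between swaps, the temperature ladder, and the function names are assumptions left to your simulation package.

```python
import math
import random

# Boltzmann constant in kcal/(mol*K); energies are assumed to be in kcal/mol.
K_B = 0.0019872041


def swap_probability(energy_i, temp_i, energy_j, temp_j):
    """Metropolis acceptance probability for exchanging two replicas."""
    beta_i = 1.0 / (K_B * temp_i)
    beta_j = 1.0 / (K_B * temp_j)
    delta = (beta_i - beta_j) * (energy_i - energy_j)
    # delta >= 0 means the swap moves the lower energy to the colder replica: always accept.
    return 1.0 if delta >= 0 else math.exp(delta)


def attempt_neighbor_swaps(energies, temps, offset=0, rng=None):
    """Try swaps between pairs (k, k+1) starting at offset (alternate 0 and 1 between rounds).

    energies[k] is the energy of the configuration currently held at temps[k]; the returned
    list reflects accepted swaps (coordinates would be exchanged the same way).
    """
    rng = rng if rng is not None else random.Random()
    energies = list(energies)
    for k in range(offset, len(temps) - 1, 2):
        p = swap_probability(energies[k], temps[k], energies[k + 1], temps[k + 1])
        if rng.random() < p:
            energies[k], energies[k + 1] = energies[k + 1], energies[k]
    return energies
```

Unfavorable swaps are still accepted occasionally, which is what lets a loop trapped in a local minimum at low temperature be replaced by a configuration that escaped it at high temperature.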

Q4: How do I validate the success of a hybrid loop modeling protocol? A4: Use a combination of quantitative and qualitative checks:

- Energetics: The refined model should have a lower overall energy and show no significant steric clashes or high-energy torsion angles.

- Geometry: Validate using MolProbity or similar services to check Ramachandran outliers, rotameric quality, and clash scores.

- Comparison to Data (if available): If an experimental structure is known but not used in modeling, compute the RMSD of the loop to the true structure.

- Consensus: If multiple independent refinements converge on a similar loop conformation, it increases confidence in the result.
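The consensus check can be made quantitative by comparing the loops from independent refinements pairwise. This sketch assumes the models already share a reference frame (for example, because the core was restrained or superposed beforehand), so it uses a no-fit RMSD over matched loop atoms; the 2.0 Å cutoff and the function names are illustrative assumptions, not established thresholds.

```python
import math
from itertools import combinations


def rmsd(coords_a, coords_b):
    """No-fit RMSD between matched atom lists that share one reference frame."""
    if len(coords_a) != len(coords_b):
        raise ValueError("loops must have the same number of atoms")
    squared = sum(math.dist(a, b) ** 2 for a, b in zip(coords_a, coords_b))
    return math.sqrt(squared / len(coords_a))


def consensus_fraction(models, cutoff=2.0):
    """Fraction of model pairs whose loop RMSD is below cutoff (Å)."""
    pairs = list(combinations(models, 2))
    close = sum(1 for a, b in pairs if rmsd(a, b) < cutoff)
    return close / len(pairs)
```

A fraction near 1 means the refinements converged on one conformation; a low fraction suggests either genuine loop flexibility or insufficient sampling.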

Experimental Protocols & Data

Protocol: Hybrid Data-Mining and Energy Refinement for Loop Modeling

1. Data Mining Phase (Template Identification)

- Input: Target protein sequence with defined loop boundaries.

- Tool: Submit the sequence to the Phyre2.2 web portal and run it in intensive mode [39].

- Methodology: Phyre2.2 will search its expanded template library, which includes a representative structure for every protein sequence in the PDB, plus dedicated apo and holo structures. It will use a new ranking system to highlight potential templates for different domains in your query [39].

- Output: A list of template structures, confidence scores, and an initial homology model.

2. Hybrid Refinement Phase (Energy-Based Optimization)

- Input: Initial homology model from Phyre2.2, focusing on the loop region of interest.

- Technique: Apply an improved Support Vector Machine (SVM) or another machine learning method, tuned with a hybrid optimization technique such as the Boosted Multi-Verse Optimizer (BMVO) [40].

- Methodology:

  - The improved SVM incorporates a modified genetic algorithm based on the kernel function to improve stability.
  - The BMVO technique optimizes the combined model's weights to increase its generalization effectiveness.
  - This approach is designed to capture the complex nonlinear interactions between the loop and its environment that simpler methods miss [40].

- Output: A refined protein model with an energetically stable loop.

Quantitative Performance Data

The table below summarizes potential improvements from using a robust hybrid strategy, based on performance metrics from related optimization research [40].

| Performance Metric | Basic ANN Method | Proposed BMVO/ISVM Hybrid Model | Improvement |
|---|---|---|---|
| Root Mean Square Error (RMSE) | Baseline | Reduced by 53.72% [40] | Significant |
| Mean Absolute Percent Error (MAPE) | Baseline | Reduced by 55.22% [40] | Significant |
| Optimal Sample Size | Variable | 1735 samples [40] | For lowest MAPE |
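The table reports relative reductions in RMSE and MAPE. For reference, the standard definitions are RMSE = sqrt((1/n) Σ (y_i − ŷ_i)²) and MAPE = (100/n) Σ |(y_i − ŷ_i) / y_i|, and a percentage reduction is 100 × (baseline − improved) / baseline. The sketch below assumes [40] used these conventional definitions; it does not reproduce that study's data.

```python
import math


def rmse(observed, predicted):
    """Root mean square error between paired observations and predictions."""
    squared = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    return math.sqrt(squared / len(observed))


def mape(observed, predicted):
    """Mean absolute percentage error; undefined if any observed value is zero."""
    return 100.0 * sum(abs((o - p) / o) for o, p in zip(observed, predicted)) / len(observed)


def percent_reduction(baseline, improved):
    """Relative reduction of a metric, in percent of the baseline value."""
    return 100.0 * (baseline - improved) / baseline
```

For instance, a baseline RMSE of 2.0 that is reduced by 53.72% corresponds to an RMSE of about 0.93.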

The Scientist's Toolkit: Research Reagent Solutions

| Tool / Resource | Function in Hybrid Strategy |
|---|---|
| Phyre2.2 Web Portal | A template-based protein structure modeling portal. Its main new capability is that, once a user submits a sequence, it identifies the most suitable AlphaFold model to use as a template, providing a high-quality starting point for the data mining phase [39]. |
| Boosted Multi-Verse Optimizer (BMVO) | A hybrid optimization technique used to optimize a model's weights and increase its generalization effectiveness, which is crucial for the energy-based refinement phase [40]. |
| Improved Support Vector Machine (SVM) | An algorithm that can be used for prediction and classification in the refinement pipeline. The "improved" version incorporates a modified genetic algorithm based on the kernel function to enhance the model's stability during optimization [40]. |
| Color Contrast Analyzer | Tools used to verify that diagrams of signaling pathways, workflows, and logical relationships meet minimum color contrast ratio thresholds (e.g., 4.5:1 for text), ensuring accessibility and clarity for all researchers [41] [42]. |

Workflow and Signaling Pathways

Hybrid Strategy Workflow

This diagram illustrates the sequential integration of data mining and energy-based refinement for improving loop modeling accuracy. The process begins with the target sequence and systematically progresses through template selection and energy optimization to produce a validated, high-quality model.

Energy Refinement Feedback Loop

This diagram shows the iterative feedback loop at the heart of the energy-based refinement phase. The BMVO optimizer repeatedly adjusts the loop conformation based on energy calculations until a stable, low-energy structure is achieved.
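The feedback loop can be illustrated with a much simpler optimizer than BMVO. The sketch below uses a greedy random-perturbation search, explicitly a stand-in rather than the BMVO algorithm: it perturbs one loop torsion at a time, re-evaluates the energy, keeps only improvements, and stops once a set number of consecutive trials fail to improve. The toy torsion energy, the preferred angles, and all parameter values are hypothetical; in practice energy_fn would call your force field.

```python
import math
import random


def toy_loop_energy(torsions, preferred):
    """Hypothetical stand-in for a force field: penalize deviation from preferred torsions (degrees)."""
    return sum(1.0 - math.cos(math.radians(t - p)) for t, p in zip(torsions, preferred))


def refine_loop(torsions, energy_fn, step_deg=15.0, patience=200, max_iter=5000, seed=0):
    """Greedy perturb / evaluate / accept loop; returns the best torsions and their energy."""
    rng = random.Random(seed)
    current = list(torsions)
    current_e = energy_fn(current)
    stale = 0
    for _ in range(max_iter):
        trial = list(current)
        k = rng.randrange(len(trial))
        trial[k] += rng.gauss(0.0, step_deg)  # perturb one torsion
        trial_e = energy_fn(trial)            # energy calculation
        if trial_e < current_e:               # feedback: keep only improvements
            current, current_e, stale = trial, trial_e, 0
        else:
            stale += 1
            if stale >= patience:             # treated as converged
                break
    return current, current_e
```

Usage: refine_loop(start, lambda t: toy_loop_energy(t, preferred)). Because it accepts only downhill moves, this loop can stall in a local minimum on a realistic energy surface, which is the failure mode the enhanced-sampling strategies discussed earlier are meant to address.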

Core Concepts: Homology Grafting and the PDB-REDO Pipeline

What is homology grafting in the context of protein structure modeling? Homology grafting is a computational technique that identifies regions missing from a target protein structure model and transplants ("grafts") these regions from homologous structures found in the Protein Data Bank (PDB). During X-ray structure determination, inherent protein flexibility, low-resolution diffraction data, or poorly defined electron-density maps often leave structural models incomplete. The method is particularly valuable for missing loops, which are difficult to build because of their conformational flexibility. The grafted regions are then refined and validated within the context of the target structure to ensure a proper fit and correct geometry [33].

How does PDB-REDO integrate homology grafting for loop modeling? The PDB-REDO pipeline incorporates a specific algorithm called Loopwhole to perform homology-based loop grafting. This process is automated and consists of several key stages:

- Identification: The system detects unmodeled regions (loops) in the input structure and identifies high-identity homologous structures that have these regions modeled.

- Grafting: For each candidate homolog, the loop (plus two adjacent residues on either side) is structurally aligned and transferred into the target model.

- Refinement: The newly grafted loop is refined within the PDB-REDO pipeline to fit the experimental electron-density map in real space.

- Validation: The completed model is validated against geometric criteria and the electron density to ensure the grafted loop is both structurally sound and supported by the data [33] [43].
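The identification and grafting-window steps can be sketched from residue numbering alone. This hypothetical helper treats any jump in the modeled residue numbers as an unmodeled internal loop and widens it by two residues on each side to give the window to transfer. It is not Loopwhole's implementation: it ignores insertion codes, terminal gaps, and numbering jumps that do not correspond to missing residues, which a real pipeline would resolve by aligning against the deposited sequence.

```python
def find_unmodeled_regions(modeled_residues, flank=2):
    """Return (gap_start, gap_end, graft_start, graft_end) for each internal numbering gap."""
    numbers = sorted(set(modeled_residues))
    regions = []
    for prev, nxt in zip(numbers, numbers[1:]):
        if nxt - prev > 1:
            gap_start, gap_end = prev + 1, nxt - 1
            # Graft window = missing residues plus `flank` modeled residues on each side.
            regions.append((gap_start, gap_end, gap_start - flank, gap_end + flank))
    return regions
```

The modeled flanking residues inside each window are what the structural alignment of a homolog is anchored on before the loop is transferred.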

This constructive validation approach goes beyond simple error correction, aiming for the best possible interpretation of the electron density data. The entire procedure, including these grafting developments, is publicly available through the PDB-REDO databank for pre-optimized existing PDB entries, and the PDB-REDO server for optimizing your own structural models [44] [45].

Troubleshooting FAQs

FAQ 1: My homology-grafted loop has poor real-space correlation or steric clashes after refinement. What should I do?

Poor density fit or clashes often indicate a suboptimal graft or refinement issue. Follow this systematic troubleshooting guide:

- Verify Homolog Selection Criteria: The grafting algorithm requires a sequence identity of at least 50% in the loop and flanking regions. Re-check the sequence identity of your source homolog. Also confirm that the structural alignment of the flanking residues had a backbone RMSD below 2.0 Å before transfer [33].

- Inspect the Electron Density: Use the PDB-REDO output maps to visually inspect the 2mFo-DFc and mFo-DFc maps around the grafted loop. Clear, continuous 2mFo-DFc density indicates that the loop is ordered and buildable; if the mFo-DFc difference map nonetheless shows significant positive or negative peaks around the loop, the current conformation is probably incorrect. Weak or absent density may indicate intrinsic disorder.

- Check for Side-Chain Interactions: Examine whether mutated side chains in the grafted loop are causing steric clashes. The PDB-REDO pipeline performs side-chain cropping in case of mutations, but manual inspection and adjustment in a molecular graphics program may still be necessary [33].

- Run Extended Refinement: If the initial PDB-REDO output shows minor clashes, consider submitting the job again with more aggressive refinement cycles. PDB-REDO optimizes refinement settings like geometric restraint weights and B-factor models, which can sometimes resolve minor conflicts [43] [45].
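The two homolog selection thresholds quoted above can be checked with a short script once you have the aligned loop-plus-flank segments and the flank backbone RMSD. The sketch assumes identity is counted over all aligned positions of equal-length segments, with gaps treated as mismatches; other identity definitions (for example, dividing by the shorter ungapped length) give different numbers. The function names are hypothetical.

```python
def percent_identity(target_segment, homolog_segment):
    """Identity over aligned positions of equal-length segments; '-' gaps count as mismatches."""
    if len(target_segment) != len(homolog_segment):
        raise ValueError("segments must be aligned to the same length")
    matches = sum(1 for a, b in zip(target_segment, homolog_segment) if a == b and a != "-")
    return 100.0 * matches / len(target_segment)


def passes_graft_criteria(target_segment, homolog_segment, flank_rmsd,
                          min_identity=50.0, max_flank_rmsd=2.0):
    """Apply the >= 50% identity and < 2.0 Å flank backbone RMSD thresholds."""
    return (percent_identity(target_segment, homolog_segment) >= min_identity
            and flank_rmsd < max_flank_rmsd)
```

If a homolog fails either test, a different source structure is usually a better next step than further refinement of the existing graft.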