qPCR Data Normalization: A Comprehensive Guide to Methods, Validation, and Troubleshooting for Reliable Gene Expression Analysis

Abstract

This article provides a comprehensive guide to qPCR data normalization, a critical step for ensuring the accuracy and reproducibility of gene expression results in biomedical research and drug development. It covers foundational principles, from the necessity of normalization to minimize technical variability to the detailed mechanics of the ΔΔCq method. The guide explores established and emerging methodological strategies, including the use of single or multiple reference genes and global mean normalization. It delivers practical troubleshooting advice for common pitfalls and a rigorous framework for validating and comparing normalization approaches, empowering researchers to produce robust, reliable, and publication-ready data.

Why Normalization is Non-Negotiable: The Foundation of Accurate qPCR Data

The Critical Role of Normalization in Minimizing Technical Variability

Frequently Asked Questions

What is the primary goal of normalization in qPCR experiments?

Normalization aims to eliminate technical variation introduced during sampling, RNA extraction, and cDNA synthesis procedures. This ensures your analysis focuses exclusively on biological variation resulting from experimental intervention rather than technical artifacts. Proper normalization is fundamental for accurate data quantification and interpretation [1] [2].

How many reference genes should I use for reliable normalization?

The MIQE guidelines recommend using at least two validated reference genes [3]. However, studies have shown that using three or more stable reference genes can provide even more robust normalization. For example, one study identified HPRT, 36B4, and HMBS as a stable triplet for reliable normalization in adipocyte research [4], while another found RPS5, RPL8, and HMBS formed a stable combination for canine gastrointestinal tissue [1].

Can I use a single reference gene like GAPDH or ACTB?

Using a single reference gene, particularly without validation, is strongly discouraged. Commonly used genes like GAPDH and ACTB have frequently been shown to exhibit variable expression under different experimental conditions. One study concluded that "the widely used putative genes in similar studies—GAPDH and Actb—did not confirm their presumed stability," emphasizing the need for experimental validation of internal controls [4].

What alternative methods exist beyond traditional reference gene approaches?

Several data-driven normalization methods offer alternatives to traditional reference genes, particularly when profiling many genes:

- Global Mean (GM): Uses the average expression of all profiled genes; performs well when profiling >55 genes [1]

- Quantile Normalization: Assumes the overall distribution of gene expression is constant across samples [5]

- Rank-Invariant Set Normalization: Identifies genes with stable rank order across conditions from your dataset [5]

- NORMA-Gene: Uses a least squares regression algorithm to calculate a normalization factor [3]

How do I validate the stability of my reference genes?

Stability should be assessed using specialized algorithms:

- geNorm: Calculates stability measure M; lower M values indicate greater stability [1] [4]

- NormFinder: Evaluates intra- and inter-group variation [1] [3]

- BestKeeper: Uses raw Cq values to determine stability [4]

- RefFinder: Aggregates results from multiple algorithms [3]
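As a rough illustration of what a geNorm-style stability measure captures, the sketch below computes an M-like value from Cq data: for each candidate gene, the average standard deviation of its pairwise Cq differences with every other candidate. It assumes roughly 100% amplification efficiency, so that Cq differences stand in for log2 expression ratios. The gene names, Cq values, and the function name `stability_m` are invented for illustration; this is not the geNorm software itself.

```python
from statistics import mean, stdev

def stability_m(cq_by_gene):
    """Return an M-like stability value per gene (lower = more stable).

    cq_by_gene maps gene name -> list of Cq values, one per sample,
    with samples in the same order for every gene.
    """
    genes = list(cq_by_gene)
    m_values = {}
    for j in genes:
        pairwise_sd = []
        for k in genes:
            if k == j:
                continue
            # Cq difference ~ log2 expression ratio, assuming ~100% efficiency
            diffs = [a - b for a, b in zip(cq_by_gene[j], cq_by_gene[k])]
            pairwise_sd.append(stdev(diffs))
        m_values[j] = mean(pairwise_sd)
    return m_values

# Hypothetical Cq values for three candidates across four samples
example = {
    "REF_A": [20.0, 21.0, 20.5, 21.5],
    "REF_B": [18.0, 19.0, 18.5, 19.5],  # tracks REF_A exactly
    "REF_C": [22.0, 21.0, 24.0, 22.5],  # drifts independently
}
m = stability_m(example)
ranked = sorted(m, key=m.get)  # most stable first
```

In this toy dataset REF_A and REF_B move together across samples, so they receive the lowest values, while REF_C ranks last.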

Troubleshooting Guides

Problem: High Technical Variation Persists After Normalization

Potential Causes and Solutions:

| Problem Area | Specific Issue | Solution |

|---|---|---|

| Reference Gene Selection | Using unvalidated single reference gene | Validate multiple genes (2-3) using geNorm/NormFinder [1] [4] |

| Sample Quality | Degraded RNA or inconsistent cDNA synthesis | Check RNA integrity, use consistent reverse transcription protocols [6] |

| Amplification Efficiency | Varying efficiency between target/reference genes | Determine efficiency via standard curve, apply corrections [6] |

| Normalization Method | Suboptimal method for your experimental design | Consider switching to global mean for large gene sets (>55 genes) [1] |

Problem: Inconsistent Results Between Technical Replicates

Investigation Protocol:

- Check Amplification Efficiency: Confirm efficiencies between 90-110% for all assays [1]

- Verify Replicate Consistency: Remove replicates differing by >2 PCR cycles [1]

- Assess Reaction Quality: Identify bubbles or irregularities in PCR runs [1]

- Evaluate Specificity: Check for single peaks in melting curves [3]
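The replicate-consistency check above can be sketched as a small filter. The rule used here (drop any replicate further than `max_diff` cycles from the median of its group) is an assumption made for illustration; the 2-cycle default mirrors the threshold quoted in the list, and a stricter value such as 0.5 can be passed instead.

```python
from statistics import median

def filter_replicates(cq_values, max_diff=2.0):
    """Split technical replicates into kept and dropped lists.

    A replicate is dropped when it lies more than max_diff cycles
    from the median Cq of its replicate group (illustrative rule).
    """
    med = median(cq_values)
    kept = [cq for cq in cq_values if abs(cq - med) <= max_diff]
    dropped = [cq for cq in cq_values if abs(cq - med) > max_diff]
    return kept, dropped

# Hypothetical triplicate with one outlying well
kept, dropped = filter_replicates([24.0, 24.5, 27.0])
```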

Problem: Reference Gene Performance Varies Across Experimental Conditions

Solution Strategy:

- Pre-validation: Test candidate reference genes in pilot studies matching your experimental conditions [4]

- Multi-Algorithm Assessment: Use both geNorm and NormFinder for complementary stability assessment [1]

- Functionally Diverse Genes: Select reference genes from different functional pathways to avoid co-regulation [1]

- Alternative Methods: Consider data-driven normalization (NORMA-Gene, quantile) if stable reference genes cannot be identified [5] [3]

Normalization Method Comparison

The table below summarizes the performance characteristics of different normalization approaches based on recent studies:

| Normalization Method | Optimal Use Case | Advantages | Limitations |

|---|---|---|---|

| Multiple Reference Genes (2-3 validated) | Most qPCR studies with limited targets | Well-established, MIQE-compliant | Requires validation; reference assays consume sample that could otherwise be used for target genes [1] [4] |

| Global Mean (GM) | Large gene sets (>55 genes) | Data-driven, no pre-selection | Requires many genes, not for small panels [1] |

| NORMA-Gene | Studies with ≥5 target genes | Reduces variance effectively, fewer resources | Less familiar to reviewers [3] |

| Quantile Normalization | High-throughput qPCR across multiple plates | Corrects plate effects, robust distribution alignment | Complex implementation, assumes same distribution [5] |

| Pairwise/Triplet Normalization | miRNA studies, diagnostic panels | High accuracy, model stability | Computational complexity [7] |

Experimental Protocols

Protocol 1: Reference Gene Validation for qPCR Normalization

Purpose: To identify and validate stable reference genes for specific experimental conditions.

Materials:

- cDNA samples from all experimental conditions

- qPCR reagents and instrument

- Primers for candidate reference genes (minimum 6-8 candidates recommended)

Procedure:

- Select Candidate Genes: Choose 6-8 candidate reference genes from different functional classes [4]

- qPCR Amplification: Run all candidates across all experimental samples (minimum 3 biological replicates per condition)

- Data Quality Control:

- Stability Analysis:

- Final Selection: Choose 2-3 most stable genes with different biological functions [1]

Protocol 2: Global Mean Normalization Implementation

Purpose: To implement global mean normalization when profiling large gene sets.

Materials:

- qPCR data for all genes across all samples

- Statistical software (R, Python, or specialized packages)

Procedure:

- Data Curation:

- Calculate Global Mean:

- Compute average Cq value of all genes for each sample [1]

- Normalize Expression:

- Subtract sample-specific global mean from each gene's Cq value

- Alternatively, use the sample-specific global mean in place of the reference gene Cq when computing ΔCq for 2^(-ΔΔCq) calculations

- Performance Validation:
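The calculation and normalization steps above can be sketched in a few lines. This is a minimal sketch that assumes the data have already been curated and that every gene is measured in every sample; the three-gene panel is far below the >55 genes cited for this method and is used only to keep the example readable. The sample and gene names are hypothetical.

```python
from statistics import mean

def global_mean_normalize(cq):
    """Subtract each sample's mean Cq from every gene in that sample.

    cq maps sample name -> {gene name: Cq}. Returns the same structure
    holding global-mean-normalized values (a ΔCq per gene).
    """
    normalized = {}
    for sample, genes in cq.items():
        sample_mean = mean(genes.values())  # global mean for this sample
        normalized[sample] = {g: v - sample_mean for g, v in genes.items()}
    return normalized

# Hypothetical data: S2 has one cycle more everywhere (e.g. half the input)
raw = {
    "S1": {"G1": 20.0, "G2": 24.0, "G3": 28.0},
    "S2": {"G1": 21.0, "G2": 25.0, "G3": 29.0},
}
norm = global_mean_normalize(raw)
```

After normalization the two samples become identical, which is the intended effect when the only difference between them is the amount of input material.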

Research Reagent Solutions

| Reagent Category | Specific Examples | Function in Normalization |

|---|---|---|

| Reference Gene Assays | RPS5, RPL8, HMBS, HPRT1, HSP90AA1, B2M | Stable endogenous controls for sample-to-sample variation [1] [3] |

| RNA Quality Tools | RNeasy Mini Kits, QIAzol Lysis Reagent, DNase treatment kits | Ensure input RNA quality and genomic DNA removal [3] [4] |

| qPCR Master Mixes | SYBR Green, TaqMan probes, Power SYBR Green chemistry | Consistent amplification chemistry across samples [2] [8] |

| Stability Analysis Software | geNorm, NormFinder, BestKeeper, RefFinder | Algorithmic assessment of reference gene stability [1] [3] [4] |

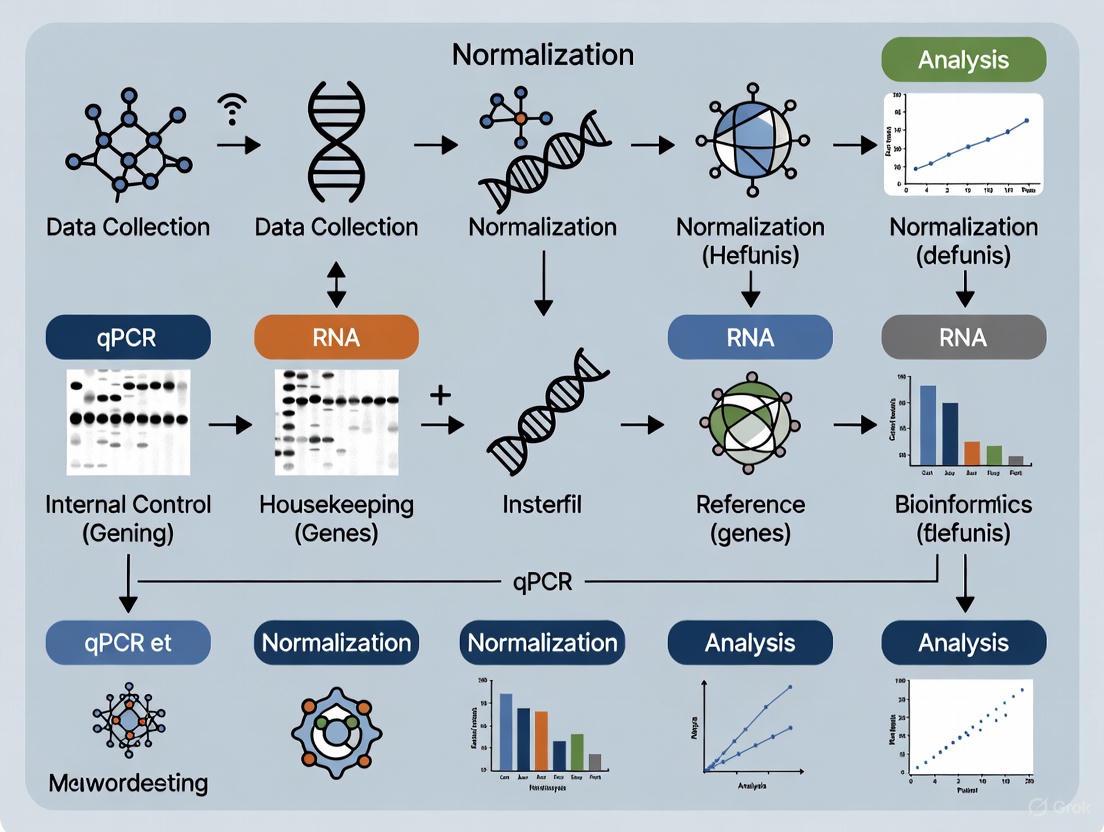

Workflow Diagram: qPCR Normalization Strategy Selection

Technical Note: Statistical Approaches Beyond 2^(-ΔΔCq)

While the 2^(-ΔΔCq) method remains widely used, recent research suggests alternative statistical approaches can provide enhanced rigor. Analysis of Covariance (ANCOVA) offers greater statistical power and isn't affected by variability in qPCR amplification efficiency. ANCOVA uses raw Cq values as the response variable in a linear model, providing a flexible multivariable approach to differential expression analysis [9].

Quantitative real-time PCR (qPCR) is a powerful technique for quantifying nucleic acids, but its accuracy and reproducibility are heavily influenced by multiple sources of variation. Understanding and controlling these variables is crucial for generating reliable, publication-quality data, especially in the context of normalizing qPCR data for gene expression studies.

Variation in a qPCR experiment can be categorized into three main types: system variation (inherent to the measuring equipment and reagents), biological variation (true variation in target quantity among samples within the same group), and experimental variation (the measured variation which estimates biological variation) [10]. System variation can significantly impact experimental variation, making its minimization a primary goal during experimental design and execution [10].

Pre-Analytical Variation

Pre-analytical variation encompasses all inconsistencies occurring before the qPCR run itself, from sample collection to cDNA synthesis.

Sample Collection and Storage

The initial steps of handling biological material introduce significant variability. Using a dedicated pre-PCR workspace, physically separated from post-PCR areas, is essential to prevent contamination from amplified PCR products [11]. Samples should be stored correctly; DNA is best preserved at -20°C or -70°C under slightly basic conditions to prevent depurination [11].

Nucleic Acid Extraction and Quality Assessment

The quality of the starting template is paramount. Inaccurate quantification of nucleic acid concentration or the presence of inhibitors can severely skew results.

- Purity and Concentration: Use a spectrophotometer or fluorometer to assess sample quality and concentration. A 260/280 nm absorbance ratio within 1.8-2.0 indicates pure DNA [11].

- Inhibitors: Template material containing inhibitors is a common cause of poor amplification efficiency, unusually shaped amplification curves, and irreproducible data [12]. Diluting the input sample can sometimes mitigate this effect [12].

Reverse Transcription and cDNA Synthesis

The reverse transcription step, crucial for gene expression analysis, is a major source of variability.

- gDNA Contamination: Genomic DNA (gDNA) contamination in RNA samples can lead to falsely early Cq values. A recommended corrective step is to treat RNA samples with DNase before reverse transcription [12].

- Reagent Quality: Degraded reagents or inefficient reverse transcription can lead to failed reactions and "no data" outcomes [12]. Using master mixes that include reagents to remove gDNA and inhibit RNase activity is a best practice [11].

Analytical Variation

Analytical variation arises during the setup and execution of the qPCR reaction.

Reagent Quality and Pipetting

- Reagent Integrity: Degraded reagents, such as dNTPs or master mix, can result in a lower-than-expected amplification plateau [12]. Aliquoting reagents prevents degradation from multiple freeze-thaw cycles and reduces contamination risk [11].

- Pipetting Error: This is a primary contributor to system variation and can lead to high variability between technical replicates (Cq differences > 0.5 cycles) [12]. To improve precision, calibrate pipettes regularly, use positive-displacement pipettes with filtered tips, and ensure proper vertical pipetting technique [12] [10].

Reaction Plate Setup and Instrumentation

- Plate Preparation: Bubbles in a well can cause baseline drift and a jagged amplification signal [12]. After sealing the plate, centrifuging it removes bubbles and ensures all liquid is at the bottom of the wells [10].

- Instrument Performance: Regular maintenance, including temperature verification and calibration, is necessary for optimal instrument performance [10].

Assay Design and Optimization

- Primer Design: Poor primer specificity can cause multiple issues, including unexpected data values, earlier-than-anticipated Cq values (due to non-specific amplification or primer-dimer formation), and irreproducible data [12]. Primers should be designed to have similar melting temperatures (within 2-5°C), and their formation of primer-dimers should be checked with melt curve analysis [12] [11].

- Amplification Efficiency: Poor PCR efficiency, potentially caused by an inappropriate annealing temperature or unanticipated variants in the target sequence, leads to unusually shaped amplification curves and later-than-expected Cq values [12]. Assay efficiency should be optimized and tested against carefully quantified controls [12].

The following workflow summarizes the key sources of variation and their impact on the qPCR process:

Frequently Asked Questions (FAQs)

Q1: My No Template Control (NTC) shows exponential amplification. What is wrong? This indicates contamination, likely from laboratory exposure to the target sequence or from the reagents themselves. Corrective steps include cleaning the work area with 10% bleach, preparing the reaction mix in a clean lab space separated from template sources, and ordering new reagent stocks [12].

Q2: The amplification curves for my samples are jagged. What could be the cause? A jagged signal throughout the amplification plot is often due to poor amplification, a weak probe signal, or a mechanical error. Ensure a sufficient amount of probe is used, try a fresh batch of probe, and mix the primer/probe/master solution thoroughly during reaction setup [12].

Q3: My technical replicates are too variable (Cq difference > 0.5 cycles). How can I fix this? High variability between technical replicates is commonly caused by pipetting error or insufficient mixing of solutions. Calibrate your pipettes, use positive-displacement pipettes with filtered tips, and mix all solutions thoroughly during preparation [12].

Q4: I see a much lower plateau phase than expected. What does this mean? A low plateau suggests limiting or degraded reagents (e.g., dNTPs or master mix), an inefficient reaction, or incorrect probe concentration. Check your master mix calculations and repeat the experiment with fresh stock solutions [12].

Q5: What is the difference between technical and biological replicates? Technical replicates are repetitions of the same sample reaction, helping to estimate system precision and identify outliers. Biological replicates are different samples from the same experimental group, accounting for the natural variation within a population. Both are essential for robust statistical analysis [10].

Troubleshooting Guide for Common qPCR Issues

The table below summarizes frequent problems, their potential causes, and recommended solutions based on observed amplification curve anomalies and data outputs.

| Observation | Potential Causes | Corrective Steps |

|---|---|---|

| Exponential amplification in NTC [12] | Contamination from lab environment or reagents. | Clean work area with 10% bleach; use new reagent stocks; prepare mix in a clean lab [12] [11]. |

| High noise in early cycles; data point looping [12] | Baseline set too early; too much template. | Reset baseline; dilute input sample to within linear range [12]. |

| Unusually shaped amplification; late Cq [12] | Poor reaction efficiency; inhibitors; suboptimal annealing temperature. | Optimize primer concentration and annealing temp; redesign primers; dilute sample to reduce inhibitors [12]. |

| Plateau much lower than expected [12] | Limiting or degraded reagents; inefficient reaction. | Check master mix calculations; repeat with fresh stock solutions [12] [11]. |

| Cq much earlier than anticipated [12] | gDNA contamination in RNA; high primer-dimer; poor specificity. | DNase-treat RNA; redesign primers for specificity; optimize annealing temperature [12]. |

| Jagged amplification signal [12] | Poor amplification/weak probe; mechanical error; bubble in well. | Use more probe; try fresh probe; mix solutions thoroughly; centrifuge plate [12] [10]. |

| Variable technical replicates (Cq >0.5 cycles apart) [12] | Pipetting error; insufficient mixing; low expression. | Calibrate pipettes; use filtered tips; mix solutions thoroughly; add more sample [12]. |

| Irreproducible sample comparisons [12] | Low amplification efficiency; RNA degradation; inaccurate dilutions. | Redesign primers; repeat with fresh reagents/sample; check sample dilutions [12]. |

The Scientist's Toolkit: Essential Reagents and Materials

The following table lists key reagents and materials crucial for minimizing variation and ensuring successful qPCR experiments.

| Item | Function | Best Practice / Rationale |

|---|---|---|

| Filtered Pipette Tips [12] [11] | To prevent aerosol contamination from entering the pipette barrel and cross-contaminating samples. | Use consistently for all pre-PCR setup. |

| Master Mix [11] | A pre-mixed solution containing core PCR reagents (e.g., Taq polymerase, dNTPs, buffer). | Reduces pipetting steps, well-to-well variation, and improves reproducibility. |

| Nuclease-Free Water [11] | Used to dilute samples and as a component in reactions. | Should be autoclaved and filtered through a 0.45-micron filter dedicated to pre-PCR use. |

| UNG (Uracil-N-Glycosylase) [11] | Enzyme used in some master mixes to prevent carryover contamination from previous PCR products. | Renders prior dUTP-containing amplicons non-amplifiable. |

| Passive Reference Dye [10] | A dye included in the reaction at a fixed concentration to normalize for non-PCR-related fluorescence variations. | Corrects for differences in well volume and optical anomalies, improving precision. |

| DNase I [12] | Enzyme that degrades genomic DNA. | Critical for RNA work to prevent false positives from gDNA contamination during RT-qPCR. |

| Stable Reference Genes (RGs) [1] [13] | Genes used for data normalization to correct for technical variation. | Must be validated for stability under specific experimental conditions; using a combination of RGs is often best. |

Normalization Strategies to Minimize Variation

Normalization is a critical process to minimize technical variability and reveal true biological variation [1]. The choice of strategy can significantly impact data interpretation.

Reference Gene (RG) Normalization

This is the most common method, using internal control genes presumed to be stably expressed across all samples.

- Validation is Crucial: So-called "housekeeping" genes (e.g., GAPDH, ACTB) are not always stable. Their expression can vary with tissue type, disease state, and experimental conditions [1] [13]. It is essential to validate RG stability for each specific experimental setup.

- Use Multiple RGs: The MIQE guidelines recommend using more than one verified reference gene [1]. Using a combination of stable genes can balance out minor fluctuations in individual genes. geNorm and NormFinder are standard algorithms used to rank candidate RGs by their expression stability [1] [13].
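One common way to combine several reference genes is to average their Cq values before computing ΔCq. When amplification efficiencies are equal, the arithmetic mean of Cq values corresponds to the geometric mean of the underlying relative quantities. The sketch below assumes 100% efficiency for all assays; the function name and Cq values are hypothetical.

```python
from statistics import mean

def normalized_quantity(target_cq, ref_cqs):
    """Relative quantity of a target normalized to several reference genes.

    Assumes 100% amplification efficiency for every assay, so the
    normalizer is simply the arithmetic mean of the reference Cq values.
    """
    delta_cq = target_cq - mean(ref_cqs)
    return 2 ** (-delta_cq)

# Hypothetical sample: one target and three validated reference genes
quantity = normalized_quantity(25.0, [20.0, 22.0, 21.0])  # ΔCq = 4
```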

Global Mean (GM) Normalization

This method uses the geometric mean of the expression of a large number of genes (often tens to hundreds) as the normalizer.

- When to Use: The GM method can be a superior alternative to RGs, particularly when profiling many genes. One study found GM to be the best-performing method for reducing technical variability when more than 55 genes were profiled [1].

- Advantage: It does not rely on the stability of a small number of pre-selected genes, potentially offering a more robust normalization factor.

The Gene Combination Method

An emerging approach involves finding an optimal combination of a fixed number (k) of genes whose individual expressions balance each other across all conditions of interest, even if the individual genes are not particularly stable [13]. Such combinations can be identified in silico using comprehensive RNA-Seq databases before experimental validation [13].

Quantitative Polymerase Chain Reaction (qPCR) is a fundamental technique for quantifying nucleic acids, with absolute and relative quantification representing two principal analytical pathways. The choice between these methods significantly impacts data interpretation and biological conclusions in research and diagnostic applications. Within the broader context of normalization methods for qPCR data research, understanding this distinction is crucial for experimental accuracy. Absolute quantification determines the exact copy number or concentration of a target sequence, while relative quantification measures fold changes in gene expression between different samples. This technical support center provides troubleshooting guidance and detailed protocols to help researchers navigate these methodologies effectively.

Core Concepts: Absolute and Relative Quantification

What is Absolute Quantification?

Absolute quantification is a method that calculates the exact numerical quantity of a target nucleic acid sequence in a sample, typically expressed as copy number or concentration [14] [15]. This approach requires comparison to standards of known concentration to generate a standard curve, against which unknown samples are measured [16].

Key Principles:

- Relies on external standards with known concentrations

- Generates absolute numerical values (e.g., copies/μL)

- Requires precise serial dilutions of standard material

- Accounts for amplification efficiency through standard curve validation

What is Relative Quantification?

Relative quantification determines the fold difference in target abundance between test and reference samples (such as untreated controls), normalizing to an endogenous reference gene [14] [17] [15]. The result is expressed as a ratio relative to the calibrator sample, which is set to a baseline of 1-fold change [16].

Key Principles:

- Measures changes in expression relative to a control sample

- Normalizes data using internal reference genes

- Expresses results as fold-changes rather than absolute numbers

- Requires validation of reference gene stability under experimental conditions

Comparative Analysis: Absolute vs. Relative Quantification

Table 1: Fundamental differences between absolute and relative quantification approaches

| Parameter | Absolute Quantification | Relative Quantification |

|---|---|---|

| Output | Exact copy number or concentration | Fold-change relative to calibrator |

| Standard Requirement | Standards of known absolute quantity | Relative standards sufficient |

| Reference | External standard curve | Endogenous control/reference gene |

| Primary Application | Viral load quantification, genetically modified organism copy number | Gene expression studies, comparative transcriptomics |

| Data Interpretation | Direct quantitative measurement | Ratio-based comparison |

| Key Assumption | Equal amplification efficiency between standard and target | Stable reference gene expression across conditions |

Experimental Protocols and Workflows

Absolute Quantification Workflow

Detailed Methodology:

Standard Preparation: Create standards using plasmid DNA, PCR fragments, or in vitro transcribed RNA with precisely determined concentrations [16]. For RNA quantification, RNA standards are preferred as they account for reverse transcription efficiency.

Concentration Calculation: Calculate copy number using appropriate formulas:
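A commonly used approximation for double-stranded DNA standards is copies = (mass in g × Avogadro's number) / (length in bp × 660 g/mol per bp). The sketch below implements that relationship. The 660 g/mol figure is an average for a base pair; for single-stranded RNA standards an average of roughly 340 g/mol per nucleotide is often substituted, which is an approximation rather than a value taken from this guide. The function and parameter names are illustrative.

```python
AVOGADRO = 6.022e23  # molecules per mole

def copies_per_ul(conc_ng_per_ul, length, g_per_mol_per_unit=660.0):
    """Estimate template copies per microlitre from a mass concentration.

    length is in base pairs (dsDNA) or nucleotides (ssRNA);
    g_per_mol_per_unit is the average mass of one bp or nt (approximation).
    """
    mass_g = conc_ng_per_ul * 1e-9            # ng -> g
    molar_mass = length * g_per_mol_per_unit  # g/mol for the whole template
    return mass_g * AVOGADRO / molar_mass

# Hypothetical 3000 bp plasmid standard at 10 ng/µL
plasmid_copies = copies_per_ul(10.0, 3000)  # about 3.04e9 copies/µL
```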

Standard Curve Generation: Prepare at least five serial dilutions spanning the expected concentration range of unknown samples. Each dilution should differ by 10-fold or less.

qPCR Execution: Amplify standard dilutions and unknown samples simultaneously using identical reaction conditions.

Data Analysis: Plot threshold cycle (Ct) values against the logarithm of standard concentrations. Use linear regression to generate the standard curve equation, then interpolate unknown sample concentrations from their Ct values.

Quality Control: Ensure amplification efficiency falls between 90-110% (slope of -3.1 to -3.6), with correlation coefficient (R²) >0.99 [17].
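The data analysis and quality control steps can be sketched with an ordinary least-squares fit of Cq against log10 concentration. The dilution series below is invented so that the slope is exactly -3.32; the function names are illustrative, and real data would normally include replicate wells at each dilution.

```python
from math import log10

def fit_standard_curve(concentrations, cq_values):
    """Fit Cq = slope * log10(concentration) + intercept.

    Returns (slope, intercept, r_squared, efficiency_percent).
    """
    x = [log10(c) for c in concentrations]
    n = len(x)
    mx, my = sum(x) / n, sum(cq_values) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in cq_values)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, cq_values))
    slope = sxy / sxx
    intercept = my - slope * mx
    r_squared = sxy ** 2 / (sxx * syy)
    efficiency_pct = (10 ** (-1 / slope) - 1) * 100
    return slope, intercept, r_squared, efficiency_pct

def interpolate_quantity(cq, slope, intercept):
    """Read an unknown sample's quantity off the fitted curve."""
    return 10 ** ((cq - intercept) / slope)

# Hypothetical five-point, 10-fold dilution series (copies per reaction)
standards = [1e7, 1e6, 1e5, 1e4, 1e3]
cqs = [15.00, 18.32, 21.64, 24.96, 28.28]
slope, intercept, r2, eff = fit_standard_curve(standards, cqs)
unknown = interpolate_quantity(21.64, slope, intercept)  # close to 1e5 copies
```

A run would pass the quality criteria above when `eff` lies between 90 and 110 and `r2` exceeds 0.99.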

Relative Quantification Workflow

Detailed Methodology:

Reference Gene Selection: Identify and validate stable reference genes appropriate for your experimental system using algorithms like geNorm or NormFinder [1] [17] [18]. Normalization to multiple reference genes increases accuracy [17].

Efficiency Validation:

- Prepare five 10-fold dilutions of cDNA

- Run qPCR with both target and reference gene primers

- Plot Ct values against log dilution factor

- Calculate amplification efficiency: E = 10^(-1/slope) [17]

- Ideal amplification factor E = 2 (100% efficiency, where percent efficiency = (E - 1) × 100), with 90-110% generally acceptable

Calculation Methods:
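Two calculation models referred to elsewhere in this guide are the 2^(-ΔΔCq) model, which assumes 100% efficiency, and the efficiency-corrected Pfaffl model. The sketch below implements the Pfaffl ratio, where each efficiency is expressed as an amplification factor (2.0 corresponds to 100%). With both factors set to 2.0 it reduces to 2^(-ΔΔCq). The argument names and example values are hypothetical.

```python
def pfaffl_ratio(e_target, e_ref,
                 cq_target_control, cq_target_test,
                 cq_ref_control, cq_ref_test):
    """Efficiency-corrected expression ratio (test relative to control).

    e_target and e_ref are amplification factors per cycle,
    e.g. 2.0 for 100% efficiency or 1.9 for 90%.
    """
    delta_target = cq_target_control - cq_target_test
    delta_ref = cq_ref_control - cq_ref_test
    return (e_target ** delta_target) / (e_ref ** delta_ref)

# Hypothetical Cq values: target drops by 2 cycles, reference unchanged
ideal = pfaffl_ratio(2.0, 2.0, 25.0, 23.0, 20.0, 20.0)      # 4.0
corrected = pfaffl_ratio(1.9, 2.0, 25.0, 23.0, 20.0, 20.0)  # about 3.61
```

The gap between the two results shows how an uncorrected efficiency shortfall inflates the apparent fold change.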

Normalization Strategies for Reliable Data

Reference Gene Normalization

Reference genes (housekeeping genes) serve as internal controls to correct for technical variations in RNA quality, cDNA synthesis efficiency, and sample loading [18]. Proper validation is essential, as commonly used reference genes like GAPDH and β-actin can vary significantly under different experimental conditions [18].

Stability Assessment Methods:

- geNorm: Ranks reference genes by stability using pairwise variation [1] [18]

- NormFinder: Evaluates intra- and inter-group variation [1] [3]

- BestKeeper: Uses raw Ct values for stability assessment [3]

Recent research in canine gastrointestinal tissues identified RPS5, RPL8, and HMBS as the most stable reference genes across different pathological conditions [1].

Alternative Normalization Approaches

Table 2: Comparison of normalization methods for qPCR data analysis

| Method | Principle | Requirements | Advantages | Limitations |

|---|---|---|---|---|

| Reference Genes | Normalization to stably expressed internal controls | 2+ validated reference genes | Well-established, widely accepted | Reference gene stability must be verified |

| Global Mean (GM) | Normalization to mean expression of all genes | Large gene sets (>55 genes recommended) | No need for reference gene validation | Requires profiling many genes [1] |

| NORMA-Gene | Algorithm-based normalization using least squares regression | Expression data of ≥5 genes | Reduces variance effectively, fewer resources | Less established in some fields [3] |

The global mean method has demonstrated superior performance in reducing technical variability when profiling large gene sets (>55 genes), outperforming reference gene-based normalization in canine intestinal tissue studies [1]. Similarly, NORMA-Gene provided more reliable normalization than reference genes in sheep liver studies, effectively reducing variance in target gene expression with fewer resource requirements [3].

Troubleshooting Guides and FAQs

Frequently Asked Questions

Q1: When should I choose absolute over relative quantification?

- Absolute: When you need to know exact copy numbers (viral load, bacterial counts, GMO copy number) [14]

- Relative: When measuring fold-changes in gene expression between experimental conditions [14] [17]

Q2: My amplification efficiency falls outside 90-110%. What should I do?

- Redesign primers with better specificity

- Optimize reaction conditions (Mg²⁺ concentration, annealing temperature)

- Check for PCR inhibitors in sample preparation

- Use the Pfaffl method for relative quantification if efficiencies differ [17]

Q3: How many reference genes should I use for reliable normalization?

- MIQE guidelines recommend at least two validated reference genes [3]

- Use algorithms like geNorm to determine the optimal number of reference genes (pairwise variation V < 0.15) [18]

- Normalization with multiple reference genes increases accuracy compared to single genes [17]

Q4: What are the implications of different amplification efficiencies between sample and standard in absolute quantification?

- Significant quantification errors (up to orders of magnitude) can occur if efficiencies differ [20]

- Consider the One-Point Calibration (OPC) method that corrects for efficiency differences [20]

- Always verify that your standard and sample have similar efficiencies

Troubleshooting Common Issues

Problem: High variation between technical replicates

- Potential Causes: Pipetting errors, inadequate mixing, bubble formation in wells

- Solutions: Use calibrated pipettes, mix reagents thoroughly, centrifuge plates before run

Problem: Standard curve with poor linearity (R² < 0.99)

- Potential Causes: Improper standard dilution, degradation of standards, inhibitor carryover

- Solutions: Prepare fresh standard dilutions, aliquot and freeze standards, include purification steps

Problem: Reference gene shows variable expression across samples

- Potential Causes: Experimental conditions affect reference gene stability

- Solutions: Validate reference genes for your specific conditions, use multiple reference genes, consider global mean normalization for large gene sets [1]

Problem: Discrepancies between RNAseq and qPCR results

- Potential Causes: Different normalization strategies, probe vs. read coverage biases

- Solutions: Use consistent normalization approaches, verify qPCR with multiple reference genes [1]

Research Reagent Solutions

Table 3: Essential reagents and materials for qPCR quantification experiments

| Reagent/Material | Function | Key Considerations |

|---|---|---|

| Standard Templates (Plasmid DNA, in vitro transcribed RNA) | Absolute quantification standards | Known copy number, identical primer binding sites to target [16] |

| Primer Pairs | Target amplification | Validate efficiency (90-110%), specific amplification, avoid primer-dimers |

| Reverse Transcriptase | cDNA synthesis (for RNA targets) | High efficiency, minimal RNase activity, consistent across samples |

| qPCR Master Mix | Amplification reaction | Contains polymerase, dNTPs, buffers, fluorescence detection chemistry |

| Reference Gene Assays | Normalization control | Validated for stability in your experimental system [1] [18] |

| RNA Isolation Kit | Nucleic acid purification | High purity (A260/280 ~2.0), intact RNA, minimal genomic DNA contamination |

The choice between absolute and relative quantification pathways depends primarily on the research question and required output. Absolute quantification provides concrete numerical values essential for diagnostic applications and precise copy number determination, while relative quantification excels in comparative gene expression studies. Critically, proper normalization remains fundamental to both approaches, with emerging methods like global mean normalization and NORMA-Gene offering compelling alternatives to traditional reference genes, particularly in complex experimental systems. By implementing the troubleshooting guides and standardized protocols outlined in this technical support center, researchers can enhance the reliability and reproducibility of their qPCR data, ensuring accurate biological interpretations in their research and drug development efforts.

Core Principles of the 2^(-ΔΔCq) Method for Relative Quantification

The 2^(-ΔΔCq) method (commonly known as the 2^(-ΔΔCt) method) is a foundational strategy in quantitative real-time PCR (qPCR) for determining relative changes in gene expression [21]. This approach calculates the fold change in expression of a target gene between an experimental sample and a reference sample (such as an untreated control), normalized to one or more reference genes used as an internal control [14]. Its widespread adoption is largely due to its convenience, as it directly uses the threshold cycle (Cq or Ct) values generated by the qPCR instrument, eliminating the need for constructing standard curves in every run [22] [23].

Core Principles and Theoretical Foundation

The 2^(-ΔΔCq) method is built upon several key principles and mathematical assumptions that researchers must understand to apply it correctly.

The Mathematical Workflow

The calculation follows a clear, stepwise procedure to arrive at the final fold-change value [23]:

1. Calculate ΔCq for Each Sample: For every sample (both test and control), subtract the Cq of the reference gene from the Cq of the target gene.

- ΔCq (test) = Cq (target, test) - Cq (ref, test)

- ΔCq (control) = Cq (target, control) - Cq (ref, control)

2. Calculate ΔΔCq: Subtract the ΔCq of the control sample from the ΔCq of the test sample.

- ΔΔCq = ΔCq (test) - ΔCq (control)

3. Calculate Fold Change: Use the negated ΔΔCq as the exponent for base 2.

- Fold Change = 2^(-ΔΔCq)

The final value represents the fold change of your gene of interest in the test condition relative to the control, normalized to the reference gene(s) [23]. A value of 1 indicates no change, a value above 1 indicates upregulation, and a value below 1 indicates downregulation.
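The three steps above can be sketched in a few lines of Python. This is a minimal illustration that assumes roughly 100% amplification efficiency for both assays; the function name `fold_change` and the Cq values are hypothetical and not taken from any cited study.

```python
def fold_change(cq_target_test, cq_ref_test, cq_target_ctrl, cq_ref_ctrl):
    """Return 2^(-ΔΔCq) for one target gene (assumes ~100% efficiency)."""
    # Step 1: ΔCq per sample = Cq(target) - Cq(reference)
    delta_cq_test = cq_target_test - cq_ref_test
    delta_cq_ctrl = cq_target_ctrl - cq_ref_ctrl
    # Step 2: ΔΔCq = ΔCq(test) - ΔCq(control)
    delta_delta_cq = delta_cq_test - delta_cq_ctrl
    # Step 3: fold change = 2^(-ΔΔCq)
    return 2 ** (-delta_delta_cq)


# Hypothetical mean Cq values, for illustration only
fc = fold_change(cq_target_test=24.0, cq_ref_test=18.0,
                 cq_target_ctrl=26.0, cq_ref_ctrl=18.0)
print(fc)  # 4.0 (target is about four-fold higher in the test sample)
```

Here the target crosses the threshold two cycles earlier in the test sample while the reference gene is unchanged, so ΔΔCq is -2 and the fold change is 2^2.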

Foundational Assumptions

The validity of the 2^(-ΔΔCq) method rests on three critical assumptions [22] [23]:

- Optimal PCR Efficiency: The method assumes that the amplification efficiencies of both the target and reference genes are 100%, meaning the amount of PCR product doubles every cycle (represented by the base of 2 in the formula) [22].

- Equal Efficiencies: It assumes that the amplification efficiencies of the target and reference genes are approximately equal [14].

- Stable Reference Genes: The reference gene(s) must be stably expressed across all experimental conditions and unaffected by the experimental treatment [23].

Comparison of qPCR Quantification Methods

The 2^(-ΔΔCq) method is one of several approaches for analyzing qPCR data. Understanding its position relative to other methods provides context for its appropriate application [14] [24].

| Method | Core Principle | Key Advantages | Key Limitations | Ideal Use Case |

|---|---|---|---|---|

| 2^(-ΔΔCq) (Relative) | Calculates fold change relative to a calibrator sample, normalized to a reference gene [21]. | No standard curve needed; increased throughput; simple calculation [14]. | Relies on strict efficiency and reference gene stability assumptions [22]. | Large number of samples, few genes, when assumptions are validated [23]. |

| Standard Curve (Relative) | Determines relative quantity from a standard curve, normalized to a reference gene [14]. | Less optimization than comparative CT; runs target and control in separate wells [14]. | Requires running a standard curve, uses more wells [14]. | When amplification efficiencies are not equal or are unknown [14]. |

| Standard Curve (Absolute) | Relates Cq to a standard curve with known starting quantities to find absolute copy number [24]. | Provides absolute copy number, not just fold change [24]. | Requires pure, accurately quantified standards; prone to dilution errors [14]. | Determining absolute viral copies, transgene copies [14] [24]. |

| Digital PCR (Absolute) | Partitions sample into many reactions and counts positive vs. negative partitions [14]. | No standards needed; highly precise; tolerant to inhibitors [14]. | Requires specialized instrumentation; limited dynamic range. | Absolute quantification of rare alleles, copy number variation [14]. |

Troubleshooting Guides

FAQ: Validating the 2^(-ΔΔCq) Method

Q1: How do I validate that my primers have near-100% and equal amplification efficiencies?

A validation experiment is required before using the 2^(-ΔΔCq) method [14]. Prepare a serial dilution (e.g., 10-fold steps) of your cDNA sample and run it with both your target and reference gene primers. Plot the Cq values against the logarithm of the dilution factor. The slope of the resulting standard curve should be between -3.1 and -3.6, which corresponds to an efficiency between roughly 110% and 90% [25]. The efficiencies for the target and reference genes must be within 5% of each other to use this method reliably [23].
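The slope-to-efficiency conversion can be sketched as follows. This example assumes the standard relationship E = 10^(-1/slope) - 1 with Cq regressed on log10 of relative input; the function names and the dilution-series Cq values are hypothetical.

```python
def slope_and_efficiency(log10_input, cq_values):
    """Least-squares slope of Cq vs log10(relative input), and efficiency.

    Efficiency is returned as a fraction (1.0 means 100%).
    """
    n = len(cq_values)
    mean_x = sum(log10_input) / n
    mean_y = sum(cq_values) / n
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(log10_input, cq_values))
    sxx = sum((x - mean_x) ** 2 for x in log10_input)
    slope = sxy / sxx
    efficiency = 10 ** (-1.0 / slope) - 1.0
    return slope, efficiency


def efficiencies_match(eff_a, eff_b, tolerance=0.05):
    """True when two efficiencies differ by no more than `tolerance`."""
    return abs(eff_a - eff_b) <= tolerance


# Hypothetical 10-fold dilution series (log10 of relative input amount)
log10_input = [0, -1, -2, -3, -4]
target_cq = [18.0, 21.4, 24.8, 28.2, 31.6]
reference_cq = [15.0, 18.3, 21.6, 24.9, 28.2]

t_slope, t_eff = slope_and_efficiency(log10_input, target_cq)
r_slope, r_eff = slope_and_efficiency(log10_input, reference_cq)
print(f"target: slope={t_slope:.2f}, efficiency={t_eff:.1%}")
print(f"reference: slope={r_slope:.2f}, efficiency={r_eff:.1%}")
print("within 5%:", efficiencies_match(t_eff, r_eff))
```

A slope of about -3.32 corresponds to perfect doubling per cycle; in practice the fit quality (for example R²) should also be inspected, which this sketch omits.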

Q2: My reference gene seems to be regulated by the experimental treatment. What should I do?

Using an unstable reference gene is a major source of inaccurate results. You should [1]:

- Test multiple reference genes: Identify and use the most stable ones.

- Use a geometric mean of multiple genes: Combining several stable reference genes (e.g., the 2-3 most stable) increases normalization accuracy [1].

- Consider data-driven normalization: For high-throughput qPCR (dozens to hundreds of genes), methods like the Global Mean (GM) or Quantile Normalization can be more robust alternatives, as they use the entire dataset for normalization rather than relying on a few pre-selected genes [5] [1].

Q3: My fold change results seem biologically implausible. What could be wrong?

Implausible results often stem from violations of the method's core assumptions [22] [25]:

- Check PCR Efficiencies: Re-run the validation experiment. Even small efficiency differences between target and reference genes can lead to large miscalculations [25].

- Review Cq Values: Check for very high Cq values (e.g., >35), which indicate low template concentration and increased variability. Also, ensure the background fluorescence has been correctly handled, as improper subtraction can distort results [22].

- Re-inspect Raw Data: Always look at the amplification and melt curves for anomalies like primer-dimers or non-specific amplification, which can lead to inaccurate Cq calls [25].

Q4: Can I compare ΔCq or ΔΔCq values directly between different experimental runs or laboratories?

No, this is not recommended. Cq values are highly dependent on machine-specific settings, the chosen quantification threshold, and reagent efficiencies, which can vary between runs and laboratories [25]. The 2^(-ΔΔCq) calculation is designed for comparison within a single, optimally calibrated run. For comparisons across runs, the use of an inter-run calibrator sample is advised.

Research Reagent Solutions

The following table outlines essential materials and their critical functions in a typical 2^(-ΔΔCq) experiment.

| Reagent/Material | Function | Key Considerations |

|---|---|---|

| Specific Primers | To amplify the target and reference genes with high specificity. | Must be validated for efficiency and specificity. Amplicon length should be kept similar [24]. |

| qPCR Master Mix | Contains DNA polymerase, dNTPs, buffer, and fluorescent dye (e.g., SYBR Green) for detection. | Choice of dye or probe chemistry affects sensitivity and specificity [25]. |

| RNA/DNA Template | The sample material containing the genetic target to be quantified. | For gene expression, high-quality RNA with a high RIN is crucial. Input amount must be consistent [25]. |

| Reverse Transcriptase | (For gene expression) Converts RNA to cDNA for PCR amplification. | RT efficiency can be a major source of variation and should be kept consistent across samples [14]. |

| Nuclease-Free Water | Serves as a solvent and negative control. | Essential for preventing degradation of reagents and templates. |

| Validated Reference Genes | Used for normalization of technical variations. | Must be confirmed to be stable under your specific experimental conditions (e.g., GAPDH, ACTB, ribosomal genes) [22] [1]. |

Normalization is a critical step in the analysis of quantitative PCR (qPCR) data, serving to minimize technical variability introduced during sample processing so that the analysis focuses on true biological variation. Poor or omitted normalization can lead to severe data misinterpretation and irreproducible results, undermining research validity. This guide details the consequences of inadequate normalization and provides troubleshooting advice to help researchers avoid these common pitfalls, framed within the broader context of methodological rigor in qPCR research.

Frequently Asked Questions (FAQs)

1. What is the primary purpose of normalizing qPCR data? Normalization aims to eliminate technical variation introduced during sampling, RNA extraction, cDNA synthesis, and loading differences. This ensures that observed gene expression changes result from biological variation due to the experimental intervention and not from technical artifacts [1].

2. Why is using a single reference gene like GAPDH or ACTB often insufficient? Using a single reference gene is problematic because so-called "housekeeping" genes can vary under different physiological or pathological conditions. For example, studies have shown that GAPDH is not stable in models of age-induced neuronal apoptosis, and ACTB varies in ischemic/hypoxic conditions [26]. Relying on a single, unstable gene for normalization can introduce significant bias.

3. What are the minimum information guidelines for publishing qPCR experiments? The MIQE (Minimum Information for Publication of Quantitative Real-Time PCR Experiments) guidelines were established to standardize reporting and avoid misinterpretations. A key recommendation is using multiple, validated reference genes for reliable normalization, not just one [26] [9].

4. When can the global mean (GM) method be a good alternative to reference genes? The global mean of expression of all profiled genes can be a robust normalization strategy, particularly when a large number of genes (e.g., more than 55) are being assayed. One study found GM to be the best-performing method for reducing variability in complex sample sets [1].

5. How can poor normalization affect my final results? Poor normalization can skew normalized data, causing a significant bias. This can lead to both false-positive results (type I errors), where you believe an effect exists when it does not, and false-negative results (type II errors), where you miss genuine biological effects [27].

Troubleshooting Common Normalization Problems

Problem 1: Unstable Reference Genes

- Symptoms: High variability in target gene expression within the same treatment group; reference gene expression shows significant changes across experimental conditions.

- Causes: The chosen reference gene is regulated by the experimental treatment. This is common in processes like ageing or disease states. For instance, in a study of ageing mouse brains, common reference genes like Hmbs, Sdha, and ActinB showed statistically significant variation in structures like the hippocampus and cerebellum [26].

- Solutions:

- Validate Gene Stability: Prior to your main experiment, test candidate reference genes using algorithms like GeNorm or NormFinder to rank their stability in your specific experimental system [26] [1].

- Use Multiple Genes: Never rely on a single gene. Normalize using a normalization factor based on the geometric mean of several (at least two) of the most stable reference genes [26].

- Choose Functionally Diverse Genes: If using multiple reference genes, avoid selecting genes from the same functional pathway (e.g., multiple ribosomal proteins), as they may be co-regulated. Incorporate genes with distinct cellular functions for a more robust baseline [1].

Problem 2: High Technical Variation After Normalization

- Symptoms: Inconsistent results between biological replicates; high coefficient of variation (CV) after normalization.

- Causes: Inconsistency can stem from RNA degradation, minimal starting material, or pipetting errors. Furthermore, the normalization method itself may be ineffective at removing non-biological noise [28].

- Solutions:

- Check RNA Quality: Prior to reverse transcription, check RNA concentration and integrity. A 260/280 ratio outside the ideal 1.9–2.0 range can indicate contamination, and a smeared gel can indicate degradation [28].

- Consider Alternative Methods: For high-throughput qPCR profiling dozens of genes, data-driven normalization methods like Quantile Normalization or the NORMA-Gene algorithm can be more robust than standard housekeeping gene approaches [5] [27].

- Improve Pipetting Technique: Perform technical replicates and ensure proficiency to minimize pipetting errors [29] [28].

Problem 3: Inability to Reproduce Published Findings

- Symptoms: Your qPCR results do not match previously published data, even when using the same reference genes.

- Causes: A widespread reliance on the 2^(-ΔΔCT) method often overlooks critical factors such as variations in amplification efficiency and reference gene stability between different experimental setups [9]. Furthermore, a lack of shared raw data and analysis code prevents proper evaluation [9].

- Solutions:

- Go Beyond 2^(-ΔΔCT): Consider using statistical methods like ANCOVA (Analysis of Covariance), which can offer greater statistical power and robustness by directly accounting for efficiency variations [9].

- Adhere to FAIR Principles: Share your raw qPCR fluorescence data and detailed analysis scripts. This allows others to evaluate potential biases and reproduce your findings accurately [9].

- Use Automated, Reproducible Tools: Leverage open-source analysis software like Auto-qPCR to create a systematic, error-minimized workflow from raw data to final analysis, reducing "user-dependent" variation [30].

Reference Gene Stability Across Conditions

The table below summarizes quantitative data from a study investigating reference gene stability in different mouse brain structures during ageing, illustrating that a gene stable in one context may be unstable in another [26].

Table 1: Stability of Common Reference Genes in Ageing Mouse Brain Structures

P-values from ANOVA test for expression differences across ages; a lower p-value indicates less stability.

| Gene | Cortex | Hippocampus | Striatum | Cerebellum |

|---|---|---|---|---|

| Ppib | 0.0407 * | 0.2252 | 0.7391 | 0.5919 |

| Hmbs | 0.5114 | 0.0078 ** | 0.0344 * | 0.0047 ** |

| ActinB | 0.4707 | 0.0011 ** | 0.4552 | <0.0001 *** |

| Sdha | 0.0017 ** | 0.0045 ** | 0.1322 | <0.0001 *** |

| GAPDH | 0.0501 | 0.0279 * | 0.5062 | 0.0593 |

Significance: * p<0.05; ** p<0.01; *** p<0.001

Comparison of Normalization Methods

Different normalization strategies offer varying levels of effectiveness in reducing technical variability. The following table compares several common approaches.

Table 2: Performance Comparison of qPCR Normalization Methods

| Method | Principle | Best Use Case | Key Advantage | Key Limitation |

|---|---|---|---|---|

| Single Reference Gene | Adjusts data based on one stably expressed gene | Quick, low-cost pilot studies; when a gene's stability is thoroughly validated in the specific system | Simplicity and low resource requirement | High risk of bias; many classic housekeeping genes (GAPDH, ACTB) are often unstable [26] [5] |

| Multiple Reference Genes | Uses a normalization factor from several stable genes (e.g., via GeNorm) | Most standard qPCR experiments; MIQE guideline recommendation [26] | More robust than single-gene; reduces impact of co-regulation | Requires upfront validation; consumes samples for extra assays [1] |

| Global Mean (GM) | Normalizes to the average Cq of all profiled genes | High-throughput studies profiling many genes (>55) [1] | Data-driven; no need for pre-selected reference genes | Requires a large number of genes; assumes most genes are not differentially expressed [1] |

| Quantile Normalization | Forces the distribution of expression values to be identical across all samples | High-throughput qPCR where samples are distributed across multiple plates [5] | Effectively removes plate-to-plate technical effects | Makes strong assumptions about the data distribution [5] |

| NORMA-Gene | Data-driven algorithm that estimates and reduces systematic bias per replicate | Studies with a limited number of target genes (as few as 5) [27] | Does not require reference genes; handles missing data well | Less known and adopted; performance depends on number of genes [27] |
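The global mean approach in Table 2 can be illustrated with a short sketch. It assumes that each sample's Cq values are centered on that sample's mean Cq across all profiled genes; the function name, the nested-dictionary layout, and the values are hypothetical, and a real dataset would need many more genes than shown here.

```python
def global_mean_normalize(cq_by_sample):
    """Subtract each sample's mean Cq (over all genes) from its Cq values.

    cq_by_sample: dict mapping sample name -> dict of gene -> Cq.
    Returns the same structure holding Cq minus the per-sample global mean.
    """
    normalized = {}
    for sample, gene_cqs in cq_by_sample.items():
        global_mean = sum(gene_cqs.values()) / len(gene_cqs)
        normalized[sample] = {gene: cq - global_mean
                              for gene, cq in gene_cqs.items()}
    return normalized


# Hypothetical data: sample_2 had less input, so every Cq is one cycle later
data = {
    "sample_1": {"gene_a": 20.0, "gene_b": 24.0, "gene_c": 28.0},
    "sample_2": {"gene_a": 21.0, "gene_b": 25.0, "gene_c": 29.0},
}
print(global_mean_normalize(data))
```

Because the one-cycle offset affects every gene in `sample_2` equally, both samples end up with identical normalized values, which is the kind of loading difference the method is meant to remove.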

Workflow: From Poor to Robust Normalization

The following diagram illustrates a robust workflow for avoiding the consequences of poor normalization, from experimental design to data analysis.

Table 3: Key Research Reagent Solutions and Computational Tools

| Item | Function / Purpose | Example(s) / Notes |

|---|---|---|

| Stable Reference Genes | Genes with invariant expression used as internal controls for normalization. | Genes like RPS5, RPL8, HMBS were identified as stable in canine GI tissue; stability must be validated for your system [1]. |

| qPCR Plates & Seals | Physical consumables for housing reactions. | Ensure plates are properly sealed to prevent evaporation, which causes inconsistent traces and poor replication [29]. |

| RNA Quality Assessment Tools | To verify RNA integrity before cDNA synthesis. | Spectrophotometer (for 260/280 ratio), agarose gel electrophoresis. Degraded RNA is a major source of irreproducible results [28]. |

| Stability Analysis Software | Algorithms to objectively rank candidate reference genes by stability. | GeNorm [1], NormFinder [1]. Integrated into software like QBase+ [26]. |

| Data-Driven Normalization Software | Tools that perform normalization without pre-defined reference genes. | qPCRNorm R package (Quantile Normalization) [5], NORMA-Gene Excel workbook [27], Auto-qPCR web app [30]. |

From Theory to Bench: Implementing Robust qPCR Normalization Strategies

What are housekeeping genes and why are they important for qPCR?

Housekeeping genes, also known as reference or endogenous controls, are constitutively expressed genes that regulate basic and ubiquitous cellular functions essential for cellular existence [31] [32]. In quantitative reverse transcription PCR (RT-qPCR), these genes serve as critical internal controls to normalize gene expression data, correcting for variations in sample quantity, RNA quality, and technical efficiency across samples [33]. This normalization is mandatory for accurate interpretation of results, as it ensures that observed expression changes reflect true biological differences rather than technical artifacts [31].

What are the key criteria for an ideal reference gene?

An ideal reference gene should demonstrate stable expression under all experimental conditions, cell types, developmental stages, and treatments being studied [32] [33]. While early definitions focused primarily on genes expressed in all tissues, current best practices require that potential reference genes also be expressed at a constant level across the specific conditions of the experiment [34]. The expression of a suitable reference gene cannot be influenced by the experimental conditions [35].

Validating Reference Genes: Experimental Protocols

Step-by-Step Validation Procedure

Before using reference genes in your study, they must be empirically validated. Follow this detailed protocol to test candidate gene stability:

1. Select Candidate Genes: Choose 3-10 potential reference genes from literature reviews or endogenous control panels. Include genes with different cellular functions to avoid co-regulation [36] [33]. The TaqMan endogenous control plate provides 32 stably expressed human genes for initial screening [33].

2. Prepare Representative Samples: Collect RNA samples across all experimental conditions, time points, and tissue types relevant to your study. Ensure consistent RNA purification methods across all samples [33].

3. Conduct Reverse Transcription: Convert equal amounts of RNA to cDNA using consistent methodology. In two-step RT-qPCR, use a mixture of random hexamers and oligo(dT) primers for comprehensive cDNA representation [37].

4. Perform qPCR Analysis: Amplify candidate genes across all sample types in at least triplicate reactions. Use the same volume of cDNA template for each reaction to maintain consistency [33].

5. Analyze Expression Stability: Calculate Ct values and assess variability using specialized algorithms. The most suitable candidate genes will show the least variation in Ct values (lowest standard deviation) across all tested conditions [33].
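As a first-pass screen for the stability analysis in the last step, candidates can be ranked by the standard deviation of their Ct values. The sketch below is only a simple illustration and not a substitute for geNorm or NormFinder; the function name, gene labels, and Ct values are hypothetical, and the default 0.5-cycle cutoff is the threshold mentioned in the troubleshooting section of this guide.

```python
from statistics import stdev


def rank_by_ct_sd(ct_by_gene, max_sd=0.5):
    """Rank candidate genes by the sample SD of their Ct values.

    Returns (gene, sd, passes) tuples, most stable first; `passes` is True
    when the SD does not exceed `max_sd` cycles.
    """
    ranked = sorted(((gene, stdev(cts)) for gene, cts in ct_by_gene.items()),
                    key=lambda item: item[1])
    return [(gene, sd, sd <= max_sd) for gene, sd in ranked]


# Hypothetical Ct values for three candidates across four samples
example = {
    "GENE_A": [20.1, 20.3, 19.9, 20.2],
    "GENE_B": [18.0, 19.2, 17.5, 18.9],
    "GENE_C": [25.0, 25.1, 25.0, 24.9],
}
for gene, sd, ok in rank_by_ct_sd(example):
    print(f"{gene}: SD={sd:.2f} cycles, within threshold={ok}")
```

Raw Ct spread also reflects differences in input amount between samples, so a gene flagged here may still perform well once pairwise methods are applied.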

Workflow Diagram for Reference Gene Validation

Troubleshooting Common Issues

How many reference genes should I use for accurate normalization?

The MIQE guidelines recommend using multiple reference genes rather than relying on a single gene [35]. The optimal number can be determined using the geNorm algorithm, which calculates a pairwise variation value (V) to determine whether adding another reference gene improves normalization stability [38]. Generally, including three validated reference genes provides significantly more reliable normalization than using one or two genes.

What should I do if my favorite housekeeping gene (GAPDH, ACTB) shows variable expression?

Many commonly used housekeeping genes like GAPDH and ACTB show significant variability across different tissue types and experimental conditions [31] [33]. If your initial testing reveals instability in these classic reference genes:

- Expand your candidate panel to include less traditional housekeeping genes such as TBP, RPLP2, YWHAZ, or CYC1 [31].

- Use statistical algorithms like geNorm or NormFinder to identify the most stable genes for your specific experimental system [38] [36].

- Consider alternative genes from different functional pathways that may be more stable in your particular experimental context.

How do I handle tissue-specific or condition-specific reference gene selection?

Gene expression stability is highly context-dependent, meaning a gene stable in one tissue or condition may be variable in another [31]. For example, wounded and unwounded tissues show contrasting housekeeping gene expression stability profiles [31]. To address this:

- Always validate reference genes specifically for your experimental conditions.

- Consult databases like NCBI Gene Expression Omnibus to check expression patterns of candidate genes in your tissue of interest [33].

- Consider that genes stably expressed in healthy tissues may show variability in disease states or after experimental manipulations.

What if my reference genes show high variability (Ct value differences >0.5)?

High variability in Ct values (standard deviation >0.5 cycles between samples) indicates an inappropriate reference gene [33]. Address this by:

- Verifying RNA quality and cDNA synthesis consistency across samples.

- Testing additional candidate genes to identify more stable alternatives.

- Using statistical methods to identify genes with the lowest M-values (geNorm) or highest equivalence (network-based methods) [31] [36].

Research Reagent Solutions

Table 1: Essential Reagents for Reference Gene Validation

| Reagent Type | Specific Examples | Function & Application Notes |

|---|---|---|

| Reverse Transcriptase Enzymes | Moloney Murine Leukemia Virus (M-MLV) RT, Avian Myeloblastosis Virus (AMV) RT | Converts RNA to cDNA; select enzymes with high thermal stability for RNA with secondary structure [37]. |

| qPCR Master Mixes | SYBR Green, TaqMan assays | Provides fluorescence detection for quantification; TaqMan assays offer higher specificity through dual-labeled probes [31]. |

| Reference Gene Assays | TaqMan Endogenous Control Panel (32 human genes) | Pre-optimized assays for screening potential reference genes [33]. |

| Primer Options | Oligo(dT), random hexamers, gene-specific primers | cDNA synthesis priming; mixture of random hexamers and oligo(dT) recommended for comprehensive coverage [37]. |

| RNA Stabilization Reagents | RNAlater | Preserves RNA integrity in tissues prior to extraction [31]. |

Statistical Analysis and Data Interpretation

Several statistical algorithms are available to assess reference gene stability:

- geNorm: Determines the most stable reference genes from a set of candidates and calculates the optimal number of genes needed for accurate normalization [38]. The algorithm computes an M-value representing expression stability, with lower M-values indicating greater stability [31].

- NormFinder: Another popular algorithm that ranks candidate genes by stability, though it may produce different rankings than geNorm [36].

- Network-based equivalence tests: A newer method that uses equivalence tests on expression ratios to select genes proven to be stable with controlled statistical error [36].

Decision Framework for Normalization Strategy

Advanced Applications and Considerations

How do I approach reference gene selection for specialized applications like cancer research or developmental studies?

In specialized contexts like cancer biology, where gene expression patterns are significantly altered, the use of multiple controls is essential [33]. Studies classifying tumors into subtypes based on gene expression patterns typically select 2-3 optimal control genes from a larger panel of 11 or more candidates [33]. Similarly, in developmental studies with multiple stages, validate reference genes specifically for each developmental time point.

What are the emerging trends and computational tools for reference gene selection?

Recent approaches include:

- Data-driven normalization: Methods like quantile normalization that directly correct for technical variation without presuming specific housekeeping genes, especially useful when standard reference genes are regulated by experimental conditions [5].

- Gini coefficient analysis: A statistical measure quantifying inequality in expression across samples, with lower values indicating more stable expression [32].

- Global mean normalization: Particularly useful for normalizing data from large, unbiased gene sets such as miRNA expression profiles [38].
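The Gini coefficient mentioned in the list above can be computed with the standard sorted-rank formula. This sketch assumes the input is a gene's expression across samples on a linear scale (for example relative quantities, not raw Cq values); the function name and the numbers are hypothetical.

```python
def gini(values):
    """Gini coefficient of non-negative values (0 means perfectly equal)."""
    xs = sorted(values)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total == 0:
        raise ValueError("need at least one value and a non-zero total")
    # Rank-weighted sum over the sorted values (ranks start at 1)
    weighted = sum((2 * rank - n - 1) * x
                   for rank, x in enumerate(xs, start=1))
    return weighted / (n * total)


# Hypothetical linear-scale expression of two genes across four samples
stable_gene = [100.0, 102.0, 98.0, 101.0]
variable_gene = [10.0, 200.0, 50.0, 400.0]
print(f"stable:   {gini(stable_gene):.3f}")
print(f"variable: {gini(variable_gene):.3f}")
```

The lower value for `stable_gene` reflects more even expression across samples, which is how the coefficient is used to shortlist reference gene candidates.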

Table 2: Common Reference Genes and Their Cellular Functions

| Gene Symbol | Gene Name | Primary Cellular Function | Stability Considerations |

|---|---|---|---|

| GAPDH | Glyceraldehyde-3-phosphate dehydrogenase | Glycolysis, dehydrogenase activity | Highly variable across tissues; requires validation [31] [33] |

| ACTB | Actin, beta | Cytoskeleton structure | Commonly used but often variable; shorter introns/exons [31] [34] |

| B2M | Beta-2-microglobulin | Histocompatibility complex antigen | Frequently used but stability varies by condition [31] |

| TBP | TATA box binding protein | Transcription initiation | Often shows high stability in validation studies [31] |

| RPLP2 | Ribosomal protein large P2 | Translation, ribosomal function | Good candidate with stable expression in many systems [31] |

| YWHAZ | Tyrosine 3-monooxygenase/tryptophan 5-monooxygenase activation protein zeta | Signal transduction | Validated as stable in multiple models [31] [34] |

| 18S | 18S ribosomal RNA | Ribosomal RNA component | Highly expressed; may require dilution in reactions [33] |

Why shift from a single reference gene to a geometric mean of multiple genes?

The use of a single housekeeping gene for normalization in quantitative PCR (qPCR) can lead to significant errors, as no single gene is expressed at a constant level across all experimental conditions [39]. It has been demonstrated that the conventional use of a single gene for normalization leads to relatively large errors in a significant proportion of samples tested [39]. This technical guide outlines a robust strategy to overcome this limitation by implementing a normalization factor based on the geometric mean of multiple, carefully validated reference genes, a method popularized by the geNorm algorithm [38]. This approach is a prerequisite for accurate RT-PCR expression profiling and is crucial for studying the biological relevance of small expression differences [39].

Core Concepts and Rationale

Why a Single Reference Gene Is Insufficient

Gene-expression analysis is a critical tool in biological research, but it is susceptible to technical variations introduced during sample processing, RNA extraction, and enzymatic efficiencies. Normalization controls for these variables. While internal control genes, or housekeeping genes, are widely used, their expression can vary considerably depending on the tissue or experimental treatment [39]. Relying on a single, unvalidated housekeeping gene is a common but risky practice. For example, a systematic evaluation of ten housekeeping genes across various human tissues showed that a single gene normalization strategy is prone to substantial inaccuracies [39].

The Solution: Geometric Mean of Multiple Genes

The geometric mean of multiple, stably expressed reference genes provides a more reliable "virtual reference gene." This method is more robust because it averages out minor, non-coordinated variations in the expression of individual reference genes [39] [38]. This strategy requires two key steps: first, identifying the most stably expressed control genes from a candidate set in your specific experimental panel, and second, determining the optimal number of genes required to calculate a robust normalization factor [38].

Step-by-Step Experimental Protocol

Step 1: Selecting Candidate Reference Genes

The first step is to carefully select a panel of candidate reference genes (typically between 6 and 12) for evaluation. Adhere to the following principles:

- Choose Genes from Different Functional Classes: This reduces the likelihood of co-regulation. For instance, do not select only ribosomal proteins or only cytoskeletal genes [39] [1]. The original geNorm study evaluated genes from various classes including cytoskeletal (ACTB), glycolytic (GAPD), and ribosomal (RPL13A) genes [39].

- Avoid Known Regulation: Based on literature or preliminary data, avoid genes that are likely to be regulated by your experimental conditions.

- Design High-Quality Assays: Ensure primers are specific, span an exon-exon junction to avoid genomic DNA amplification, and have high PCR efficiency [40] [41].

Step 2: qPCR Experiment and Data Collection

Run your qPCR experiment on all samples in your study, including all candidate reference genes and your target genes of interest.

- Replication: Perform at least technical triplicates to account for pipetting variability.

- Controls: Include no-template controls (NTCs) to check for contamination and no-reverse transcription controls to assess genomic DNA contamination [40].

- Data Quality Check: Inspect amplification curves, melting curves, and PCR efficiencies. Remove any data points from genes with poor amplification signals or non-specific products [1].

Step 3: Determine Gene Stability and Optimal Number

Use a dedicated algorithm like geNorm or NormFinder to rank your candidate genes based on their expression stability.

- geNorm Algorithm: This algorithm calculates a stability measure (M) for each gene; a lower M value indicates more stable expression. Genes are sequentially eliminated to identify the most stable pair [38].

- Optimal Number of Genes: geNorm also determines the optimal number of reference genes by calculating the pairwise variation (V) between sequential normalization factors. A common cut-off is V < 0.15, below which adding another reference gene is not required [38]. A 2025 study on canine tissues found that three reference genes (RPS5, RPL8, and HMBS) were suitably stable for their experimental setup [1].
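The stability measure M described in the first bullet can be sketched as the average, over all other candidates, of the standard deviation of log2 expression ratios across samples. This is a simplified illustration and not the geNorm software itself: it assumes 100% efficiency so that log2 ratios reduce to Cq differences, it uses the sample standard deviation, and it omits the stepwise elimination and the pairwise variation (V) calculation. Gene labels and Cq values are hypothetical.

```python
from statistics import stdev


def genorm_m_values(cq_by_gene):
    """Return a dict of gene -> M (lower M means more stable expression).

    cq_by_gene: dict mapping gene -> list of Cq values, with samples in the
    same order for every gene.
    """
    genes = list(cq_by_gene)
    m_values = {}
    for j in genes:
        pairwise_sds = []
        for k in genes:
            if k == j:
                continue
            # At 100% efficiency, log2(quantity_j / quantity_k) = Cq_k - Cq_j
            log_ratios = [cq_k - cq_j
                          for cq_j, cq_k in zip(cq_by_gene[j], cq_by_gene[k])]
            pairwise_sds.append(stdev(log_ratios))
        m_values[j] = sum(pairwise_sds) / len(pairwise_sds)
    return m_values


# Hypothetical Cq values for three candidates across four samples
candidates = {
    "REF_A": [20.0, 21.0, 20.0, 21.0],
    "REF_B": [22.0, 23.0, 22.0, 23.0],   # tracks REF_A exactly
    "REF_C": [25.0, 24.0, 27.0, 23.0],   # drifts independently
}
for gene, m in sorted(genorm_m_values(candidates).items(), key=lambda kv: kv[1]):
    print(f"{gene}: M={m:.3f}")
```

In this toy panel `REF_C` receives the highest M because its ratio to the other two genes changes from sample to sample, while `REF_A` and `REF_B` keep a constant ratio to each other.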

Step 4: Calculate the Normalization Factor

For each sample, calculate the normalization factor (NF) from the top \( n \) most stable reference genes, where \( n \) is the optimal number determined in the previous step. On the linear scale, this is the geometric mean of the reference genes' relative quantities:

\[ NF_{\text{linear}} = \left( \prod_{i=1}^{n} RQ_i \right)^{1/n} \]

Where \( RQ_i \) is the relative quantity of reference gene \( i \), and \( n \) is the number of reference genes used. Because Cq values are already on a logarithmic scale, in practice this is calculated as the arithmetic mean of the reference gene Cq values: \[ NF = \frac{\sum_{i=1}^{n} Cq_i}{n} \]

The normalized expression for a target gene in a given sample is then derived from this factor for subsequent statistical analysis, for example, using the \( \Delta Cq \) method (\( \Delta Cq = Cq_{\text{target}} - NF \)) [42].
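
As a small worked example of this step, the sketch below computes the Cq-scale normalization factor and the resulting ΔCq for one sample. The function names and Cq values are hypothetical, and the equivalence check at the end assumes 100% amplification efficiency (relative quantity proportional to 2 to the power of minus Cq).

```python
import math
from statistics import mean

def normalization_factor(ref_cqs):
    """Cq-scale NF: arithmetic mean of the reference-gene Cq values."""
    return mean(ref_cqs)

def delta_cq(target_cq, ref_cqs):
    """Normalized expression of the target on the Cq scale."""
    return target_cq - normalization_factor(ref_cqs)

# Hypothetical values for one sample: three reference genes and one target.
ref_cqs = [20.0, 22.0, 21.0]
target_cq = 26.0

nf = normalization_factor(ref_cqs)
dcq = delta_cq(target_cq, ref_cqs)

# The geometric mean of relative quantities (2 ** -Cq) equals 2 ** -NF,
# which is why averaging Cq values is the log-scale equivalent.
geo_mean_rq = math.prod(2 ** -c for c in ref_cqs) ** (1 / len(ref_cqs))
print(nf, dcq, math.isclose(geo_mean_rq, 2 ** -nf))
```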

Troubleshooting Guide

| Problem | Possible Cause | Solution |
|---|---|---|
| High variation in reference gene Cq values | RNA degradation, pipetting errors, or the gene is not stable in your experiment. | Check RNA quality (A260/280 ratio ~1.9-2.0) [28]; use a master mix for pipetting consistency; re-evaluate gene stability with geNorm [38]. |
| geNorm recommends a large number of genes | High heterogeneity in your sample panel (e.g., multiple tissues or severe pathologies). | Consider using the global mean normalization method if profiling many genes (>55) [1], or accept the recommended number for accuracy. |
| Inconsistent results after normalization | The selected reference genes are co-regulated or the minimum number required was not used. | Select genes from different functional classes to avoid co-regulation [39] [1]. Use the number of genes determined by the pairwise variation analysis in geNorm. |
| Poor PCR efficiency for a candidate gene | Faulty primer/probe design or reaction inhibitors. | Redesign the assay; check for a single peak in the melt curve; dilute template to reduce inhibitors [40] [41]. |

Frequently Asked Questions (FAQs)

Q: What is the minimum number of reference genes I should use? A: The MIQE guidelines recommend using more than one validated reference gene. The optimal number is data-driven and should be determined for each experiment using algorithms like geNorm. While two genes can be sufficient, three or more are often needed when comparing heterogeneous samples [1] [38].

Q: Can I use ribosomal RNA genes as reference genes? A: It is generally not advised. rRNA constitutes the majority of total RNA and is not representative of the mRNA fraction. Furthermore, its high abundance makes accurate baseline subtraction difficult, and it is absent from purified mRNA samples [39].

Q: My reference genes are stable within one tissue but not across different tissues. What should I do? A: This is a common challenge. You need to identify a set of genes that are universally stable across all tissue types in your study. Run the geNorm analysis on the entire, combined dataset to find the genes with the lowest overall M values [39] [1].

Q: Are there alternatives to the geometric mean method? A: Yes, other data-driven normalization methods exist, especially for high-throughput qPCR profiling dozens to hundreds of genes. These include quantile normalization and the global mean (GM) method, which uses the average Cq of all assayed genes as the normalizer [1] [5]. The GM method was shown to be the best-performing method in a 2025 study profiling 81 genes [1].
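
The global mean idea can be sketched in a few lines. This is an illustrative sketch rather than the procedure used in the cited study: the `max_cq` detection cut-off of 35 is an assumed, hypothetical threshold for excluding unreliable wells, and the gene names and values are made up.

```python
from statistics import mean

def global_mean_normalize(sample_cq, max_cq=35.0):
    """Return {gene: Cq - global mean Cq} for one sample.

    Genes with no Cq (None) or a Cq above max_cq are treated as not
    reliably detected and are left out of both the mean and the output.
    """
    detected = {g: c for g, c in sample_cq.items()
                if c is not None and c <= max_cq}
    global_mean = mean(detected.values())
    return {g: c - global_mean for g, c in detected.items()}

# Hypothetical Cq values for one sample.
sample = {"GENE_1": 22.0, "GENE_2": 26.0, "GENE_3": 30.0,
          "GENE_4": None, "GENE_5": 38.5}
normalized = global_mean_normalize(sample)
print(normalized)
```

Because the normalizer is the mean of the same values being normalized, the resulting ΔCq values for a sample always sum to zero, which is why the method only makes sense when a large, unbiased set of genes is profiled.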

Research Reagent Solutions

| Item | Function | Example |
|---|---|---|
| RNase Inhibitor | Protects RNA samples from degradation during handling and storage. | RNAsin Ribonuclease Inhibitor [40]. |
| DNase Treatment Kit | Removes genomic DNA contamination from RNA samples prior to reverse transcription. | Various commercial kits [28]. |
| High-Quality Master Mix | Provides consistent enzyme performance and resistance to PCR inhibitors found in complex samples. | GoTaq Endure qPCR Master Mix [40]. |
| Software Tools | For stability analysis and calculation of the normalization factor. | geNorm (in qbase+), NormFinder, RefFinder, Click-qPCR [42] [38]. |

Advanced Analysis and Visualization

Once a stable normalization factor is established, you can proceed with robust differential expression analysis. Tools like Click-qPCR can automate the subsequent \( \Delta Cq \) and \( \Delta \Delta Cq \) calculations, statistical testing, and generation of publication-quality graphs [42]. For maximum rigor and reproducibility, share your raw qPCR fluorescence data and analysis scripts, a practice encouraged by the MIQE and FAIR guidelines [9].
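
For readers who want to see what such tools compute, here is a minimal sketch of the ΔΔCq fold-change calculation. It is not Click-qPCR's code: it assumes 100% amplification efficiency (a doubling per cycle), compares group means only without any statistical testing, and uses hypothetical ΔCq values.

```python
from statistics import mean

def fold_change(dcq_treated, dcq_control):
    """Relative expression (treated vs control) as 2 ** -(ddCq)."""
    ddcq = mean(dcq_treated) - mean(dcq_control)
    return 2 ** (-ddcq)

# Hypothetical normalized values (dCq = Cq_target - NF) per biological replicate.
control_dcq = [5.0, 5.5, 4.5]
treated_dcq = [4.0, 4.25, 3.75]

fc = fold_change(treated_dcq, control_dcq)
print(fc)
```

A lower ΔCq in the treated group means the target crosses threshold earlier relative to the reference genes, so the fold change comes out above 1.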

Accurate normalization is a foundational step in reliable quantitative real-time PCR (qPCR) gene expression analysis. Technical variations introduced during sample collection, RNA extraction, reverse transcription, and PCR amplification can significantly obscure true biological differences [3] [1]. Normalization controls for this technical noise, ensuring that observed expression changes reflect experimental conditions rather than procedural artifacts. The use of internal reference genes (RGs), or housekeeping genes (HKGs), is the most common normalization strategy. These genes, involved in basic cellular maintenance, are presumed to be stably expressed across various tissues and conditions. However, a growing body of evidence confirms that no single reference gene is universally stable; their expression can vary considerably depending on the species, tissue, experimental treatment, and even pathological state [43] [1] [44]. The inappropriate selection of an unstable reference gene can lead to inaccurate data, misleading fold-change calculations, and incorrect biological conclusions [3] [45].

To address this challenge, algorithm-assisted selection methods have been developed to systematically identify the most stable reference genes for a specific experimental setup. This guide details how to use three cornerstone algorithms: geNorm, NormFinder, and BestKeeper. It includes troubleshooting guides and FAQs to help researchers, scientists, and drug development professionals avoid common pitfalls and apply these tools effectively in their experiments.

Understanding the Algorithms: Principles and Workflow

The three algorithms, geNorm, NormFinder, and BestKeeper, employ distinct mathematical approaches to rank candidate reference genes based on their expression stability. Using them in concert provides a robust, consensus-based selection.

Algorithm Comparison and Workflow

The table below summarizes the core principles, outputs, and key considerations for each algorithm.

Table 1: Comparison of geNorm, NormFinder, and BestKeeper Algorithms

| Algorithm | Core Principle | Primary Output | Key Strength | Key Consideration |
|---|---|---|---|---|
| geNorm [46] | Pairwise comparison of variation between all candidate genes. | M-value: Lower M-value indicates higher stability. Pairwise variation (V): Determines optimal number of RGs (V<0.15 is typical cutoff) [43]. | Intuitively identifies the best pair of genes; recommends the optimal number of RGs. | Tends to select co-regulated genes; cannot rank a single best gene [47]. |
| NormFinder [1] | Model-based approach estimating intra- and inter-group variation. | Stability value: Lower value indicates higher stability. | Accounts for sample subgroups within the experiment; less likely to select co-regulated genes. | Requires pre-defined group structure (e.g., control vs. treatment) for best results. |
| BestKeeper [46] | Correlates each candidate gene's Cq values to a synthetic index (geometric mean of all candidates). | Standard Deviation (SD) & Coefficient of Variation (CV): Lower values indicate higher stability. Correlation coefficient (r) with the BestKeeper Index. | Provides direct measures of expression variability (SD/CV) based on raw Cq values. | Relies on raw Cq values and assumes high PCR efficiency; can be sensitive to outliers [47]. |

To integrate the rankings from these algorithms, the tool RefFinder is often used. It employs a geometric mean to aggregate results from geNorm, NormFinder, BestKeeper, and the comparative ΔCt method, providing a comprehensive stability ranking [43] [48].
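
The aggregation idea can be sketched as a geometric mean of per-method ranks. This is a simplified illustration, not RefFinder's actual implementation (its weighting details are not given here); the function name, gene names, and example rankings are hypothetical.

```python
import math

def consensus_ranking(rankings):
    """Combine per-method rankings into one list, most stable first.

    rankings: {method: [genes ordered from most to least stable]}.
    Each gene's score is the geometric mean of its 1-based ranks.
    """
    genes = next(iter(rankings.values()))
    scores = {}
    for gene in genes:
        ranks = [order.index(gene) + 1 for order in rankings.values()]
        scores[gene] = math.prod(ranks) ** (1 / len(ranks))
    return sorted(scores.items(), key=lambda item: item[1])

# Hypothetical rankings from the four methods.
rankings = {
    "geNorm":     ["REF_A", "REF_B", "REF_C"],
    "NormFinder": ["REF_B", "REF_A", "REF_C"],
    "BestKeeper": ["REF_A", "REF_C", "REF_B"],
    "deltaCt":    ["REF_A", "REF_B", "REF_C"],
}
consensus = consensus_ranking(rankings)
for gene, score in consensus:
    print(gene, round(score, 3))
```

A geometric mean rewards genes that rank well everywhere: one poor rank in a single method pulls the score up less sharply than it would in a sum of ranks, but a gene that is never near the top cannot reach a low score.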

The following diagram illustrates the typical experimental workflow for algorithm-assisted reference gene selection.

The Scientist's Toolkit: Essential Research Reagents and Software

Successful implementation of algorithm-assisted selection requires careful planning and the right tools. The table below lists essential materials and software used in the featured experiments.

Table 2: Research Reagent Solutions for Reference Gene Validation

| Category / Item | Specific Examples from Literature | Function / Purpose |
|---|---|---|
| RNA Extraction | Trizol reagent [3] [45], RNeasy Plant Mini Kit [43] | Isolation of high-quality, intact total RNA from biological samples. |
| DNase Treatment | RQ1 RNase-Free DNase [3] | Removal of genomic DNA contamination from RNA samples. |
| cDNA Synthesis | Maxima H Minus Double-Stranded cDNA Synthesis Kit [43] | Reverse transcription of RNA into stable complementary DNA (cDNA). |
| qPCR Master Mix | Not specified in the cited literature, but essential. | Contains DNA polymerase, dNTPs, buffers, and dyes for efficient amplification. |
| Stability Algorithms | geNorm [46], NormFinder [1], BestKeeper [46] | Excel-based software to calculate gene expression stability. |
| Comprehensive Ranking Tool | RefFinder [43] [48] | Web tool that integrates results from multiple algorithms for a final ranking. |

Troubleshooting Guides and FAQs

Frequently Asked Questions (FAQs)

Q1: Why can't I just use a single, well-known reference gene like GAPDH or ACTB?

A: It is a common misconception that classic HKGs are universally stable. Numerous studies demonstrate that their expression can vary significantly with experimental conditions. For instance, in canine gastrointestinal tissue, ACTB was less stable than ribosomal proteins [1]. In Vigna mungo under stress, TUB was the least stable gene [43]. Using an unvalidated single gene risks introducing substantial bias into your data [3] [44].

Q2: What is the minimum number of candidate genes I should test? A: The MIQE guidelines recommend using at least two validated reference genes [3]. In practice, you should start with a panel of 3 to 10 candidate genes selected from the literature relevant to your species, tissue, and experimental treatment [43] [48]. Testing too few genes may not provide a stable normalization factor.

Q3: My results from geNorm, NormFinder, and BestKeeper are slightly different. Which one should I trust? A: Discrepancies are common and expected because the algorithms rest on different computational principles [44] [47]. The most robust approach is to use an integrated tool like RefFinder, which generates a comprehensive ranking from all three algorithms plus the comparative ΔCt method [43] [48]. Alternatively, you can manually compare the outputs and select genes that consistently rank in the top tier across all algorithms.

Q4: I am profiling a large number of genes. Are there alternative normalization methods? A: Yes. When profiling tens to hundreds of genes, the Global Mean (GM) method can be a powerful alternative. This method uses the geometric mean of the expression of all reliably detected genes as the normalization factor. One study in canine tissues found the GM method outperformed traditional reference gene normalization when more than 55 genes were profiled [1]. Another algorithm-based method, NORMA-Gene, which requires data from at least five genes and uses least-squares regression, has been shown to reduce variance effectively and requires fewer resources than traditional reference gene validation [3].

Troubleshooting Common Experimental Issues

Problem: High variation in Cq values for all candidate genes.

- Potential Cause 1: Poor RNA quality or inconsistent cDNA synthesis.

- Solution: Check RNA integrity (e.g., RIN > 8.0) using an instrument like a Bioanalyzer. Standardize RNA quantity and quality input for all reverse transcription reactions [3] [43].

- Potential Cause 2: Inefficient or variable PCR amplification.

- Solution: Check primer efficiencies; they should fall between 90% and 110% and be consistent across assays. Optimize qPCR conditions to ensure specific amplification with a single peak in the melt curve [3] [45].
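
One common way to check an assay's efficiency is a standard curve from a serial dilution, using the relation E = 10^(-1/slope) - 1, where the slope comes from regressing Cq on log10 of template quantity. The sketch below shows that calculation with a plain least-squares fit; the function name and dilution data are hypothetical, and real data would use replicate wells at each dilution.

```python
def pcr_efficiency(log10_quantities, cq_values):
    """Return (slope, efficiency in percent) from a standard curve.

    Fits Cq = intercept + slope * log10(quantity) by least squares,
    then converts the slope with E = 10 ** (-1 / slope) - 1.
    """
    n = len(cq_values)
    mean_x = sum(log10_quantities) / n
    mean_y = sum(cq_values) / n
    sxx = sum((x - mean_x) ** 2 for x in log10_quantities)
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(log10_quantities, cq_values))
    slope = sxy / sxx
    efficiency = (10 ** (-1 / slope) - 1) * 100
    return slope, efficiency

# Hypothetical 10-fold dilution series (log10 of relative input) and mean Cq values.
log10_quantities = [0.0, -1.0, -2.0, -3.0, -4.0]
cq_values = [15.0, 18.4, 21.7, 25.1, 28.4]

slope, efficiency = pcr_efficiency(log10_quantities, cq_values)
print(round(slope, 3), round(efficiency, 1))
```

A slope near -3.32 corresponds to about 100% efficiency (perfect doubling per cycle); slopes that are noticeably steeper or shallower push the assay outside the 90% to 110% window.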

Problem: geNorm recommends too many genes (high V-value).

- Potential Cause: No set of genes in your panel is sufficiently stable, or the experimental conditions profoundly affect cellular physiology.

- Solution: Re-evaluate your candidate gene panel. Include genes from different functional classes (e.g., cytoskeletal, ribosomal, metabolic) to avoid co-regulation. Consider using an alternative normalization strategy like NORMA-Gene or the Global Mean method if applicable [3] [1].

Problem: Discrepancy between algorithm rankings and RefFinder output.