Solving Batch Effects in Microarray Data: A Comprehensive Guide for Robust Genomic Analysis

Abstract

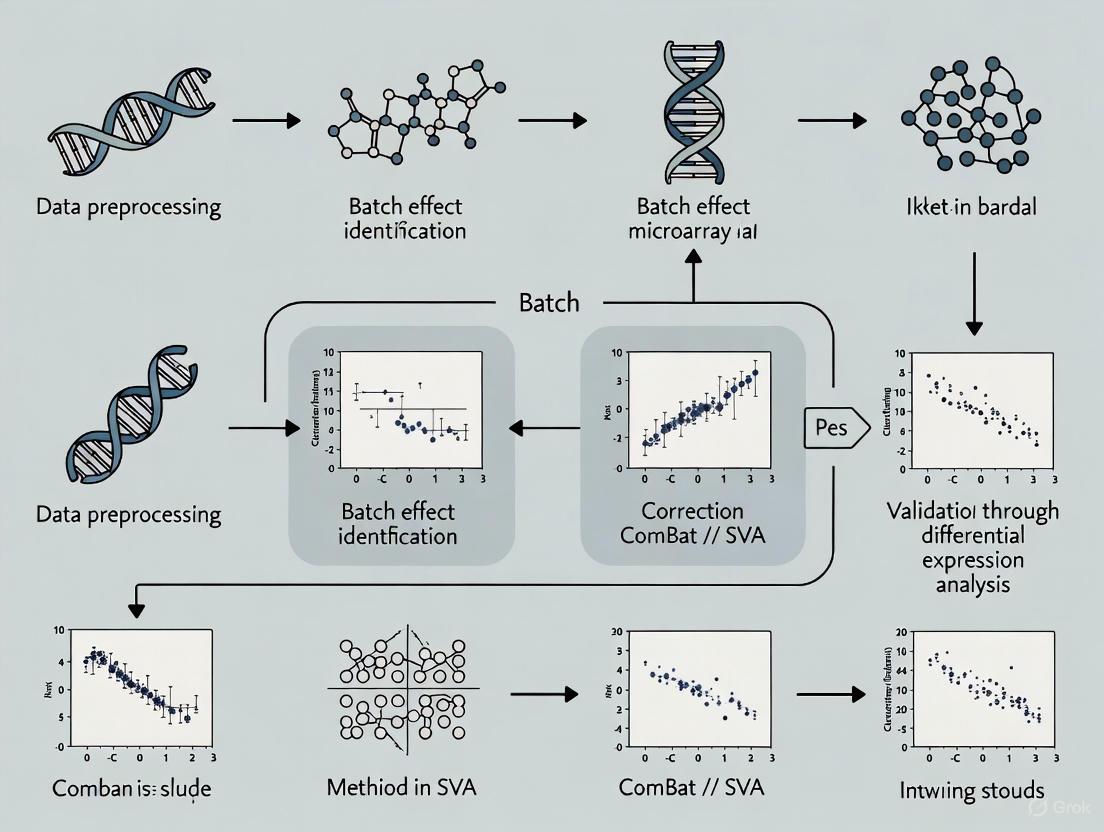

This article provides a detailed roadmap for researchers, scientists, and drug development professionals tackling the pervasive challenge of batch effects in microarray data. It covers the foundational understanding of how technical variations arise and their profound negative impact on data integrity and research reproducibility. The guide delves into established and novel correction methodologies, including ComBat, Limma, and ratio-based scaling, offering practical application advice. It further addresses critical troubleshooting and optimization strategies for complex real-world scenarios and provides a framework for the rigorous validation and comparative assessment of correction performance. By synthesizing insights from recent multiomics studies and benchmarking efforts, this resource aims to empower scientists to enhance the reliability and biological relevance of their microarray analyses.

Understanding Batch Effects: The Hidden Threat to Microarray Data Integrity

What Are Batch Effects? Defining Technical Variation in High-Throughput Experiments

What is a batch effect?

A batch effect is systematic variation in the data that is caused by non-biological (technical) factors in an experiment [1]. These technical variations become a major problem when they are correlated with an outcome of interest, potentially leading to incorrect biological conclusions [2].

In high-throughput experiments, batch effects represent sub-groups of measurements that have qualitatively different behavior across conditions that are unrelated to the biological or scientific variables in a study [2]. They are notoriously common technical variations in omics data and may result in misleading outcomes if uncorrected [3] [4].

What causes batch effects?

Batch effects can arise from multiple sources throughout the experimental process. The table below summarizes the most common causes:

Table: Common Sources of Batch Effects in High-Throughput Experiments

| Source Category | Specific Examples | Affected Stages |
|---|---|---|
| Personnel & Time [2] [1] | Different technicians, processing dates, time of day | Experiment execution |
| Reagents & Equipment [2] [1] | Different reagent lots, instrument calibration, laboratory conditions | Sample processing, data generation |
| Experimental Conditions [1] [5] | Atmospheric ozone levels, laboratory temperatures | Sample processing, data generation |
| Sample Handling [3] | Sample storage conditions, freeze-thaw cycles, centrifugation protocols | Sample preparation and storage |
| Study Design [3] | Non-randomized sample collection, confounded batch and biological groups | Study design |

Figure: Common causes of batch effects grouped by category.

How do I detect batch effects in my data?

Detecting batch effects is a crucial first step before attempting correction. The table below outlines common qualitative and quantitative assessment methods:

Table: Methods for Detecting Batch Effects

| Method Type | Specific Technique | How It Works | Interpretation |
|---|---|---|---|
| Visualization [5] [6] | Principal Component Analysis (PCA) | Projects data onto top principal components | Data separates by batch rather than biological source |
| Visualization [5] [6] | t-SNE/UMAP | Non-linear dimensionality reduction | Samples (or cells) from different batches cluster separately |
| Visualization [5] | Clustering & Heatmaps | Creates dendrograms of sample similarity | Samples cluster by batch instead of treatment |
| Quantitative Metrics [5] [6] | k-Nearest Neighbor Batch Effect Test (kBET) | Measures batch mixing at local level | Low rejection rates (equivalently, acceptance rates closer to 1) indicate better batch mixing |
| Quantitative Metrics [5] [6] | Adjusted Rand Index (ARI) | Compares cluster assignments with known biological labels | Lower values suggest stronger batch effects |
| Quantitative Metrics [5] [6] | Normalized Mutual Information (NMI) | Measures dependency between batch labels and cluster assignments | Lower values indicate less batch dependency |

Figure: Workflow for detecting batch effects using visualization and quantitative methods.

What methods can correct for batch effects?

Various statistical techniques have been developed to correct for batch effects. The choice of method often depends on your data type and study design:

General Purpose & Microarray Methods

- ComBat: Empirical Bayes method that adjusts for location and scale (additive and multiplicative) batch effects [1] [7]. It is one of the best-known batch effect correction algorithms (BECAs) and assumes batch effects have both additive and multiplicative loadings [8].

- Surrogate Variable Analysis (SVA): Identifies and estimates surrogate variables for unknown batch effects and other unwanted variation [9].

- Remove Unwanted Variation (RUV): Uses factor analysis on control genes (genes not differentially expressed) to estimate and remove batch effects [9].

- Ratio-Based Methods (e.g., Ratio-G): Scales absolute feature values of study samples relative to those of concurrently profiled reference materials [4]. This approach has been shown to be particularly effective when batch effects are completely confounded with biological factors [4].
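To make the location-and-scale idea concrete, here is a minimal sketch for a single gene. It is not ComBat: it omits the empirical Bayes shrinkage and the protection of biological covariates, and simply standardizes the gene within each batch and rescales it to a pooled spread and the overall mean. The function name `location_scale_adjust` and the toy values are illustrative assumptions.

```python
from math import sqrt
from statistics import mean, pstdev


def location_scale_adjust(values, batches):
    """Adjust one gene's values so every batch shares a common mean and spread.

    Simplified illustration only: no empirical Bayes shrinkage and no
    protection of biological covariates, both of which ComBat provides.
    """
    grand_mean = mean(values)
    by_batch = {}
    for value, label in zip(values, batches):
        by_batch.setdefault(label, []).append(value)

    # Batch-specific location (mean) and scale (population SD).
    stats = {b: (mean(v), pstdev(v)) for b, v in by_batch.items()}
    # Pooled within-batch SD serves as the common target scale.
    pooled_sd = sqrt(
        sum(len(v) * stats[b][1] ** 2 for b, v in by_batch.items()) / len(values)
    )

    adjusted = []
    for value, label in zip(values, batches):
        batch_mean, batch_sd = stats[label]
        centered = value - batch_mean            # remove additive effect
        if batch_sd > 0:
            centered = centered / batch_sd * pooled_sd  # remove multiplicative effect
        adjusted.append(centered + grand_mean)
    return adjusted


# Toy gene: batch B is shifted upward and has a larger spread than batch A.
gene_values = [1.0, 2.0, 3.0, 11.0, 13.0, 15.0]
batch_labels = ["A", "A", "A", "B", "B", "B"]
adjusted_values = location_scale_adjust(gene_values, batch_labels)
```

Because this sketch forces all batch means to coincide, it would also erase a real biological difference if biology and batch were confounded, which is one reason covariate-aware methods are preferred in practice.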

Specialized Methods for Specific Scenarios

- BRIDGE (Batch effect Reduction of mIcroarray data with Dependent samples usinG empirical Bayes): Specifically designed for longitudinal microarray studies with "bridge samples" - technical replicates profiled at multiple timepoints/batches [7].

- Longitudinal ComBat: Extension of ComBat that accounts for within-subject repeated measures by including subject-specific random effects [7].

- Harmony: Iterative clustering method that maximizes diversity within each cluster while calculating correction factors [5] [6]. Particularly effective for single-cell RNA-seq data [10].

- Mutual Nearest Neighbors (MNN): Corrects batch effects by identifying mutual nearest neighbors between datasets and using them as anchors for correction [1] [6].

Table: Batch Effect Correction Algorithms and Their Applications

| Algorithm | Primary Data Type | Key Feature | Considerations |
|---|---|---|---|
| ComBat [1] [7] | Microarray, bulk RNA-seq | Empirical Bayes adjustment | Assumes sample independence |
| SVA [9] | Microarray, bulk RNA-seq | Estimates surrogate variables | May remove biological signal |
| Ratio-G [4] | Multi-omics | Uses reference materials | Requires reference samples |
| BRIDGE [7] | Longitudinal microarray | Uses bridge samples | Specific to dependent samples |
| Harmony [5] [6] | Single-cell RNA-seq | Iterative clustering | Good for complex data |
| MNN Correct [1] [6] | Single-cell RNA-seq | Mutual nearest neighbors | Computationally intensive |

What are common troubleshooting issues with batch effect correction?

Overcorrection: Removing Biological Signal

One common issue is overcorrection, where biological signals are mistakenly removed along with technical variation. Signs of overcorrection include [5] [6]:

- Distinct cell types clustering together on dimensionality reduction plots

- Complete overlap of samples from very different biological conditions

- Cluster-specific markers comprising genes with widespread high expression (e.g., ribosomal genes)

- Absence of expected canonical markers for known cell types

- Scarcity of differential expression hits in pathways expected based on sample composition

Sample Imbalance

Sample imbalance - differences in cell type numbers, proportions, or cells per type across samples - significantly impacts integration results and biological interpretation [5]. This is particularly problematic in cancer biology with significant intra-tumoral and intra-patient discrepancies [5].

Confounded Study Designs

When biological factors and batch factors are completely confounded (e.g., all controls in one batch and all cases in another), most batch effect correction methods struggle to distinguish technical variations from true biological differences [4]. In such extreme scenarios, ratio-based methods using reference materials have shown promise [4].
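A minimal sketch of the ratio-based idea, assuming log2-scale data and at least one reference sample profiled in every batch: each study sample is expressed relative to the reference measured in the same batch. The function name `ratio_to_reference`, the dictionary layout, and the toy numbers are hypothetical and chosen only for illustration.

```python
def ratio_to_reference(samples):
    """Express study samples relative to the reference profiled in the same batch.

    samples: list of dicts with keys 'name', 'batch', 'is_reference', and
    'log2' (one log2 value per gene). Raises KeyError if a batch has no
    reference sample, since the ratio is undefined in that case.
    """
    ref_sum, ref_count = {}, {}
    for s in samples:
        if s["is_reference"]:
            acc = ref_sum.setdefault(s["batch"], [0.0] * len(s["log2"]))
            for g, value in enumerate(s["log2"]):
                acc[g] += value
            ref_count[s["batch"]] = ref_count.get(s["batch"], 0) + 1
    ref_mean = {b: [v / ref_count[b] for v in acc] for b, acc in ref_sum.items()}

    # On the log2 scale, a ratio is a subtraction.
    return {
        s["name"]: [v - r for v, r in zip(s["log2"], ref_mean[s["batch"]])]
        for s in samples
        if not s["is_reference"]
    }


# Fully confounded toy design: controls only in batch 1, cases only in batch 2.
# Batch 2 carries a technical offset of +2 on every gene.
toy_samples = [
    {"name": "ref_b1", "batch": 1, "is_reference": True, "log2": [5.0, 8.0]},
    {"name": "control", "batch": 1, "is_reference": False, "log2": [6.0, 7.0]},
    {"name": "ref_b2", "batch": 2, "is_reference": True, "log2": [7.0, 10.0]},
    {"name": "case", "batch": 2, "is_reference": False, "log2": [9.0, 9.0]},
]
ratios = ratio_to_reference(toy_samples)
case_vs_control = [c - k for c, k in zip(ratios["case"], ratios["control"])]
```

In this toy example the raw case-minus-control difference is 3 and 2 for the two genes, while the ratio-scaled difference is 1 and 0, because the shared +2 offset cancels against the reference.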

How is batch effect correction different for single-cell RNA-seq versus bulk RNA-seq?

While the purpose of batch correction (mitigating technical variations) remains the same, the algorithmic approaches differ significantly due to data characteristics [6]:

- Data Scale & Sparsity: Single-cell RNA-seq data is much larger (thousands of cells vs. tens of samples) and sparser (with high dropout rates) than bulk RNA-seq data [3] [6].

- Algorithm Suitability: Bulk RNA-seq methods may be insufficient for single-cell data due to size and sparsity, while single-cell methods may be excessive for bulk experimental designs [6].

- Specialized Tools: Single-cell specific methods like Harmony, Seurat, and MNN Correct are designed to handle the unique challenges of single-cell data [5] [6].

Essential Research Reagent Solutions for Batch Effect Management

Table: Key Research Materials for Batch Effect Mitigation

| Material/Reagent | Function in Batch Effect Management | Application Context |
|---|---|---|
| Reference Materials [4] | Provides stable benchmark for ratio-based correction | Multi-batch studies, quality control |
| Standardized Reagents [2] | Minimizes lot-to-lot variability | All experimental phases |
| Control Samples [9] | Enables monitoring of technical variation | Quality assurance across batches |
| "Bridge Samples" [7] | Technical replicates profiled across batches | Longitudinal studies, method validation |
| Multiplexed Reference Standards [4] | Multi-omics quality control and integration | Large-scale multi-omics studies |

How do I choose the right batch effect correction method?

Selecting an appropriate batch effect correction algorithm (BECA) requires considering multiple factors:

- Assess Your Entire Workflow: Choose BECAs compatible with your complete data processing workflow, not just what is popular [8]. The compatibility of a BECA with other workflow steps (normalization, missing value imputation, etc.) is crucial.

- Evaluate with Downstream Sensitivity Analysis: Test how different BECAs affect your biological findings by comparing differentially expressed features before and after correction [8].

- Don't Rely Solely on Visualization: While PCA and t-SNE plots are useful, they can be misleading for subtle batch effects. Combine visualization with quantitative metrics [8].

- Consider Using Evaluation Frameworks: Tools like SelectBCM can help rank BECAs based on multiple evaluation metrics, though you should examine raw evaluation measurements rather than just ranks [8].

Figure: Decision process for selecting an appropriate batch effect correction method.

Batch effects are technical variations introduced during the processing of microarray experiments that are unrelated to the biological factors of interest. These non-biological variations can originate at multiple stages of the workflow—from initial sample preparation through final data acquisition—and can profoundly impact data quality and interpretation. When uncorrected, batch effects can mask true biological signals, reduce statistical power, or even lead to incorrect conclusions that compromise research validity and reproducibility [11]. This technical support guide identifies common sources of batch effects in microarray workflows and provides practical troubleshooting solutions to help researchers maintain data integrity.

Frequently Asked Questions (FAQs) on Microarray Batch Effects

1. What are the most critical steps in the microarray workflow where batch effects originate?

Batch effects can emerge at virtually every stage of microarray processing. Key vulnerability points include:

- Sample preparation and storage: Variations in sample collection, protocol procedures, and reagent lots [11]

- Hybridization process: Evaporation due to improper sealing, incorrect temperature, or insufficient humidifying buffer [12]

- Data acquisition: Variations in scanner performance, environmental conditions, and reagent flow patterns [12] [13]

2. How can I determine if my microarray data is affected by batch effects?

Technical issues that suggest batch effects include:

- High background signal indicating impurities binding nonspecifically to the array [13]

- Unusual reagent flow patterns in BeadChip images [12]

- Inconsistent results from different probe sets for the same gene [13]

- Poor clustering of quality control replicates in principal component analysis

3. What are the consequences of not addressing batch effects in microarray data?

Uncorrected batch effects can:

- Mask genuine biological signals and reduce statistical power in differential expression analyses

- Generate false positive results when batch conditions correlate with biological outcomes [11]

- Compromise research reproducibility, potentially leading to retracted findings and economic losses [11]

- Invalidate cross-study comparisons and meta-analyses

Troubleshooting Guide: Common Microarray Batch Effects and Solutions

Table: Common Batch Effect Issues and Resolutions in Microarray Workflows

| Symptoms | Probable Causes | Recommended Solutions | Stage |
|---|---|---|---|
| Insufficient reagent coverage on BeadChip | Reagents stuck to tube lids/sides; Incorrect pipettor settings | Centrifuge tubes after thawing; Verify pipettor calibration and settings [12] | Sample Preparation |
| High background signal | Impurities (cell debris, salts) binding nonspecifically to array | Improve sample purification; Ensure proper washing steps [13] | Data Acquisition |
| Unusual reagent flow patterns | Dirty glass backplates; Debris trapped between components | Thoroughly clean glass backplates before and after each use [12] | Data Acquisition |
| Wet BeadChips after vacuum desiccation | Insufficient drying time; Old or contaminated reagents | Extend drying time; Replace with fresh ethanol and XC4 solutions [12] | Processing |
| Uncoated areas on BeadChips after XC4 coating | Air bubbles preventing solution contact | Briefly reposition chips in solution with back-and-forth movement [12] | Processing |
| Evaporation during hybridization | Loose chamber clamps; Brittle gaskets; Incorrect oven temperature | Ensure tight seals; Verify gasket condition; Monitor oven temperature [12] [13] | Hybridization |
| Inconsistent results for same gene across probe sets | Alternative splicing; Sequence variations; Probe homology issues | Verify transcript variants; Check for sample sequence variations [13] | Data Analysis |

Microarray Workflow with Batch Effect Risk Points

Figure: Microarray workflow with the critical control points where batch effects commonly originate.

Experimental Protocols for Batch Effect Evaluation

Establishing Quality Control Standards (QCS)

Implementing systematic quality controls enables objective monitoring of technical variations throughout the microarray workflow:

Tissue-Mimicking QCS Preparation:

- Create a controlled quality control standard using propranolol in a gelatin matrix (concentrations of 10, 20, 40, 80 mg/mL)

- Prepare QCS solution by mixing propranolol or propranolol-d7 (internal standard) with gelatin solution in a 1:20 ratio

- Spot QCS solution alongside experimental samples on multiple slides (recommended: 18 spots per slide) [14]

- Use these standards to evaluate variation caused by sample preparation and instrument performance

Batch Effect Assessment Protocol:

- Process QCS slides alongside experimental samples across multiple batches

- Measure technical variation using the QCS signals across batches

- Apply computational batch effect correction methods (ComBat, limma) to QCS data

- Evaluate correction efficiency by measuring reduction in QCS variation and improved sample clustering in multivariate principal component analysis [14]

Table: Key Research Reagent Solutions for Batch Effect Mitigation

| Item | Function | Considerations |
|---|---|---|
| Tissue-mimicking QCS (propranolol in gelatin) | Monitors technical variation across full workflow; Evaluates ion suppression effects [14] | Prepare fresh; Standardize spotting volume and pattern |
| Internal standards (e.g., propranolol-d7) | Controls for technical variation in sample processing; Normalization reference [14] | Use stable isotope-labeled versions of analytes |
| Fresh ethanol solutions | Prevents absorption of atmospheric water during processing | Replace regularly; Verify concentration |
| Fresh XC4 solution | Ensures consistent BeadChip coating | Reuse only up to six times during a two-week period [12] |
| Calibrated pipettors | Ensures accurate reagent dispensing | Perform yearly gravimetric calibration using water [12] |
| Humidifying buffer (PB2) | Prevents evaporation during hybridization | Verify correct volume in chamber wells [12] |

Batch effects remain a significant challenge in microarray workflows that can compromise data quality and research validity. By implementing systematic quality control measures, adhering to standardized protocols, and applying appropriate computational corrections when necessary, researchers can significantly reduce technical variations. The troubleshooting guidelines and experimental protocols provided here offer practical approaches to identify, mitigate, and correct batch effects, ultimately enhancing the reliability and reproducibility of microarray data in biomedical research.

FAQs: Understanding the Batch Effect Problem

What are batch effects and how do they arise?

Batch effects are systematic technical variations introduced into data due to differences in experimental conditions rather than biological factors. These unwanted variations can arise from multiple sources, including:

- Different processing times, instruments, or machines

- Different laboratory personnel or sites

- Different reagent lots or analysis pipelines

- Sample storage conditions and freeze-thaw cycles

In essence, any technical variable that creates consistent patterns of variation separate from your biological question of interest can constitute a batch effect [3] [15].

Why are batch effects particularly problematic in microarray research?

Batch effects introduce non-biological variability that can confound your results in several ways:

- They can mask genuine biological signals, reducing statistical power

- They can create false associations that lead to incorrect conclusions

- In worst-case scenarios, they can completely drive observed differences between groups when batch is confounded with experimental conditions [8] [3]

The high-dimensional nature of microarray data makes it especially vulnerable, as these technical variations can systematically affect hundreds or thousands of data points simultaneously [16].

What is the difference between balanced and confounded study designs?

- Balanced Design: Your experimental groups are equally distributed across batches. For example, both case and control samples are processed on each chip and across different processing days. This allows technical variability to be "averaged out" during analysis [15] [17].

- Confounded Design: Your experimental groups are completely or partially separated by batch. For example, all control samples were processed in January while all case samples were processed in February. In this scenario, biological and technical effects become indistinguishable, making valid conclusions nearly impossible [15] [16].
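The distinction between balanced and confounded designs can be checked directly from the sample sheet before any analysis. The sketch below cross-tabulates batch against biological group and labels the design; the function name `classify_design` and the three category labels are assumptions made for this illustration, not terms from a specific package.

```python
from collections import Counter
from fractions import Fraction


def classify_design(batches, groups):
    """Label a design from parallel lists of batch and group labels."""
    table = Counter(zip(batches, groups))      # batch x group cross-tabulation
    batch_levels = sorted(set(batches))
    group_levels = sorted(set(groups))

    # Fully confounded: no batch contains more than one biological group.
    groups_per_batch = [
        sum(1 for g in group_levels if table[(b, g)] > 0) for b in batch_levels
    ]
    if all(n == 1 for n in groups_per_batch):
        return "fully confounded"

    # Balanced: group proportions are identical in every batch.
    proportions = set()
    for b in batch_levels:
        batch_size = sum(table[(b, g)] for g in group_levels)
        proportions.add(
            tuple(Fraction(table[(b, g)], batch_size) for g in group_levels)
        )
    return "balanced" if len(proportions) == 1 else "partially confounded"


january_february = classify_design(
    ["Jan", "Jan", "Feb", "Feb"], ["control", "control", "case", "case"]
)
mixed_chips = classify_design(
    ["chip1", "chip1", "chip2", "chip2"], ["control", "case", "control", "case"]
)
uneven = classify_design(
    ["A", "A", "A", "B", "B", "B"],
    ["control", "control", "case", "control", "case", "case"],
)
```

The first call reproduces the January/February example above and is reported as fully confounded, whereas the second, where each chip holds both groups, is reported as balanced.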

Can batch effects really lead to paper retractions?

Yes. The literature contains documented cases where batch effects directly contributed to irreproducible findings and subsequent retractions. In one prominent example, a study developing a fluorescent serotonin biosensor had to be retracted when the sensitivity was found to be highly dependent on reagent batch (specifically, the batch of fetal bovine serum), making key results unreproducible [3]. Another retracted study on personalized ovarian cancer treatment falsely identified gene expression signatures due to uncorrected batch effects [8].

Troubleshooting Guides

Problem: Unexpectedly Large Number of Significant Findings After Batch Correction

Symptoms:

- Thousands of significant differentially expressed genes appear only AFTER batch correction

- These genes show no or minimal significance before correction

- Biological interpretation of results seems implausible

Diagnosis: This pattern suggests possible over-correction or false signal introduction by your batch correction method, particularly when using empirical Bayes methods like ComBat with unbalanced designs [16] [18].

Solutions:

- Verify study design balance: Check if your biological groups are confounded with batch factors

- Apply more conservative correction: Consider using simpler methods like including batch as a covariate in linear models

- Validate with positive controls: Use genes known to be associated with your biological question as validation

- Try multiple correction approaches: Compare results across different algorithms to identify consistent findings

Prevention: Always randomize sample processing to ensure balanced distribution of experimental groups across batches. If complete randomization isn't possible, ensure each batch contains at least some samples from each biological group [16].

Problem: Persistent Batch Clustering After Correction

Symptoms:

- Samples continue to cluster by batch in PCA plots after correction

- Biological signal remains weak compared to technical variation

- Batch effects appear stronger than biological effects

Diagnosis: Your batch correction method may be insufficient for the magnitude of technical variation in your data, or you may have unidentified batch sources [8].

Solutions:

- Identify hidden batch factors: Use PCA to identify unknown sources of technical variation

- Increase correction stringency: Adjust parameters or try more aggressive algorithms

- Apply multiple correction steps: Address different batch sources sequentially

- Consider data removal: In extreme cases, exclude batches with irreconcilable technical issues

Problem: Loss of Biological Signal After Correction

Symptoms:

- Known biological differences disappear after batch correction

- Samples become overly homogenized

- Biological groups that previously separated well now mix completely

Diagnosis: Your correction method may be over-removing biological variation, especially when batch and biological factors are partially confounded [8].

Solutions:

- Use biological controls: Include samples with known differences to monitor signal preservation

- Try less aggressive methods: Switch to Harmony, limma, or other more conservative approaches

- Adjust correction parameters: Reduce strength of correction where possible

- Apply supervised methods: Use methods that specifically protect biological variables of interest

Quantitative Impact Assessment

Table 1: Documented Cases of Batch Effect Consequences in Biomedical Research

| Study Type | Impact of Batch Effects | Consequences | Citation |
|---|---|---|---|
| Ovarian cancer biomarker study | False gene expression signatures identified | Study retraction | [8] |
| Clinical trial risk classification | Incorrect classification of 162 patients, 28 received wrong chemotherapy | Clinical harm potential | [3] |
| DNA methylation pilot study (n=30) | 9,612-19,214 significant differentially methylated sites appearing only after ComBat correction | False discoveries | [16] |
| Cross-species gene expression analysis | Apparent species differences greater than tissue differences; reversed after correction | Misinterpretation of fundamental biological relationships | [3] |
| Serotonin biosensor development | Sensitivity dependent on reagent batch | Key results unreproducible, paper retracted | [3] |

Table 2: Performance of Batch Effect Correction Methods Under Different Conditions

| Correction Method | Balanced Design Performance | Confounded Design Performance | Key Limitations | Citation |
|---|---|---|---|---|
| ComBat | Excellent | Risk of false positives | Can introduce false signals in unbalanced designs | [16] [18] |
| limma removeBatchEffect() | Good | Moderate | Less aggressive, may leave residual batch effects | [8] [19] |
| BRIDGE (for longitudinal data) | Excellent | Good | Requires bridging samples | [7] |
| SVA/RUV | Good for unknown batch effects | Variable performance | May capture biological signal if confounded | [8] |
| Harmony | Good | Good | Developed for single-cell, adapting to microarrays | [20] |

Experimental Protocols

Protocol 1: Systematic Batch Effect Assessment in Microarray Data

Purpose: Identify and quantify batch effects in your microarray dataset before proceeding with differential expression analysis.

Materials:

- Normalized microarray expression data

- Experimental metadata (batch information, biological groups)

- R statistical environment with following packages: limma, sva, pcaMethods

Procedure:

- Prepare data matrix: Start with your normalized expression values (log2-transformed recommended)

- Perform Principal Component Analysis (PCA) on the centered expression matrix

- Test association between PCs and experimental variables. For each principal component (PC1-PC10), test association with:

  - Batch factors (chip, row, processing date)

  - Biological variables (disease status, treatment group)

  - Sample characteristics (age, sex, BMI if relevant)

  Use ANOVA for categorical variables and correlation tests for continuous variables

- Visualize associations: Create boxplots of PC scores grouped by batch and by biological group

- Calculate batch effect magnitude:

  - Compute variance explained by batch factors in each PC

  - Use PVCA (Principal Variance Component Analysis) to partition variance sources

Interpretation:

- Strong association of early PCs (PC1-PC3) with batch factors indicates significant batch effects

- Biological variables should explain more variance than technical factors in well-controlled experiments

- If batch explains >25% of variance in early PCs, correction is necessary [16]
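The PCA and association steps of this protocol can be sketched as follows. This is an illustrative NumPy version rather than the R workflow listed under Materials: it computes sample scores by singular value decomposition and, for each leading PC, the fraction of score variance explained by batch (the between-batch sum of squares over the total, i.e. the R² of a one-way ANOVA). The function name `pc_batch_association` and the simulated data are assumptions.

```python
import numpy as np


def pc_batch_association(expr, batch, n_pcs=3):
    """Return (variance ratio per PC, R^2 of each PC's scores explained by batch).

    expr  : samples x genes array of normalized log2 expression values
    batch : one batch label per sample
    """
    x = np.asarray(expr, dtype=float)
    x = x - x.mean(axis=0)                       # center each gene
    u, s, _ = np.linalg.svd(x, full_matrices=False)
    scores = u * s                               # sample scores on each PC
    variance_ratio = s**2 / np.sum(s**2)

    labels = np.asarray(batch)
    r_squared = []
    for k in range(n_pcs):
        pc = scores[:, k]
        total = np.sum((pc - pc.mean()) ** 2)
        between = sum(
            np.sum(labels == b) * (pc[labels == b].mean() - pc.mean()) ** 2
            for b in np.unique(labels)
        )
        r_squared.append(between / total if total > 0 else 0.0)
    return variance_ratio[:n_pcs], np.array(r_squared)


# Simulated data: 6 samples x 50 genes, batch B shifted upward on every gene.
rng = np.random.default_rng(0)
expr = rng.normal(0.0, 0.1, size=(6, 50))
expr[3:] += 3.0
batch = ["A", "A", "A", "B", "B", "B"]

variance_ratio, batch_r2 = pc_batch_association(expr, batch)
```

Running the same function with biological group labels in place of `batch` gives the comparison the interpretation notes call for: in a well-controlled experiment the early PCs should associate more strongly with biology than with batch.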

Protocol 2: Comparative Batch Effect Correction Evaluation

Purpose: Systematically evaluate multiple batch correction methods to select the most appropriate approach for your specific dataset.

Materials:

- Normalized microarray data (before batch correction)

- Batch information (categorical)

- Biological group information

- R environment with: sva, limma, pamr

Procedure:

- Split data by batch: If you have multiple batches, analyze each batch separately for differential expression to establish batch-specific results [8]

- Create reference sets:

  - Identify differentially expressed features in each batch (FDR < 0.05)

  - Create a union set (all unique significant features across batches)

  - Create an intersect set (features significant in all batches)

- Apply multiple correction methods:

  - Process your data with 3-4 different BECAs (e.g., ComBat, limma, SVA, Harmony)

  - Use default parameters initially

- Evaluate performance:

  - For each corrected dataset, perform differential expression analysis

  - Calculate recall: proportion of union reference set detected

  - Calculate false positive rate: proportion of significant features not in union set

  - Check preservation of intersect set: these should remain significant

Interpretation:

- The optimal method maximizes recall while minimizing false positives

- Methods that miss many features from the intersect set may be over-correcting

- Consistent performance across multiple evaluation metrics indicates robustness [8]
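The evaluation step of this protocol reduces to set arithmetic once the lists of significant features are available. A minimal sketch, assuming the per-batch and post-correction hit lists have already been produced by a differential expression analysis; the function name `evaluate_correction` and the feature names are hypothetical.

```python
def evaluate_correction(per_batch_hits, corrected_hits):
    """Score one corrected dataset against the per-batch reference sets.

    per_batch_hits : non-empty list of collections, significant features per batch
    corrected_hits : significant features found after batch correction
    """
    batch_sets = [set(hits) for hits in per_batch_hits]
    union_set = set().union(*batch_sets)            # significant in any batch
    intersect_set = set.intersection(*batch_sets)   # significant in every batch
    corrected = set(corrected_hits)

    recall = len(corrected & union_set) / len(union_set) if union_set else 0.0
    # As defined in the protocol: share of corrected hits absent from the union set.
    false_positive_rate = (
        len(corrected - union_set) / len(corrected) if corrected else 0.0
    )
    intersect_preserved = (
        len(corrected & intersect_set) / len(intersect_set) if intersect_set else 1.0
    )
    return {
        "recall": recall,
        "false_positive_rate": false_positive_rate,
        "intersect_preserved": intersect_preserved,
    }


per_batch = [{"geneA", "geneB", "geneC"}, {"geneB", "geneC", "geneD"}]
after_correction = {"geneB", "geneC", "geneD", "geneX"}
metrics = evaluate_correction(per_batch, after_correction)
```

Calling the function once per correction method and comparing the resulting dictionaries side by side gives the comparison described under Interpretation.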

Visual Guide to Batch Effect Concepts

Figure: Impact of Batch Effect Management on Research Outcomes

Figure: Balanced vs Confounded Study Design Impact

Table 3: Key Computational Tools for Batch Effect Management

| Tool Name | Primary Function | Best Use Scenario | Implementation | Citation |
|---|---|---|---|---|
| ComBat | Empirical Bayes batch correction | When batch factors are known and design is balanced | R/sva package | |
| limma removeBatchEffect() | Linear model-based correction | Mild batch effects with balanced design | R/limma package | |
| BRIDGE | Longitudinal data correction | Time series studies with bridging samples | Custom R implementation | [7] |
| SelectBCM | Automated method selection | Initial screening of multiple BECAs | Available as described in literature | [8] |
| PCA | Batch effect visualization | Initial diagnostic assessment | Multiple R packages | |

Table 4: Experimental Quality Control Materials

| Material Type | Purpose | Implementation Example | Citation |
|---|---|---|---|
| Reference Samples | Monitor technical variation | Include same reference sample in each batch | |
| Bridging Samples | Connect batches technically | Split same biological sample across batches | [7] |
| Positive Controls | Verify biological signal preservation | Samples with known large biological differences | |
| Randomized Processing Order | Prevent confounding | Randomize sample processing across experimental groups | |
| Balanced Design | Enable statistical separation | Ensure each batch contains all experimental groups | |
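The last two rows of the table, randomized processing order and balanced design, can be combined in a simple allocation routine: shuffle samples within each biological group, then deal them round-robin across batches. This is a sketch under the assumption that group membership is known before processing; the function name `assign_batches` and the sample identifiers are illustrative.

```python
import random
from collections import Counter


def assign_batches(samples_by_group, n_batches, seed=0):
    """Randomly assign samples to batches while spreading each group evenly.

    samples_by_group : dict mapping group name -> list of sample IDs
    Returns a dict mapping sample ID -> batch index (0-based).
    """
    rng = random.Random(seed)       # fixed seed keeps the allocation reproducible
    assignment = {}
    offset = 0
    for _, samples in sorted(samples_by_group.items()):
        shuffled = list(samples)
        rng.shuffle(shuffled)       # random order within the group
        for i, sample_id in enumerate(shuffled):
            # Round-robin dealing; the running offset avoids always starting at batch 0.
            assignment[sample_id] = (i + offset) % n_batches
        offset += len(shuffled)
    return assignment


study = {
    "case": [f"case_{i}" for i in range(6)],
    "control": [f"control_{i}" for i in range(6)],
}
allocation = assign_batches(study, n_batches=3)
per_batch_counts = Counter(
    (batch_index, sample_id.split("_")[0])
    for sample_id, batch_index in allocation.items()
)
```

With six cases and six controls over three batches, every batch receives two samples from each group, so batch and group are not confounded by construction.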

Advanced Troubleshooting: Special Scenarios

Longitudinal Studies with Time-Batch Confounding

Special Challenge: When batch is completely confounded with time points (all time point 1 samples in batch 1, all time point 2 in batch 2), traditional correction methods fail.

Solution: Apply specialized methods like BRIDGE that use "bridging samples" - technical replicates measured across multiple batches/timepoints to inform the correction [7].

Protocol:

- Include a subset of samples measured at multiple timepoints in both batches

- Use these bridging samples to estimate true biological temporal changes

- Apply empirical Bayes framework that incorporates bridging sample information

- Correct all samples based on the bridging sample-informed model
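The core intuition behind using bridging samples can be shown with a deliberately simplified sketch: because a bridging sample is the same material measured in both batches, the difference between its two measurements is purely technical. The code below estimates a per-gene offset from those differences and removes it from batch 2. It is not the BRIDGE algorithm, which uses an empirical Bayes framework; the function names and toy values are hypothetical.

```python
def bridge_offsets(bridge_batch1, bridge_batch2):
    """Per-gene technical offset of batch 2 relative to batch 1.

    Both arguments are lists of per-sample gene vectors (log2 scale), with the
    same bridging samples in the same order.
    """
    n_samples = len(bridge_batch1)
    n_genes = len(bridge_batch1[0])
    return [
        sum(b2[g] - b1[g] for b1, b2 in zip(bridge_batch1, bridge_batch2)) / n_samples
        for g in range(n_genes)
    ]


def apply_offsets(batch2_samples, offsets):
    """Subtract the estimated technical offset from every batch 2 sample."""
    return [[v - o for v, o in zip(sample, offsets)] for sample in batch2_samples]


# Two bridging samples, two genes, measured once in each batch.
bridges_in_batch1 = [[5.0, 8.0], [6.0, 9.0]]
bridges_in_batch2 = [[7.0, 7.5], [8.0, 8.5]]
offsets = bridge_offsets(bridges_in_batch1, bridges_in_batch2)

# A time point 2 sample that exists only in batch 2.
corrected_batch2 = apply_offsets([[9.0, 6.5]], offsets)
```

After the offsets are removed, any remaining difference between time points is attributable to biology rather than to the batch, which is what makes a time-batch confounded design analyzable at all.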

Multiple Interacting Batch Effects

Challenge: Most real-world datasets have multiple, interacting batch effects (e.g., chip, row, processing date, technician).

Solution Approach:

- Identify all potential batch sources through systematic PCA association testing

- Determine correction order: Address larger sources first, or correct simultaneously if using multivariate methods

- Validate after each correction: Check if one batch correction introduces artifacts for other batch types

- Use conservative approaches: When multiple strong batch effects exist, consider including them as covariates in your final model rather than aggressive pre-correction

When to Abandon a Dataset

In some cases, batch effects may be irreconcilable. Consider excluding batches or entire datasets when:

- Batch effects are larger than the strongest biological effects in your system

- The experimental design is perfectly confounded with no bridging samples

- Multiple correction approaches yield completely different results with no consensus

- Positive controls (known biological differences) disappear after any reasonable correction attempt

Remember that publishing results from irredeemably confounded studies risks contributing to the reproducibility crisis, so ethical considerations may warrant dataset exclusion rather than forced analysis [3] [16].

What are the primary visual tools for diagnosing batch effects?

The most common visual tool for an initial assessment of batch effects is Principal Component Analysis (PCA). When you plot your data, typically using the first two principal components, a clear separation of data points by batch (rather than by biological condition) is a strong visual indicator that batch effects are present [21] [22].

For a more advanced visualization, Uniform Manifold Approximation and Projection (UMAP) is widely used. Like PCA, a UMAP plot that shows clusters corresponding to their source batch suggests a significant batch effect. The open-source platform Batch Effect Explorer (BEEx), for instance, incorporates UMAP specifically for this purpose, allowing researchers to qualitatively assess batch effects in medical image data [23].

The following diagram illustrates a typical diagnostic workflow that integrates these visual tools:

Which statistical metrics quantify the severity of batch effects?

While visual tools are intuitive, statistical metrics are essential for quantifying the severity of batch effects. The following table summarizes key diagnostic metrics:

| Metric Name | What It Measures | Interpretation | Common Tools |

|---|---|---|---|

| Silhouette Score [22] | How similar a sample is to its own batch vs. other batches (on a scale from -1 to 1). | Scores near 1 indicate strong batch clustering (strong batch effect). Scores near 0 or negative indicate no batch structure. | BEEx [23], Custom scripts |

| k-Nearest Neighbor Batch Effect Test (kBET) [24] [22] | Whether the batch composition of each sample's k nearest neighbors matches the overall batch composition of the dataset. | A high rejection rate indicates that batches are not well-mixed (strong batch effect). A low rate suggests successful correction. | HarmonizR [25], FedscGen [24] |

| Average Silhouette Width (ASW) [25] | Similar to the Silhouette Score, but often reported specifically for batch (ASWbatch) and biological label (ASWlabel). | A high ASWbatch indicates a strong batch effect. A high ASWlabel after correction indicates biological signal was preserved. | BERT [25] |

| Principal Variance Component Analysis (PVCA) [23] | The proportion of total variance in the data explained by batch versus biological factors. | A high proportion of variance attributed to "batch" indicates a significant batch effect. | BEEx [23] |

| Batch Effect Score (BES) [23] | A composite score designed to quantify the extent of batch effects from multiple analysis perspectives. | A higher score indicates a more pronounced batch effect. | BEEx [23] |
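
As an illustration of the first metric in the table, the sketch below computes an average silhouette width with batch IDs used as the cluster labels. It is a plain NumPy implementation written for this guide, not the code used by BEEx or BERT. The function name `batch_silhouette` and the synthetic data are assumptions.

```python
import numpy as np

def batch_silhouette(X, batch):
    """Average silhouette width using batch IDs as cluster labels (X: samples x features)."""
    X = np.asarray(X, dtype=float)
    batch = np.asarray(batch)
    # Pairwise Euclidean distances between samples
    diff = X[:, None, :] - X[None, :, :]
    D = np.sqrt((diff ** 2).sum(axis=2))
    widths = []
    for i in range(len(X)):
        same = batch == batch[i]
        same[i] = False                       # exclude the sample itself
        if not same.any():
            widths.append(0.0)                # singleton batch: silhouette taken as 0
            continue
        a = D[i, same].mean()                 # mean distance to own batch
        b = min(D[i, batch == other].mean()   # mean distance to the nearest other batch
                for other in np.unique(batch) if other != batch[i])
        widths.append((b - a) / max(a, b))
    return float(np.mean(widths))

rng = np.random.default_rng(1)
batch = np.repeat(["A", "B"], 15)
X_mixed = rng.normal(size=(30, 50))           # no batch structure
X_shifted = X_mixed.copy()
X_shifted[batch == "B"] += 3.0                # simulated additive batch effect

print(round(batch_silhouette(X_mixed, batch), 3))
print(round(batch_silhouette(X_shifted, batch), 3))
```

The well-mixed data should give a value close to zero and the shifted data a clearly positive value, matching the interpretation column above.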

I've applied a correction method. How do I check if it worked?

Evaluating the success of a batch-effect correction procedure involves using the same diagnostic tools on the corrected data and comparing the results to the original, uncorrected data.

- Visual Inspection: Regenerate PCA and UMAP plots using the corrected data. Successful correction is indicated by the intermingling of data points from different batches, with clusters now ideally forming based on biological conditions rather than technical origins [24] [22].

- Statistical Validation: Recalculate the quantitative metrics.

  - The kBET acceptance rate should increase (equivalently, the rejection rate should fall), indicating better mixing [24].

  - The Silhouette Score with respect to batch should decrease significantly, moving closer to zero [22].

  - The ASW Batch score should decrease, while the ASW Label score (measuring biological cluster cohesion) should be maintained or improved, showing that biological signal was preserved while technical noise was removed [25].
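
A before/after comparison of this kind can be sketched with a simple neighbor-mixing proxy. Note the assumptions: `neighbour_mixing` is a simplified stand-in for kBET (it reports a fraction and performs no statistical test), and `centre_by_batch` is a plain per-batch mean centering used only to have something to evaluate, not ComBat. Both names are hypothetical.

```python
import numpy as np

def neighbour_mixing(X, batch, k=5):
    """Mean fraction of each sample's k nearest neighbours that come from another batch."""
    X = np.asarray(X, dtype=float)
    batch = np.asarray(batch)
    diff = X[:, None, :] - X[None, :, :]
    D = np.sqrt((diff ** 2).sum(axis=2))
    np.fill_diagonal(D, np.inf)                    # a sample is not its own neighbour
    nn = np.argsort(D, axis=1)[:, :k]              # indices of the k nearest neighbours
    return float((batch[nn] != batch[:, None]).mean())

def centre_by_batch(X, batch):
    """Stand-in correction: subtract each batch's per-gene mean (not ComBat)."""
    X = np.asarray(X, dtype=float).copy()
    batch = np.asarray(batch)
    for b in np.unique(batch):
        X[batch == b] -= X[batch == b].mean(axis=0)
    return X

rng = np.random.default_rng(2)
batch = np.repeat([0, 1], 20)
X_raw = rng.normal(size=(40, 100))                 # samples x genes
X_raw[batch == 1] += 1.5                           # simulated additive batch effect
X_corr = centre_by_batch(X_raw, batch)

before = neighbour_mixing(X_raw, batch)
after = neighbour_mixing(X_corr, batch)
print(f"other-batch neighbour fraction: before={before:.2f}, after={after:.2f}")
```

A fraction near zero means samples sit almost entirely among their own batch. After an effective correction it should rise substantially.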

What is a recommended experimental protocol for a comprehensive batch effect diagnosis?

Below is a detailed workflow you can follow to systematically diagnose batch effects in your microarray dataset, incorporating tools like BEEx [23] and BERT [25].

Objective: To qualitatively and quantitatively determine the presence and magnitude of batch effects in a multi-batch microarray dataset.

Materials and Inputs:

- Data Matrix: A normalized, preprocessed gene expression matrix (features x samples).

- Batch Metadata: A file specifying the batch ID for each sample.

- Biological Covariates: A file specifying biological conditions (e.g., disease state, treatment) for each sample.

- Software/Tools: R/Python environment with packages like `sva` (for ComBat), `limma`, `umap`, and access to specialized tools like BEEx [23] or BERT [25].

Procedure:

Data Preprocessing: Ensure your data is normalized and filtered. Log-transformation is often applied to microarray data to stabilize variance.

Qualitative (Visual) Assessment:

- Generate PCA Plot: Perform PCA on your expression matrix. Color the data points by `batch` and, separately, by `biological condition`. A clear separation by batch in the PCA plot is an initial red flag.

- Generate UMAP Plot: Create a UMAP projection of your data. Again, color points by batch and biological condition. Look for clustering driven by batch identity.

- Generate Heatmap & Dendrogram: Perform hierarchical clustering on the samples and visualize it with a heatmap. A dendrogram that groups samples primarily by batch indicates a strong batch effect.

Quantitative (Statistical) Assessment:

- Calculate Silhouette Scores: Compute the silhouette score where the "cluster" label is the batch ID. A high average score confirms the visual observation from the plots.

- Perform kBET: Run the k-nearest neighbor batch effect test on your data. A high rejection rate across many samples quantifies the failure of batches to mix.

- Run PVCA: Use Principal Variance Component Analysis to partition the total variance in your dataset. Note the percentage of variance attributed to "batch" versus your biological factors of interest.

Interpretation and Reporting:

- Correlate the findings from all visual and statistical methods.

- A consensus across multiple diagnostics (e.g., clear batch clustering in PCA/UMAP, high silhouette score, high kBET rejection rate, and high batch variance in PVCA) provides robust evidence for the presence of batch effects.

- This comprehensive diagnosis forms the basis for deciding whether and how to proceed with batch-effect correction.

The Scientist's Toolkit: Essential Research Reagents & Solutions

The following table lists key computational tools and statistical solutions used in the field of batch effect diagnostics and correction.

| Tool/Solution Name | Type/Function | Key Application Context |

|---|---|---|

| BEEx (Batch Effect Explorer) [23] | Open-source platform for qualitative & quantitative batch effect detection. | Medical images (Pathology & Radiology); provides visualization and a Batch Effect Score (BES). |

| ComBat [26] [21] [22] | Empirical Bayes framework for location/scale adjustment. | Microarray, Proteomics, Radiomics; robust for small sample sizes. |

| Limma (`removeBatchEffect`) [25] [22] | Linear models to remove batch effects as a covariate. | General omics data (Transcriptomics, Proteomics), Radiomics. |

| BERT [25] | High-performance, tree-based framework for data integration. | Large-scale, incomplete omic data (Proteomics, Transcriptomics, Metabolomics). |

| HarmonizR [25] | Imputation-free framework using matrix dissection. | Integration of arbitrarily incomplete omic profiles. |

| kBET [24] [22] | Statistical test to quantify batch mixing. | Evaluation of batch effect correction efficacy in single-cell RNA-seq and other data. |

| Silhouette Width (ASW) [25] | Metric for cluster cohesion and separation. | Global evaluation of data integration quality, applicable to any clustered data. |

| RECODE/iRECODE [27] | High-dimensional statistics-based tool for technical noise reduction. | Single-cell omics data (scRNA-seq, scHi-C, spatial transcriptomics). |

Batch Effect Correction Tools: From ComBat to Cutting-Edge Ratio Methods

Frequently Asked Questions (FAQs)

1. What is the fundamental difference between ComBat and Limma's removeBatchEffect?

ComBat uses an empirical Bayes framework to actively adjust your data by shrinking batch effect estimates toward a common mean, making it particularly powerful for small sample sizes. In contrast, Limma's removeBatchEffect function performs a linear model adjustment, simply subtracting the estimated batch effect from the data without any shrinkage. Crucially, removeBatchEffect is intended for visualization purposes and not for data that will be used in downstream differential expression analysis; for formal analysis, the batch factor should be included directly in the design matrix of your statistical model [28] [29].
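
To make the "linear model adjustment without shrinkage" idea concrete, here is a minimal NumPy sketch. It is an illustration of the principle only, not limma's implementation. The function name `remove_batch_linear` and the simulated data are assumptions. As noted above, output of this kind is meant for visualization, while formal differential expression analysis should carry batch in the design matrix.

```python
import numpy as np

def remove_batch_linear(Y, batch, condition):
    """Subtract least-squares batch estimates from Y (genes x samples), keeping condition effects.

    Simplified illustration of the linear-model idea; not limma's implementation.
    """
    Y = np.asarray(Y, dtype=float)
    batch = np.asarray(batch)
    condition = np.asarray(condition)
    n = Y.shape[1]
    # Design: intercept + condition indicators (first level is the reference)
    cond_levels = np.unique(condition)[1:]
    design = np.column_stack([np.ones(n)] + [(condition == c).astype(float) for c in cond_levels])
    # Batch indicators with sum-to-zero coding so the overall mean is preserved
    levels = np.unique(batch)
    B = np.column_stack([(batch == b).astype(float) - (batch == levels[-1]).astype(float)
                         for b in levels[:-1]])
    coef, *_ = np.linalg.lstsq(np.column_stack([design, B]), Y.T, rcond=None)
    batch_coef = coef[design.shape[1]:]           # rows belonging to the batch columns
    return Y - (B @ batch_coef).T

rng = np.random.default_rng(3)
batch = np.array([0, 0, 0, 0, 1, 1, 1, 1])
condition = np.array([0, 1, 0, 1, 0, 1, 0, 1])   # balanced across batches
Y = rng.normal(size=(100, 8))
Y[:, batch == 1] += 2.0                           # simulated batch shift
Y[:10, condition == 1] += 3.0                     # simulated true effect in the first 10 genes

Y_adj = remove_batch_linear(Y, batch, condition)
gap_before = Y[:, batch == 1].mean() - Y[:, batch == 0].mean()
gap_after = Y_adj[:, batch == 1].mean() - Y_adj[:, batch == 0].mean()
effect_after = Y_adj[:10, condition == 1].mean() - Y_adj[:10, condition == 0].mean()
print(round(gap_before, 2), round(gap_after, 2), round(effect_after, 2))
```

Each gene's batch coefficient is estimated and subtracted independently. ComBat would instead shrink these per-gene estimates toward a common value before removing them.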

2. When should I use SVA instead of ComBat or Limma?

You should use Surrogate Variable Analysis (SVA) when the sources of batch effects are unknown or unmeasured [8] [30]. While ComBat and removeBatchEffect require you to specify the batch factor, SVA is designed to identify and adjust for these hidden sources of variation by estimating surrogate variables from the data itself. These surrogate variables can then be included as covariates in your downstream models [30].

3. I'm getting a "non-conformable arguments" error when running ComBat. What should I do?

This error often relates to issues with the data matrix or model structure [31]. A common solution is to filter out low-varying or zero-variance genes from your dataset before running ComBat. You should also check that your batch vector does not contain any NA values and that it has the same number of samples as your data matrix [31].
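
The checks described above can be run before calling ComBat. The sketch below is a hypothetical helper (`prefilter_for_combat` is not part of `sva`) that assumes a genes x samples matrix. It raises on a mismatched or incomplete batch vector and drops genes that are constant within any batch or contain missing values.

```python
import numpy as np

def prefilter_for_combat(data, batch, tol=1e-12):
    """Validate inputs and drop genes with (near-)zero variance in any batch.

    data: genes x samples array; batch: one label per sample (None or NaN = missing).
    Returns the filtered matrix and the boolean mask of genes kept.
    """
    data = np.asarray(data, dtype=float)
    batch = np.asarray(batch, dtype=object)
    if data.shape[1] != len(batch):
        raise ValueError(f"{data.shape[1]} samples in matrix but {len(batch)} batch labels")
    missing = [b is None or (isinstance(b, float) and np.isnan(b)) for b in batch]
    if any(missing):
        raise ValueError("batch vector contains missing values")
    keep = ~np.isnan(data).any(axis=1)              # drop genes with missing values
    for b in set(batch):
        cols = batch == b
        keep &= data[:, cols].var(axis=1) > tol     # drop genes constant within this batch
    return data[keep], keep

data = np.array([
    [1.0, 2.0, 3.0, 4.0],   # varies in both batches: kept
    [5.0, 5.0, 5.0, 5.0],   # constant everywhere: dropped
    [1.0, 1.0, 2.0, 3.0],   # constant within batch A only: dropped
])
batch = ["A", "A", "B", "B"]
filtered, kept = prefilter_for_combat(data, batch)
print(kept)
```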

4. Can these batch correction methods be used for data types other than gene expression?

Yes, the core principles of these algorithms are applied across various data types. For instance, they have been successfully used in radiogenomic studies of lung cancer patients [22]. Furthermore, specialized variants like ComBat-met have been developed for DNA methylation data (β-values), which use a beta regression framework to account for the unique distributional properties of such data [32].

5. What is the most important consideration for a successful batch correction?

A balanced study design is paramount [15]. If your biological conditions of interest are perfectly confounded with batch (e.g., all controls are in batch 1 and all treatments are in batch 2), no statistical method can reliably disentangle the technical artifacts from the true biological signal. Whenever possible, ensure that each batch contains a mixture of all biological conditions you plan to study [15] [33].
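
A quick way to check a design for this problem is to cross-tabulate batch against condition before any correction. The helper below is a hypothetical sketch using only the standard library. It flags the design as perfectly confounded when every batch contains a single condition, since the condition is then fully determined by the batch.

```python
from collections import Counter

def confounding_report(batch, condition):
    """Cross-tabulate batch against condition and flag perfect confounding."""
    table = Counter(zip(batch, condition))
    batches = sorted(set(batch))
    conditions = sorted(set(condition))
    crosstab = {b: {c: table[(b, c)] for c in conditions} for b in batches}
    # Batches holding only one condition carry no within-batch contrast
    single_condition = [b for b in batches
                        if sum(n > 0 for n in crosstab[b].values()) == 1]
    return {
        "crosstab": crosstab,
        "single_condition_batches": single_condition,
        # Condition is a function of batch: the two effects cannot be separated
        "perfectly_confounded": len(conditions) > 1 and len(single_condition) == len(batches),
    }

confounded = confounding_report([1, 1, 2, 2], ["control", "control", "treated", "treated"])
balanced = confounding_report([1, 1, 2, 2], ["control", "treated", "control", "treated"])
print(confounded["perfectly_confounded"], balanced["perfectly_confounded"])
```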

Troubleshooting Guides

Problem 1: Poor Batch Correction Performance

Symptoms: After correction, Principal Component Analysis (PCA) plots still show strong clustering by batch, or downstream analysis (e.g., differential expression) yields unexpected or biologically implausible results.

| Potential Cause | Recommended Action |

|---|---|

| Severe design imbalance | Review your experimental design. If the batch is perfectly confounded with a condition, correction is not advised. Re-assess the feasibility of the analysis [15]. |

| Incorrect algorithm selection | Re-evaluate your choice. For known batches, use ComBat or include batch in the model. For unknown batches, use SVA or RUV [8] [30]. |

| Incompatible data preprocessing | Ensure the batch correction method is compatible with your entire workflow (e.g., normalization, imputation). The choice of preceding steps can significantly impact the performance of the batch effect correction algorithm (BECA) [8]. |

| Over-correction | Aggressive correction can remove biological signal. Use sensitivity analysis to check if key biological findings are consistent across different BECAs [8]. |

Problem 2: Errors During ComBat Execution

Symptoms: Errors such as "non-conformable arguments" or "missing value where TRUE/FALSE needed" [31].

| Potential Cause | Recommended Action |

|---|---|

| Genes with zero variance | Filter your data matrix to remove genes with zero variance across all samples. This is a very common fix [31]. |

| Zero variance within a batch | Remove genes that have zero variance in any of the batches, not just across all samples [31]. |

| `NA` values in the data or batch vector | Check for and remove any `NA` values in your batch vector or data matrix [31]. |

Performance and Methodology Comparison

The table below summarizes the core methodologies and applications of ComBat, Limma, and SVA.

| Algorithm | Core Methodology | Primary Use Case | Key Assumptions | Data Types |

|---|---|---|---|---|

| ComBat | Empirical Bayes framework that shrinks batch effect estimates towards a common mean [8]. | Correcting for known batch effects, especially with small sample sizes [29]. | Batch effects fit a predefined model (e.g., additive, multiplicative) [8]. | Microarray data, RNA-seq count data (ComBat-seq) [32]. |

| Limma's `removeBatchEffect` | Fits a linear model and subtracts the estimated batch effect [22]. | Preparing data for visualization (e.g., PCA plots). Not for downstream DE analysis [28]. | Batch effects are linear and additive [22]. | Normalized, continuous data (e.g., log-intensities from microarrays or log-CPMs from RNA-seq). |

| SVA | Identifies latent factors ("surrogate variables") that capture unknown sources of variation [30]. | Correcting for unknown batch effects or unmeasured confounders [8]. | Surrogate variables represent technical noise and can be estimated from the data [30]. | Can be applied after appropriate normalization for various data types. |

Experimental Protocols

Detailed Methodology for Benchmarking Batch Effect Correction Algorithms

This protocol outlines a sensitivity analysis to evaluate the performance of different BECAs, ensuring robust and reproducible results [8].

1. Experimental Setup and Data Splitting

- Begin with a dataset comprising multiple batches.

- Split the data into its individual batches for a ground-truth comparison (e.g., Batch A, Batch B, etc.) [8].

2. Establishing Reference Sets via Differential Expression Analysis

- Perform a differential expression (DE) analysis separately on each individual batch.

- From these individual analyses, create two crucial reference sets:

- The Union Set: Combine all unique differentially expressed (DE) features found in any of the individual batches.

- The Intersect Set: Identify the DE features that are consistently found in every single batch. This set acts as a high-confidence biological signal [8].

3. Applying and Evaluating Batch Correction Methods

- Apply a variety of BECAs (e.g., ComBat, Limma, SVA) to the original, full dataset.

- Conduct a DE analysis on each of the batch-corrected datasets.

- For each BECA, calculate performance metrics by comparing its DE results to the reference sets:

- Recall: The proportion of features in the Union Set that were successfully rediscovered after correction.

- False Positive Rate: The proportion of features called significant after correction that were not present in the Union Set.

- A reliable BECA will show high recall and a low false positive rate. Additionally, it should retain most features from the Intersect Set; missing these suggests the correction may be too aggressive and is removing real biological signal [8].
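
The reference sets and metrics in this protocol reduce to set operations. The sketch below follows the definitions given above, including the protocol's own definition of the false positive rate. The function name `benchmark_beca` and the toy feature IDs are hypothetical.

```python
def benchmark_beca(per_batch_de, corrected_de):
    """Score one BECA's DE calls against union/intersect reference sets.

    per_batch_de: list of sets of DE features, one per individual batch (at least one).
    corrected_de: set of DE features called on the batch-corrected data.
    """
    union_set = set().union(*per_batch_de)
    intersect_set = set.intersection(*map(set, per_batch_de))
    recovered = corrected_de & union_set
    return {
        "recall": len(recovered) / len(union_set) if union_set else float("nan"),
        # As defined above: share of post-correction calls absent from the union set
        "false_positive_rate": (len(corrected_de - union_set) / len(corrected_de)
                                if corrected_de else float("nan")),
        "intersect_retained": (len(corrected_de & intersect_set) / len(intersect_set)
                               if intersect_set else float("nan")),
    }

per_batch = [{"g1", "g2", "g3"}, {"g2", "g3", "g4"}]   # toy per-batch DE results
corrected = {"g2", "g3", "g4", "g9"}                   # toy DE result after correction
result = benchmark_beca(per_batch, corrected)
print(result)
```

Running this once per BECA and comparing the three numbers side by side gives the sensitivity analysis described in the protocol.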

Workflow for a Standard Limma-voom Analysis with Batch Covariates

For RNA-seq count data, this is a statistically sound workflow that incorporates batch information directly into the model for differential expression [28] [29].

- Create a DGEList object using your raw count data and sample metadata.

- Normalize the data using the Trimmed Mean of M-values (TMM) method with `calcNormFactors`.

- Apply the `voom` transformation, which converts counts to log2-counts per million (log-CPM) and calculates observation-level weights for linear modeling. Plot the `voom` object to check data quality.

- Create a design matrix that includes both your biological condition of interest and the known batch factor(s).

- Fit a linear model using the `lmFit` function with the `voom`-transformed data and your design matrix.

- Apply empirical Bayes moderation to the standard errors using the `eBayes` function.

- Extract the results of your differential expression analysis using the `topTable` function.

The Scientist's Toolkit: Essential Research Reagents & Materials

| Item | Function/Brief Explanation |

|---|---|

| High-Dimensional Data | The primary input (e.g., from microarrays, RNA-seq, or methylation arrays) requiring correction for technical noise [8]. |

| Batch Metadata | A critical file (often a CSV) that maps each sample to its processing batch. Essential for ComBat and Limma [29]. |

| R Statistical Software | The standard environment for running these analyses. Key packages include sva (for ComBat and SVA), limma (for removeBatchEffect and linear modeling), and edgeR or DESeq2 for normalization and DE analysis [29]. |

| Negative Control Genes | A set of genes known not to be affected by the biological conditions of interest. Required for methods like RUV but can be challenging to define. In practice, non-differentially expressed genes from a preliminary analysis are sometimes used as "pseudo-controls" [30]. |

| Reference Batch | A specific batch chosen as the baseline to which all other batches are adjusted. This is an option in tools like ComBat and can be useful when one batch is considered a "gold standard" [22]. |

| Visualization Tools (PCA) | Essential for diagnosing batch effects before and after correction. PCA plots provide an intuitive visual assessment of whether sample clustering is driven by batch or biology [8] [33]. |

BECA Selection and Evaluation Workflow

The following diagram outlines a logical workflow for selecting, applying, and evaluating a batch effect correction strategy, incorporating key considerations from the FAQs and troubleshooting guides.

What is the fundamental principle behind Empirical Bayes frameworks like ComBat?

Empirical Bayes frameworks, such as ComBat, address the pervasive issue of batch effects in high-throughput genomic datasets. Batch effects are technical artifacts that introduce non-biological variability into data due to processing samples in different batches, at different times, or by different personnel. If left uncorrected, this noise can reduce statistical power, dilute true biological signals, and potentially lead to spurious or misleading scientific conclusions [7] [34] [35]. ComBat uses an Empirical Bayes approach to robustly estimate and adjust for these batch-specific artifacts, allowing for the more valid integration of datasets from multiple studies or processing batches [34].

How does the Empirical Bayes method in ComBat differ from a standard linear model?

While a standard linear model might directly estimate and subtract batch effects, this can be unstable for studies with small sample sizes per batch. ComBat's key innovation is its use of shrinkage estimation. It assumes that batch effect parameters (e.g., the amount by which a batch shifts a gene's expression) across all genes in a dataset follow a common prior distribution (e.g., a normal distribution for additive effects). ComBat then uses the data itself to empirically estimate the parameters of this prior distribution and "shrinks" the batch effect estimates for individual genes toward the common mean. This pooling of information across genes makes the estimates more robust and prevents overfitting, especially for genes with high variance or batches with small sample sizes [7] [34].
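
The shrinkage step can be illustrated for the additive effect alone. The sketch below assumes a normal prior whose mean and variance are estimated from the gene-wise estimates themselves, and it applies the resulting normal-normal posterior mean. It is a simplified illustration, not the full ComBat procedure, which also handles the multiplicative effect. The function name `shrink_additive_effects` and the simulation settings are assumptions.

```python
import numpy as np

def shrink_additive_effects(gamma_hat, sigma2, n_batch):
    """Shrink per-gene additive batch estimates toward their common mean.

    gamma_hat: per-gene estimates for one batch; sigma2: sampling variance of a
    single observation (scalar or per gene); n_batch: number of samples in the batch.
    Uses the normal-normal posterior mean with a prior estimated from the data.
    """
    gamma_hat = np.asarray(gamma_hat, dtype=float)
    sigma2 = np.asarray(sigma2, dtype=float)
    gamma_bar = gamma_hat.mean()                  # prior mean (method of moments)
    tau2 = gamma_hat.var(ddof=1)                  # prior variance (method of moments)
    weight = n_batch * tau2 / (n_batch * tau2 + sigma2)   # weight on each gene's own estimate
    return weight * gamma_hat + (1 - weight) * gamma_bar

rng = np.random.default_rng(4)
true_gamma = rng.normal(0.5, 0.2, size=500)       # simulated gene-wise batch shifts
n_batch = 4                                       # small batch: noisy per-gene estimates
noise_sd = 1.0
gamma_hat = true_gamma + rng.normal(0, noise_sd / np.sqrt(n_batch), size=500)
gamma_star = shrink_additive_effects(gamma_hat, noise_sd ** 2, n_batch)

mse_raw = np.mean((gamma_hat - true_gamma) ** 2)
mse_shrunk = np.mean((gamma_star - true_gamma) ** 2)
print(f"MSE raw={mse_raw:.3f}, shrunken={mse_shrunk:.3f}")
```

With only four samples per batch the raw estimates are dominated by noise, so pulling them toward the common mean lowers the error in this simulation. The weight moves toward 1 as the batch grows, and the shrinkage fades.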

Troubleshooting Guides and FAQs

Model Selection and Application

Q: My study has a longitudinal design where the same subjects are profiled over time, and time is completely confounded with batch. Is standard ComBat appropriate? A: No, standard ComBat, which assumes sample independence, is not ideal for dependent longitudinal samples and may overcorrect the data [7]. For such designs, you should consider specialized methods:

- Longitudinal ComBat: This extension incorporates subject-specific random effects into the ComBat model to account for the within-subject correlation introduced by repeated measurements [7].

- BRIDGE (Batch effect Reduction of mIcroarray data with Dependent samples usinG empirical Bayes): This method is specifically designed for confounded longitudinal studies and requires the inclusion of "bridge samples", which are technical replicates from a subset of participants that are profiled across multiple batches. These bridges explicitly inform the batch-effect correction [7].

Q: When should I use a reference batch in ComBat? A: Using a reference batch is highly recommended in biomarker development pipelines [34]. In this scenario:

- The initial training set is designated as the reference batch.

- All future validation or test batches are adjusted to align with this reference.

- This ensures the training data and the derived biomarker signature remain fixed, avoiding the "sample set bias" where adding new batches alters the adjusted values of previously processed samples. This guarantees the biomarker can be consistently applied to new data without retraining [34].
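
The key property of a reference batch, namely that its values are never altered, can be shown with a deliberately simple location/scale alignment. This sketch omits Empirical Bayes shrinkage and covariates and assumes the batches have comparable biological composition, so it is not a substitute for ComBat's reference-batch mode. The name `align_to_reference` is hypothetical.

```python
import numpy as np

def align_to_reference(data, batch, ref_batch):
    """Location/scale alignment of each batch to a reference batch, gene by gene.

    data: genes x samples. The reference batch is returned unchanged.
    Simplified: no Empirical Bayes shrinkage and no covariates.
    """
    data = np.asarray(data, dtype=float)
    batch = np.asarray(batch)
    ref = data[:, batch == ref_batch]
    ref_mean = ref.mean(axis=1, keepdims=True)
    ref_sd = ref.std(axis=1, ddof=1, keepdims=True)
    out = data.copy()
    for b in np.unique(batch):
        if b == ref_batch:
            continue                               # reference values stay fixed
        cols = batch == b
        x = data[:, cols]
        z = (x - x.mean(axis=1, keepdims=True)) / x.std(axis=1, ddof=1, keepdims=True)
        out[:, cols] = z * ref_sd + ref_mean       # map onto the reference location and scale
    return out

rng = np.random.default_rng(5)
batch = np.array(["train"] * 10 + ["test"] * 10)
data = rng.normal(size=(50, 20))
data[:, batch == "test"] = data[:, batch == "test"] * 2.0 + 1.0   # simulated batch distortion
aligned = align_to_reference(data, batch, ref_batch="train")
print(np.array_equal(aligned[:, batch == "train"], data[:, batch == "train"]))
```

Because the training columns pass through untouched, a signature fitted on them remains valid when later test batches are aligned.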

Data Input and Preprocessing

Q: What are the basic data structure requirements for running ComBat? A: Your data should be structured as a features-by-samples matrix (e.g., Genes x Samples). The model requires you to specify a batch covariate (e.g., processing site or date) for each sample. You can also optionally include other biological or technical covariates in the design matrix to preserve their effects during correction [7] [34].

Q: My data is distributed across multiple institutions and cannot be centralized due to privacy regulations. Can I still use ComBat? A: Yes, a Decentralized ComBat (DC-ComBat) algorithm has been developed for this purpose. It uses a federated learning approach where local nodes (institutions) calculate summary statistics from their data. These statistics are then aggregated by a central node to compute the grand mean and variance needed for the Empirical Bayes estimation. The individual patient data never leaves the local institution, preserving privacy while achieving harmonization results nearly identical to the pooled-data approach [36].
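
The aggregation idea can be sketched with per-gene counts, sums, and sums of squares. This shows only how a grand mean and variance can be recovered from site-level summaries without sharing sample-level data. It is not the DC-ComBat algorithm from [36], and the function names `local_summary` and `aggregate` are hypothetical.

```python
import numpy as np

def local_summary(data):
    """Computed at each site: sample count, per-gene sum and sum of squares (genes x samples)."""
    data = np.asarray(data, dtype=float)
    return {"n": data.shape[1], "sum": data.sum(axis=1), "sumsq": (data ** 2).sum(axis=1)}

def aggregate(summaries):
    """Computed centrally: per-gene grand mean and overall variance from site summaries only."""
    n = sum(s["n"] for s in summaries)
    total = sum(s["sum"] for s in summaries)
    total_sq = sum(s["sumsq"] for s in summaries)
    grand_mean = total / n
    grand_var = (total_sq - n * grand_mean ** 2) / (n - 1)
    return grand_mean, grand_var

rng = np.random.default_rng(6)
site_a = rng.normal(0.0, 1.0, size=(5, 12))       # simulated data held at institution A
site_b = rng.normal(0.5, 2.0, size=(5, 8))        # simulated data held at institution B
grand_mean, grand_var = aggregate([local_summary(site_a), local_summary(site_b)])

pooled = np.hstack([site_a, site_b])              # pooled only here, to verify the result
print(np.allclose(grand_mean, pooled.mean(axis=1)),
      np.allclose(grand_var, pooled.var(axis=1, ddof=1)))
```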

Interpretation and Output

Q: After running ComBat, how can I validate the success of the batch correction? A: You should use both visual and quantitative diagnostics:

- Principal Component Analysis (PCA) Plots: Visualize the data before and after correction. Samples should no longer cluster strongly by batch in the corrected PCA plot.

- Distributional Metrics: Examine the moments of the data distribution (mean, variance, skewness, kurtosis) across batches before and after correction. Effective harmonization should align these distributions [34].
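
The distributional check can be run with a few lines of NumPy. The helper below is a hypothetical sketch that pools all values within each batch. Per-feature moments may be more informative in practice.

```python
import numpy as np

def batch_moments(values, batch):
    """Mean, variance, skewness and excess kurtosis of all values in each batch."""
    values = np.asarray(values, dtype=float)      # genes x samples
    batch = np.asarray(batch)
    out = {}
    for b in np.unique(batch).tolist():
        x = values[:, batch == b].ravel()
        mu, sd = x.mean(), x.std()
        z = (x - mu) / sd
        out[b] = {"mean": mu, "variance": sd ** 2,
                  "skewness": (z ** 3).mean(), "kurtosis": (z ** 4).mean() - 3.0}
    return out

rng = np.random.default_rng(7)
batch = np.array(["A"] * 10 + ["B"] * 10)
values = rng.normal(size=(100, 20))
values[:, batch == "B"] = values[:, batch == "B"] * 2.0 + 1.0   # simulated shift and scale change

moments = batch_moments(values, batch)
for name, stats in moments.items():
    print(name, {k: round(float(v), 2) for k, v in stats.items()})
```

Calling the same function on the corrected matrix and comparing the two printouts shows whether the batches' distributions have been brought into line.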

ComBat Workflow

The following diagram illustrates the logical workflow and data flow of the Empirical Bayes estimation process in ComBat.

Key Parameter Estimates in the ComBat Model

ComBat corrects for two types of batch effects by estimating the following parameters for each gene in each batch. These are adjusted using the Empirical Bayes shrinkage method [7] [34] [36].

Table 1: Core Batch Effect Parameters in the ComBat Model

| Parameter | Symbol | Type of Batch Effect | Interpretation |

|---|---|---|---|

| Additive Batch Effect | \(\gamma_{i,v}\) | Location / Mean | A gene- and batch-specific term that systematically shifts the mean expression level. |

| Multiplicative Batch Effect | \(\delta_{i,v}\) | Scale / Variance | A gene- and batch-specific term that scales the variance (spread) of the expression values. |
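
For orientation, the two parameters in Table 1 enter the standard ComBat location/scale model as written below. The table does not define the indices, so this assumes \(i\) indexes the batch, \(v\) the gene (feature), and \(j\) the sample within a batch. \(\alpha_v\) is the overall mean for the gene, \(X_{ij}\beta_v\) the covariate effects to be preserved, and starred quantities are the Empirical Bayes (shrunken) estimates.

```latex
% Model: additive (gamma) and multiplicative (delta) batch terms
y_{ijv} = \alpha_v + X_{ij}\beta_v + \gamma_{i,v} + \delta_{i,v}\,\varepsilon_{ijv},
\qquad \varepsilon_{ijv} \sim N(0, \sigma_v^2)

% Adjustment: remove the shrunken batch terms, keep mean and covariate effects
y_{ijv}^{\mathrm{ComBat}} =
  \frac{y_{ijv} - \hat{\alpha}_v - X_{ij}\hat{\beta}_v - \gamma_{i,v}^{*}}{\delta_{i,v}^{*}}
  + \hat{\alpha}_v + X_{ij}\hat{\beta}_v
```

The second line makes the role of the covariate matrix explicit: whatever is modeled in \(X_{ij}\beta_v\) is added back after the batch terms are removed.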

The Scientist's Toolkit: Essential Research Reagents and Solutions

For researchers conducting microarray experiments and subsequent batch effect correction, the following tools and conceptual "reagents" are essential.

Table 2: Key Research Reagents and Solutions for Batch Effect Correction

| Item | Function / Interpretation | Considerations for Use |

|---|---|---|

| Bridge Samples | Technical replicate samples from a subset of participants profiled in multiple batches. They serve as a direct link to inform batch-effect correction in longitudinal studies [7]. | Logistically challenging and costly to obtain, but are crucial for confounded longitudinal designs. |

| Reference Batch | A single, high-quality batch designated as the standard to which all other batches are aligned. Preserves data integrity in biomarker studies [34]. | Prevents "sample set bias" and ensures a fixed training set for biomarker development. |

| Sensitive Attribute (Z) | A protected variable (e.g., race, age) the model is explicitly prevented from using, often enforced via adversarial training in fairness-focused applications [37]. | Requires careful specification and is part of advanced de-biasing techniques beyond standard batch correction. |

| Covariate Matrix (X) | A design matrix specifying known biological or treatment conditions of interest. ComBat uses this to model and preserve these effects during batch removal [34] [36]. | Critical for preventing the removal of true biological signal along with batch noise. |

| Shrinkage Estimators | The mathematical mechanism that stabilizes batch effect estimates by borrowing information across all genes, reducing the influence of high-variance genes [7] [34]. | The core of the Empirical Bayes approach, providing more robust corrections, especially with small batch sizes. |

Frequently Asked Questions (FAQs) on Ratio-Based Batch Effect Correction

FAQ 1: What is the core principle behind ratio-based batch effect correction? The ratio-based method, sometimes referred to as Ratio-G, works by scaling the absolute feature values (e.g., gene expression, protein intensity) of study samples relative to the values of one or more concurrently profiled reference materials analyzed in the same batch [4]. This transforms the raw measurements into a ratio scale, effectively canceling out batch-specific technical variations. The underlying assumption is that any technical variation affecting the study samples will also affect the reference material, allowing the ratio to isolate the biological signal [4] [38].

FAQ 2: When is a ratio-based approach particularly advantageous over other methods? Ratio-based correction is especially powerful in confounded scenarios, where batch effects are completely confounded with the biological factors of interest [4]. For instance, if all samples from biological Group A are processed in Batch 1 and all samples from Group B in Batch 2, it becomes impossible for many algorithms to distinguish technical from biological variation. In such cases, the ratio-based method, which uses an internal anchor (the reference material), performs significantly better at preserving true biological differences while removing batch effects [4].

FAQ 3: What are the critical considerations when selecting a reference material? An ideal reference material should be both stable and representative.

- Stability: The material must be homogenous and available in sufficient quantity to be profiled alongside every batch in a long-term study [4].

- Representativeness: Its composition should broadly reflect the study samples. For example, the Quartet Project's matched DNA, RNA, protein, and metabolite reference materials derived from B-lymphoblastoid cell lines are designed for this purpose in multi-omics studies [4]. In large-scale plasma proteomics studies, using pooled plasma from multiple healthy donors as a quality control (QC) sample has been shown to be effective [38].

FAQ 4: My data is on a different scale after ratio transformation. Does this impact downstream analysis? Yes, applying a ratio-based transformation will change the scale of your data. This is a fundamental characteristic of the method. While this scaling is precisely what corrects the batch effects, it is crucial to ensure that the statistical models and algorithms used in downstream analyses (e.g., differential expression, clustering) are compatible with ratio-scaled data. Always verify that your downstream tools can handle this data type appropriately.

FAQ 5: Can the ratio method be combined with other normalization techniques? Yes, ratio-based correction is often part of a larger data preprocessing workflow. It is common to perform initial normalization (e.g., for library size in RNA-seq) on the raw data before calculating the ratios relative to the reference material. The ratio step itself is the primary batch-effect correction, and its output can then be used directly for downstream statistical modeling.

Troubleshooting Common Experimental Issues

Problem: Inconsistent Correction Across Features

- Symptoms: After correction, some genes or proteins still show strong batch-associated variance, while others are over-corrected.

- Possible Causes: The chosen reference material might not be optimal for all feature types. For example, a reference material with a narrow dynamic range may not effectively correct features with very high or low expression.

- Solutions:

- Validate the dynamic range of your reference material against your study samples prior to large-scale deployment.

- Consider using a pooled reference comprising multiple samples to better capture the diversity of your study's features [38].

Problem: Introduction of Noise by Low-Abundance Features

- Symptoms: Increased variability in measurements for low-intensity genes/proteins after ratio application.

- Possible Causes: When the reference material's value for a specific feature is very low or near the detection limit, the ratio calculation can become unstable and amplify noise.

- Solutions:

- Implement a filtering step to remove features where the reference material's signal is consistently low or undetectable across batches.

- As a quality control flag, consider excluding proteins where more than half of the bridging control measurements fall below the limit of detection, as this indicates unreliable data [39].
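
The quality control flag in the last bullet is straightforward to compute. The sketch below assumes a features x bridging-controls matrix and a per-feature limit of detection. The function name `flag_unreliable_features` and the toy values are hypothetical.

```python
import numpy as np

def flag_unreliable_features(bridge_values, lod):
    """Flag features where more than half of the bridging-control values fall below the LOD.

    bridge_values: features x bridging-control measurements; lod: per-feature limit of detection.
    """
    bridge_values = np.asarray(bridge_values, dtype=float)
    lod = np.asarray(lod, dtype=float).reshape(-1, 1)
    frac_below = (bridge_values < lod).mean(axis=1)
    return frac_below > 0.5

bridge = np.array([
    [2.0, 3.0, 2.0, 4.0],   # none below LOD
    [0.5, 0.4, 2.0, 0.3],   # three of four below LOD
    [0.5, 0.5, 2.0, 2.0],   # exactly half below LOD: not flagged
])
lod = [1.0, 1.0, 1.0]
flags = flag_unreliable_features(bridge, lod)
print(flags)
```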

Problem: Poor Batch Effect Removal in PCA Plots

- Symptoms: Samples still cluster by batch in a Principal Component Analysis (PCA) plot after ratio correction.

- Possible Causes:

- The batch effect may be non-additive or non-linear, which a simple scaling factor cannot fully address.

- Strong sample-specific batch effects might be present, which require a method capable of modeling these complex variations [39].

- Solutions:

- Visually inspect the data to diagnose the type of batch effect. Plotting measurements from two batches against each other can reveal if effects are protein-specific, sample-specific, or plate-wide [39].

- For complex, multi-type batch effects, consider more robust regression-based methods like BAMBOO that are specifically designed to handle them using bridging controls [39].

Performance Comparison of Batch Effect Correction Algorithms

The table below summarizes the performance of various batch effect correction algorithms (BECAs) across different data types and experimental scenarios, as evidenced by benchmarking studies.

Table 1: Performance Comparison of Batch-Effect Correction Algorithms

| Algorithm | Underlying Principle | Recommended Data Type(s) | Strengths | Key Limitations |

|---|---|---|---|---|

| Ratio-Based | Scaling to reference material(s) | Multi-omics (Transcriptomics, Proteomics, Metabolomics) [4] | Superior in confounded batch-group scenarios; broadly applicable [4]. | Requires carefully characterized reference materials. |

| ComBat | Empirical Bayes framework | Microarray, RNA-seq (ComBat-seq) [32] [40] | Widely adopted; effective for mean shifts in balanced designs [38]. | Assumes normal distribution; can be impacted by outliers in bridging controls [39]. |

| Harmony | PCA-based iterative clustering | Single-cell RNA-seq, Multi-omics [4] | Performs well in balanced and some confounded scenarios [4]. | Performance may vary across omics types. |

| BAMBOO | Robust regression on bridging controls | Proximity Extension Assay (PEA) Proteomics [39] | Robust to outliers; corrects protein-, sample-, and plate-wide effects [39]. | Requires multiple (e.g., 10-12) bridging controls. |

| ComBat-met | Beta regression | DNA Methylation (β-values) [32] | Tailored for proportional data (0-1); controls false positives [32]. | Specifically designed for methylation data. |

| Median Centering | Mean/median scaling per batch | Proteomics [38] | Simple and fast. | Lower accuracy; significantly impacted by outliers [39]. |

Standard Experimental Protocol for Ratio-Based Correction

This protocol provides a step-by-step guide for implementing a ratio-based batch effect correction in a multi-batch study, using the Quartet Project as a model [4].

Step 1: Experimental Design and Reference Material Selection

- Identify a stable and representative reference material. For multi-omics studies, consider using matched reference materials like the Quartet suites [4].

- Design your experiment so that the same reference material is profiled in every batch. The number of technical replicates for the reference material should be determined based on desired precision.

Step 2: Data Generation and Preprocessing

- Generate your multi-batch data (e.g., transcriptomics, proteomics).

- Perform initial, basic normalization on the raw data as required by your platform (e.g., library size normalization for RNA-seq, log transformation for microarray data).

Step 3: Ratio Calculation

- For each feature (gene, protein) \( i \) in a study sample from batch \( b \), calculate the ratio value as follows: \( \text{Ratio}_{i,b} = \frac{\text{Normalized value of study sample}_{i,b}}{\text{Normalized value of reference material}_{i,b}} \)

- Here, the "Normalized value of reference material" is typically the mean or median of the technical replicates of the reference material profiled in the same batch \( b \).
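The ratio calculation in Step 3 can be sketched in a few lines of plain Python. This is a minimal illustration, not the Quartet Project's implementation: the function name `ratio_correct`, the dictionary layout, and the toy values are assumptions made for the example. It uses the median of the reference replicates and assumes values on a linear scale (for log-transformed data the ratio becomes a difference).

```python
from statistics import median


def ratio_correct(values, batch_of, reference_samples):
    """Divide each study sample by the reference profile of its own batch.

    values: dict sample -> dict feature -> normalized value (linear scale)
    batch_of: dict sample -> batch label
    reference_samples: set of sample names that are reference replicates
    """
    # Group the reference replicates by the batch they were profiled in.
    refs_by_batch = {}
    for sample in reference_samples:
        refs_by_batch.setdefault(batch_of[sample], []).append(sample)

    corrected = {}
    for sample, profile in values.items():
        if sample in reference_samples:
            continue  # only study samples are returned
        batch = batch_of[sample]
        if batch not in refs_by_batch:
            raise ValueError(f"batch {batch!r} has no reference replicates")
        corrected[sample] = {}
        for feature, value in profile.items():
            # Median of the reference replicates for this feature and batch.
            ref = median(values[r][feature] for r in refs_by_batch[batch])
            corrected[sample][feature] = value / ref
    return corrected


# Toy data (hypothetical): batch B2 measures everything twice as high as B1.
values = {
    "ref_B1_a": {"geneA": 100.0, "geneB": 50.0},
    "ref_B1_b": {"geneA": 110.0, "geneB": 50.0},
    "s1": {"geneA": 210.0, "geneB": 25.0},
    "ref_B2_a": {"geneA": 200.0, "geneB": 100.0},
    "ref_B2_b": {"geneA": 220.0, "geneB": 100.0},
    "s2": {"geneA": 420.0, "geneB": 50.0},
}
batch_of = {"ref_B1_a": "B1", "ref_B1_b": "B1", "s1": "B1",
            "ref_B2_a": "B2", "ref_B2_b": "B2", "s2": "B2"}
references = {"ref_B1_a", "ref_B1_b", "ref_B2_a", "ref_B2_b"}

corrected = ratio_correct(values, batch_of, references)
print(corrected)
```

In this toy case the two study samples differ only by the batch-wide scaling, so their ratio profiles coincide after correction. A feature whose reference value is zero would need separate handling, which the sketch omits.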

Step 4: Data Integration and Downstream Analysis

- The resulting ratio-scaled matrix is your batch-corrected dataset.

- Proceed with downstream analyses such as differential expression, clustering, or predictive modeling. Ensure that the methods used are compatible with ratio-scaled data.

The workflow below summarizes this process.

The Scientist's Toolkit: Essential Research Reagents & Materials

The successful implementation of a ratio-based correction strategy relies on key reagents and resources. The table below lists essential items for setting up such an approach.

Table 2: Key Research Reagent Solutions for Ratio-Based Methods

| Item | Function & Role in Batch Correction | Example from Literature |

|---|---|---|

| Cell Line-Derived Reference Materials | Provides a stable, renewable source of DNA, RNA, protein, and metabolites for system-wide batch correction. | Quartet Project's matched multiomics reference materials from four family members' B-lymphoblastoid cell lines [4]. |

| Pooled Plasma/Serum QC Samples | Serves as a reference material for clinical proteomics and metabolomics studies, mimicking the sample matrix. | Pooled plasma from 16 healthy males used as a QC sample in a large-scale T2D patient proteomics study [38]. |

| Bridging Controls (BCs) | Identical samples included on every processing plate (e.g., in PEA protocols) to directly measure and model plate-to-plate variation. | At least 8-12 bridging controls per plate are recommended for robust correction using methods like BAMBOO [39]. |

| Commercial Reference Standards | Well-characterized, commercially available standards (e.g., Universal Human Reference RNA) that can be used as a common denominator across labs. | Various sources; often used in method development and cross-platform comparisons to anchor measurements. |

Frequently Asked Questions

Q1: My batch-corrected data shows unexpected clustering. What could be wrong?

In a fully confounded study design, where your biological groups of interest perfectly separate by batch, it may be impossible to disentangle biological signals from technical batch effects [15]. If a batch correction method is applied in this scenario, it might remove biological signal along with the batch effect, leading to misleading clustering. Always check your experimental design for balance before proceeding.
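A quick way to check a design before correction is to tabulate which biological groups appear in which batch. The sketch below is illustrative only; the function name `design_status` and the three labels it returns are assumptions for this example, not terms from any package.

```python
from collections import defaultdict


def design_status(batches, groups):
    """Classify how biological groups are distributed across batches."""
    groups_in_batch = defaultdict(set)
    for batch, group in zip(batches, groups):
        groups_in_batch[batch].add(group)

    all_groups = set(groups)
    if len(all_groups) < 2 or len(groups_in_batch) < 2:
        raise ValueError("need at least two groups and two batches")

    # Every batch holds a single group: batch and group cannot be separated.
    if all(len(gs) == 1 for gs in groups_in_batch.values()):
        return "fully confounded"
    # Every batch holds every group: batch and group effects are separable.
    if all(gs == all_groups for gs in groups_in_batch.values()):
        return "crossed"
    return "partially confounded"


print(design_status(["b1", "b1", "b2", "b2"], ["ctl", "ctl", "trt", "trt"]))
print(design_status(["b1", "b1", "b2", "b2"], ["ctl", "trt", "ctl", "trt"]))
```

A "crossed" result only says that every group is represented in every batch; it does not guarantee equal group sizes, so the per-batch counts are still worth inspecting.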

Q2: What should I do if my ComBat model fails to converge?

Try increasing the number of genes used in the empirical Bayes estimation by adjusting the gene_subset_n parameter [41]. Using a larger subset of genes can stabilize the model fitting process. Additionally, ensure that your model matrix for covariates (covar_mod) is correctly specified and contains only categorical variables.

Q3: How do I handle missing values in my batch or covariate data?

The pycombat_seq function offers the na_cov_action parameter to control this. You can choose to:

- "raise" an error and stop execution.

- "remove" samples with missing covariates and issue a warning.

- "fill" by creating a distinct covariate category per batch for the missing values [41].

Your choice should be guided by the extent and nature of the missing data.

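To make the three options concrete, the sketch below mimics their described behavior on a single categorical covariate. It is an illustration written for this guide, not code from inmoose: the function `apply_na_cov_action`, the use of `None` for missing values, and the `missing_<batch>` labels are all assumptions.

```python
import warnings


def apply_na_cov_action(batch, covariate, action="raise"):
    """Return (kept sample indices, covariate values) under one policy."""
    missing = [i for i, v in enumerate(covariate) if v is None]
    if not missing:
        return list(range(len(covariate))), list(covariate)

    if action == "raise":
        # Stop: the caller must fix the metadata first.
        raise ValueError(f"{len(missing)} sample(s) have a missing covariate")
    if action == "remove":
        # Drop the affected samples and tell the user.
        warnings.warn(f"dropping {len(missing)} sample(s) with a missing covariate")
        kept = [i for i, v in enumerate(covariate) if v is not None]
        return kept, [covariate[i] for i in kept]
    if action == "fill":
        # Give missing values their own category, one per batch.
        filled = [v if v is not None else f"missing_{batch[i]}"
                  for i, v in enumerate(covariate)]
        return list(range(len(covariate))), filled
    raise ValueError(f"unknown action: {action!r}")


batch = ["b1", "b1", "b2", "b2"]
sex = ["F", None, "M", None]  # hypothetical covariate with two gaps
kept, values = apply_na_cov_action(batch, sex, action="fill")
print(kept, values)
```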
Q4: Should I correct for batch effects before or after normalization?

Batch effect correction is typically performed after data normalization. In RNA-Seq analyses, upstream processing steps like quality control and normalization should be performed within each batch before batch effect correction [42]. Count-based methods such as ComBat-Seq are the exception: they take raw counts as input, so normalization follows correction in that case.

Q5: After correction, a known biological signal seems weakened. Is this normal?

Overly aggressive correction is a known risk. Some methods, especially those that do not retain "true" between-batch differences, can inadvertently remove or weaken strong biological signals if they are correlated with a batch [8] [43]. It is crucial to use downstream sensitivity analyses to verify that key biological findings are preserved after correction.

Troubleshooting Common Scenarios

Scenario 1: Correcting RNA-Seq Count Data in Python

Problem: You have a raw count matrix from an RNA-Seq experiment conducted over several batches and need to correct for batch effects using a method designed for count data.

Solution: Use the pycombat_seq function, which is a Python port of the ComBat-Seq method.

Key Parameters:

- covar_mod: A model matrix if you need to preserve signals from specific covariates.

- ref_batch: Specify a batch id to use as a reference, against which all other batches will be adjusted [41].
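A call might look like the sketch below. The import path `inmoose.pycombat` and the keyword names are taken from the parameters described above and should be checked against the installed inmoose version; the two wrapper functions and the genes-by-samples layout are assumptions made for illustration. The import is done lazily so that the input checks can run even where inmoose is not installed.

```python
def check_combat_seq_inputs(counts, batch):
    """Sanity-check a genes x samples count table before correction.

    counts: list of gene rows, each a list with one raw count per sample
    batch: list with one batch label per sample (column)
    Returns the number of samples in each batch.
    """
    n_samples = len(batch)
    if any(len(row) != n_samples for row in counts):
        raise ValueError("every gene row needs one count per sample")
    if any(c < 0 or c != int(c) for row in counts for c in row):
        raise ValueError("expected raw, non-negative integer counts")
    sizes = {b: batch.count(b) for b in set(batch)}
    if len(sizes) < 2:
        raise ValueError("need at least two batches")
    return sizes


def run_combat_seq(counts, batch, covar_mod=None, ref_batch=None):
    """Hypothetical wrapper: validate inputs, then call pycombat_seq."""
    check_combat_seq_inputs(counts, batch)
    # Assumed import path; requires numpy and inmoose to be installed.
    import numpy as np
    from inmoose.pycombat import pycombat_seq

    return pycombat_seq(np.asarray(counts), batch,
                        covar_mod=covar_mod, ref_batch=ref_batch)


# Toy table (hypothetical): 2 genes, 4 samples, 2 batches.
sizes = check_combat_seq_inputs([[1, 2, 3, 4], [0, 5, 6, 7]],
                                ["a", "a", "b", "b"])
print(sizes)
```

With inmoose available, `run_combat_seq(counts, batch, ref_batch="a")` would adjust all other batches toward batch "a".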

Scenario 2: Comparing Multiple Batch Correction Methods in R

Problem: You are unsure which batch correction method is most appropriate for your biomarker data and want to compare several approaches.

Solution: Use the batchtma R package, which provides a unified interface for multiple methods.

Method Selection Guide from batchtma: [43]

| Method | Approach | Retains "True" Between-Batch Differences? |

|---|---|---|

| simple | Simple means | No |

| standardize | Standardized batch means | Yes |

| ipw | Inverse-probability weighting | Yes |

| quantreg | Quantile regression | Yes |

| quantnorm | Quantile normalization | No |

Scenario 3: Integrating Single-Cell RNA-Seq Data in R

Problem: You have multiple batches of single-cell RNA-seq data where the cell population composition is unknown or not identical across batches.

Solution: Use the batchelor package and its quickCorrect() function, which is designed for this context.

Critical Pre-Correction Steps: [42]

- Subset to Common Features: Ensure both datasets use the same set of genes.

- Rescale Batches: Use multiBatchNorm() to adjust for differences in sequencing depth between batches.

- Select Highly Variable Genes (HVGs): Use combineVar() and getTopHVGs() to select genes that drive population structure.

Experimental Protocols & Evaluation

Protocol: Evaluating Correction Performance with Downstream Sensitivity Analysis

This protocol helps you assess how different BECAs affect your biological conclusions, a recommended best practice [8].

- Split Data by Batch: Treat each of your batches as an individual dataset.

- Perform DEA Per Batch: Conduct a differential expression analysis (DEA) on each batch separately to obtain lists of differentially expressed (DE) features for each.

- Create Reference Sets:

  - Union Reference: Combine all unique DE features from all individual batches.

  - Intersect Reference: Identify the DE features that are common to all batches.

- Apply Multiple BECAs: Correct your full dataset using several batch correction methods.

- Perform DEA on Corrected Data: Run DEA on each batch-corrected dataset.

- Calculate Performance Metrics:

  - Recall: The proportion of the Union Reference found by the DEA on the corrected data.

  - Check Intersect: Ensure that features in the Intersect Reference are still present after correction; their absence may indicate over-correction.

The method that yields the highest recall while preserving the intersect features can be considered the most reliable for your data.
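The bookkeeping in this protocol reduces to a few set operations. The sketch below assumes the per-batch and post-correction DE lists have already been produced by whatever DEA tool you use; the function names, feature IDs, and method labels are hypothetical.

```python
def reference_sets(de_per_batch):
    """Build the union and intersect references from per-batch DE lists."""
    sets = [set(features) for features in de_per_batch.values()]
    union_ref = set().union(*sets)
    intersect_ref = set.intersection(*sets)
    return union_ref, intersect_ref


def evaluate(de_corrected, union_ref, intersect_ref):
    """Return recall against the union and the intersect features lost."""
    found = set(de_corrected)
    recall = len(found & union_ref) / len(union_ref) if union_ref else 0.0
    lost = intersect_ref - found  # non-empty may indicate over-correction
    return recall, lost


# Hypothetical DE results from running DEA on each batch separately.
de_per_batch = {"batch1": {"g1", "g2", "g3"}, "batch2": {"g2", "g3", "g4"}}
union_ref, intersect_ref = reference_sets(de_per_batch)

# Hypothetical DE results after two different correction methods.
de_by_method = {"methodA": {"g1", "g2", "g3", "g9"}, "methodB": {"g2", "g4"}}
results = {name: evaluate(de, union_ref, intersect_ref)
           for name, de in de_by_method.items()}

for name, (recall, lost) in results.items():
    print(name, recall, sorted(lost))
```

In this toy case the first method recovers three of the four union features and keeps both intersect features, while the second recovers two and loses one intersect feature, so the first would be preferred under the criterion above.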

The Scientist's Toolkit

| Essential Material / Software | Function |

|---|---|

| sva (R) / inmoose (Python) Package | Provides the standard ComBat (for normalized data) and ComBat-Seq (for count data) algorithms for batch effect adjustment using empirical Bayes frameworks [41] [40]. |

| limma R Package | Contains the removeBatchEffect() function, a linear-model-based method for removing batch effects, commonly used for microarray and RNA-Seq data [8] [42]. |

| batchelor R Package (Bioconductor) | A specialized package for single-cell data, offering multiple correction algorithms (e.g., MNN, rescaleBatches) that do not assume identical cell population composition across batches [42]. |

| batchtma R Package | Provides a suite of methods for adjusting batch effects in biomarker data, with a focus on retaining true between-batch differences caused by confounding sample characteristics [43]. |

| Principal Component Analysis (PCA) | A dimensionality reduction technique used to visualize batch effects before and after correction. Persistent batch clustering in PCA plots after correction suggests residual batch effects [8] [42]. |

Batch Effect Correction Workflow

The following diagram outlines the logical workflow for a standard batch effect correction process, from data preparation to evaluation.

Figure: Batch Effect Correction Workflow

Method Selection Logic

Choosing the right batch correction method is critical. The following diagram provides a logical pathway for selecting an appropriate algorithm based on your data type and experimental design.

Figure: Algorithm Selection Guide

ComBat-met FAQs: Core Methodology and Application

Q1: What is ComBat-met and how does it fundamentally differ from standard ComBat?

ComBat-met is a specialized batch effect correction method designed specifically for DNA methylation data. Unlike standard ComBat, which assumes normally distributed data, ComBat-met employs a beta regression framework that accounts for the unique characteristics of DNA methylation β-values, which are constrained between 0 and 1 and often exhibit skewness and over-dispersion. The method fits beta regression models to the data, calculates batch-free distributions, and maps the quantiles of the estimated distributions to their batch-free counterparts [32].
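The quantile-mapping step described above can be written compactly. The notation here is an assumption introduced for illustration and is not taken from [32]: \( F_{ij} \) denotes the cumulative distribution function of the beta distribution fitted to feature \( i \) in sample \( j \) (including its batch terms), and \( F^{*}_{ij} \) denotes the corresponding batch-free distribution.

```latex
\tilde{\beta}_{ij} \;=\; \left(F^{*}_{ij}\right)^{-1}\!\bigl( F_{ij}(\beta_{ij}) \bigr)
```

Because the adjusted value is a quantile of a beta distribution, it stays within the interval from 0 to 1, which is the property that distinguishes this approach from corrections that assume normality.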

Q2: When should I choose ComBat-met over other batch correction methods?

ComBat-met is particularly advantageous when:

- Your data consists of β-values from DNA methylation studies

- You require high statistical power for differential methylation analysis

- Controlling false positive rates is a critical concern

- You need to handle data with both additive and multiplicative batch effects

Simulation studies demonstrate that ComBat-met followed by differential methylation analysis achieves superior statistical power compared to traditional approaches while correctly controlling Type I error rates in nearly all cases [32].

Q3: What are the key preprocessing steps before applying ComBat-met?

Proper preprocessing is essential for effective batch correction:

- Quality Control: Remove poor-quality samples and probes using standard methylation QC pipelines

- Normalization: Apply appropriate normalization for your platform (450K/EPIC)

- M-Value Conversion: While ComBat-met works with β-values, the underlying model uses M-values (logit-transformed β-values) for statistical modeling [32] [44]
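For reference, the M-value mentioned in the last step is the base-2 logit of the β-value. The sketch below shows the conversion in both directions; the clipping constant `eps` is an assumption added to keep β-values of exactly 0 or 1 finite, and is not a value prescribed by ComBat-met.

```python
import math


def beta_to_m(beta, eps=1e-6):
    """Convert a methylation beta-value (0..1) to an M-value."""
    b = min(max(beta, eps), 1 - eps)  # clip away from exactly 0 and 1
    return math.log2(b / (1 - b))


def m_to_beta(m):
    """Convert an M-value back to a beta-value."""
    return 2 ** m / (2 ** m + 1)


print(beta_to_m(0.5))   # an unmethylated/methylated ratio of 1 gives M = 0
print(m_to_beta(2.0))
```

The round trip `m_to_beta(beta_to_m(b))` returns `b` up to floating-point error for any β-value inside the clipped range.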

Q4: Can ComBat-met handle reference-based adjustments?

Yes, ComBat-met supports both common batch effect adjustment (adjusting all batches to a common mean) and reference-based adjustment, where all batches are adjusted to the mean and precision of a specific reference batch. This is particularly useful when you have a gold-standard batch or when integrating new data with previously established datasets [32].

Performance Comparison of Batch Effect Correction Methods

Table 1: Comparative performance of DNA methylation batch effect correction methods based on simulation studies

| Method | Underlying Model | Data Type | Key Advantages | Limitations/Considerations |

|---|---|---|---|---|

| ComBat-met | Beta regression | β-values | Specifically designed for methylation data; maintains β-value constraints; improved power in simulations | Newer method with less established track record |